As an example, we scrape the fashion section of Sina.

Preparation

Before scraping, we need the required libraries and an understanding of how the web pages are laid out.

- Required libraries

```python
import os
import re
import json
import urllib.request  # urllib.request has to be imported explicitly

import requests
from bs4 import BeautifulSoup
from lxml import etree
```

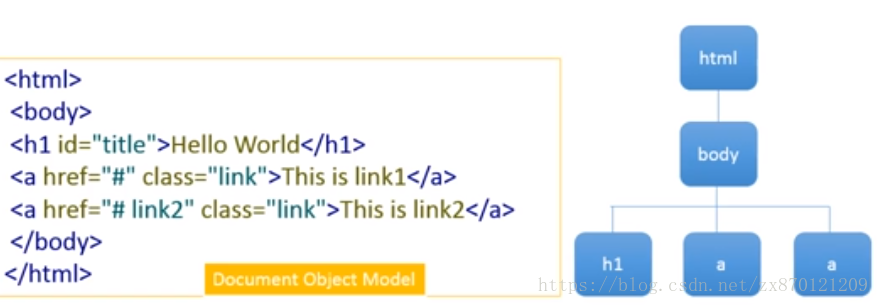

- Understanding the page layout

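We open Sina's fashion section, pick a fashion news article, and open its page. In Chrome we right-click and choose Inspect to see where the article text and images are located in the page.

With the help of a Chrome extension, we found the locations shown above.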
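To make those locations concrete, here is a minimal sketch that runs the same XPath expressions the scraper uses later (`//div[@id='artibody']/p//text()` for the body text, `//div[@class="img_wrapper"]/img/@src` for the images, and the `date-source` span for the date) against a small hand-written HTML snippet. The snippet is an assumption modeled on those expressions, not a copy of a real Sina page.

```python
from lxml import etree

# Hand-written stand-in for an article page (assumed structure, not real Sina HTML)
SAMPLE_HTML = """
<html><body>
  <div class="date-source"><span>2020年11月10日 19:39</span></div>
  <div id="artibody">
    <p>First paragraph.</p>
    <div class="img_wrapper"><img src="//n.sinaimg.cn/example/photo1.jpg"></div>
    <p>Second <b>bold</b> paragraph.</p>
  </div>
</body></html>
"""

tree = etree.HTML(SAMPLE_HTML)
# Body text: every text node below the <p> children of div#artibody
body = tree.xpath("//div[@id='artibody']/p//text()")
# Image sources: the src attribute of each <img> inside div.img_wrapper
images = tree.xpath('//div[@class="img_wrapper"]/img/@src')
# Publication date: the first 11 characters of the date-source text
date = tree.xpath("//div[@class='date-source']/span//text()")[0][:11]

print(body)
print(images)
print(date)
```

Note that `//text()` returns each text node separately, which is why the scraper later writes the pieces one after another.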

That is enough to fetch a single article, but we want to collect fashion news in bulk, so we need to study the site more closely.

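Back on the fashion homepage, we right-click, choose Inspect, and open the Network tab. After refreshing the page, the file shown in the figure appears.

Copying its URL and opening it shows that this is the JSON file that holds one page of the news feed.

Testing shows that the parameter in this JSON URL that actually selects the page is `up=%d`. So by changing the value of `up` we can retrieve a large number of news items, and then fetch each item's text and images with the steps above.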
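Because only `up` changes from page to page, the feed URLs can be generated rather than copied by hand. The sketch below rewrites just that one query parameter with `urllib.parse`; the shortened base URL keeps only a few of the original parameters for readability, and `page_url` is a hypothetical helper name.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Shortened version of the feed URL; the real one carries many more parameters
BASE = ("https://interface.sina.cn/pc_api/public_news_data.d.json"
        "?cids=260&pageSize=20&up=0&down=0&mod=nt_home_fashion_latest")


def page_url(base, up):
    """Return `base` with its `up` query parameter replaced (hypothetical helper)."""
    parts = urlsplit(base)
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    # Keep every parameter as-is except `up`
    pairs = [(k, str(up) if k == "up" else v) for k, v in pairs]
    return urlunsplit(parts._replace(query=urlencode(pairs)))


for up in range(3):
    print(page_url(BASE, up))
```

Parsing and re-encoding the query (instead of string formatting) keeps the other parameters intact even when they contain escaped characters such as `%2C`.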

Implementation

We use regular expressions to extract each item's title and URL, but the printed results show that they cannot be used directly:

```python
pat1 = r'"title":"(.*?)",'
titles = re.findall(pat1, res)  # res: text of the JSON feed response
print(titles)
# '\\u674e\\u5b87\\u6625\\u767b\\u300a\\u65f6\\u5c1aCOSMO\\u300b\\u5341\\u4e8c\\u6708\\u520a\\u5c01\\u9762 \\u6f14\\u7ece\\u51ac\\u65e5\\u590d\\u53e4\\u98ce'

pat2 = r'"url":"(.*?)",'
links = re.findall(pat2, res)
print(links)
# 'http:\\/\\/slide.fashion.sina.com.cn\\/s\\/slide_24_84625_138650.html', 'https:\\/\\/fashion.sina.com.cn\\/s\\/fo\\/2020-11-13\\/1051\\/doc-iiznctke1091604.shtml'
```

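Both contain extra backslashes: the links have escaped slashes (`\/`) and the titles are `\uXXXX` Unicode escape sequences. We therefore have to process the links and titles before we get correct results.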
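These backslashes are JSON string escapes. Since the feed is JSONP (its URL carries a `callback=jQuery...` parameter), an alternative to the manual cleanup below is to strip the callback wrapper and let `json.loads` undo all escapes at once. This is a sketch under assumptions: the wrapper shape `callback({...});` and the sample payload are simplified stand-ins, and the real feed's field layout has not been checked here.

```python
import json
import re

# Simplified stand-in for the JSONP response (assumed shape, not the real payload)
SAMPLE = ('jQuery111_160({"data":[{"title":"\\u65f6\\u5c1a",'
          '"url":"https:\\/\\/fashion.sina.com.cn\\/s\\/fo\\/doc-1.shtml"}]});')


def parse_jsonp(text):
    """Strip a `callback(...)` wrapper and parse the JSON inside it."""
    match = re.search(r'^[^(]*\((.*)\)\s*;?\s*$', text, re.S)
    if match is None:
        raise ValueError("not a JSONP response")
    return json.loads(match.group(1))


data = parse_jsonp(SAMPLE)
item = data["data"][0]
print(item["title"], item["url"])
```

Parsing the JSON also pairs each title with its own URL, whereas two independent regular expressions can drift out of step if one field is missing from an item.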

How the processing works

Processing the URLs

```python
pat2 = r'"url":"(.*?)",'
links = re.findall(pat2, res)
for i in range(len(links)):
    links[i] = links[i].replace('\\', '')  # drop the escape backslashes
    print(links[i])
```
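Applied to the two sample links printed earlier, removing every backslash turns each escaped `\/` back into `/`. This is safe because a URL never legitimately contains a backslash. A small wrapper (the name `clean_link` is hypothetical) makes the step easy to check:

```python
def clean_link(raw):
    """Remove the JSON escape backslashes from a link taken from the raw response."""
    return raw.replace('\\', '')


# The two raw links as they appear in the response text (each "\/" is an escaped "/")
raw_links = [
    r'http:\/\/slide.fashion.sina.com.cn\/s\/slide_24_84625_138650.html',
    r'https:\/\/fashion.sina.com.cn\/s\/fo\/2020-11-13\/1051\/doc-iiznctke1091604.shtml',
]
for link in raw_links:
    print(clean_link(link))
```

The double backslashes in the earlier printout come from Python showing the list with `repr`; the response text itself contains a single backslash before each slash.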

Processing the titles:

```python
pat1 = r'"title":"(.*?)",'
titles = re.findall(pat1, res)
# print(titles)
for i in range(len(titles)):
    # turn the literal \uXXXX sequences into the actual characters
    titles[i] = titles[i].encode('utf-8').decode('unicode_escape')
    print(titles[i])
```
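The title fix works because `.encode('utf-8')` turns the text into bytes and the `unicode_escape` codec then interprets each `\uXXXX` sequence. One caveat, which is a general property of that codec: it reads all other bytes as Latin-1, so a title that already contained raw (unescaped) Chinese characters would come out garbled. The sketch below uses the start of the sample title from above and compares the result with `json.loads`, which handles both cases; `decode_title` is a hypothetical name for the article's one-liner.

```python
import json


def decode_title(raw):
    """Decode literal backslash-u escape sequences (the article's approach)."""
    return raw.encode('utf-8').decode('unicode_escape')


raw = r'\u674e\u5b87\u6625\u767b\u300a\u65f6\u5c1aCOSMO\u300b'
print(decode_title(raw))
print(json.loads('"' + raw + '"'))  # same result via the JSON parser

mixed = '时尚' + r'\u674e'         # raw Chinese text followed by one escape
print(decode_title(mixed))          # garbled: the raw UTF-8 bytes are read as Latin-1
print(json.loads('"' + mixed + '"'))
```

For the Sina feed every non-ASCII character appears to be escaped, which is why the simpler `unicode_escape` approach works there.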

With this in place, we can fetch news in bulk and get correct results.

The complete code is as follows:

```python
import os
import re
import json
import urllib.request
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup
from lxml import etree

# The choice of User-Agent can decide whether the data can be scraped at all
headers = {
    'content-type': 'application/json',
    'User-Agent': ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                   '(KHTML, like Gecko) Chrome/85.0.4183.83 Safari/537.36'),
}

SAVE_ROOT = r'E:\fashioninfo'  # storage path

# --- Test stage (kept commented out) ---
# url = 'https://fashion.sina.com.cn/style/man/2020-11-10/1939/doc-iiznezxs1005705.shtml'
# req = urllib.request.Request(url, headers=headers)
# response = urllib.request.urlopen(req, timeout=15)
# html = response.read().decode('utf-8', errors='ignore')
# tree = etree.HTML(html)
# print(tree.xpath("//div[@id='artibody']/p//text()"))  # article text
# soup = BeautifulSoup(html, "html.parser")
# print(soup.find('div', id='artibody'))                # same div via BeautifulSoup
# imageurl = tree.xpath('//div[@class="img_wrapper"]/img/@src')
# # e.g. //n.sinaimg.cn/sinakd2020119s/124/w1034h690/20201109/4728-kcpxnww9833831.jpg
# imageurl = ['http:' + u for u in imageurl]            # add the missing "http:" prefix
# timeline = tree.xpath("//div[@class='date-source']/span//text()")
# print(timeline[0][:11])                               # publication date


def gethtml(url):
    """Fetch a page and return its decoded HTML, or None on failure."""
    try:
        req = urllib.request.Request(url, headers=headers)
        response = urllib.request.urlopen(req, timeout=15)
        return response.read().decode('UTF-8', errors='ignore')
    except Exception as e:
        print("Failed to fetch page {}, reason:".format(url), e)
        return None


def get_pic(url):
    """Download an image and return its raw bytes, or None on failure."""
    try:
        pic_response = requests.get(url, timeout=10, headers=headers)
        return pic_response.content
    except Exception as e:
        print("Failed to fetch image {}, reason:".format(url), e)
        return None


def save_imgfile(texttitle, filename, content):
    """Save image bytes into a folder named after the article.

    Parameters: article title, image file name, image content.
    """
    save_dir = os.path.join(SAVE_ROOT, texttitle)
    os.makedirs(save_dir, exist_ok=True)
    ext = os.path.splitext(filename)[1].lower()
    if content and ext in ('.jpg', '.jpeg', '.png', '.webp', '.gif', '.bmp'):
        with open(os.path.join(save_dir, filename), 'wb') as f:
            f.write(content)
        print('Wrote {} successfully'.format(filename))


def title_modify(title):
    """Remove characters that are not allowed in Windows file names."""
    for ch in ':"|/\\*<>?':
        title = title.replace(ch, '')
    return title


def download_text(title, url):
    """Save the publication date and body text of one article as a .txt file."""
    res = gethtml(url)
    if res is None:
        return
    try:
        tree = etree.HTML(res)
        doctext = tree.xpath("//div[@id='artibody']/p//text()")
        timeinfo = tree.xpath("//div[@class='date-source']/span//text()")
        pub_time = timeinfo[0][:11]
        title = title_modify(title)
        os.makedirs(SAVE_ROOT, exist_ok=True)
        file_name = os.path.join(SAVE_ROOT, title + '.txt')
        with open(file_name, 'w', encoding='utf-8') as file:
            file.write(pub_time + '\n')
            for line in doctext:
                file.write(line)
    except Exception as e:
        print("Failed to scrape article {}, reason:".format(title), e)


def download_imgurlgetting(title, url):
    """Collect the image URLs of one article and save every image."""
    res = gethtml(url)
    if res is None:
        return
    tree = etree.HTML(res)
    if tree is None:
        return
    title = title_modify(title)
    img_urls = tree.xpath('//div[@class="img_wrapper"]/img/@src')
    imagenumber = 0
    for src in img_urls:
        try:
            # The src values lack the "http:" prefix ("//n.sinaimg.cn/..."), so add it;
            # other relative paths are resolved against the article URL
            if src.startswith('//'):
                full_url = 'http:' + src
            else:
                full_url = urljoin(url, src)
            found = re.findall(r'.*/(.*?)\.(jpg|jpeg|png|gif|bmp|webp)', full_url, re.I)
            if not found:
                print('Could not recognize the image file name in {}'.format(full_url))
                continue
            filename = '{}.{}'.format(imagenumber, found[0][1].lower())
            save_imgfile(title, filename, get_pic(full_url))
            imagenumber += 1
        except Exception as e:
            print('Failed to save image {}, reason:'.format(src), e)


# JSON feed of the fashion homepage; the "up" parameter selects the page
URL_TEMPLATE = (
    'https://interface.sina.cn/pc_api/public_news_data.d.json'
    '?callback=jQuery1112010660975891532987_1605232311914'
    '&cids=260&type=std_news%2Cstd_video%2Cstd_slide&pdps='
    '&editLevel=0%2C1%2C2%2C3&pageSize=20&up={}&down=0'
    '&top_id=iznctke1064637%2Ciznezxs1485561%2Ciznezxs1265693%2Ciznezxs1422737'
    '%2Ciznctke0646879%2Ciznezxs0057242%2Ciznctke0819305'
    '&mod=nt_home_fashion_latest&cTime=1602640501&action=0&_=1605232311915'
)

if __name__ == '__main__':
    number = int(input("Number of pages to scrape: "))
    urllist = [URL_TEMPLATE.format(i) for i in range(number)]
    pat1 = r'"title":"(.*?)",'
    pat2 = r'"url":"(.*?)",'  # the actual article page is not in tlink but in docurl
    count = 1
    for url in urllist:
        res = gethtml(url)
        if res is None:
            continue
        titles = [t.encode('utf-8').decode('unicode_escape') for t in re.findall(pat1, res)]
        links = [l.replace('\\', '') for l in re.findall(pat2, res)]
        for t, l in zip(titles, links):
            print('Scraping fashion item #{}:'.format(count), t)
            download_text(t, l)
            download_imgurlgetting(t, l)
            count += 1
```
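`title_modify` removes the characters Windows forbids in file names one at a time. An equivalent, more compact version with a single regular expression could look like the sketch below. The name `safe_filename`, the stripping of trailing dots and spaces (which Windows also rejects), and the `'untitled'` fallback are additions that are not in the original code.

```python
import re

# Characters that are not allowed in Windows file names
_INVALID = re.compile(r'[\\/:*?"<>|]')


def safe_filename(title):
    """Hypothetical alternative to title_modify: drop invalid characters in one pass."""
    cleaned = _INVALID.sub('', title).rstrip(' .')
    # An empty name cannot be used as a file or folder name
    return cleaned or 'untitled'


print(safe_filename('时尚|新闻: 冬日复古风?'))
```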

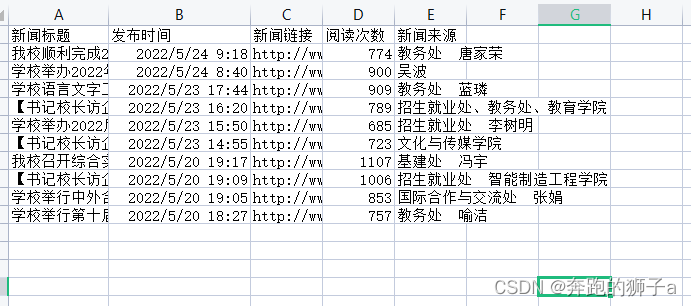

The scraped data is shown in the figure.

Summary and notes

- Use a suitable User-Agent

- Handle the extra backslashes

- Study the JSON feed URL to find its pattern, which makes it easy to fetch data in bulk
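On the first point: by default `urllib` identifies itself as `Python-urllib/3.x`, and some sites answer such requests differently from a browser. A minimal sketch of attaching a browser User-Agent (the same string the scraper uses) with the standard library, inspected without sending anything; `build_request` is a hypothetical helper name:

```python
import urllib.request

HEADERS = {
    'User-Agent': ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                   '(KHTML, like Gecko) Chrome/85.0.4183.83 Safari/537.36'),
}


def build_request(url):
    """Create a request that carries a browser User-Agent instead of urllib's default."""
    return urllib.request.Request(url, headers=HEADERS)


req = build_request('https://fashion.sina.com.cn/')
# urllib stores header names via str.capitalize(), hence the key 'User-agent'
print(req.get_header('User-agent'))
```

Passing the resulting `req` to `urllib.request.urlopen` sends the header with the request, as `gethtml` does in the full script.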