简单演示一下基于卷积神经网络的一维信号降噪,有个大致印象即可。

%% Plot the previous training CNN.

set_plot_defaults('on')

load('denoiser_sparse.mat');

h1{1} = double(conv1);

h1{2} = double(conv2);

h1{3} = reshape(double(conv3),[8,1,17]);

figure(1)

[r,c,~] = size(h1{1});

for i=1:1:r

for j=1:1:c

subplot(r,c,c*i-(c-j))

stem(flip(squeeze(h1{1}(i,j,:))))

hold on

plot(flip(squeeze(h1{1}(i,j,:))))

hold off

box off

end

end

sgtitle('layer1 (8x1)', 'FontSize', 10);

figure(2)

[r,c,~] = size(h1{3});

for i=1:1:r

for j=1:1:c

subplot(r,c,c*i-(c-j))

stem(flip(squeeze(h1{3}(i,j,:))))

hold on

plot(flip(squeeze(h1{3}(i,j,:))))

hold off

box off

end

end

sgtitle('layer3 (1x8)', 'FontSize', 10);

%% Plot the previous training CNN.

load('remove_13_retrained.mat');

h1{1} = double(conv1);

h1{2} = double(conv2);

h1{3} = double(conv3);

set_plot_defaults('on')

figure(3)

[r,c,~] = size(h1{1});

for i=1:1:r

for j=1:1:c

subplot(r,c,c*i-(c-j))

stem(flip(squeeze(h1{1}(i,j,:))), 'filled', 'MarkerSize', 2)

hold on

plot(flip(squeeze(h1{1}(i,j,:))))

hold off

xlim([0,10])

ylim([-1.2,1.2])

box off

end

end

sgtitle('layer1 (2x1)', 'FontSize', 10);

%

figure(4)

[r,c,~] = size(h1{2});

for i=1:1:r

for j=1:1:c

subplot(r,c,c*i-(c-j))

stem(flip(squeeze(h1{2}(i,j,:))), 'filled', 'MarkerSize', 2)

hold on

plot(flip(squeeze(h1{2}(i,j,:))))

hold off

xlim([0,38])

ylim([-0.25,inf])

box off

end

end

sgtitle('layer2 (2x2)', 'FontSize', 10);

figure(5)

[r,c,~] = size(h1{3});

for i=1:1:r

for j=1:1:c

subplot(r,c,c*i-(c-j))

stem(flip(squeeze(h1{3}(i,j,:))), 'filled', 'MarkerSize', 2)

hold on

plot(flip(squeeze(h1{3}(i,j,:))))

hold off

box off

end

end

sgtitle('layer3 (1x2)', 'FontSize', 10);

clear

% close all

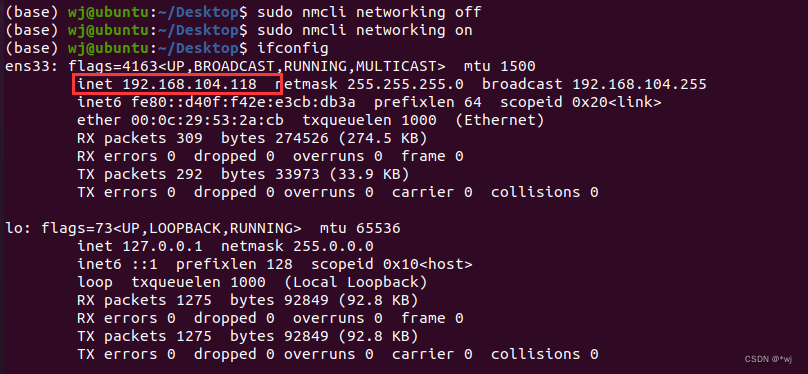

N = 500;

K = 50;

sigma = 1;

groundtruth = zeros(1, N);

index_random = randperm(N);

index = index_random(1:K);

groundtruth(index) = 10*2*(rand(1,K) - 0.5);

noise = sigma * randn(1,N);

input = groundtruth + noise;%% Plot the components of the input signal.

set_plot_defaults('on')

figure(6)

subplot(3,1,1)

plot(1:N, groundtruth)

title('S[n]')

ylim([-10 10])

box off

subplot(3,1,2)

plot(1:N, noise)

title('N[n]')

ylim([-10 10])box off

subplot(3,1,3)

plot(1:N, input)

title('x[n]')

ylim([-10 10])

box off

%% Create the proposed CNN

threshold1 = 2.4;

threshold2 = 4.8;

rho = 1; % rho is the ratio between output and input signal.

l = 37; % l is the length of the filters in the second layer.

training_sigma = 2; % The standard deviation of the Gaussian noise in the training data is between 0 and training_sigm

training_num = 60000; % training_num is the number of the training signals.

training_type = 2; % 1 means Uniform and 2 means Gaussian.

istrain_flag = false; % istrain_flag can determine if training a new CNN or directly using the trained parameters.

h1 = create_denoiser(l,rho,threshold1,threshold2,training_type,istrain_flag);

%% Plot the output of each layer

set_plot_defaults('on')

figure(7)

l1 = layer(input, h1{1});

subplot(3,1,1)

plot(1:N,input)

title('x[n]')

ylim([-12,12])

xlim([0,500])

box off

subplot(3,1,2)

plot(1:N,l1(1,:))

title('x_1[n]')

ylim([0,12])

xlim([0,500])

box off

subplot(3,1,3)

plot(1:N,l1(2,:))

title('x_2[n]')

ylim([0,12])

xlim([0,500])

box off

% set(

figure(8)

l2_NR = conv1d(l1,h1{2});

l2 = ReLU(l2_NR);

subplot(2,2,1)

plot(1:N,l2_NR(1,:))

title('c_1[n]')

ylim([-10,20])

xlim([0,500])

box off

subplot(2,2,3)

plot(1:N,l2_NR(2,:))

title('c_2[n]')

ylim([-10,20])

xlim([0,500])

box off

subplot(2,2,2)

plot(1:N,l2(1,:))

title('c_1[n] after ReLU')

ylim([0,12])

xlim([0,500])

box off

subplot(2,2,4)

plot(1:N,l2(1,:))

title('c_1[n] after ReLU')

ylim([0,12])

xlim([0,500])

box off

figure(9)

l3 = conv1d(l2,h1{3});

plot(1:N,l3)

title('y[n]')

ylim([-12,12])

xlim([0,500])

box off

title('y[n]')

%% Plot the structure of the proposed CNN

set_plot_defaults('on')

figure(10)

[r,c,~] = size(h1{1});

for i=1:1:r

for j=1:1:c

subplot(r,c,c*i-(c-j))

stem(flip(squeeze(h1{1}(i,j,:))), 'filled', 'MarkerSize', 2)

hold on

plot(flip(squeeze(h1{1}(i,j,:))))

hold off

xlim([0,10])

ylim([-1.2,1.2])

box off

end

end

sgtitle('layer1 (2x1)', 'FontSize', 10);

figure(11)

title('Layer 2')

[r,c,~] = size(h1{2});

for i=1:1:r

for j=1:1:c

subplot(r,c,c*i-(c-j))

stem(flip(squeeze(h1{2}(i,j,:))), 'filled', 'MarkerSize', 2)

hold on

plot(flip(squeeze(h1{2}(i,j,:))))

hold on

plot(19,h1{2}(i,j,19), 'MarkerSize', 10)

hold on

if i == j

text(20,h1{2}(i,j,19)-0.05, '\alpha_1')

text(30,h1{2}(i,j,8)+0.4, '\beta_1')

else

text(20,h1{2}(i,j,19)-0.05, '\alpha_2')

text(30,h1{2}(i,j,8)+0.2, '\beta_2')

end

plot(30,h1{2}(i,j,8), 'MarkerSize', 10)

hold off

xlim([0,38])

ylim([-0.25,inf])

box off

end

end

sgtitle('layer2 (2x2)', 'FontSize', 10);

figure(12)

title('Layer 3')

[r,c,~] = size(h1{3});

for i=1:1:r

for j=1:1:c

subplot(r,c,c*i-(c-j))

stem(flip(squeeze(h1{3}(i,j,:))), 'filled', 'MarkerSize', 2)

hold on

plot(flip(squeeze(h1{3}(i,j,:))))

hold off

box off

end

end

sgtitle('layer3 (1x2)', 'FontSize', 10);

%% Plot the output signal v.s. input signal and display the thresholds.

set_plot_defaults('on')

output = CNN(input,h1);

figure(13)

plot(input, output, 'o', 'MarkerSize', 4)

hold on;

line([threshold1 threshold1], [-10 10],'Color','magenta','LineStyle','--')

line([threshold2 threshold2], [-10 10],'Color','red','LineStyle','--')

line([-threshold1 -threshold1], [-10 10],'Color','magenta','LineStyle','--')

line([-threshold2 -threshold2], [-10 10],'Color','red','LineStyle','--')

xlabel('Input')

ylabel('Output')

grid

axis equal square

line([-10 10], [-10 10])

axis([-10,10,-10,10])

box off

%% Create the proposed CNN

threshold1 = 2.61;

threshold2 = 5.26;

rho = 1; % rho is the ratio between output and input signal.

l = 37; % l is the length of the filters in the second layer.

training_sigma = 2; % The standard deviation of the Gaussian noise in the training data is between 0 and training_sigm

training_num = 60000; % training_num is the number of the training signals.

training_type = 1; % 1 means Uniform and 2 means Gaussian.

istrain_flag = false; % istrain_flag can determine if training a new CNN or directly using the trained parameters.

h1 = create_denoiser(l,rho,threshold1,threshold2,training_type,istrain_flag);

N = 500;

K = 25;

sigma = 0.5;

groundtruth = zeros(1, N);

index_random = randperm(N);

index = index_random(1:K);

groundtruth(index) = 10*2*(rand(1,K) - 0.5);

noise = sigma * randn(1,N);

input = groundtruth + noise;

%% Display groundtruth, input signal and output signal. Plot input signal v.s. output signal in two forms. Pure signal cas

set_plot_defaults('on')

figure(14)

output = CNN(groundtruth, h1);

subplot(3,1,1)

plot(groundtruth)

title('pure signal (S[n])');

xlim([1,500])

ylim([-10,10])

box off

subplot(3,1,2)

plot(groundtruth)

title('noisy signal (x[n])');

xlim([1,500])

ylim([-10,10])

box off

subplot(3,1,3)

plot(output)

title('output signal (y[n])');

xlim([1,500])

ylim([-10,10])

box off

n = 1:N;

figure(15)

stem(n, groundtruth, 'MarkerSize', 4)

hold on

plot(n, output, 'ro', 'MarkerSize', 4)

hold off

legend('Input (x[n])', 'Output (y[n])', 'Location', 'SouthEast', 'FontSize', 10)

xlim([1,500])

ylim([-10,10])

% title('Input v.s. output')

box off

figure(16)

plot(groundtruth, output, 'ro')

hold on

xlabel('Input')

ylabel('Output')

grid

axis equal square

line([-10 10], [-10 10])

hold off

% title('Input v.s. output')

box off

%% Display groundtruth, input signal and output signal. Plot input signal v.s. output signal in two forms. Pure noise case

set_plot_defaults('on')

figure(17)

output = CNN(noise, h1);

subplot(3,1,1)

plot(zeros(1,N))

title('pure signal (S[n])');

xlim([1,500])

ylim([-10,10])

box off

subplot(3,1,2)

plot(noise)

title('noisy signal (x[n])');

xlim([1,500])

ylim([-10,10])

box off

subplot(3,1,3)

plot(output)

title('output signal (y[n])');

xlim([1,500])

ylim([-10,10])

box off

n = 1:N;

figure(18)

stem(n, noise, 'MarkerSize', 4)

hold on

plot(n, output, 'ro', 'MarkerSize', 4)

hold off

legend('Input (x[n])', 'Output (y[n])')

xlim([1,500])

ylim([-4,4])

% title('Input v.s. output')

box off

figure(20)

output = CNN(input, h1);

subplot(3,1,1)

plot(groundtruth)

title('pure signal (S[n])');

xlim([1,500])

ylim([-10,10])

box off

subplot(3,1,2)

plot(input)

title('noisy signal (x[n])');

xlim([1,500])

ylim([-10,10])

box off

subplot(3,1,3)

plot(output)

title('output signal (y[n])');

xlim([1,500])

ylim([-10,10])

box off

%% Create input signal (noisy signal) and ground truth (pure signal) for the performance part.

% N is the total length of the pure sparse signal.

% K is the number of non-zeros in the pure sparse signal.

% As a result, 1-K/N determines the sparsity of the pure signal.

N = 500;

num = 1000;

sigma_set = logspace(log10(0.1), log10(2), 20);

% sigma_set = 0.1:0.1:2;

MSE_output_ave = zeros(3,length(sigma_set));

MSE_input_ave = zeros(3,length(sigma_set));

SNR_output_ave = zeros(3,length(sigma_set));

SNR_input_ave = zeros(3,length(sigma_set));

for m = 1:1:3

K = 25 * m;

for i = 1:1:length(sigma_set)

sigma = sigma_set(i);

SNR_output = zeros(1,num);

SNR_input = zeros(1,num);

MSE_output = zeros(1,num);

MSE_input = zeros(1,num);

for j = 1:1:num

groundtruth = zeros(1, N);

index_random = randperm(N);

index = index_random(1:K);

groundtruth(index) = 10*2*(rand(1,K) - 0.5);

% groundtruth(index) = 10*randn(1,K);

input_noise = sigma*randn(1,N);

input = groundtruth + input_noise;

output = CNN(input, h1);

noise = output - groundtruth;

MSE_output(j) = mean(noise.^2);

MSE_input(j) = mean(input_noise.^2);

SNR_output(j) = 10*log10(mean(groundtruth.^2)/MSE_output(j));

SNR_input(j) = 10*log10(mean(groundtruth.^2)/MSE_input(j));

end

SNR_output_ave(m,i) = mean(SNR_output);

SNR_input_ave(m,i) = mean(SNR_input);

% MSE_output_ave(m,i) = mean(MSE_output);

% MSE_input_ave(m,i) = mean(MSE_input);

MSE_output_ave(m,i) = sqrt(mean(MSE_output));

MSE_input_ave(m,i) = sqrt(mean(MSE_input));

end

end

%% Plot the performance v.s. sparsity and noise level.

set_plot_defaults('on')

figure(23)

semilogx(sigma_set,SNR_output_ave(1,:),'r.-',sigma_set,SNR_output_ave(2,:),'k.-',sigma_set,SNR_output_ave(3,:),'g.-')

hold on

semilogx(sigma_set,SNR_input_ave(1,:),'rx-',sigma_set,SNR_input_ave(2,:),'kx-',sigma_set,SNR_input_ave(3,:),'gx-')

hold off

legend('Output SNR, sparsity = 95%','Output SNR, sparsity = 90%','Output SNR, sparsity = 85%', ...

'Input SNR, sparsity = 95%', 'Input SNR, sparsity = 90%', 'Input SNR, sparsity = 85%')

xlabel('σ')

ylabel('SNR in dB')

set(gca, 'xtick', [0.1 0.2 0.5 1 2.0])

xlim([min(sigma_set) max(sigma_set)])

box off

%

工学博士,担任《Mechanical System and Signal Processing》《中国电机工程学报》等期刊审稿专家,擅长领域:现代信号处理,机器学习/深度学习,时间序列分析/预测,设备智能故障诊断与健康管理PHM等。