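Here is a crawler for Tianyancha (天眼查) that anyone who needs one can use as-is. It is written with Selenium, so install Selenium (and ChromeDriver, since it drives Chrome) before using it.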

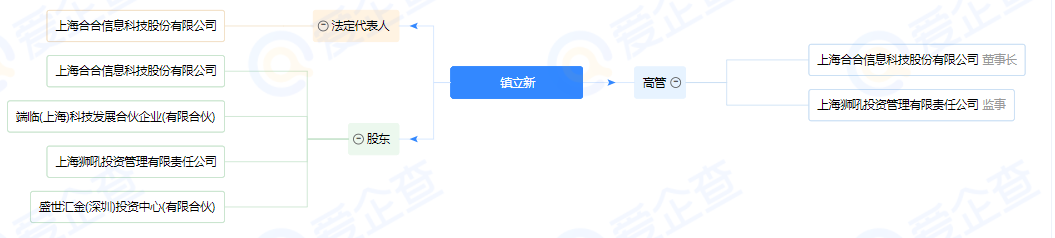

It scrapes the page shown above.

```python
from selenium import webdriver
from selenium.webdriver.support.wait import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.common.by import By
import time
import random
import csv
import json
```

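```python
# Open the site and crawl the companies one by one
def openwangzhi():
    new = '山西网才信息技术有限公司'
    # At run time this finds chromedriver.exe by itself (e.g. in the Python
    # directory, if that is on PATH), so its path does not need to be specified
    driver = webdriver.Chrome()
    # Open the search page (searching for an example company)
    driver.get("https://www.tianyancha.com/search?key=" + new)
    driver.maximize_window()
    time.sleep(5)
    # From here on we enter the company names we want and loop over them
    path = "b.csv"
    with open(path, "r", newline='', encoding='utf-8') as f:
        a = []
        for line in csv.reader(f):
            a.extend(line)
    # The names read from b.csv end up in `a`; set companyList = a to crawl
    # them instead of the hard-coded example below
    companyList = ['山西森甲能源科技有限公司']
    over = []
    while len(companyList) > 0:
        try:
            driver = xunhuanbianli(driver, companyList[0])
            over.append(companyList[0])
            companyList.pop(0)
            print(str(len(companyList)) + ' companies left to query')
        except Exception as e:
            print(e)  # this should really be written to a log
            print(companyList[0] + ' ---- query for this company failed')
            # Move the failed company to the end of the queue to retry it later
            # (a company that always fails keeps this loop running forever)
            companyList.append(companyList.pop(0))
```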
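`openwangzhi` moves a failed company to the end of the list and tries it again, so a single company that always fails keeps the loop alive. A minimal sketch of the same queue with a retry cap, in plain Python so it runs without a browser. `crawl_with_retries`, `fetch` and `max_attempts` are hypothetical names; `fetch` stands in for a call such as `xunhuanbianli(driver, name)`.

```python
from collections import deque


def crawl_with_retries(names, fetch, max_attempts=3):
    """Call fetch(name) for every name; re-queue failures up to max_attempts times.

    Returns (done, failed): names that succeeded and names that were given up on.
    """
    queue = deque((name, 1) for name in names)  # (company name, attempt number)
    done, failed = [], []
    while queue:
        name, attempt = queue.popleft()
        try:
            fetch(name)
            done.append(name)
        except Exception as e:
            print(name + ' ---- query failed (attempt ' + str(attempt) + '): ' + str(e))
            if attempt < max_attempts:
                queue.append((name, attempt + 1))  # retry after the others
            else:
                failed.append(name)
    return done, failed
```

For example, with a `fetch` that always raises for `'B'`, `crawl_with_retries(['A', 'B', 'C'], fetch)` returns `(['A', 'C'], ['B'])` after trying `'B'` three times.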

```python
def xunhuanbianli(driver, companyname):
    # Type the company name into the search box and submit
    input_box = driver.find_element(By.XPATH, '//div[@class="live-search-wrap"]/input')
    input_box.clear()
    time.sleep(random.randint(1, 5))
    input_box.send_keys(companyname)
    driver.find_element(By.XPATH, '//div[@class="input-group-btn btn -sm btn-primary"]').click()
    # Wait up to 30 s (polling every 0.5 s) for the result count to appear
    WebDriverWait(driver, 30, 0.5).until(EC.presence_of_element_located((By.CLASS_NAME, "num-title")))
    num = int(driver.find_element(By.XPATH, '//span[@class="tips-num"]').text)
    print('Search for ' + companyname + ' found: ------' + str(num) + '------ companies')
    scroll_to_bottom(driver, 5, 4)
    if num > 0:
        # The company was found: open the link of the first result
        time.sleep(random.randint(1, 3))
        a_href = driver.find_elements(By.XPATH, '//a[@class="name select-none "]')[0].get_attribute('href')
        driver.get(a_href)
        driver.switch_to.window(driver.window_handles[-1])
        table = driver.find_element(By.XPATH, '//table[@class="table -striped-col -border-top-none -breakall"]')
        scroll_to_bottom(driver, 8, 7)
        title = driver.find_element(By.XPATH, '//div[@class="content"]/div[@class="header"]/h1[@class="name"]').text
        jiexitable(table, title)
        driver.switch_to.window(driver.window_handles[-1])
        time.sleep(random.randint(1, 5))
    else:
        print('No information found for company ---- ' + companyname + ' ----')
    # Return the driver in both branches so the caller's loop can keep using it
    return driver
```
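`xunhuanbianli` types each name into the search box, while `openwangzhi` reaches the search page by appending the raw name to `https://www.tianyancha.com/search?key=`. If you would rather navigate straight to each company's result page, a small helper can percent-encode the Chinese name with the standard library. `build_search_url` is a hypothetical name, and this assumes the site keeps accepting the `key` query parameter that the original code uses.

```python
from urllib.parse import urlencode

# Same search endpoint the original code opens
SEARCH_BASE = "https://www.tianyancha.com/search"


def build_search_url(company_name):
    """Return the search URL with company_name percent-encoded as UTF-8."""
    return SEARCH_BASE + "?" + urlencode({"key": company_name})
```

Usage would be `driver.get(build_search_url('山西森甲能源科技有限公司'))`, followed by the same `WebDriverWait` on the result count.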

```python
# Parse the table
def jiexitable(tbody, title):
    # The table holds label and value cells side by side; collect every cell's text
    row = []
    for tr in tbody.find_elements(By.XPATH, './/tr'):
        for td in tr.find_elements(By.XPATH, './/td'):
            row.append(jixitd(td))
    # With an odd number of cells, pad with the company title so every label has a value
    if len(row) % 2 != 0:
        row.append(title)
    row_dic = {}
    for i in range(0, len(row), 2):
        row_dic[row[i]] = row[i + 1]
    print(row_dic)
    # Append this company's record to a.json
    with open('a.json', 'a', encoding='utf-8') as fileObject:
        json.dump(row_dic, fileObject, indent=4, ensure_ascii=False)
    return row_dic
```
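`jiexitable` pairs the cell texts as label, value, label, value, … and appends each company with `json.dump(..., indent=4)` in append mode, so after several companies `a.json` holds back-to-back objects rather than one valid JSON document. A minimal sketch of an alternative that keeps the same pairing rule but writes one object per line (JSON Lines), so the file can be read back line by line. `cells_to_record`, `append_jsonl` and `read_jsonl` are hypothetical helpers that work on plain strings, not Selenium elements.

```python
import json


def cells_to_record(cells, title):
    """Pair cell texts as label, value, ...; pad an odd list with title, like jiexitable."""
    cells = list(cells)
    if len(cells) % 2 != 0:
        cells.append(title)
    return {cells[i]: cells[i + 1] for i in range(0, len(cells), 2)}


def append_jsonl(path, record):
    """Append one record as a single JSON line (ensure_ascii=False keeps Chinese readable)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Read every record back, one JSON object per non-empty line."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Inside `jiexitable`, the cell texts collected in `row` could be passed to `cells_to_record(row, title)` and the result to `append_jsonl('a.jsonl', ...)`.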

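```python
# Parse a td cell
def jixitd(td):
    try:
        # Prefer the text of the first child element inside the cell
        return td.find_element(By.XPATH, './/*').text
    except Exception:
        # No child element: fall back to the cell's own text
        return td.text
```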

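```python
# Scroll the page
def scroll_to_bottom(driver, after, hou):
    js = "return document.body.scrollHeight"
    # Current scroll position starts at 0
    height = 0
    # Total height of the current page
    new_height = driver.execute_script(js)
    # Scroll target: a random point between 1/after and 1/hou of the page height
    to_location = random.randint(int(new_height / after), int(new_height / hou))
    while height < new_height:
        # Scroll down toward to_location in 100 px steps
        for i in range(height, to_location, 100):
            driver.execute_script('window.scrollTo(0, {})'.format(i))
            time.sleep(0.5)
        height = new_height
        time.sleep(2)
        # Re-read the page height; the loop repeats only if the page grew
        new_height = driver.execute_script(js)
```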
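`scroll_to_bottom` picks a random stop between 1/`after` and 1/`hou` of the page height and walks there in 100 px steps, pausing after each one, which makes the scrolling look less mechanical. The position arithmetic can be pulled into a pure function and checked without a browser. `scroll_positions` is a hypothetical helper, and `rng` is injected only so the random choice can be reproduced.

```python
import random


def scroll_positions(page_height, after, hou, step=100, rng=random):
    """Return the y offsets scroll_to_bottom passes to window.scrollTo on its first pass.

    The stop point is a random integer between page_height/after and page_height/hou
    (after >= hou, so the lower bound comes first). Offsets start at 0 and increase
    by `step`; like range(), the stop point itself is excluded.
    """
    low, high = int(page_height / after), int(page_height / hou)
    to_location = rng.randint(low, high)
    return list(range(0, to_location, step))
```

With a real driver, each offset would be sent as `driver.execute_script('window.scrollTo(0, {})'.format(y))` followed by `time.sleep(0.5)`, exactly as in the loop above.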

```python
if __name__ == '__main__':
    openwangzhi()
```