文章目录

- 单目测距

- 在kitti数据集中的测试结果

- C++工程

- 原理

- 代码注释

- 其他视觉测距算法-基于相似三角形的单目测距

- 算法原理

- 代码

- 参考资料

单目测距

在kitti数据集中的测试结果

C++工程

C++工程代码下载地址。

原理

主要的思想就是借鉴3D Bounding Box Estimation Using Deep Learning and Geometry论文进行实现。

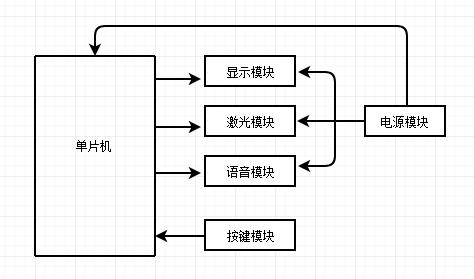

使用yolo进行2D图像目标检测+目标大小姿态估计网络+目标3D中心点解算模块实现单目测距。其中2D目标检测和大小姿态估计Apollo使用caffe框架进行构建深度学习模型,这部分的代码Apollo未开源,不在本文的讨论范围,本文主要说明的是Apollo的单目测距原理。

其算法流程图如下:

Apollo经过deep learning模块之后将会得到目标2D box, 目标类型,目标的宽度w,目标高度h,目标长度l。算法的解算依据如下:

代码注释

流程图1中的代码:

/* 进行2D转3D */

bool GeometryCameraConverter::Convert(std::vector<std::shared_ptr<VisualObject>> *objects) {if (!objects) return false;for (auto &obj : *objects) {Eigen::Vector2f trunc_center_pixel = Eigen::Vector2f::Zero();// 检测截断,在进行目标检测时有进行目标的截断CheckTruncation(obj, &trunc_center_pixel);// 根据目标的类型判断目标的大小是否越界,若是越界则将目标的大小重新设定CheckSizeSanity(obj);// 目标框float deg_alpha = obj->alpha * 180.0f / M_PI; //对象的观测角度Eigen::Vector2f upper_left(obj->upper_left.x(), obj->upper_left.y()); // 左上角:x1, y1Eigen::Vector2f lower_right(obj->lower_right.x(), obj->lower_right.y());// 右下角:x2, y2// 计算目标的距离distance和像素中心mass_center_pixelfloat distance = 0.0;Eigen::Vector2f mass_center_pixel = Eigen::Vector2f::Zero();if (obj->trunc_height < 0.25f) {// No truncation on 2D height 在二维高度上没有截断ConvertSingle(obj->height, obj->width, obj->length, deg_alpha, upper_left,lower_right, false, &distance, &mass_center_pixel);} else if (obj->trunc_width < 0.25f && obj->trunc_height > 0.25f) {// 2D height truncation and no width truncation 二维高度截断,没有宽度截断ConvertSingle(obj->height, obj->width, obj->length, deg_alpha, upper_left,lower_right, true, &distance, &mass_center_pixel);} else {// truncation on both sides 两边截断// Give fix values for detected box with both side and bottom truncation 给出被检测框的边和底截断的固定值distance = 10.0f;// Estimation of center pixel due to unknown truncation ratio 由于截断率未知,中心像素的估计mass_center_pixel = trunc_center_pixel;}// 反投影变换,计算目标的质心在相机坐标系下鸟瞰的坐标obj->distance = distance;Eigen::Vector3f camera_ray = camera_model_.unproject(mass_center_pixel);// 相机坐标系: 计算目标质心与光心的夹角DecideAngle(camera_ray, obj);// Center (3D Mass Center of 3D BBox),3D质心坐标去掉前面计算的尺度变换问题float scale = obj->distance / sqrt(camera_ray.x() * camera_ray.x() +camera_ray.y() * camera_ray.y() +camera_ray.z() * camera_ray.z());obj->center = camera_ray * scale;// Set 8 corner pixels,像素坐标系:计算8个顶点,SetBoxProjection(obj);}return true;

}// 根据目标类型对目标的物理长、宽、高进行修正

void GeometryCameraConverter::CheckSizeSanity(std::shared_ptr<VisualObject> obj) const {if (obj->type == ObjectType::VEHICLE) {obj->length = std::max(obj->length, 3.6f);obj->width = std::max(obj->width, 1.6f);obj->height = std::max(obj->height, 1.5f);} else if (obj->type == ObjectType::PEDESTRIAN) {obj->length = std::max(obj->length, 0.5f);obj->width = std::max(obj->width, 0.5f);obj->height = std::max(obj->height, 1.7f);} else if (obj->type == ObjectType::BICYCLE) {obj->length = std::max(obj->length, 1.8f);obj->width = std::max(obj->width, 1.2f);obj->height = std::max(obj->height, 1.5f);} else {obj->length = std::max(obj->length, 0.5f);obj->width = std::max(obj->width, 0.5f);obj->height = std::max(obj->height, 1.5f);}

}// 检测截断,在进行目标检测时有进行目标的截断

void GeometryCameraConverter::CheckTruncation(std::shared_ptr<VisualObject> obj,Eigen::Matrix<float, 2, 1> *trunc_center_pixel) const {auto width = camera_model_.get_width();auto height = camera_model_.get_height();// Ad-hoc 2D box truncation binary determination 二次确定特殊的2D框截断if (obj->upper_left.x() < 30.0f || width - 30.0f < obj->lower_right.x()) {obj->trunc_width = 0.5f;if (obj->upper_left.x() < 30.0f) {trunc_center_pixel->x() = obj->upper_left.x();} else {trunc_center_pixel->x() = obj->lower_right.x();}}if (obj->upper_left.y() < 30.0f || height - 30.0f < obj->lower_right.y()) {obj->trunc_height = 0.5f;trunc_center_pixel->x() =(obj->upper_left.x() + obj->lower_right.x()) / 2.0f;}trunc_center_pixel->y() = (obj->upper_left.y() + obj->lower_right.y()) / 2.0f;

}void GeometryCameraConverter::DecideAngle(const Eigen::Vector3f &camera_ray,std::shared_ptr<VisualObject> obj) const {float beta = std::atan2(camera_ray.x(), camera_ray.z());// Orientation is not reliable in these cases (DL model specific issue) 定位在这些情况下是不可靠的(DL模型特定的问题)if (obj->distance > 50.0f || obj->trunc_width > 0.25f) {obj->theta = -1.0f * M_PI_2;obj->alpha = obj->theta - beta;if (obj->alpha > M_PI) {obj->alpha -= 2 * M_PI;} else if (obj->alpha < -M_PI) {obj->alpha += 2 * M_PI;}} else { // Normal cases 正常的情况下 float theta = obj->alpha + beta;if (theta > M_PI) {theta -= 2 * M_PI;} else if (theta < -M_PI) {theta += 2 * M_PI;}obj->theta = theta;}

}void GeometryCameraConverter::SetBoxProjection(std::shared_ptr<VisualObject> obj) const {obj->pts8.resize(16);if (obj->trunc_width < 0.25f && obj->trunc_height < 0.25f) { // No truncationfor (int i = 0; i < 8; i++) {obj->pts8[i * 2] = pixel_corners_[i].x();obj->pts8[i * 2 + 1] = pixel_corners_[i].y();}}

}流程图第2部分代码:

bool GeometryCameraConverter::LoadCameraIntrinsics(const std::string &file_path) {YAML::Node node = YAML::LoadFile(file_path);// 获取相机内参Eigen::Matrix3f intrinsic_k;for (int i = 0; i < 3; ++i) {for (int j = 0; j < 3; ++j) {int index = i * 3 + j;intrinsic_k(i, j) = node["K"][index].as<float>();}}// 获取畸变系数Eigen::Matrix<float, 5, 1> intrinsic_d;for (int i = 0; i < 5; i++) {intrinsic_d(i, 0) = node["D"][i].as<float>();}// 获取图像的宽高float height = node["height"].as<float>();float width = node["width"].as<float>();camera_model_.set(intrinsic_k, width, height);camera_model_.set_distort_params(intrinsic_d);return true;

}

/*

h: height, 三维定向包围框的高度的物理大小

w: width, 三维定向包围框的宽度的物理大小

l: length, 三维定向包围框的长度的物理大小

alpha_deg: 对象的观测角度,像素

upper_left: 左上角:x1, y1

lower_right:右下角:x2, y2

use_width: 是否使用宽度

istance: 距离

mass_center_pixel: 质量中心,像素坐标

*/bool GeometryCameraConverter::ConvertSingle(const float h, const float w, const float l, const float alpha_deg,const Eigen::Vector2f &upper_left, const Eigen::Vector2f &lower_right,bool use_width, float *distance, Eigen::Vector2f *mass_center_pixel) {// Target Goals: Projection target//获取像素宽高int pixel_width = static_cast<int>(lower_right.x() - upper_left.x());int pixel_height = static_cast<int>(lower_right.y() - upper_left.y());int pixel_length = pixel_height;if (use_width) pixel_length = pixel_width;// Target Goals: Box center pixel// 获取像素目标框的中心Eigen::Matrix<float, 2, 1> box_center_pixel;box_center_pixel.x() = (lower_right.x() + upper_left.x()) / 2.0f;box_center_pixel.y() = (lower_right.y() + upper_left.y()) / 2.0f;// Generate alpha rotated 3D template here. Corners in Camera space:// Bottom: FL, FR, RR, RL => Top: FL, FR, RR, RL// 构建3D框,并根据角度进行旋转float deg_alpha = alpha_deg;float h_half = h / 2.0f;float w_half = w / 2.0f;float l_half = l / 2.0f;std::vector<Eigen::Vector3f> corners;corners.resize(8);corners[0] = Eigen::Vector3f(l_half, h_half, w_half);corners[1] = Eigen::Vector3f(l_half, h_half, -w_half);corners[2] = Eigen::Vector3f(-l_half, h_half, -w_half);corners[3] = Eigen::Vector3f(-l_half, h_half, w_half);corners[4] = Eigen::Vector3f(l_half, -h_half, w_half);corners[5] = Eigen::Vector3f(l_half, -h_half, -w_half);corners[6] = Eigen::Vector3f(-l_half, -h_half, -w_half);corners[7] = Eigen::Vector3f(-l_half, -h_half, w_half);Rotate(deg_alpha, &corners);corners_ = corners;pixel_corners_.clear();pixel_corners_.resize(8);// Try to get an initial Mass center pixel and vector 尝试得到一个初始质心像素和向量// 防止目标box超出图片的大小,给后续计算带来误差Eigen::Matrix<float, 3, 1> middle_v(0.0f, 0.0f, 20.0f);// camera_model_.project:在图像上投影一个3D点Eigen::Matrix<float, 2, 1> center_pixel = camera_model_.project(middle_v);// 将物理的3D框投影到图像中,得到最大外接框float max_pixel_x = std::numeric_limits<float>::min();float min_pixel_x = std::numeric_limits<float>::max();float max_pixel_y = std::numeric_limits<float>::min();float min_pixel_y = std::numeric_limits<float>::max();for (size_t i = 0; i < corners.size(); ++i) {Eigen::Vector2f point_2d = camera_model_.project(corners[i] + middle_v);min_pixel_x = std::min(min_pixel_x, point_2d.x());max_pixel_x = std::max(max_pixel_x, point_2d.x());min_pixel_y = std::min(min_pixel_y, point_2d.y());max_pixel_y = std::max(max_pixel_y, point_2d.y());}/* 初步计算目标在图像中的质心 */// 初始质心像素 与 投影到图像的3D-BOX的像素距离float relative_x =(center_pixel.x() - min_pixel_x) / (max_pixel_x - min_pixel_x);float relative_y =(center_pixel.y() - min_pixel_y) / (max_pixel_y - min_pixel_y);// 计算box质量中心,像素坐标mass_center_pixel->x() =(lower_right.x() - upper_left.x()) * relative_x + upper_left.x();mass_center_pixel->y() =(lower_right.y() - upper_left.y()) * relative_y + upper_left.y();// 像素坐标系转到3D坐标系的投影,其中z设为1Eigen::Matrix<float, 3, 1> mass_center_v =camera_model_.unproject(*mass_center_pixel);// 计算单位尺寸,就算每个维度与距离的比值,也就是目标质心的x,y,z坐标与距离是成正比的mass_center_v = MakeUnit(mass_center_v);// Distance search 使用二分法进行距离搜索,150.0f为相机的可视长度,0.1为起始距离。主要精度为0.1*distance =SearchDistance(pixel_length, use_width, mass_center_v, 0.1f, 150.0f);for (size_t i = 0; i < 1; ++i) {// Mass center search 质量中心搜索,与SearchDistance算法思想一样,更新mass_center_pixelSearchCenterDirection(box_center_pixel, *distance, &mass_center_v,mass_center_pixel);// Distance search,提高距离的精度*distance = SearchDistance(pixel_length, use_width, mass_center_v,0.9f * (*distance), 1.1f * (*distance));}return true;

}void GeometryCameraConverter::Rotate(const float alpha_deg, std::vector<Eigen::Vector3f> *corners) const {Eigen::AngleAxisf yaw(alpha_deg / 180.0f * M_PI, Eigen::Vector3f::UnitY());Eigen::AngleAxisf pitch(0.0, Eigen::Vector3f::UnitX());Eigen::AngleAxisf roll(0.0, Eigen::Vector3f::UnitZ());Eigen::Matrix3f rotation = yaw.toRotationMatrix() * pitch.toRotationMatrix() *roll.toRotationMatrix();Eigen::Matrix4f transform;transform.setIdentity();transform.block(0, 0, 3, 3) = rotation;for (auto &corner : *corners) {Eigen::Vector4f temp(corner.x(), corner.y(), corner.z(), 1.0f);temp = transform * temp;corner = Eigen::Vector3f(temp.x(), temp.y(), temp.z());}

}/**主要原理:使用二分法进行物理距离的搜索,主要评判指标为:物理坐标系投影到图像中的长度 等于 像素box的长度

**/

float GeometryCameraConverter::SearchDistance(const int pixel_length, const bool &use_width,const Eigen::Matrix<float, 3, 1> &mass_center_v, float close_d,float far_d) {float curr_d = 0.0f;int depth = 0;while (close_d <= far_d && depth < kMaxDistanceSearchDepth_) {curr_d = (far_d + close_d) / 2.0f;Eigen::Vector3f curr_p = mass_center_v * curr_d;float min_p = std::numeric_limits<float>::max();float max_p = 0.0f;for (size_t i = 0; i < corners_.size(); ++i) {Eigen::Vector2f point_2d = camera_model_.project(corners_[i] + curr_p);float curr_pixel = 0.0f;if (use_width) {curr_pixel = point_2d.x();} else {curr_pixel = point_2d.y();}min_p = std::min(min_p, curr_pixel);max_p = std::max(max_p, curr_pixel);}int curr_pixel_length = static_cast<int>(max_p - min_p);if (curr_pixel_length == pixel_length) {break;} else if (pixel_length < curr_pixel_length) {close_d = curr_d + 0.1f;} else { // pixel_length > curr_pixel_lengthfar_d = curr_d - 0.1f;}// Early break for 0.1m accuracyfloat next_d = (far_d + close_d) / 2.0f;if (std::abs(next_d - curr_d) < 0.1f) {break;}++depth;}// Only copy the last projection outEigen::Vector3f curr_p = mass_center_v * curr_d;for (size_t i = 0; i < corners_.size(); ++i) {Eigen::Vector2f point_2d = camera_model_.project(corners_[i] + curr_p);pixel_corners_[i] = point_2d;}return curr_d;

}void GeometryCameraConverter::SearchCenterDirection(const Eigen::Matrix<float, 2, 1> &box_center_pixel, const float curr_d,Eigen::Matrix<float, 3, 1> *mass_center_v,Eigen::Matrix<float, 2, 1> *mass_center_pixel) const {int depth = 0;while (depth < kMaxCenterDirectionSearchDepth_) {Eigen::Matrix<float, 3, 1> new_center_v = *mass_center_v * curr_d;float max_pixel_x = std::numeric_limits<float>::min();float min_pixel_x = std::numeric_limits<float>::max();float max_pixel_y = std::numeric_limits<float>::min();float min_pixel_y = std::numeric_limits<float>::max();for (size_t i = 0; i < corners_.size(); ++i) {Eigen::Vector2f point_2d =camera_model_.project(corners_[i] + new_center_v);min_pixel_x = std::min(min_pixel_x, point_2d.x());max_pixel_x = std::max(max_pixel_x, point_2d.x());min_pixel_y = std::min(min_pixel_y, point_2d.y());max_pixel_y = std::max(max_pixel_y, point_2d.y());}Eigen::Matrix<float, 2, 1> current_box_center_pixel;current_box_center_pixel.x() = (max_pixel_x + min_pixel_x) / 2.0;current_box_center_pixel.y() = (max_pixel_y + min_pixel_y) / 2.0;// Update mass center*mass_center_pixel += box_center_pixel - current_box_center_pixel;*mass_center_v = camera_model_.unproject(*mass_center_pixel);*mass_center_v = MakeUnit(*mass_center_v);if (std::abs(mass_center_pixel->x() - box_center_pixel.x()) < 1.0 &&std::abs(mass_center_pixel->y() - box_center_pixel.y()) < 1.0) {break;}++depth;}return;

}

/*单位距离时,x,y,z的比例*/

Eigen::Matrix<float, 3, 1> GeometryCameraConverter::MakeUnit(const Eigen::Matrix<float, 3, 1> &v) const {Eigen::Matrix<float, 3, 1> unit_v = v;float to_unit_scale =std::sqrt(unit_v.x() * unit_v.x() + unit_v.y() * unit_v.y() +unit_v.z() * unit_v.z());unit_v /= to_unit_scale;return unit_v;

}流程图的第3和第四部分:

/******************************************************************************* Copyright 2018 The Apollo Authors. All Rights Reserved.** Licensed under the Apache License, Version 2.0 (the "License");* you may not use this file except in compliance with the License.* You may obtain a copy of the License at** http://www.apache.org/licenses/LICENSE-2.0** Unless required by applicable law or agreed to in writing, software* distributed under the License is distributed on an "AS IS" BASIS,* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.* See the License for the specific language governing permissions and* limitations under the License.*****************************************************************************/#ifndef MODULES_PERCEPTION_OBSTACLE_CAMERA_COMMON_CAMERA_H_

#define MODULES_PERCEPTION_OBSTACLE_CAMERA_COMMON_CAMERA_H_#include <Eigen/Core>

#include <Eigen/Dense>#include <algorithm>namespace apollo {

namespace perception {template <typename T>

class CameraModel;

template <typename T>

class CameraDistort;/**@brief Print the matrix.*/

template <typename T>

std::ostream& operator<<(std::ostream& cout, const CameraModel<T>& camera);template <typename T>

std::ostream& operator<<(std::ostream& cout, const CameraDistort<T>& camera);/**@brief camera intrinsic of pin-hole camera model*/

template <typename T>

class CameraModel {public:CameraModel() {focal_length_x_ = 1;focal_length_y_ = 1;center_x_ = 0;center_y_ = 0;intrinsic_(0, 0) = 1;intrinsic_(0, 1) = 0;intrinsic_(0, 2) = 0;intrinsic_(1, 0) = 0;intrinsic_(1, 1) = 1;intrinsic_(1, 2) = 0;intrinsic_(2, 0) = 0;intrinsic_(2, 1) = 0;intrinsic_(2, 2) = 1;width_ = 1;height_ = 1;}void set(const Eigen::Matrix<T, 3, 3>& params, T w, T h) {intrinsic_ = params;focal_length_x_ = intrinsic_(0, 0);focal_length_y_ = intrinsic_(1, 1);center_x_ = intrinsic_(0, 2);center_y_ = intrinsic_(1, 2);width_ = w;height_ = h;}void set(T focal_length_x, T focal_length_y, T center_x, T center_y, T w,T h) {focal_length_x_ = focal_length_x;focal_length_y_ = focal_length_y;center_x_ = center_x;center_y_ = center_y;width_ = w;height_ = h;intrinsic_(0, 0) = focal_length_x_;intrinsic_(1, 1) = focal_length_y_;intrinsic_(0, 2) = center_x_;intrinsic_(1, 2) = center_y_;}/**@brief Project a 3D point on an image. 在图像上投影一个3D点,去掉了世界坐标到相机坐标系的变换。默认输入的数据就是相机坐标系的数据*/virtual Eigen::Matrix<T, 2, 1> project(const Eigen::Matrix<T, 3, 1>& pt3d) const {Eigen::Matrix<T, 2, 1> pt2d;pt2d[0] = pt3d[0] / pt3d[2];pt2d[1] = pt3d[1] / pt3d[2];return pixel_denormalize(pt2d);}/**@brief Unproject a pixel to 3D point on a given XY plane, where z = 1 在给定的XY平面上像素到3D点的投影,其中z为1*/virtual Eigen::Matrix<T, 3, 1> unproject(const Eigen::Matrix<T, 2, 1>& pt2d) const {Eigen::Matrix<T, 3, 1> pt3d;Eigen::Matrix<T, 2, 1> pt2d_tmp = pixel_normalize(pt2d);pt3d[0] = pt2d_tmp[0];pt3d[1] = pt2d_tmp[1];pt3d[2] = 1;return pt3d;}/**@brief Project a 3D point on an image. */virtual Eigen::Matrix<T, 2, 1> project(const Eigen::Matrix<T, 4, 4>& transform,const Eigen::Matrix<T, 3, 1>& pt3d) const {Eigen::Matrix<T, 3, 1> local_pt3d;local_pt3d[0] = transform(0, 0) * pt3d[0] + transform(0, 1) * pt3d[1] +transform(0, 2) * pt3d[2] + transform(0, 3);local_pt3d[1] = transform(1, 0) * pt3d[0] + transform(1, 1) * pt3d[1] +transform(1, 2) * pt3d[2] + transform(1, 3);local_pt3d[2] = transform(2, 0) * pt3d[0] + transform(2, 1) * pt3d[1] +transform(2, 2) * pt3d[2] + transform(2, 3);return project(local_pt3d);}/**@brief Check if a 3D point is in the view*/bool is_in_view(const Eigen::Matrix<T, 4, 4>& transform,const Eigen::Matrix<T, 3, 1>& pt3d) const {Eigen::Matrix<T, 3, 1> local_pt3d;local_pt3d[0] = transform(0, 0) * pt3d[0] + transform(0, 1) * pt3d[1] +transform(0, 2) * pt3d[2] + transform(0, 3);local_pt3d[1] = transform(1, 0) * pt3d[0] + transform(1, 1) * pt3d[1] +transform(1, 2) * pt3d[2] + transform(1, 3);local_pt3d[2] = transform(2, 0) * pt3d[0] + transform(2, 1) * pt3d[1] +transform(2, 2) * pt3d[2] + transform(2, 3);if (local_pt3d[2] <= 0) {return false;}Eigen::Matrix<T, 2, 1> pt2d = project(local_pt3d);if (pt2d[0] > 0 && pt2d[0] < width_ && pt2d[1] > 0 && pt2d[1] < height_) {return true;}return false;}/**@brief Get the x focal length. */inline T get_focal_length_x() const { return focal_length_x_; }/**@brief Get the y focal length. */inline T get_focal_length_y() const { return focal_length_y_; }/**@brief Get the optical center x. */inline T get_center_x() const { return center_x_; }/**@brief Get the optical center y. */inline T get_center_y() const { return center_y_; }/**@brief Get the intrinsic matrix K. */inline const Eigen::Matrix<T, 3, 3>& get_intrinsic() const {return intrinsic_;}/**@brief Get the intrinsic matrix K. */inline Eigen::Matrix<T, 3, 3>& get_intrinsic() { return intrinsic_; }/**@brief Get the image width */inline T get_width() const { return width_; }/**@brief Get the image height */inline T get_height() const { return height_; }friend std::ostream& operator<<<>(std::ostream& out,const CameraModel<T>& camera);protected:/**@brief Normalize a 2D pixel. Convert a 2D pixel as if the image is taken* with a camera,* whose K = identity matrix. */virtual Eigen::Matrix<T, 2, 1> pixel_normalize(const Eigen::Matrix<T, 2, 1>& pt2d) const {Eigen::Matrix<T, 2, 1> p;p[0] = (pt2d[0] - center_x_) / focal_length_x_;p[1] = (pt2d[1] - center_y_) / focal_length_y_;return p;}/**@brief Denormalize a 2D pixel. Convert a 2D pixel as if the image is taken 非规格化2D像素。转换一个2D像素,就像图像是用一个相机拍摄的,其K = intrinsic_。(不考虑畸变系数)* with a camera,* whose K = intrinsic_. */virtual Eigen::Matrix<T, 2, 1> pixel_denormalize(const Eigen::Matrix<T, 2, 1>& pt2d) const {Eigen::Matrix<T, 2, 1> p;p[0] = pt2d[0] * focal_length_x_ + center_x_;p[1] = pt2d[1] * focal_length_y_ + center_y_;return p;}protected:/**@brief The camera intrinsic matrix. */Eigen::Matrix<T, 3, 3> intrinsic_; /**@brief The focal length x. */T focal_length_x_;/**@brief The focal length y. */T focal_length_y_;/**@brief The optical center x. */T center_x_;/**@brief The optical center y. */T center_y_;/**@brief Image width */T width_;/**@brief Image height */T height_;

};/**@brief camera intrinsic of pin-hole camera model with distortion*/

template <typename T>

class CameraDistort : public CameraModel<T> {public:/**@brief The default constructor. */CameraDistort() {distort_params_[0] = 0;distort_params_[1] = 0;distort_params_[2] = 0;distort_params_[3] = 0;distort_params_[4] = 0;}/**@brief Project a 3D point on an image. */virtual Eigen::Matrix<T, 2, 1> project(const Eigen::Matrix<T, 3, 1>& pt3d) const {Eigen::Matrix<T, 2, 1> pt2d;pt2d[0] = pt3d[0] / pt3d[2];pt2d[1] = pt3d[1] / pt3d[2];return pixel_denormalize(pt2d);}/**@brief Unproject a pixel to 3D point on a given XY plane, where z = 1 */virtual Eigen::Matrix<T, 3, 1> unproject(const Eigen::Matrix<T, 2, 1>& pt2d) const {Eigen::Matrix<T, 3, 1> pt3d;Eigen::Matrix<T, 2, 1> pt2d_tmp = pixel_normalize(pt2d);pt3d[0] = pt2d_tmp[0];pt3d[1] = pt2d_tmp[1];pt3d[2] = 1;return pt3d;}/**@brief Project a 3D point on an image. */virtual Eigen::Matrix<T, 2, 1> project(const Eigen::Matrix<T, 4, 4>& transform,const Eigen::Matrix<T, 3, 1>& pt3d) const {Eigen::Matrix<T, 3, 1> local_pt3d;local_pt3d[0] = transform(0, 0) * pt3d[0] + transform(0, 1) * pt3d[1] +transform(0, 2) * pt3d[2] + transform(0, 3);local_pt3d[1] = transform(1, 0) * pt3d[0] + transform(1, 1) * pt3d[1] +transform(1, 2) * pt3d[2] + transform(1, 3);local_pt3d[2] = transform(2, 0) * pt3d[0] + transform(2, 1) * pt3d[1] +transform(2, 2) * pt3d[2] + transform(2, 3);return project(local_pt3d);}/**@brief Set the distortion parameters. */void set_distort_params(T d0, T d1, T d2, T d3, T d4) {distort_params_[0] = d0;distort_params_[0] = d1;distort_params_[0] = d2;distort_params_[0] = d3;distort_params_[0] = d4;}/**@brief Set the distortion parameters. */inline void set_distort_params(const Eigen::Matrix<T, 5, 1>& params) {distort_params_ = params;}/**@brief Get the distortion parameters. */inline const Eigen::Matrix<T, 5, 1>& get_distort_params() const {return distort_params_;}/**@brief Get the distortion parameters. */inline Eigen::Matrix<T, 5, 1>& get_distort_params() {return distort_params_;}friend std::ostream& operator<<<>(std::ostream& out,const CameraDistort<T>& camera);protected:/**@brief Normalize a 2D pixel. Convert a 2D pixel as if the image is taken* with a camera,* whose K = identity matrix. */virtual Eigen::Matrix<T, 2, 1> pixel_normalize(const Eigen::Matrix<T, 2, 1>& pt2d) const {Eigen::Matrix<T, 2, 1> pt2d_distort = CameraModel<T>::pixel_normalize(pt2d);Eigen::Matrix<T, 2, 1> pt2d_undistort = pt2d_distort; // Initial guessfor (unsigned int i = 0; i < 20; ++i) {T r_sq = pt2d_undistort[0] * pt2d_undistort[0] +pt2d_undistort[1] * pt2d_undistort[1];T k_radial = 1.0 + distort_params_[0] * r_sq +distort_params_[1] * r_sq * r_sq +distort_params_[4] * r_sq * r_sq * r_sq;T delta_x_0 =2 * distort_params_[2] * pt2d_undistort[0] * pt2d_undistort[1] +distort_params_[3] *(r_sq + 2 * pt2d_undistort[0] * pt2d_undistort[0]);T delta_x_1 =distort_params_[2] *(r_sq + 2 * pt2d_undistort[1] * pt2d_undistort[1]) +2 * distort_params_[3] * pt2d_undistort[0] * pt2d_undistort[1];pt2d_undistort[0] = (pt2d_distort[0] - delta_x_0) / k_radial;pt2d_undistort[1] = (pt2d_distort[1] - delta_x_1) / k_radial;}return pt2d_undistort;}/**@brief Denormalize a 2D pixel. Convert a 2D pixel as if the image is taken. 非规格化2D像素。转换一个2D像素,就像图像是生成。(考虑畸变系数)* with a camera,* whose K = intrinsic_. */virtual Eigen::Matrix<T, 2, 1> pixel_denormalize(const Eigen::Matrix<T, 2, 1>& pt2d) const {// Add distortionT r_sq = pt2d[0] * pt2d[0] + pt2d[1] * pt2d[1];Eigen::Matrix<T, 2, 1> pt2d_radial =pt2d *(1 + distort_params_[0] * r_sq + distort_params_[1] * r_sq * r_sq +distort_params_[4] * r_sq * r_sq * r_sq);Eigen::Matrix<T, 2, 1> dpt2d;dpt2d[0] = 2 * distort_params_[2] * pt2d[0] * pt2d[1] +distort_params_[3] * (r_sq + 2 * pt2d[0] * pt2d[0]);dpt2d[1] = distort_params_[2] * (r_sq + 2 * pt2d[1] * pt2d[1]) +2 * distort_params_[3] * pt2d[0] * pt2d[1];Eigen::Matrix<T, 2, 1> pt2d_undistort;pt2d_undistort[0] = pt2d_radial[0] + dpt2d[0];pt2d_undistort[1] = pt2d_radial[1] + dpt2d[1];// Add intrinsic Kreturn CameraModel<T>::pixel_denormalize(pt2d_undistort);}protected:/**@brief The distortion parameters.** See here for the definition of the parameters:* http://www.vision.caltech.edu/bouguetj/calib_doc/htmls/parameters.html*/Eigen::Matrix<T, 5, 1> distort_params_;

};template <typename T>

std::ostream& operator<<(std::ostream& cout, const CameraModel<T>& camera) {cout << camera.intrinsic_ << "\n [" << camera.width_ << "," << camera.height_<< "]\n";return cout;

}template <typename T>

std::ostream& operator<<(std::ostream& cout, const CameraDistort< camera) {cout << camera.intrinsic_ << "\n [" << camera.width_ << "," << camera.height_<< "]\n";cout << camera.distort_params_;return cout;

}typedef CameraModel<double> CameraD;

typedef CameraDistort<double> CameraDistortD;} // namespace perception

} // namespace apollo#endif // MODULES_PERCEPTION_OBSTACLE_CAMERA_COMMON_CAMERA_H_

目标属性部分:

struct alignas(16) VisualObject {// Per-frame object id, assigned from detection 每帧对象id,从检测开始分配int id = 0;// Confidence of objectness, ranging as [0, 1] 对象的可信度,范围为[0,1]float score = 0.0f;// [pixel] 2D bounding box [像素]2D边框// upper-left corner: x1, y1 左上角:x1, y1Eigen::Vector2f upper_left; // lower-right corner: x2, y2 右下角:x2, y2Eigen::Vector2f lower_right;// front box upper-left corner: x1, y1 前框左上角:x1, y1Eigen::Vector2d front_upper_left;// front box lower-right corner: x2, y2 前框右下角:x2, y2Eigen::Vector2d front_lower_right;// front box upper-left corner: x1, y1 后框左上角:x1, y1Eigen::Vector2d back_upper_left;// front box lower-right corner: x2, y2 后框右下角:x2, y2Eigen::Vector2d back_lower_right;// 2Dto3D, pts8.resize(16), x, y...std::vector<float> pts8;// 2D bounding box truncation ratio, for out-of-image objects 非图像对象的2D边框截断率float trunc_width = 0.0f;float trunc_height = 0.0f;// Object type from detection 来自检测的对象类型ObjectType type = ObjectType::UNKNOWN;// Probability of each object type 每个对象类型的概率std::vector<float> type_probs;// ROI pooling feature from layers of deep learning detection model. 深度学习检测模型的ROI pooling特征。单个物体的特征维度,这里是576std::vector<float> object_feature;// Internal object classification type. 内部对象分类类型InternalObjectType internal_type;// Internal probability of each type, used for track type. 每种类型的内部概率,用于跟踪类型float internal_type_probs[INT_MAX_OBJECT_TYPE];// [meter] physical size of 3D oriented bounding box 。[物理]三维定向包围框的物理大小// length is the size in the main direction 。长度是主要方向的尺寸float length = 0.0f;float width = 0.0f;float height = 0.0f;// [radian] observation angle of object, ranging as [-pi, pi] 。 [radian]对象的观测角度,范围为[-pi, pi]float alpha = 0.0f;// [radian] Rotation around the vertical axis, ranging as [-pi, pi] 。[radian]绕垂直轴旋转,范围为[-pi, pi]// the yaw angle, theta = 0.0f means direction = (1, 0, 0) 。偏航角,theta = 0.0f表示方向= (1,0,0)float theta = 0.0f;// main direction 主要方向Eigen::Vector3f direction = Eigen::Vector3f(1.0f, 0.0f, 0.0f);// [meter] center of the object。 [物理] 物体的中心Eigen::Vector3f center = Eigen::Vector3f::Zero();// [meter] distance to object physical center from camera origin [meter]从相机原点到物体物理中心的距离float distance = 0.0f;// [meter / second] physical velocity of the object, (vx, vy, vz) [米/秒]物体的物理速度,(vx, vy, vz)Eigen::Vector3f velocity = Eigen::Vector3f::Zero();// kalman filter state uncertainty set by different sensor type 根据不同的传感器类型设置卡尔曼滤波器的状态不确定度// each sensor need to model individually 每个传感器需要单独建模Eigen::Matrix<double, 4, 4> state_uncertainty =Eigen::Matrix<double, 4, 4>::Identity();// globally unique tracking id for camera visual objects 摄像头视觉对象的全局唯一跟踪idint track_id = 0;// [second] age of the tracked object [second]被跟踪对象的年龄double track_age = 0.0;// [second] the last observed timestamp [second]最后观察到的时间戳double last_track_timestamp = 0.0;

};

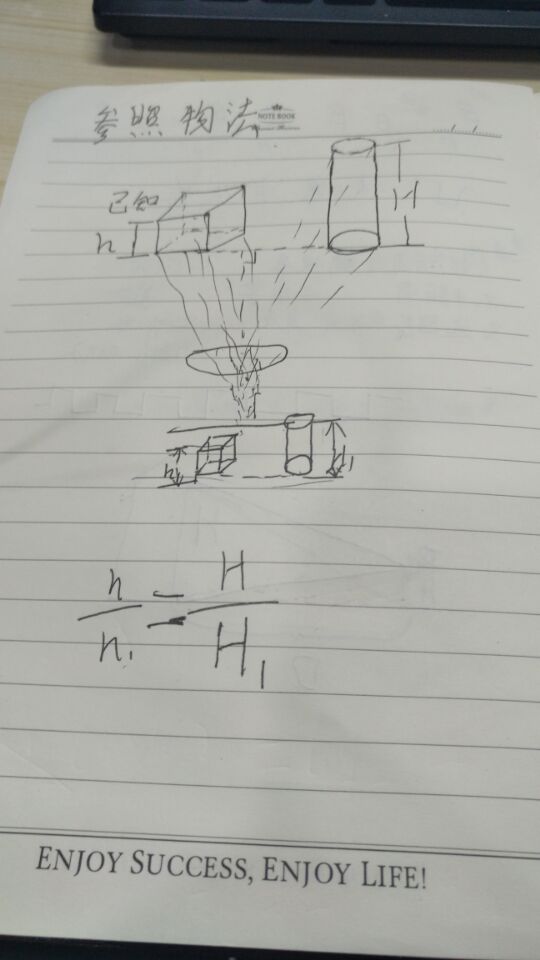

其他视觉测距算法-基于相似三角形的单目测距

算法原理

我们将使用相似三角形来计算相机到一个已知的物体或者目标的距离。 相似三角形就是这么一回事:假设我们有一个宽度为 W 的目标或者物体。然后我们将这个目标放在距离我们的相机为 D 的位置。我们用相机对物体进行拍照并且测量物体的像素宽度 P 。这样我们就得出了相机焦距的公式:F = (P x D) / W

举个例子,假设我在离相机距离 D = 24 英寸的地方放一张标准的 8.5 x 11 英寸 A4 纸(横着放;W = 11)并且拍下一张照片。我测量出照片中 A4 纸的像素宽度为 P = 249 像素。因此我的焦距 F 是:

F = (248px x 24in) / 11in = 543.45

当我继续将我的相机移动靠近或者离远物体或者目标时,我可以用相似三角形来计算出物体离相机的距离:D’ = (W x F) / P

从以上的解释中,我们可以看到,要想得到距离,我们就要知道摄像头的焦距和目标物体的大小,这两个已知条件根据公式:D’ = (W x F) / P

得出目标到摄像机的距离D,其中P是指像素距离,W是A4纸的宽度,F是摄像机焦距。

代码

import numpy as np

import cv2

# 找到目标函数

def find_marker(image):# convert the image to grayscale, blur it, and detect edges#将图像转换成灰度、模糊和检测边缘gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)gray = cv2.GaussianBlur(gray, (5, 5), 0)edged = cv2.Canny(gray, 35, 125)# find the contours in the edged image and keep the largest one;#在边缘图像中找到轮廓并保持最大的轮廓# we'll assume that this is our piece of paper in the image#我们假设这是我们在图像中的一张纸(_, cnts, _) = cv2.findContours(edged.copy(), cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)# 求最大面积c = max(cnts, key = cv2.contourArea)# compute the bounding box of the of the paper region and return it#计算纸张区域的边界框并返回它# cv2.minAreaRect() c代表点集,返回rect[0]是最小外接矩形中心点坐标,# rect[1][0]是width,rect[1][1]是height,rect[2]是角度return cv2.minAreaRect(c)# 距离计算函数

def distance_to_camera(knownWidth, focalLength, perWidth):# compute and return the distance from the maker to the camera#计算并返回从目标到相机的距离return (knownWidth * focalLength) / perWidth# initialize the known distance from the camera to the object, which

# in this case is 24 inches

#初始化已知距离从相机到对象,在这种情况下是24英寸

KNOWN_DISTANCE = 24.0# initialize the known object width, which in this case, the piece of

# paper is 11 inches wide

#初始化已知的物体宽度,在这种情况下,纸是11英寸宽。

# A4纸的长和宽(单位:inches)

KNOWN_WIDTH = 11.69

KNOWN_HEIGHT = 8.27# initialize the list of images that we'll be using

#初始化我们将要使用的图像列表

IMAGE_PATHS = ["Picture1.jpg", "Picture2.jpg", "Picture3.jpg"]# load the furst image that contains an object that is KNOWN TO BE 2 feet

# from our camera, then find the paper marker in the image, and initialize

# the focal length

#加载包含一个距离我们相机2英尺的物体的第一张图像,然后找到图像中的纸张标记,并初始化焦距

#读入第一张图,通过已知距离计算相机焦距

image = cv2.imread("E:\\lena.jpg") #应使用摄像头拍的图

marker = find_marker(image)

focalLength = (marker[1][0] * KNOWN_DISTANCE) / KNOWN_WIDTH # D’ = (W x F) / P#通过摄像头标定获取的像素焦距

#focalLength = 811.82

print('focalLength = ',focalLength)#打开摄像头

camera = cv2.VideoCapture(0)while camera.isOpened():# get a frame(grabbed, frame) = camera.read()marker = find_marker(frame)if marker == 0:print(marker)continueinches = distance_to_camera(KNOWN_WIDTH, focalLength, marker[1][0])# draw a bounding box around the image and display it#在图像周围绘制一个边界框并显示它box = cv2.boxPoints(marker)box = np.int0(box)cv2.drawContours(frame, [box], -1, (0, 255, 0), 2)# inches 转换为 cmcv2.putText(frame, "%.2fcm" % (inches *30.48/ 12),(frame.shape[1] - 200, frame.shape[0] - 20), cv2.FONT_HERSHEY_SIMPLEX,2.0, (0, 255, 0), 3)# show a framecv2.imshow("capture", frame)if cv2.waitKey(1) & 0xFF == ord('q'):break

camera.release()

cv2.destroyAllWindows()

参考资料

单目摄像机测距

毕业课题项目——基于单目摄像头的距离测量