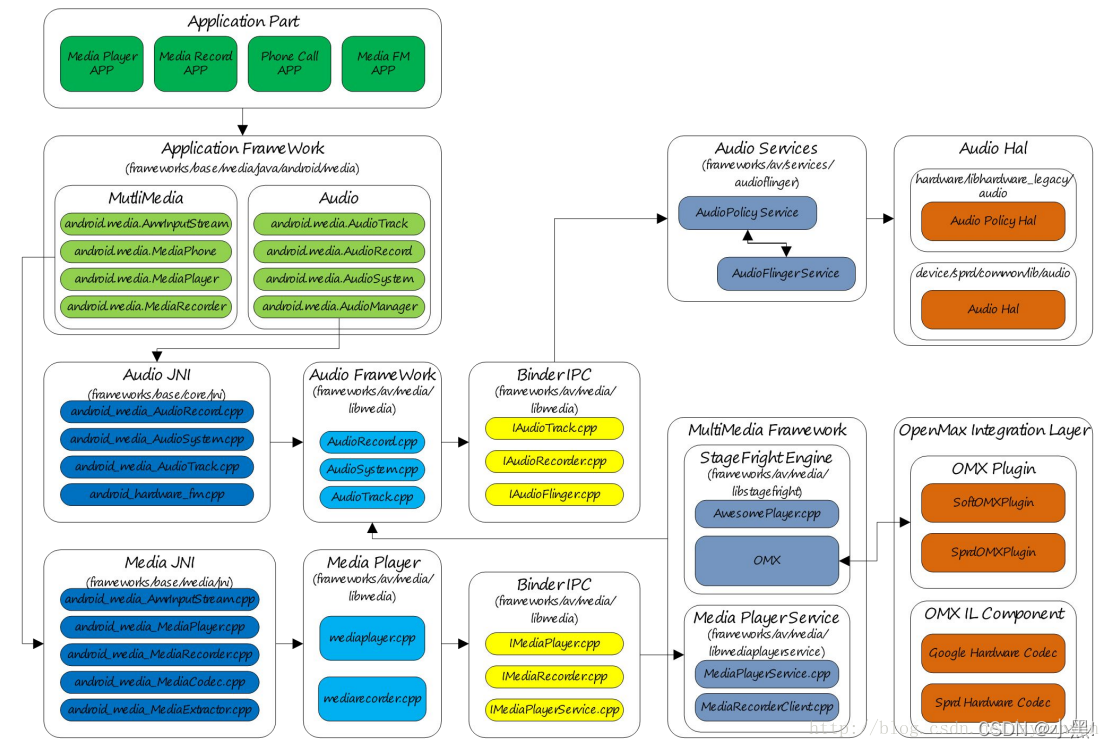

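I needed to implement the A2DP sink function, so I took a quick look at how AudioFlinger (AF) and AudioPolicyService (APS) are structured. These are my study notes.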

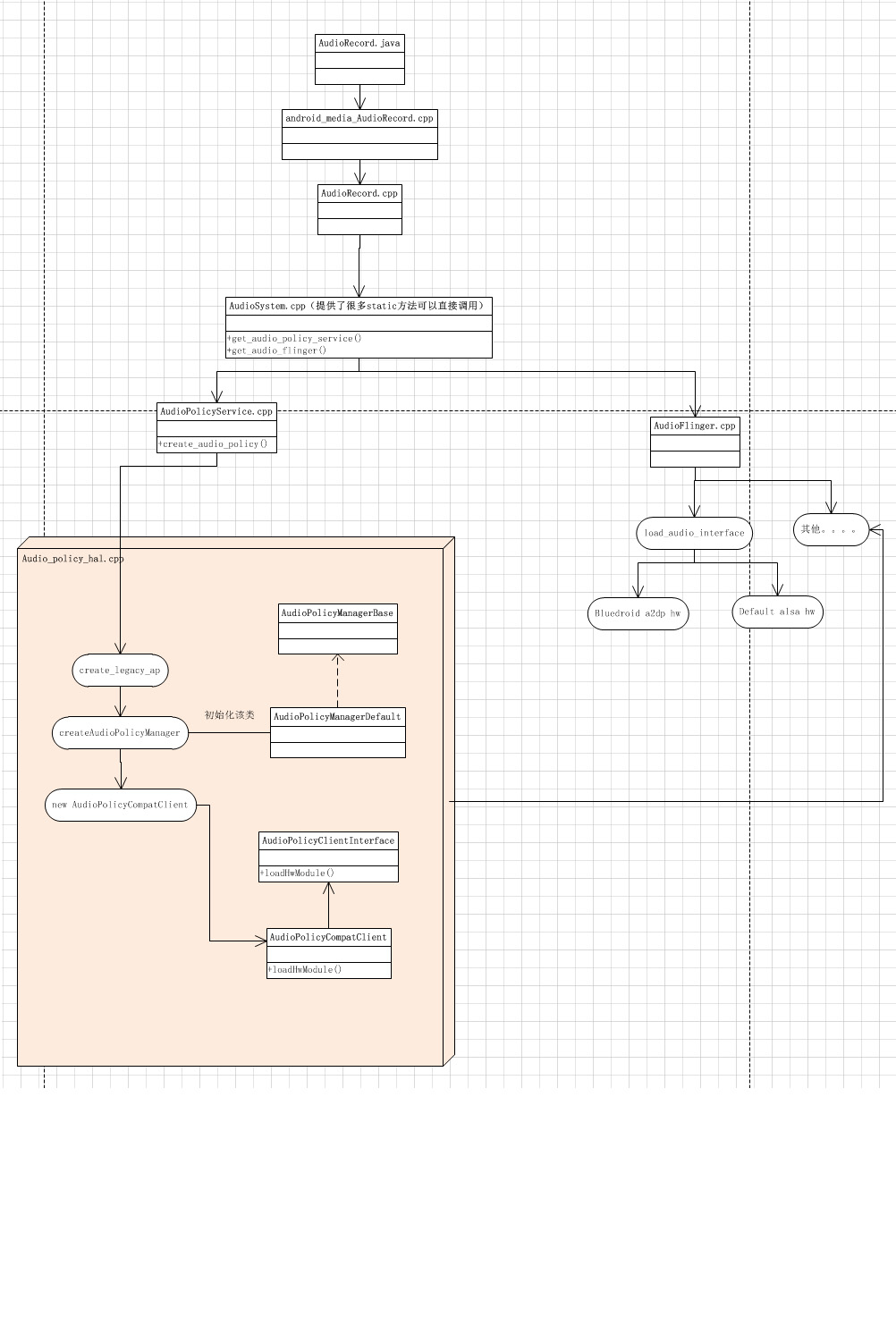

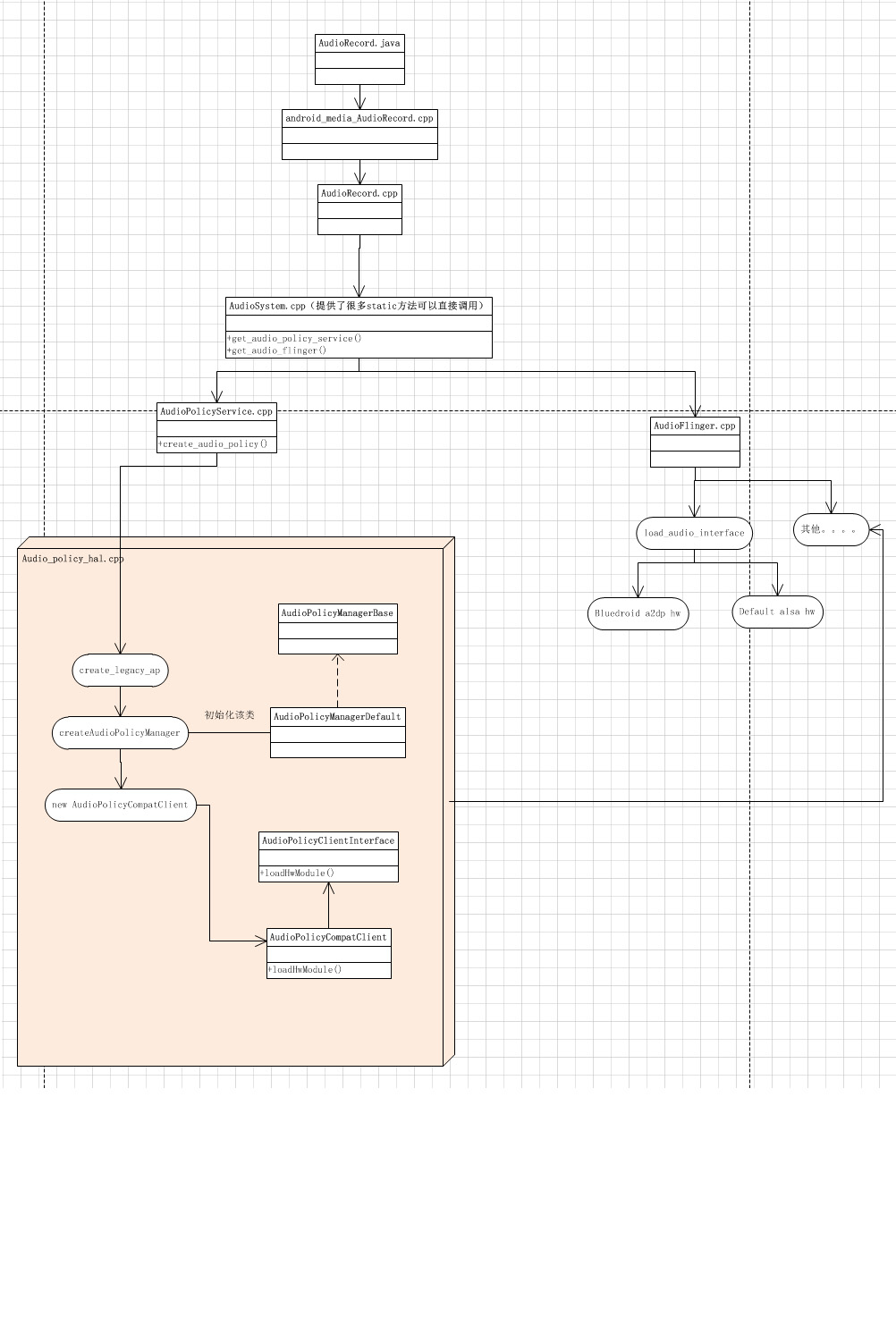

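Before going further, let's look at AudioFlinger and AudioPolicyService. AudioFlinger does the actual work, including the concrete operations that AudioPolicyService asks for; AudioPolicyService mainly handles the policy for choosing input and output device channels.

So how does an Android device know which audio devices are available in each scenario?

audio_policy.conf (located in libhardware_legacy/audio/) defines the following: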

    global_configuration {
      attached_output_devices AUDIO_DEVICE_OUT_SPEAKER
      default_output_device AUDIO_DEVICE_OUT_SPEAKER
      attached_input_devices AUDIO_DEVICE_IN_BUILTIN_MIC|AUDIO_DEVICE_IN_REMOTE_SUBMIX
    }

    # audio hardware module section: contains descriptors for all audio hw modules present on the
    # device. Each hw module node is named after the corresponding hw module library base name.
    # For instance, "primary" corresponds to audio.primary.<device>.so.
    # The "primary" module is mandatory and must include at least one output with
    # AUDIO_OUTPUT_FLAG_PRIMARY flag.
    # Each module descriptor contains one or more output profile descriptors and zero or more
    # input profile descriptors. Each profile lists all the parameters supported by a given output
    # or input stream category.
    # The "channel_masks", "formats", "devices" and "flags" are specified using strings corresponding
    # to enums in audio.h and audio_policy.h. They are concatenated by use of "|" without space or "\n".

    audio_hw_modules {
      primary {
        outputs {
          primary {
            sampling_rates 44100
            channel_masks AUDIO_CHANNEL_OUT_STEREO
            formats AUDIO_FORMAT_PCM_16_BIT
            devices AUDIO_DEVICE_OUT_EARPIECE|AUDIO_DEVICE_OUT_SPEAKER|AUDIO_DEVICE_OUT_WIRED_HEADSET|AUDIO_DEVICE_OUT_AUX_DIGITAL|AUDIO_DEVICE_OUT_WIRED_HEADPHONE
            flags AUDIO_OUTPUT_FLAG_PRIMARY
          }
        }
        inputs {
          primary {
            sampling_rates 8000|11025|16000|22050|32000|44100|48000
            channel_masks AUDIO_CHANNEL_IN_MONO|AUDIO_CHANNEL_IN_STEREO
            formats AUDIO_FORMAT_PCM_16_BIT
            devices AUDIO_DEVICE_IN_BUILTIN_MIC|AUDIO_DEVICE_IN_WIRED_HEADSET|AUDIO_DEVICE_IN_WFD
          }
        }
      }
      a2dp {
        outputs {
          a2dp {
            sampling_rates 44100
            channel_masks AUDIO_CHANNEL_OUT_STEREO
            formats AUDIO_FORMAT_PCM_16_BIT
            devices AUDIO_DEVICE_OUT_ALL_A2DP
          }
        }
      }
      # ... (rest omitted)

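These entries describe the input and output channels that the device uses; different scenarios use different channels.

When AudioPolicyManagerBase.cpp initializes, it calls loadAudioPolicyConfig(). Each profile in the configuration is stored in an IOProfile object, which is placed in the mInputProfiles or mOutputProfiles collection of its module (primary, a2dp, and so on). Finally, all modules are kept in the mHwModules list.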
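To make that containment relationship concrete, here is a minimal sketch of the data model, assuming heavily simplified stand-ins for IOProfile and HwModule. The field types and the buildExampleModules() helper are hypothetical and only mirror the config excerpt above; the real classes in AudioPolicyManagerBase carry more state and parse the strings into enums.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

// Simplified stand-in for one output/input profile block in audio_policy.conf.
struct IOProfile {
    std::vector<uint32_t> samplingRates;
    std::string channelMasks;  // kept as raw config strings in this sketch
    std::string formats;
    std::string devices;
};

// Simplified stand-in for one module node such as "primary" or "a2dp".
struct HwModule {
    explicit HwModule(std::string name) : mName(std::move(name)) {
        assert(!mName.empty());
    }
    std::string mName;
    int mHandle = 0;  // 0 means "not loaded yet"; set after loadHwModule()
    std::vector<IOProfile> mOutputProfiles;
    std::vector<IOProfile> mInputProfiles;
};

// Hypothetical helper: builds the module list the config excerpt would yield.
std::vector<HwModule> buildExampleModules() {
    HwModule primary("primary");
    primary.mOutputProfiles.push_back(
        {{44100}, "AUDIO_CHANNEL_OUT_STEREO", "AUDIO_FORMAT_PCM_16_BIT",
         "AUDIO_DEVICE_OUT_EARPIECE|AUDIO_DEVICE_OUT_SPEAKER"});
    primary.mInputProfiles.push_back(
        {{8000, 16000, 44100, 48000},
         "AUDIO_CHANNEL_IN_MONO|AUDIO_CHANNEL_IN_STEREO",
         "AUDIO_FORMAT_PCM_16_BIT", "AUDIO_DEVICE_IN_BUILTIN_MIC"});

    HwModule a2dp("a2dp");
    a2dp.mOutputProfiles.push_back(
        {{44100}, "AUDIO_CHANNEL_OUT_STEREO", "AUDIO_FORMAT_PCM_16_BIT",
         "AUDIO_DEVICE_OUT_ALL_A2DP"});

    std::vector<HwModule> modules;  // plays the role of mHwModules
    modules.push_back(std::move(primary));
    modules.push_back(std::move(a2dp));
    return modules;
}
```

Note that the a2dp module in the excerpt has no input profile, which is exactly the gap an A2DP sink implementation has to fill.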

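After loading:

1. It calls

mHwModules[i]->mHandle = mpClientInterface->loadHwModule(mHwModules[i]->mName);

to obtain the handle of the concrete hardware module, so that calls coming from system applications can be dispatched to the right implementation.

This actually calls loadHwModule() in AudioFlinger, which uses the profile name passed in to look for the matching .so under /system/lib/hw/: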

    shell@Hi3798MV100:/system/lib/hw $ ls
    alsa.default.so
    audio.a2dp.default.so
    audio.primary.bigfish.so
    audio.primary.default.so
    audio_policy.default.so
    bluetooth.default.so
    bluetoothmp.default.so
    camera.bigfish.so
    gps.bigfish.so
    gralloc.bigfish.so

According to the code, a2dp uses audio.a2dp.default.so (its build is defined in ./bluetooth/bluedroid/audio_a2dp_hw/Android.mk), while primary uses audio.primary.bigfish.so.
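The lookup can be pictured as building a list of candidate file names and taking the first one that exists. The sketch below only illustrates the naming pattern seen in the listing above; candidateLibraries() is a hypothetical helper, and the `variants` argument stands in for the board or platform names that the real loader obtains from system properties.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Returns candidate library paths for an audio module, most specific first.
// The last entry is always the generic ".default.so" fallback.
std::vector<std::string> candidateLibraries(
        const std::string& moduleName,
        const std::vector<std::string>& variants,
        const std::string& dir = "/system/lib/hw") {
    assert(!moduleName.empty());
    std::vector<std::string> paths;
    for (const std::string& variant : variants) {
        paths.push_back(dir + "/audio." + moduleName + "." + variant + ".so");
    }
    paths.push_back(dir + "/audio." + moduleName + ".default.so");
    return paths;
}
```

With the variant "bigfish", the primary module resolves to audio.primary.bigfish.so first, whereas for a2dp no bigfish build exists in the listing, so the default library is the one that would be picked.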

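There are three audio hardware implementations in total:

    static const char * const audio_interfaces[] = {
        AUDIO_HARDWARE_MODULE_ID_PRIMARY,
        AUDIO_HARDWARE_MODULE_ID_A2DP,
        AUDIO_HARDWARE_MODULE_ID_USB,
    };

They all implement the same interface, so the upper layers do not need to care which one was loaded.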

2. Next, the channels are checked to see whether they really exist. After the nonexistent ones are filtered out, the device has to be told which setting each strategy should use.

There are six default strategies for different scenarios:

    enum routing_strategy {
        STRATEGY_MEDIA,
        STRATEGY_PHONE,
        STRATEGY_SONIFICATION,
        STRATEGY_SONIFICATION_RESPECTFUL,
        STRATEGY_DTMF,
        STRATEGY_ENFORCED_AUDIBLE,
        NUM_STRATEGIES
    };

In updateDevicesAndOutputs(), getDeviceForStrategy() makes the actual choice for each strategy. For example, for music playback (STRATEGY_MEDIA):

    case STRATEGY_MEDIA: {
        uint32_t device2 = AUDIO_DEVICE_NONE;
        switch (mForceUse[AudioSystem::FOR_MEDIA]) {
        case AudioSystem::FORCE_USB_HEADSET:
            ALOGV("FORCE_headset: AudioPolicyManagerBase.cpp");
            if (device2 == 0) {
                device2 = mAvailableOutputDevices & AudioSystem::DEVICE_OUT_WIRED_HEADSET;
            }
            if (device2) break;
            goto DEFAULT_PRORITY;
        case AudioSystem::FORCE_BT_A2DP:
            ALOGV("FORCE_bluetooth: AudioPolicyManagerBase.cpp");
            if (device2 == 0) {
                device2 = mAvailableOutputDevices & AudioSystem::DEVICE_OUT_ALL_A2DP;
            }
            if (device2) break;
            goto DEFAULT_PRORITY;
        // ... (omitted)
        }
        device |= device2;
        if (device) break;
        device = mDefaultOutputDevice;
        if (device == AUDIO_DEVICE_NONE) {
            ALOGE("getDeviceForStrategy() no device found for STRATEGY_MEDIA");
        }
    } break;

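mAvailableOutputDevices has already been initialized by this point. It is a bitmask (a uint value) that combines all currently available output device types. For example, when a Bluetooth speaker is already connected, the code evaluates device2 = mAvailableOutputDevices & AudioSystem::DEVICE_OUT_ALL_A2DP; so only output channels of the Bluetooth A2DP class can be used.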
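The masking step is easier to see in isolation. The following is a simplified sketch, not the real getDeviceForStrategy(): selectMediaDevice() is a hypothetical function, the DEFAULT_PRORITY fallback chain is reduced to a single default device, and the bit values are illustrative (the authoritative ones live in audio.h).

```cpp
#include <cassert>
#include <cstdint>

using audio_devices_t = uint32_t;

// Illustrative bit values for this sketch; check audio.h for the real ones.
constexpr audio_devices_t DEVICE_NONE = 0;
constexpr audio_devices_t DEVICE_OUT_SPEAKER = 0x2;
constexpr audio_devices_t DEVICE_OUT_WIRED_HEADSET = 0x4;
constexpr audio_devices_t DEVICE_OUT_BLUETOOTH_A2DP = 0x80;
constexpr audio_devices_t DEVICE_OUT_BLUETOOTH_A2DP_HEADPHONES = 0x100;
constexpr audio_devices_t DEVICE_OUT_BLUETOOTH_A2DP_SPEAKER = 0x200;
constexpr audio_devices_t DEVICE_OUT_ALL_A2DP =
    DEVICE_OUT_BLUETOOTH_A2DP | DEVICE_OUT_BLUETOOTH_A2DP_HEADPHONES |
    DEVICE_OUT_BLUETOOTH_A2DP_SPEAKER;

// If A2DP is forced, keep only the A2DP bits of the available mask.
// If nothing is left (or A2DP is not forced), fall back to the default device.
audio_devices_t selectMediaDevice(audio_devices_t available,
                                  bool forceA2dp,
                                  audio_devices_t defaultDevice) {
    assert(defaultDevice != DEVICE_NONE);
    audio_devices_t device = DEVICE_NONE;
    if (forceA2dp) {
        device = available & DEVICE_OUT_ALL_A2DP;
    }
    if (device == DEVICE_NONE) {
        device = defaultDevice;
    }
    return device;
}
```

Because every device type owns one bit, a single AND is enough to answer "is any device of this class currently available, and which one".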

OK, that is the check performed at initialization.

So how does the device update when a headset is plugged in or unplugged? In Android, AudioService has an AudioServiceBroadcastReceiver that listens for all of this.

    private class AudioServiceBroadcastReceiver extends BroadcastReceiver {
        @Override
        public void onReceive(Context context, Intent intent) {
            String action = intent.getAction();
            if (action.equals(Intent.ACTION_DOCK_EVENT)) {
                int dockState = intent.getIntExtra(Intent.EXTRA_DOCK_STATE,
                        Intent.EXTRA_DOCK_STATE_UNDOCKED);
                // ... (abridged)
            } else if (action.equals(BluetoothHeadset.ACTION_CONNECTION_STATE_CHANGED)) {
                int state = intent.getIntExtra(BluetoothProfile.EXTRA_STATE,
                        BluetoothProfile.STATE_DISCONNECTED);
                // ... (abridged)
            } else if (action.equals(Intent.ACTION_USB_AUDIO_ACCESSORY_PLUG) ||
                    action.equals(Intent.ACTION_USB_AUDIO_DEVICE_PLUG)) {
                // ... (abridged)
            } else if (action.equals(BluetoothHeadset.ACTION_AUDIO_STATE_CHANGED)) {
                // ... (abridged)
            }
        }
    }

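When the state changes, the following is called:

    AudioSystem.setDeviceConnectionState(device,
            AudioSystem.DEVICE_STATE_UNAVAILABLE,  // state
            mConnectedDevices.get(device));

In the end this reaches setDeviceConnectionState() in AudioPolicyManagerBase.cpp:

    setDeviceConnectionState(audio_devices_t device,  // 32-bit number representing the device type
            AudioSystem::device_connection_state state,
            const char *device_address)

Inside this function, mAvailableOutputDevices or mAvailableInputDevices (depending on whether the device is an output or an input) is updated to the new combination.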
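Conceptually the update is just setting or clearing one bit in the availability mask. Below is a minimal sketch under that assumption; DeviceRegistry and ConnectionState are hypothetical names, and rejecting redundant requests is a choice made for this sketch rather than a statement about the real function's return codes.

```cpp
#include <cassert>
#include <cstdint>

enum class ConnectionState { Unavailable, Available };

// Tracks which device types are currently connected, one bit per type.
class DeviceRegistry {
public:
    // Returns false when the request does not change anything
    // (connecting a connected device or removing an absent one).
    bool setDeviceConnectionState(uint32_t device, ConnectionState state) {
        assert(device != 0);
        const bool connected = (mAvailable & device) != 0;
        if (state == ConnectionState::Available) {
            if (connected) return false;
            mAvailable |= device;   // add the device bit
        } else {
            if (!connected) return false;
            mAvailable &= ~device;  // clear the device bit
        }
        return true;
    }

    uint32_t available() const { return mAvailable; }

private:
    uint32_t mAvailable = 0;
};
```

After each successful update the routing decision has to be re-evaluated, since the result of the masking shown earlier may now be different.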

With the groundwork done, let's walk through a real example.

First, when the system initializes it creates the AudioPolicyService object:

    AudioPolicyService::AudioPolicyService()
        : BnAudioPolicyService(), mpAudioPolicyDev(NULL), mpAudioPolicy(NULL)
    {
        char value[PROPERTY_VALUE_MAX];
        const struct hw_module_t *module;
        int forced_val;
        int rc;

        Mutex::Autolock _l(mLock);

        mTonePlaybackThread = new AudioCommandThread(String8("ApmTone"), this);
        mAudioCommandThread = new AudioCommandThread(String8("ApmAudio"), this);
        mOutputCommandThread = new AudioCommandThread(String8("ApmOutput"), this);

        rc = hw_get_module(AUDIO_POLICY_HARDWARE_MODULE_ID, &module);
        if (rc)
            return;

        rc = audio_policy_dev_open(module, &mpAudioPolicyDev);
        ALOGE_IF(rc, "couldn't open audio policy device (%s)", strerror(-rc));
        if (rc)
            return;

        rc = mpAudioPolicyDev->create_audio_policy(mpAudioPolicyDev, &aps_ops, this,
                                                   &mpAudioPolicy);
        ALOGE_IF(rc, "couldn't create audio policy (%s)", strerror(-rc));
        if (rc)
            return;

        rc = mpAudioPolicy->init_check(mpAudioPolicy);
        ALOGE_IF(rc, "couldn't init_check the audio policy (%s)", strerror(-rc));
        if (rc)
            return;

        ALOGI("Loaded audio policy from %s (%s)", module->name, module->id);

        if (access(AUDIO_EFFECT_VENDOR_CONFIG_FILE, R_OK) == 0) {
            loadPreProcessorConfig(AUDIO_EFFECT_VENDOR_CONFIG_FILE);
        } else if (access(AUDIO_EFFECT_DEFAULT_CONFIG_FILE, R_OK) == 0) {
            loadPreProcessorConfig(AUDIO_EFFECT_DEFAULT_CONFIG_FILE);
        }
    }

Ignore the rest for now and look at create_audio_policy. It calls the initialization function of the HAL layer and passes in several parameters; among them, aps_ops is a struct of function pointers:

    namespace {
        struct audio_policy_service_ops aps_ops = {
            open_output           : aps_open_output,
            open_duplicate_output : aps_open_dup_output,
            close_output          : aps_close_output,
            suspend_output        : aps_suspend_output,
            restore_output        : aps_restore_output,
            open_input            : aps_open_input,
            close_input           : aps_close_input,
            set_stream_volume     : aps_set_stream_volume,
            set_stream_output     : aps_set_stream_output,
            set_parameters        : aps_set_parameters,
            get_parameters        : aps_get_parameters,
            start_tone            : aps_start_tone,
            stop_tone             : aps_stop_tone,
            set_voice_volume      : aps_set_voice_volume,
            move_effects          : aps_move_effects,
            load_hw_module        : aps_load_hw_module,
            open_output_on_module : aps_open_output_on_module,
            open_input_on_module  : aps_open_input_on_module,
        };
    };

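This parameter is important: every function in it is ultimately implemented by calling AudioFlinger, and whenever AudioPolicyManagerBase needs to touch the hardware it does so by calling back through these functions. This confirms the point made at the start: AudioFlinger is the one that does the real work.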
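The callback-table pattern can be sketched in a few lines. Everything below is a hypothetical miniature: PolicyServiceOps plays the role of audio_policy_service_ops with only two entries, FakeService stands in for the service side that would forward to AudioFlinger, and Policy stands in for the policy manager that only ever sees the table and an opaque pointer.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Callback table handed to the policy when it is created.
struct PolicyServiceOps {
    int (*load_hw_module)(void* service, const char* name);
    int (*open_input)(void* service, int moduleHandle, unsigned device);
};

// Stand-in for the service side that would forward to AudioFlinger.
struct FakeService {
    std::vector<std::string> loadedModules;
    int nextInput = 1;
};

int fake_load_hw_module(void* service, const char* name) {
    auto* s = static_cast<FakeService*>(service);
    s->loadedModules.push_back(name);
    return static_cast<int>(s->loadedModules.size());  // 1-based handle
}

int fake_open_input(void* service, int moduleHandle, unsigned /*device*/) {
    auto* s = static_cast<FakeService*>(service);
    if (moduleHandle <= 0 ||
        moduleHandle > static_cast<int>(s->loadedModules.size())) {
        return 0;  // unknown module: no input opened
    }
    return s->nextInput++;
}

// Policy side: decides *what* to open, delegates *how* through the table.
class Policy {
public:
    Policy(const PolicyServiceOps* ops, void* service)
        : mOps(ops), mService(service) {
        assert(ops != nullptr && service != nullptr);
    }

    int openInputOn(const char* moduleName, unsigned device) {
        const int handle = mOps->load_hw_module(mService, moduleName);
        return mOps->open_input(mService, handle, device);
    }

private:
    const PolicyServiceOps* mOps;
    void* mService;
};
```

The benefit of this split is that the policy code never links against the component that does the work; swapping the table is enough to redirect every hardware-facing call.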

Now let's look at the flow when we record a piece of audio.

This time we use an AudioRecord object; MediaRecorder would work as well.

In an app, using AudioRecord basically follows the sequence Setup -> StartRecording -> Read -> Close. Let's go through it step by step.

When we create a new AudioRecord object, android_media_AudioRecord_setup() is called.

    sp<AudioRecord> lpRecorder = new AudioRecord();
    lpRecorder->set((audio_source_t) source,
            sampleRateInHertz,
            format,
            channelMask,
            frameCount,
            recorderCallback,
            lpCallbackData,
            0,
            true,
            sessionId);

The set function in AudioRecord.cpp mainly performs the following two operations:

    status = openRecord_l(0);
    if (status) {
        return status;
    }
    if (cbf != NULL) {
        mAudioRecordThread = new AudioRecordThread(*this, threadCanCallJava);
        mAudioRecordThread->run("AudioRecord", ANDROID_PRIORITY_AUDIO);
    }

In openRecord_l:

    // Note 1
    audio_io_handle_t input = AudioSystem::getInput(mInputSource, mSampleRate, mFormat,
            mChannelMask, mSessionId);
    if (input == 0) {
        ALOGE("Could not get audio input for record source %d", mInputSource);
        return BAD_VALUE;
    }
    int originalSessionId = mSessionId;
    // Note 2
    sp<IAudioRecord> record = audioFlinger->openRecord(input,
            mSampleRate, mFormat,
            mChannelMask,
            mFrameCount,
            &trackFlags,
            tid,
            &mSessionId,
            &status);

Note 1. First comes getInput. It goes through the Audio_policy_hal interface of the HAL layer and finally reaches getInput() in AudioPolicyManagerBase.

    audio_io_handle_t AudioPolicyManagerBase::getInput(int inputSource,
            uint32_t samplingRate,
            uint32_t format,
            uint32_t channelMask,
            AudioSystem::audio_in_acoustics acoustics)
    {
        audio_io_handle_t input = 0;
        audio_devices_t device = getDeviceForInputSource(inputSource);
        IOProfile *profile = getInputProfile(device, samplingRate, format, channelMask);
        // ... (omitted)
        input = mpClientInterface->openInput(profile->mModule->mHandle,
                &inputDesc->mDevice,
                &inputDesc->mSamplingRate,
                &inputDesc->mFormat,
                &inputDesc->mChannelMask);
        // ... (omitted)
    }

The part to focus on here is mpClientInterface->openInput(), which finally reaches the openInput function in AudioFlinger:

    audio_io_handle_t AudioFlinger::openInput(audio_module_handle_t module,
            audio_devices_t *pDevices,
            uint32_t *pSamplingRate,
            audio_format_t *pFormat,
            audio_channel_mask_t *pChannelMask)
    {
        // Note 1
        inHwDev = findSuitableHwDev_l(module, *pDevices);
        if (inHwDev == NULL)
            return 0;
        // Note 2
        status = inHwHal->open_input_stream(inHwHal, id, *pDevices, &config, &inStream);
        if (status == NO_ERROR && inStream != NULL) {
            AudioStreamIn *input = new AudioStreamIn(inHwDev, inStream);
            // Note 3
            thread = new RecordThread(this,
                    input,
                    reqSamplingRate,
                    reqChannels,
                    id,
                    primaryOutputDevice_l(),
                    *pDevices
    #ifdef TEE_SINK
                    , teeSink
    #endif
                    );
            mRecordThreads.add(id, thread);
            // Note 4
            thread->audioConfigChanged_l(AudioSystem::INPUT_OPENED);
            return id;
        }
        return 0;
    }

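Note 1. Find a suitable interface, for example a2dp.

Note 2. malloc an a2dp_stream_in variable (see audio_a2dp_hw.c).

Note 3. Create a thread to do the recording (see Threads.cpp for the implementation). This thread class has an onFirstRef method that starts the thread running. The thread's work includes fetching data from bluedroid.

Note 4. Send the notification.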

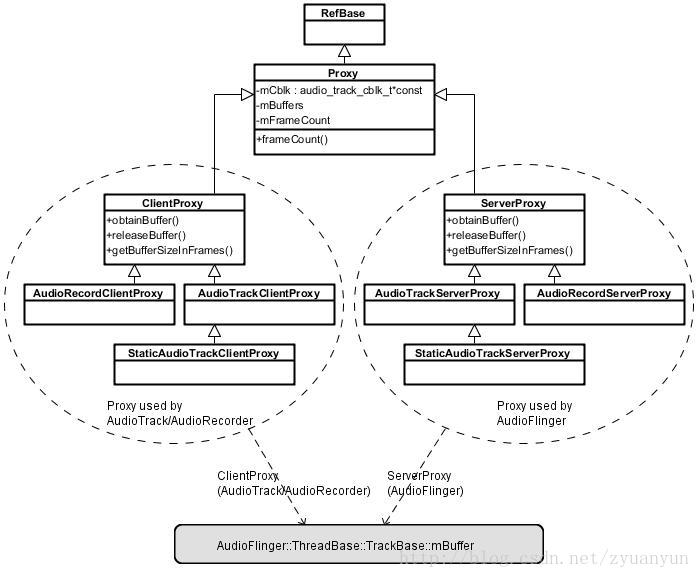

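Note 2. audioFlinger->openRecord. This function mainly does two things:

    recordTrack = thread->createRecordTrack_l(client, sampleRate, format, channelMask,
            frameCount, lSessionId,
            IPCThreadState::self()->getCallingUid(),
            flags, tid, &lStatus);
    recordHandle = new RecordHandle(recordTrack);

The first creates a track; a track can be seen as the smallest unit of a playback or recording task.

The second creates a handle for working with the RecordThread. Subsequent calls such as start() and stop() go through it.

That basically completes the initialization of AudioRecord, so now it is time for startRecording(). Note that setup may take a fairly long time, so if two threads are involved you must check that the state is already INITIALIZED; otherwise the app will crash. As described above, the call first goes through RecordHandle, then to RecordTrack's start() method, and then to RecordThread::start().

This method calls status_t status = AudioSystem::startInput(mId);, that is, AudioPolicyManagerBase::startInput(), which sets some parameters through a2dp_hw.

Next comes the read step, which fetches the data. Remember this thread in the set function of AudioRecord.cpp?

    mAudioRecordThread = new AudioRecordThread(*this, threadCanCallJava);
    mAudioRecordThread->run("AudioRecord", ANDROID_PRIORITY_AUDIO);

This thread reads the data that RecordThread has captured. When we called start, it was already signaled to switch to the reading state.

Another article of ours covered the rough structure of PlaybackThread. Similarly, threadLoop() here contains a loop that keeps reading the incoming data:

    status_t status = mActiveTrack->getNextBuffer(&buffer);
    if (status == NO_ERROR) {
        mBytesRead = mInput->stream->read(mInput->stream, readInto, mBufferSize);
        // ... (omitted)
    }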

There is one issue here. Because this is a separate thread, if the reader of the active RecordTrack does not read fast enough, a "RecordThread: buffer overflow" error occurs; the thread then sleeps for a while to let the client read the data.

    status_t AudioFlinger::RecordThread::RecordTrack::getNextBuffer(
            AudioBufferProvider::Buffer* buffer, int64_t pts)
    {
        ServerProxy::Buffer buf;
        buf.mFrameCount = buffer->frameCount;
        status_t status = mServerProxy->obtainBuffer(&buf);
        buffer->frameCount = buf.mFrameCount;
        buffer->raw = buf.mRaw;
        if (buf.mFrameCount == 0) {
            (void) android_atomic_or(CBLK_OVERRUN, &mCblk->mFlags);
        }
        return status;
    }

The definition of obtainBuffer is in AudioTrackShared.cpp.
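The overrun condition in getNextBuffer() boils down to "the producer asked for space and got zero frames". Here is a single-threaded sketch of that idea only; FrameFifo is a hypothetical class that counts frames instead of storing audio data, and it leaves out the shared-memory control block and atomics that the real proxy uses.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Fixed-capacity frame counter shared by a producer (the record thread)
// and a consumer (the client reading the data).
class FrameFifo {
public:
    explicit FrameFifo(size_t capacity) : mCapacity(capacity) {
        assert(capacity > 0);
    }

    // Producer side: returns how many frames were accepted.
    // Raises the overrun flag when frames were requested but none fit.
    size_t write(size_t wanted) {
        const size_t freeFrames = mCapacity - mFilled;
        const size_t granted = std::min(wanted, freeFrames);
        if (granted == 0 && wanted > 0) {
            mOverrun = true;  // the reader has fallen behind
        }
        mFilled += granted;
        return granted;
    }

    // Consumer side: returns how many frames were actually read.
    size_t read(size_t wanted) {
        const size_t taken = std::min(wanted, mFilled);
        mFilled -= taken;
        return taken;
    }

    // Reports the overrun flag once and clears it.
    bool takeOverrunFlag() {
        const bool flag = mOverrun;
        mOverrun = false;
        return flag;
    }

private:
    size_t mCapacity;
    size_t mFilled = 0;
    bool mOverrun = false;
};
```

In this model a partially satisfied write is not an overrun; only a completely full buffer is, which matches the mFrameCount == 0 test in the excerpt above.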