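Contents

- I. Overall architecture diagram

- II. AudioTrack analysis

- 2.1 The two data transfer modes of the AudioTrack API

- 2.2 Audio stream types in the AudioTrack API

- 2.3 Analysis of getMinBufferSize

- 2.4 Creating the AudioTrack object

- 2.5 AudioTrack at the JNI layer

- 2.6 AudioTrack play and write

- 2.7 new AudioTrack and the call to set

- III. Overall summary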

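My company recently ran a training session on audio and video, but I came away from it fairly confused, so I am writing up my own summary as notes.

References:

https://www.cnblogs.com/innost/archive/2011/01/09/1931457.html and

https://blog.csdn.net/zyuanyun/article/details/60890534?spm=1001.2014.3001.5501#t4

The code I quote comes from the platform source tree for the RK3288.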

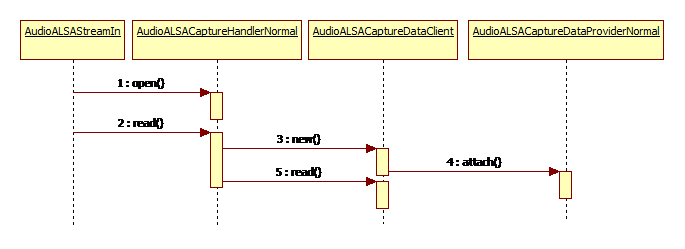

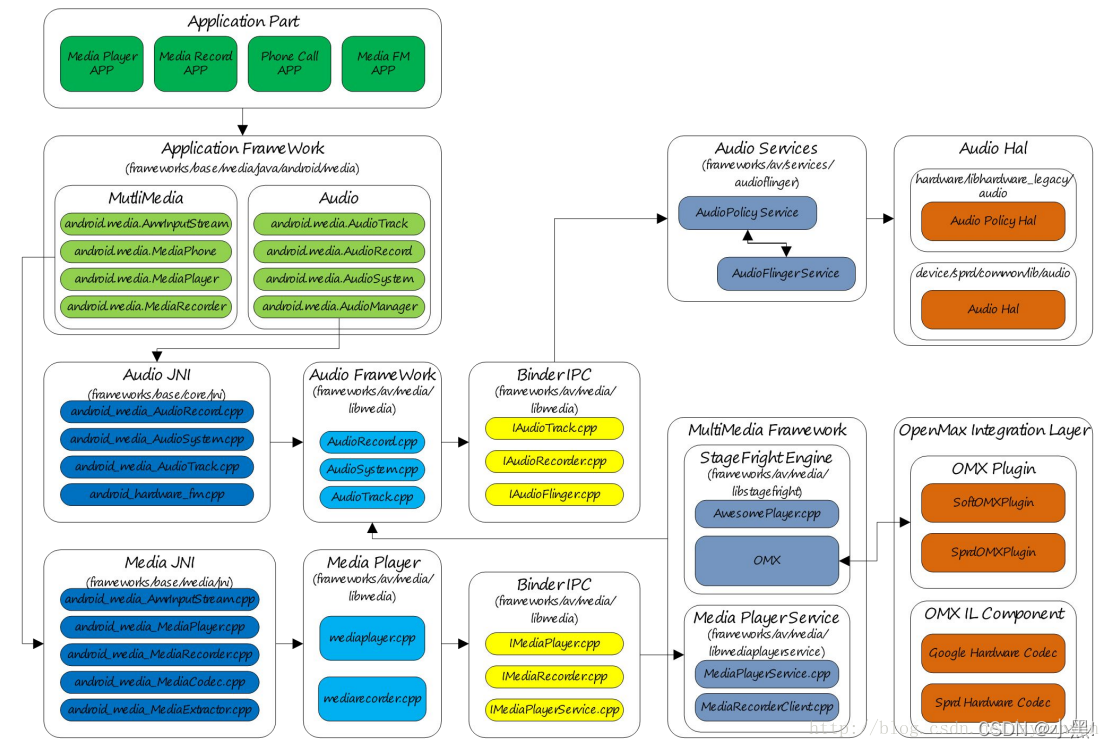

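I. Overall architecture diagram

This block diagram lays out the overall audio logic in Android in considerable detail, from the application layer down to the hardware.

1) Audio Application Framework: the Java-level audio framework

- AudioTrack: handles output of playback data; a class in the Android application framework API

- AudioRecord: handles capture of recording data; a class in the Android application framework API

- AudioSystem: handles general management of audio matters; a class in the Android application framework API

2) Audio Native Framework: the native audio framework (the three C++ counterparts)

- AudioTrack: handles output of playback data; a class in the Android native framework API

- AudioRecord: handles capture of recording data; a class in the Android native framework API

- AudioSystem: handles general management of audio matters; a class in the Android native framework API

3) Audio Services

- AudioPolicyService: the policy maker; it decides device-switching policy, volume adjustment policy, and so on

- AudioFlinger: the policy executor; it manages the input and output stream devices and processes and transfers the audio stream data

4) Audio HAL: the audio hardware abstraction layer, responsible for talking to the audio hardware; AudioFlinger calls it directly

Code location:

frameworks/base/media/java/android/media/AudioTrack.java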

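II. AudioTrack analysis

From the diagram you can see that an app can play sound through two interfaces, MediaPlayer and AudioTrack, both of which expose Java APIs to application developers. The difference is that MediaPlayer can play sources in many formats, such as mp3, flac, wma, ogg and wav, whereas AudioTrack can only play an already decoded PCM stream. MediaPlayer therefore covers more use cases and is usually more convenient; AudioTrack is only needed in scenarios with very strict latency requirements.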

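2.1 The two data transfer modes of the AudioTrack API

In everyday use we play music, make phone calls, and hear the system's built-in ringtones.

The system needs to pick the most suitable way to play each of these in order to be efficient, which is why there are two modes.

On the hardware side, audio data is moved by DMA rather than copied by the CPU. For efficiency there is a buffer in between: when I play music, the audio data is written into this buffer through AudioTrack, and the playback side (AudioFlinger, acting for that AudioTrack) then reads the data out of it, which is what produces sound.

- MODE_STATIC: the whole audio clip is written into the buffer in one go. Suitable for sounds such as ringtones that take little memory and need low latency.

- MODE_STREAM: the application writes data into the buffer piece by piece through write(), and the playback side reads it from the buffer. This suits essentially every audio scenario, although playback can occasionally stutter or block.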
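As a rough illustration of the difference between the two modes, here is a plain-Java sketch that does not touch the Android API. `PcmSink`, `TransferModes`, `playStream` and `loadStatic` are names made up for this example; the real class is `android.media.AudioTrack`, and its behaviour is only approximated here.

```java
/** Hypothetical stand-in for the playback buffer; not an Android class. */
interface PcmSink {
    /** Takes up to len bytes starting at data[off] and returns how many were accepted. */
    int write(byte[] data, int off, int len);
}

final class TransferModes {
    /** MODE_STREAM style: hand the data over chunk by chunk; returns the number of write calls. */
    static int playStream(PcmSink sink, byte[] pcm, int chunkSize) {
        int calls = 0;
        int off = 0;
        while (off < pcm.length) {
            int len = Math.min(chunkSize, pcm.length - off);
            int accepted = sink.write(pcm, off, len);
            calls++;
            if (accepted <= 0) {
                break; // the sink took nothing; a real player would report an error
            }
            off += accepted; // a short write just means the next call resumes from here
        }
        return calls;
    }

    /** MODE_STATIC style: the whole clip is copied into the buffer once, before playback. */
    static byte[] loadStatic(byte[] pcm) {
        byte[] staticBuffer = new byte[pcm.length];
        System.arraycopy(pcm, 0, staticBuffer, 0, pcm.length);
        return staticBuffer;
    }

    public static void main(String[] args) {
        byte[] clip = new byte[10_000];
        PcmSink acceptAll = (data, off, len) -> len; // pretend the buffer always has room
        System.out.println("stream write calls: " + playStream(acceptAll, clip, 4096));
        System.out.println("static buffer bytes: " + loadStatic(clip).length);
    }
}
```

The streaming loop is where stutter can come from: if the writer falls behind, the buffer runs dry. The static path pays the full copy up front and has nothing left to do while the sound plays.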

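2.2 Audio stream types in the AudioTrack API

The source file carries the following comment.

    /**
     * Returns the volume stream type of this AudioTrack.
     * Compare the result against {@link AudioManager#STREAM_VOICE_CALL},
     * {@link AudioManager#STREAM_SYSTEM}, {@link AudioManager#STREAM_RING},
     * {@link AudioManager#STREAM_MUSIC}, {@link AudioManager#STREAM_ALARM},
     * {@link AudioManager#STREAM_NOTIFICATION}, {@link AudioManager#STREAM_DTMF} or
     * {@link AudioManager#STREAM_ACCESSIBILITY}.
     */
    public int getStreamType() {
        return mStreamType;
    }

These stream types are all predefined:

- STREAM_VOICE_CALL: phone call audio

- STREAM_SYSTEM: system sounds

- STREAM_RING: ringtones, such as the incoming-call ringtone

- STREAM_MUSIC: music

- STREAM_ALARM: alarm sounds

- STREAM_NOTIFICATION: notification sounds

- STREAM_DTMF: DTMF tones (dial pad key tones)

Android defines this many stream types mainly to make audio management policy easier.

Muting the media volume does not affect the call volume; the volume with headphones plugged in is kept separately from the volume without them, and other unrelated volumes are untouched as well. Another example: while wired headphones are plugged in, music (STREAM_MUSIC) is output only to the headphones, whereas the ringtone (STREAM_RING) is output to both the headphones and the speaker. In short, separate stream types make all of this manageable.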

2.3 Analysis of getMinBufferSize

This function determines the minimum buffer size required, so we analyze it first.

    /**
     * Returns the estimated minimum buffer size required for an AudioTrack
     * object to be created in the {@link #MODE_STREAM} mode.
     * The size is an estimate because it does not consider either the route or the sink,
     * since neither is known yet.  Note that this size doesn't
     * guarantee a smooth playback under load, and higher values should be chosen according to
     * the expected frequency at which the buffer will be refilled with additional data to play.
     * For example, if you intend to dynamically set the source sample rate of an AudioTrack
     * to a higher value than the initial source sample rate, be sure to configure the buffer size
     * based on the highest planned sample rate.
     * @param sampleRateInHz the source sample rate expressed in Hz.
     *   {@link AudioFormat#SAMPLE_RATE_UNSPECIFIED} is not permitted.
     * @param channelConfig describes the configuration of the audio channels.
     *   See {@link AudioFormat#CHANNEL_OUT_MONO} and
     *   {@link AudioFormat#CHANNEL_OUT_STEREO}
     * @param audioFormat the format in which the audio data is represented.
     *   See {@link AudioFormat#ENCODING_PCM_16BIT} and
     *   {@link AudioFormat#ENCODING_PCM_8BIT},
     *   and {@link AudioFormat#ENCODING_PCM_FLOAT}.
     * @return {@link #ERROR_BAD_VALUE} if an invalid parameter was passed,
     *   or {@link #ERROR} if unable to query for output properties,
     *   or the minimum buffer size expressed in bytes.
     */
    /* The source already documents itself; being able to read English really matters. */
    static public int getMinBufferSize(int sampleRateInHz, int channelConfig, int audioFormat) {
        int channelCount = 0;
        /* Channel selection: mono or stereo */
        switch(channelConfig) {
        case AudioFormat.CHANNEL_OUT_MONO:
        case AudioFormat.CHANNEL_CONFIGURATION_MONO:
            channelCount = 1;
            break;
        case AudioFormat.CHANNEL_OUT_STEREO:
        case AudioFormat.CHANNEL_CONFIGURATION_STEREO:
            channelCount = 2;
            break;
        default:
            if (!isMultichannelConfigSupported(channelConfig)) {
                loge("getMinBufferSize(): Invalid channel configuration.");
                return ERROR_BAD_VALUE;
            } else {
                channelCount = AudioFormat.channelCountFromOutChannelMask(channelConfig);
            }
        }

        if (!AudioFormat.isPublicEncoding(audioFormat)) {
            loge("getMinBufferSize(): Invalid audio format.");
            return ERROR_BAD_VALUE;
        }

        // sample rate, note these values are subject to change
        // Note: AudioFormat.SAMPLE_RATE_UNSPECIFIED is not allowed
        /* Sample rate. Human hearing spans roughly 20 Hz to 20 kHz.
         * Supported sample rates: 4 kHz to 192 kHz; the limits appear to be defined constants. */
        if ( (sampleRateInHz < AudioFormat.SAMPLE_RATE_HZ_MIN) ||
                (sampleRateInHz > AudioFormat.SAMPLE_RATE_HZ_MAX) ) {
            loge("getMinBufferSize(): " + sampleRateInHz + " Hz is not a supported sample rate.");
            return ERROR_BAD_VALUE;
        }

        /* Implemented by android_media_AudioTrack_get_min_buff_size in
         * frameworks/base/core/jni/android_media_AudioTrack.cpp */
        int size = native_get_min_buff_size(sampleRateInHz, channelCount, audioFormat);
        if (size <= 0) {
            loge("getMinBufferSize(): error querying hardware");
            return ERROR;
        }
        else {
            return size;
        }
    }

Some versions of the source seem to check the PCM encoding explicitly at this point. In this version the encodings are only described in the doc comment, which lists 8-bit, 16-bit and float PCM.
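The two parameter checks in getMinBufferSize can be tried out in isolation. The sketch below is plain Java, not the framework code. The constants are copied in on the assumption that they match `android.media.AudioFormat` (`CHANNEL_OUT_MONO` = 0x4, `CHANNEL_OUT_STEREO` = 0xC), and the 4 kHz to 192 kHz limits are the ones mentioned in the comment above. The class and method names are made up for illustration.

```java
/** Plain-Java sketch of the parameter checks; not the framework code itself. */
final class MinBufferChecks {
    // Assumed to match the values in android.media.AudioFormat.
    static final int CHANNEL_OUT_MONO = 0x4;
    static final int CHANNEL_OUT_STEREO = 0xC;
    // Limits as described in the text above; they may differ between Android versions.
    static final int SAMPLE_RATE_HZ_MIN = 4000;
    static final int SAMPLE_RATE_HZ_MAX = 192000;

    /** Each set bit in an output channel position mask stands for one channel. */
    static int channelCount(int channelMask) {
        return Integer.bitCount(channelMask);
    }

    /** True when the rate lies inside the supported range, limits included. */
    static boolean isSampleRateSupported(int sampleRateInHz) {
        return sampleRateInHz >= SAMPLE_RATE_HZ_MIN && sampleRateInHz <= SAMPLE_RATE_HZ_MAX;
    }

    public static void main(String[] args) {
        System.out.println("mono channels:   " + channelCount(CHANNEL_OUT_MONO));
        System.out.println("stereo channels: " + channelCount(CHANNEL_OUT_STEREO));
        System.out.println("44100 Hz ok?     " + isSampleRateSupported(44100));
        System.out.println("1000 Hz ok?      " + isSampleRateSupported(1000));
    }
}
```

Counting set bits is what makes the `default:` branch work for multichannel masks without listing every layout by hand.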

The corresponding code in android_media_AudioTrack.cpp:

    static jint android_media_AudioTrack_get_min_buff_size(JNIEnv *env, jobject thiz,
            jint sampleRateInHertz, jint channelCount, jint audioFormat) {

        size_t frameCount;
        /* This call determines how many frames are needed at the very least
         * for sound to play properly, i.e. the minimum frame count */
        const status_t status = AudioTrack::getMinFrameCount(&frameCount, AUDIO_STREAM_DEFAULT,
                sampleRateInHertz);
        if (status != NO_ERROR) {
            ALOGE("AudioTrack::getMinFrameCount() for sample rate %d failed with status %d",
                    sampleRateInHertz, status);
            return -1;
        }
        const audio_format_t format = audioFormatToNative(audioFormat);
        if (audio_has_proportional_frames(format)) {
            const size_t bytesPerSample = audio_bytes_per_sample(format);
            /* Minimum buffer size for PCM data:
             * minimum buffer size = minimum frame count * channel count * sample depth
             * (sample depth expressed in bytes) */
            return frameCount * channelCount * bytesPerSample;
        } else {
            return frameCount;
        }
    }

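What is a frame:

A frame is the set of samples, one per channel, that belong to a single sampling instant, so its size is the number of bytes per sample multiplied by the channel count. Why introduce frames at all? With multiple channels, the byte size of one sample does not tell the whole story, because playback has to emit the data of every channel together. It is therefore more convenient to say how many frames there are per second: that statement is complete without mentioning the channel count separately.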
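To make the frame arithmetic concrete, here is a small plain-Java sketch. The class and method names are made up for this example, and the frame count of 1024 is an arbitrary illustrative value, not something a device reported. `minBufferBytes` follows the same formula as the native function quoted earlier (frames × channels × bytes per sample).

```java
/** Worked arithmetic for frames; names are illustrative only. */
final class FrameMath {
    /** Bytes in one frame: one sample for every channel. */
    static int bytesPerFrame(int channelCount, int bytesPerSample) {
        return channelCount * bytesPerSample;
    }

    /** Bytes of PCM consumed per second of playback. */
    static long bytesPerSecond(int sampleRateHz, int channelCount, int bytesPerSample) {
        return (long) sampleRateHz * bytesPerFrame(channelCount, bytesPerSample);
    }

    /** Same formula as the native code: frame count * channel count * bytes per sample. */
    static long minBufferBytes(long minFrameCount, int channelCount, int bytesPerSample) {
        return minFrameCount * bytesPerFrame(channelCount, bytesPerSample);
    }

    public static void main(String[] args) {
        // 44.1 kHz, stereo, 16-bit PCM (2 bytes per sample)
        System.out.println(bytesPerFrame(2, 2));          // 2 * 2 = 4
        System.out.println(bytesPerSecond(44100, 2, 2));  // 44100 * 4 = 176400
        System.out.println(minBufferBytes(1024, 2, 2));   // 1024 * 4 = 4096
    }
}
```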

2.4 Creating the AudioTrack object

The constructor:

    public AudioTrack(int streamType, int sampleRateInHz, int channelConfig, int audioFormat,
            int bufferSizeInBytes, int mode)
    throws IllegalArgumentException {
        this(streamType, sampleRateInHz, channelConfig, audioFormat,
                bufferSizeInBytes, mode, AudioManager.AUDIO_SESSION_ID_GENERATE);
    }

    .....

    audioBuffSizeCheck(bufferSizeInBytes);
    /* This is where the buffer size from earlier is used */

    .....

    int initResult = native_setup(new WeakReference<AudioTrack>(this), mAttributes,
            sampleRate, mChannelMask, mChannelIndexMask, mAudioFormat,
            mNativeBufferSizeInBytes, mDataLoadMode, session, 0 /*nativeTrackInJavaObj*/,
            offload);

    .......

These calls do most of the work; I honestly could not follow this code in detail.

Back in android_media_AudioTrack.cpp:

    static jint
    android_media_AudioTrack_setup(JNIEnv *env, jobject thiz, jobject weak_this, jobject jaa,
            jintArray jSampleRate, jint channelPositionMask, jint channelIndexMask,
            jint audioFormat, jint buffSizeInBytes, jint memoryMode, jintArray jSession,
            jlong nativeAudioTrack, jboolean offload) {

        ALOGV("sampleRates=%p, channel mask=%x, index mask=%x, audioFormat(Java)=%d, buffSize=%d,"
            " nativeAudioTrack=0x%" PRIX64 ", offload=%d",
            jSampleRate, channelPositionMask, channelIndexMask, audioFormat, buffSizeInBytes,
            nativeAudioTrack, offload);

        sp<AudioTrack> lpTrack = 0;

        if (jSession == NULL) {
            ALOGE("Error creating AudioTrack: invalid session ID pointer");
            return (jint) AUDIO_JAVA_ERROR;
        }

        jint* nSession = (jint *) env->GetPrimitiveArrayCritical(jSession, NULL);
        if (nSession == NULL) {
            ALOGE("Error creating AudioTrack: Error retrieving session id pointer");
            return (jint) AUDIO_JAVA_ERROR;
        }
        audio_session_t sessionId = (audio_session_t) nSession[0];
        env->ReleasePrimitiveArrayCritical(jSession, nSession, 0);
        nSession = NULL;

        AudioTrackJniStorage* lpJniStorage = NULL;

        jclass clazz = env->GetObjectClass(thiz);
        if (clazz == NULL) {
            ALOGE("Can't find %s when setting up callback.", kClassPathName);
            return (jint) AUDIOTRACK_ERROR_SETUP_NATIVEINITFAILED;
        }

        // if we pass in an existing *Native* AudioTrack, we don't need to create/initialize one.
        if (nativeAudioTrack == 0) {
            if (jaa == 0) {
                ALOGE("Error creating AudioTrack: invalid audio attributes");
                return (jint) AUDIO_JAVA_ERROR;
            }

            if (jSampleRate == 0) {
                ALOGE("Error creating AudioTrack: invalid sample rates");
                return (jint) AUDIO_JAVA_ERROR;
            }

            int* sampleRates = env->GetIntArrayElements(jSampleRate, NULL);
            int sampleRateInHertz = sampleRates[0];
            env->ReleaseIntArrayElements(jSampleRate, sampleRates, JNI_ABORT);

            // Invalid channel representations are caught by !audio_is_output_channel() below.
            audio_channel_mask_t nativeChannelMask = nativeChannelMaskFromJavaChannelMasks(
                    channelPositionMask, channelIndexMask);
            if (!audio_is_output_channel(nativeChannelMask)) {
                ALOGE("Error creating AudioTrack: invalid native channel mask %#x.", nativeChannelMask);
                return (jint) AUDIOTRACK_ERROR_SETUP_INVALIDCHANNELMASK;
            }

            uint32_t channelCount = audio_channel_count_from_out_mask(nativeChannelMask);

            // check the format.
            // This function was called from Java, so we compare the format against the Java constants
            audio_format_t format = audioFormatToNative(audioFormat);
            if (format == AUDIO_FORMAT_INVALID) {
                ALOGE("Error creating AudioTrack: unsupported audio format %d.", audioFormat);
                return (jint) AUDIOTRACK_ERROR_SETUP_INVALIDFORMAT;
            }

            // compute the frame count
            size_t frameCount;
            if (audio_has_proportional_frames(format)) {
                const size_t bytesPerSample = audio_bytes_per_sample(format);
                frameCount = buffSizeInBytes / (channelCount * bytesPerSample);
            } else {
                frameCount = buffSizeInBytes;
            }

            // create the native AudioTrack object
            lpTrack = new AudioTrack();

            // read the AudioAttributes values
            auto paa = JNIAudioAttributeHelper::makeUnique();
            jint jStatus = JNIAudioAttributeHelper::nativeFromJava(env, jaa, paa.get());
            if (jStatus != (jint)AUDIO_JAVA_SUCCESS) {
                return jStatus;
            }
            ALOGV("AudioTrack_setup for usage=%d content=%d flags=0x%#x tags=%s",
                    paa->usage, paa->content_type, paa->flags, paa->tags);

            // initialize the callback information:
            // this data will be passed with every AudioTrack callback
            lpJniStorage = new AudioTrackJniStorage();
            lpJniStorage->mCallbackData.audioTrack_class = (jclass)env->NewGlobalRef(clazz);
            // we use a weak reference so the AudioTrack object can be garbage collected.
            lpJniStorage->mCallbackData.audioTrack_ref = env->NewGlobalRef(weak_this);
            lpJniStorage->mCallbackData.isOffload = offload;
            lpJniStorage->mCallbackData.busy = false;

            audio_offload_info_t offloadInfo;
            if (offload == JNI_TRUE) {
                offloadInfo = AUDIO_INFO_INITIALIZER;
                offloadInfo.format = format;
                offloadInfo.sample_rate = sampleRateInHertz;
                offloadInfo.channel_mask = nativeChannelMask;
                offloadInfo.has_video = false;
                offloadInfo.stream_type = AUDIO_STREAM_MUSIC; //required for offload
            }

            // initialize the native AudioTrack object
            status_t status = NO_ERROR;
            switch (memoryMode) {
            case MODE_STREAM:
                status = lpTrack->set(
                        AUDIO_STREAM_DEFAULT,// stream type, but more info conveyed in paa (last argument)
                        sampleRateInHertz,
                        format,// word length, PCM
                        nativeChannelMask,
                        offload ? 0 : frameCount,
                        offload ? AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD : AUDIO_OUTPUT_FLAG_NONE,
                        audioCallback, &(lpJniStorage->mCallbackData),//callback, callback data (user)
                        0,// notificationFrames == 0 since not using EVENT_MORE_DATA to feed the AudioTrack
                        0,// shared mem
                        true,// thread can call Java
                        sessionId,// audio session ID
                        offload ? AudioTrack::TRANSFER_SYNC_NOTIF_CALLBACK : AudioTrack::TRANSFER_SYNC,
                        offload ? &offloadInfo : NULL,
                        -1, -1, // default uid, pid values
                        paa.get());
                break;

            case MODE_STATIC:
                // AudioTrack is using shared memory
                if (!lpJniStorage->allocSharedMem(buffSizeInBytes)) {
                    ALOGE("Error creating AudioTrack in static mode: error creating mem heap base");
                    goto native_init_failure;
                }

                status = lpTrack->set(
                        AUDIO_STREAM_DEFAULT,// stream type, but more info conveyed in paa (last argument)
                        sampleRateInHertz,
                        format,// word length, PCM
                        nativeChannelMask,
                        frameCount,
                        AUDIO_OUTPUT_FLAG_NONE,
                        audioCallback, &(lpJniStorage->mCallbackData),//callback, callback data (user));
                        0,// notificationFrames == 0 since not using EVENT_MORE_DATA to feed the AudioTrack
                        lpJniStorage->mMemBase,// shared mem
                        true,// thread can call Java
                        sessionId,// audio session ID
                        AudioTrack::TRANSFER_SHARED,
                        NULL, // default offloadInfo
                        -1, -1, // default uid, pid values
                        paa.get());
                break;

            default:
                ALOGE("Unknown mode %d", memoryMode);
                goto native_init_failure;
            }

            if (status != NO_ERROR) {
                ALOGE("Error %d initializing AudioTrack", status);
                goto native_init_failure;
            }
        } else { // end if (nativeAudioTrack == 0)
            lpTrack = (AudioTrack*)nativeAudioTrack;
            // TODO: We need to find out which members of the Java AudioTrack might
            // need to be initialized from the Native AudioTrack
            // these are directly returned from getters:
            //  mSampleRate
            //  mAudioFormat
            //  mStreamType
            //  mChannelConfiguration
            //  mChannelCount
            //  mState (?)
            //  mPlayState (?)
            // these may be used internally (Java AudioTrack.audioParamCheck():
            //  mChannelMask
            //  mChannelIndexMask
            //  mDataLoadMode

            // initialize the callback information:
            // this data will be passed with every AudioTrack callback
            lpJniStorage = new AudioTrackJniStorage();
            lpJniStorage->mCallbackData.audioTrack_class = (jclass)env->NewGlobalRef(clazz);
            // we use a weak reference so the AudioTrack object can be garbage collected.
            lpJniStorage->mCallbackData.audioTrack_ref = env->NewGlobalRef(weak_this);
            lpJniStorage->mCallbackData.busy = false;
        }

        nSession = (jint *) env->GetPrimitiveArrayCritical(jSession, NULL);
        if (nSession == NULL) {
            ALOGE("Error creating AudioTrack: Error retrieving session id pointer");
            goto native_init_failure;
        }
        // read the audio session ID back from AudioTrack in case we create a new session
        nSession[0] = lpTrack->getSessionId();
        env->ReleasePrimitiveArrayCritical(jSession, nSession, 0);
        nSession = NULL;

        {
            const jint elements[1] = { (jint) lpTrack->getSampleRate() };
            env->SetIntArrayRegion(jSampleRate, 0, 1, elements);
        }

        {   // scope for the lock
            Mutex::Autolock l(sLock);
            sAudioTrackCallBackCookies.add(&lpJniStorage->mCallbackData);
        }
        // save our newly created C++ AudioTrack in the "nativeTrackInJavaObj" field
        // of the Java object (in mNativeTrackInJavaObj)
        setAudioTrack(env, thiz, lpTrack);

        // save the JNI resources so we can free them later
        //ALOGV("storing lpJniStorage: %x\n", (long)lpJniStorage);
        env->SetLongField(thiz, javaAudioTrackFields.jniData, (jlong)lpJniStorage);

        // since we had audio attributes, the stream type was derived from them during the
        // creation of the native AudioTrack: push the same value to the Java object
        env->SetIntField(thiz, javaAudioTrackFields.fieldStreamType, (jint) lpTrack->streamType());

        return (jint) AUDIO_JAVA_SUCCESS;

        // failures:
    native_init_failure:
        if (nSession != NULL) {
            env->ReleasePrimitiveArrayCritical(jSession, nSession, 0);
        }
        env->DeleteGlobalRef(lpJniStorage->mCallbackData.audioTrack_class);
        env->DeleteGlobalRef(lpJniStorage->mCallbackData.audioTrack_ref);
        delete lpJniStorage;
        env->SetLongField(thiz, javaAudioTrackFields.jniData, 0);

        // lpTrack goes out of scope, so reference count drops to zero
        return (jint) AUDIOTRACK_ERROR_SETUP_NATIVEINITFAILED;
    }

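I am not going to read any further into this source for now; it goes beyond what I can cover here, so I am just recording it and will come back to it later. Interested readers can consult the reference links given at the top.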
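One step of android_media_AudioTrack_setup that is easy to verify by hand is the conversion from buffer size to frame count, `frameCount = buffSizeInBytes / (channelCount * bytesPerSample)`. The plain-Java sketch below mirrors that line for PCM data. The class and method names are made up, and `isWholeFrames` is my own illustration of why a buffer size should be a multiple of the frame size, not a copy of any framework check.

```java
/** Mirrors the PCM branch of the frame-count computation; names are illustrative. */
final class FrameCount {
    /** frameCount = bufferSizeInBytes / (channelCount * bytesPerSample). */
    static int framesInBuffer(int bufferSizeInBytes, int channelCount, int bytesPerSample) {
        int frameSize = channelCount * bytesPerSample;
        return bufferSizeInBytes / frameSize; // integer division drops any partial frame
    }

    /** True when the buffer is non-empty and holds a whole number of frames. */
    static boolean isWholeFrames(int bufferSizeInBytes, int channelCount, int bytesPerSample) {
        int frameSize = channelCount * bytesPerSample;
        return bufferSizeInBytes >= 1 && bufferSizeInBytes % frameSize == 0;
    }

    public static void main(String[] args) {
        // Stereo, 16-bit PCM: 4 bytes per frame.
        System.out.println(framesInBuffer(4096, 2, 2)); // 4096 / 4 = 1024
        System.out.println(framesInBuffer(4098, 2, 2)); // the 2 extra bytes are lost: 1024
        System.out.println(isWholeFrames(4098, 2, 2));  // false
    }
}
```

Passing the value returned by getMinBufferSize, or a multiple of it, keeps the size aligned to whole frames, because that value is itself a frame count multiplied by the frame size.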

2.5 AudioTrack at the JNI layer

In android_media_AudioTrack.cpp, what the JNI layer mainly sets up for AudioTrack is a shared-memory mechanism. The corresponding source:

    class AudioTrackJniStorage {
        public:
            sp<MemoryHeapBase>         mMemHeap;
            sp<MemoryBase>             mMemBase;
            audiotrack_callback_cookie mCallbackData;
            sp<JNIDeviceCallback>      mDeviceCallback;

        AudioTrackJniStorage() {
            mCallbackData.audioTrack_class = 0;
            mCallbackData.audioTrack_ref = 0;
            mCallbackData.isOffload = false;
        }

        ~AudioTrackJniStorage() {
            mMemBase.clear();
            mMemHeap.clear();
        }

        /* mMemHeap is handed to mMemBase here */
        bool allocSharedMem(int sizeInBytes) {
            mMemHeap = new MemoryHeapBase(sizeInBytes, 0, "AudioTrack Heap Base");
            if (mMemHeap->getHeapID() < 0) {
                return false;
            }
            mMemBase = new MemoryBase(mMemHeap, 0, sizeInBytes);
            return true;
        }
    };
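The relationship between the heap and the base object can be pictured as "a block of memory" plus "a window (offset, size) onto that block". The sketch below is only an analogy in plain Java using `java.nio.ByteBuffer`: it stays inside one process, whereas the real MemoryHeapBase and MemoryBase are shared across processes. `SharedWindow` and `window` are names invented for this example.

```java
/** Single-process analogy for MemoryHeapBase (the block) and MemoryBase (a window on it). */
final class SharedWindow {
    /** Returns a view of heap[offset, offset + size) that shares the heap's storage. */
    static java.nio.ByteBuffer window(java.nio.ByteBuffer heap, int offset, int size) {
        java.nio.ByteBuffer dup = heap.duplicate(); // same storage, independent position/limit
        dup.position(offset);
        dup.limit(offset + size);
        return dup.slice();
    }

    public static void main(String[] args) {
        java.nio.ByteBuffer heap = java.nio.ByteBuffer.allocate(16); // plays the heap
        java.nio.ByteBuffer base = window(heap, 0, 16);              // plays the base
        base.put(0, (byte) 42);                                      // writer fills the window
        System.out.println("reader sees: " + heap.get(0));           // same underlying bytes
    }
}
```

Nothing is copied when the window is created, which is the point of the design: in MODE_STATIC the data written through one side is directly visible to the other.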

2.6 AudioTrack play and write

With the earlier logic and the shared memory roughly covered, the last step is for the upper layer to call the play and write interfaces.

First, the code behind play (start):

    static void
    android_media_AudioTrack_start(JNIEnv *env, jobject thiz)
    {
        sp<AudioTrack> lpTrack = getAudioTrack(env, thiz);
        if (lpTrack == NULL) {
            jniThrowException(env, "java/lang/IllegalStateException",
                "Unable to retrieve AudioTrack pointer for start()");
            return;
        }

        lpTrack->start();
    }

Then the code behind write:

    template <typename T>
    static jint writeToTrack(const sp<AudioTrack>& track, jint audioFormat, const T *data,
                             jint offsetInSamples, jint sizeInSamples, bool blocking) {
        // give the data to the native AudioTrack object (the data starts at the offset)
        ssize_t written = 0;
        // regular write() or copy the data to the AudioTrack's shared memory?
        size_t sizeInBytes = sizeInSamples * sizeof(T);
        if (track->sharedBuffer() == 0) {   // no shared buffer: STREAM mode
            written = track->write(data + offsetInSamples, sizeInBytes, blocking);
            // for compatibility with earlier behavior of write(), return 0 in this case
            if (written == (ssize_t) WOULD_BLOCK) {
                written = 0;
            }
        } else {
            // writing to shared memory, check for capacity
            if ((size_t)sizeInBytes > track->sharedBuffer()->size()) {
                sizeInBytes = track->sharedBuffer()->size();
            }
            // copy the data straight into shared memory: STATIC mode
            memcpy(track->sharedBuffer()->pointer(), data + offsetInSamples, sizeInBytes);
            written = sizeInBytes;
        }
        if (written >= 0) {
            return written / sizeof(T);
        }
        // error path: map the native error to the value returned to Java
        return interpretWriteSizeError(written);
    }

    ......

    // the function that produces the error return value
    static inline
    jint interpretWriteSizeError(ssize_t writeSize) {
        if (writeSize == WOULD_BLOCK) {
            return (jint)0;
        } else if (writeSize == NO_INIT) {
            return AUDIO_JAVA_DEAD_OBJECT;
        } else {
            ALOGE("Error %zd during AudioTrack native read", writeSize);
            return nativeToJavaStatus(writeSize);
        }
    }
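The unit handling in writeToTrack is easy to miss: the caller passes sizes in samples, the copy is done in bytes, and the return value is converted back to samples. Below is a plain-Java model of the shared-buffer (STATIC) branch for 16-bit samples only. `WriteToTrackModel` and `writeStatic` are invented names, the little-endian byte order is an assumption made for the sketch, and unlike `memcpy` it copies whole samples only.

```java
/** Model of the STATIC branch of writeToTrack for T = 16-bit samples; illustrative only. */
final class WriteToTrackModel {
    static int writeStatic(byte[] sharedBuffer, short[] data,
                           int offsetInSamples, int sizeInSamples) {
        final int bytesPerSample = 2;            // sizeof(T) for 16-bit samples
        int sizeInBytes = sizeInSamples * bytesPerSample;
        if (sizeInBytes > sharedBuffer.length) {
            sizeInBytes = sharedBuffer.length;   // clamp to capacity, as the native code does
        }
        int samples = sizeInBytes / bytesPerSample;
        for (int i = 0; i < samples; i++) {
            short s = data[offsetInSamples + i];
            sharedBuffer[2 * i] = (byte) s;            // low byte first (assumed little-endian)
            sharedBuffer[2 * i + 1] = (byte) (s >> 8); // then the high byte
        }
        return samples;                          // reported in samples, not bytes
    }

    public static void main(String[] args) {
        short[] pcm = new short[10];
        pcm[2] = 0x0102;
        byte[] shared = new byte[8];             // room for only 4 samples
        int written = writeStatic(shared, pcm, 2, 6);
        System.out.println("samples written: " + written);
    }
}
```

Asking for 6 samples with room for 4 returns 4 rather than failing, so a caller that ignores the return value would silently lose the tail of its data.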

2.7 new AudioTrack and the call to set

First, the call to set at the JNI layer:

    status_t status = NO_ERROR;
    switch (memoryMode) {
    case MODE_STREAM:
        status = lpTrack->set(
                AUDIO_STREAM_DEFAULT,// stream type, but more info conveyed in paa (last argument)
                sampleRateInHertz,
                format,// word length, PCM
                nativeChannelMask,
                offload ? 0 : frameCount,
                offload ? AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD : AUDIO_OUTPUT_FLAG_NONE,
                audioCallback, &(lpJniStorage->mCallbackData),//callback, callback data (user)
                0,// notificationFrames == 0 since not using EVENT_MORE_DATA to feed the AudioTrack
                0,// shared mem
                true,// thread can call Java
                sessionId,// audio session ID
                offload ? AudioTrack::TRANSFER_SYNC_NOTIF_CALLBACK : AudioTrack::TRANSFER_SYNC,
                offload ? &offloadInfo : NULL,
                -1, -1, // default uid, pid values
                paa.get());
        break;

Then the set function in the native AudioTrack; the body is too long to quote here.

    status_t AudioTrack::set(
            audio_stream_type_t streamType,
            uint32_t sampleRate,
            audio_format_t format,
            audio_channel_mask_t channelMask,
            size_t frameCount,
            audio_output_flags_t flags,
            callback_t cbf,
            void* user,
            int32_t notificationFrames,
            const sp<IMemory>& sharedBuffer,
            bool threadCanCallJava,
            audio_session_t sessionId,
            transfer_type transferType,
            const audio_offload_info_t *offloadInfo,
            uid_t uid,
            pid_t pid,
            const audio_attributes_t* pAttributes,
            bool doNotReconnect,
            float maxRequiredSpeed,
            audio_port_handle_t selectedDeviceId)
    {

    ......

    }

This is the function where the track is actually created:

    status_t AudioTrack::createTrack_l()
    {
        status_t status;
        bool callbackAdded = false;

        const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger();
        if (audioFlinger == 0) {
            ALOGE("%s(%d): Could not get audioflinger",
                    __func__, mPortId);
            status = NO_INIT;
            goto exit;
        }

        {
        // mFlags (not mOrigFlags) is modified depending on whether fast request is accepted.
        // After fast request is denied, we will request again if IAudioTrack is re-created.
        // Client can only express a preference for FAST. Server will perform additional tests.
        if (mFlags & AUDIO_OUTPUT_FLAG_FAST) {
            // either of these use cases:
            // use case 1: shared buffer
            bool sharedBuffer = mSharedBuffer != 0;
            bool transferAllowed =
                // use case 2: callback transfer mode
                (mTransfer == TRANSFER_CALLBACK) ||
                // use case 3: obtain/release mode
                (mTransfer == TRANSFER_OBTAIN) ||
                // use case 4: synchronous write
                ((mTransfer == TRANSFER_SYNC || mTransfer == TRANSFER_SYNC_NOTIF_CALLBACK)
                        && mThreadCanCallJava);

            bool fastAllowed = sharedBuffer || transferAllowed;
            if (!fastAllowed) {
                ALOGW("%s(%d): AUDIO_OUTPUT_FLAG_FAST denied by client,"
                      " not shared buffer and transfer = %s",
                      __func__, mPortId,
                      convertTransferToText(mTransfer));
                mFlags = (audio_output_flags_t) (mFlags & ~AUDIO_OUTPUT_FLAG_FAST);
            }
        }

        IAudioFlinger::CreateTrackInput input;
        if (mStreamType != AUDIO_STREAM_DEFAULT) {
            input.attr = AudioSystem::streamTypeToAttributes(mStreamType);
        } else {
            input.attr = mAttributes;
        }
        input.config = AUDIO_CONFIG_INITIALIZER;
        input.config.sample_rate = mSampleRate;
        input.config.channel_mask = mChannelMask;
        input.config.format = mFormat;
        input.config.offload_info = mOffloadInfoCopy;
        input.clientInfo.clientUid = mClientUid;
        input.clientInfo.clientPid = mClientPid;
        input.clientInfo.clientTid = -1;
        if (mFlags & AUDIO_OUTPUT_FLAG_FAST) {
            // It is currently meaningless to request SCHED_FIFO for a Java thread. Even if the
            // application-level code follows all non-blocking design rules, the language runtime
            // doesn't also follow those rules, so the thread will not benefit overall.
            if (mAudioTrackThread != 0 && !mThreadCanCallJava) {
                input.clientInfo.clientTid = mAudioTrackThread->getTid();
            }
        }
        input.sharedBuffer = mSharedBuffer;
        input.notificationsPerBuffer = mNotificationsPerBufferReq;
        input.speed = 1.0;
        if (audio_has_proportional_frames(mFormat) && mSharedBuffer == 0 &&
                (mFlags & AUDIO_OUTPUT_FLAG_FAST) == 0) {
            input.speed = !isPurePcmData_l() || isOffloadedOrDirect_l() ? 1.0f :
                            max(mMaxRequiredSpeed, mPlaybackRate.mSpeed);
        }
        input.flags = mFlags;
        input.frameCount = mReqFrameCount;
        input.notificationFrameCount = mNotificationFramesReq;
        input.selectedDeviceId = mSelectedDeviceId;
        input.sessionId = mSessionId;

        IAudioFlinger::CreateTrackOutput output;

        sp<IAudioTrack> track = audioFlinger->createTrack(input,
                                                          output,
                                                          &status);

        if (status != NO_ERROR || output.outputId == AUDIO_IO_HANDLE_NONE) {
            ALOGE("%s(%d): AudioFlinger could not create track, status: %d output %d",
                    __func__, mPortId, status, output.outputId);
            if (status == NO_ERROR) {
                status = NO_INIT;
            }
            goto exit;
        }
        ALOG_ASSERT(track != 0);

        mFrameCount = output.frameCount;
        mNotificationFramesAct = (uint32_t)output.notificationFrameCount;
        mRoutedDeviceId = output.selectedDeviceId;
        mSessionId = output.sessionId;

        mSampleRate = output.sampleRate;
        if (mOriginalSampleRate == 0) {
            mOriginalSampleRate = mSampleRate;
        }

        mAfFrameCount = output.afFrameCount;
        mAfSampleRate = output.afSampleRate;
        mAfLatency = output.afLatencyMs;

        mLatency = mAfLatency + (1000LL * mFrameCount) / mSampleRate;

        // AudioFlinger now owns the reference to the I/O handle,
        // so we are no longer responsible for releasing it.

        // FIXME compare to AudioRecord
        // (author's note) this is the memory used for reading and writing
        sp<IMemory> iMem = track->getCblk();
        if (iMem == 0) {
            ALOGE("%s(%d): Could not get control block", __func__, mPortId);
            status = NO_INIT;
            goto exit;
        }
        void *iMemPointer = iMem->pointer();
        if (iMemPointer == NULL) {
            ALOGE("%s(%d): Could not get control block pointer", __func__, mPortId);
            status = NO_INIT;
            goto exit;
        }
        // invariant that mAudioTrack != 0 is true only after set() returns successfully
        if (mAudioTrack != 0) {
            IInterface::asBinder(mAudioTrack)->unlinkToDeath(mDeathNotifier, this);
            mDeathNotifier.clear();
        }
        mAudioTrack = track;
        mCblkMemory = iMem;
        IPCThreadState::self()->flushCommands();

        audio_track_cblk_t* cblk = static_cast<audio_track_cblk_t*>(iMemPointer);
        mCblk = cblk;

        mAwaitBoost = false;
        if (mFlags & AUDIO_OUTPUT_FLAG_FAST) {
            if (output.flags & AUDIO_OUTPUT_FLAG_FAST) {
                ALOGI("%s(%d): AUDIO_OUTPUT_FLAG_FAST successful; frameCount %zu -> %zu",
                      __func__, mPortId, mReqFrameCount, mFrameCount);
                if (!mThreadCanCallJava) {
                    mAwaitBoost = true;
                }
            } else {
                ALOGW("%s(%d): AUDIO_OUTPUT_FLAG_FAST denied by server; frameCount %zu -> %zu",
                      __func__, mPortId, mReqFrameCount, mFrameCount);
            }
        }
        mFlags = output.flags;

        //mOutput != output includes the case where mOutput == AUDIO_IO_HANDLE_NONE for first creation
        if (mDeviceCallback != 0) {
            if (mOutput != AUDIO_IO_HANDLE_NONE) {
                AudioSystem::removeAudioDeviceCallback(this, mOutput, mPortId);
            }
            AudioSystem::addAudioDeviceCallback(this, output.outputId, output.portId);
            callbackAdded = true;
        }

        mPortId = output.portId;
        // We retain a copy of the I/O handle, but don't own the reference
        mOutput = output.outputId;
        mRefreshRemaining = true;

        // Starting address of buffers in shared memory. If there is a shared buffer, buffers
        // is the value of pointer() for the shared buffer, otherwise buffers points
        // immediately after the control block. This address is for the mapping within client
        // address space. AudioFlinger::TrackBase::mBuffer is for the server address space.
        void* buffers;
        if (mSharedBuffer == 0) {
            buffers = cblk + 1;
        } else {
            buffers = mSharedBuffer->pointer();
            if (buffers == NULL) {
                ALOGE("%s(%d): Could not get buffer pointer", __func__, mPortId);
                status = NO_INIT;
                goto exit;
            }
        }

        mAudioTrack->attachAuxEffect(mAuxEffectId);

        // If IAudioTrack is re-created, don't let the requested frameCount
        // decrease. This can confuse clients that cache frameCount().
        if (mFrameCount > mReqFrameCount) {
            mReqFrameCount = mFrameCount;
        }

        // reset server position to 0 as we have new cblk.
        mServer = 0;

        // update proxy
        if (mSharedBuffer == 0) {
            mStaticProxy.clear();
            mProxy = new AudioTrackClientProxy(cblk, buffers, mFrameCount, mFrameSize);
        } else {
            mStaticProxy = new StaticAudioTrackClientProxy(cblk, buffers, mFrameCount, mFrameSize);
            mProxy = mStaticProxy;
        }

        mProxy->setVolumeLR(gain_minifloat_pack(
                gain_from_float(mVolume[AUDIO_INTERLEAVE_LEFT]),
                gain_from_float(mVolume[AUDIO_INTERLEAVE_RIGHT])));

        mProxy->setSendLevel(mSendLevel);
        const uint32_t effectiveSampleRate = adjustSampleRate(mSampleRate, mPlaybackRate.mPitch);
        const float effectiveSpeed = adjustSpeed(mPlaybackRate.mSpeed, mPlaybackRate.mPitch);
        const float effectivePitch = adjustPitch(mPlaybackRate.mPitch);
        mProxy->setSampleRate(effectiveSampleRate);

        AudioPlaybackRate playbackRateTemp = mPlaybackRate;
        playbackRateTemp.mSpeed = effectiveSpeed;
        playbackRateTemp.mPitch = effectivePitch;
        mProxy->setPlaybackRate(playbackRateTemp);
        mProxy->setMinimum(mNotificationFramesAct);

        mDeathNotifier = new DeathNotifier(this);
        IInterface::asBinder(mAudioTrack)->linkToDeath(mDeathNotifier, this);

        }

    exit:
        if (status != NO_ERROR && callbackAdded) {
            // note: mOutput is always valid is callbackAdded is true
            AudioSystem::removeAudioDeviceCallback(this, mOutput, mPortId);
        }

        mStatus = status;

        // sp<IAudioTrack> track destructor will cause releaseOutput() to be called by AudioFlinger
        return status;
    }

III. Overall summary

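I had never worked with audio before, and I have never studied Java or C++ either, so reading all this is a real headache. I have roughly sorted out the flow, but I still do not feel I have a proper grasp of the basics. When I have time I need to read more blog posts and source code and track down the corresponding API documentation. I will keep updating these notes. What I have written above is not necessarily correct either, since the code keeps evolving.

To recap the overall logic of the code above:

1. First, the buffer size is computed; it depends on the sample rate, the channel count, and similar parameters.

2. Once the buffer size is known, the JNI layer of AudioTrack sets up shared memory. For example, the server side first allocates a BnMemoryHeapBase and a BnMemoryBase, then passes the BnMemoryBase to the proxy (client) side; through this mechanism the proxy side can read the data behind the BnMemoryBase.

3. With shared memory in place, the upper layer writes data and starts playback to actually produce sound.

Of course there are deeper matters in here, such as synchronization between processes, how the shared memory is kept from being corrupted and how that is implemented, and the interaction between AudioTrack and AudioFlinger. These are all still puzzles to me, and there is a lot left to analyze.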
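One small piece of the AudioTrack and AudioFlinger interaction can at least be checked by hand: the latency estimate in createTrack_l, `mLatency = mAfLatency + (1000LL * mFrameCount) / mSampleRate`. The plain-Java sketch below reproduces that arithmetic. `TrackLatency` and `latencyMs` are invented names, and the input numbers are made-up examples rather than values measured on a device.

```java
/** Reproduces the latency arithmetic from createTrack_l; illustrative only. */
final class TrackLatency {
    /** Latency in ms: AudioFlinger's reported latency plus the time the client buffer holds. */
    static long latencyMs(long afLatencyMs, long frameCount, long sampleRateHz) {
        return afLatencyMs + (1000L * frameCount) / sampleRateHz;
    }

    public static void main(String[] args) {
        // Made-up inputs: 40 ms reported by the server, 4410 frames at 44.1 kHz.
        // 4410 frames at 44100 frames per second last 100 ms, so the sum is 140 ms.
        System.out.println(latencyMs(40, 4410, 44100) + " ms");
    }
}
```

This also shows why a larger buffer trades latency for safety against underruns: every extra frame in the buffer adds its playing time to the total.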