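Contents

How to identify the Raspberry Pi model

License plate recognition

HyperLPR

C++ dependencies

Building on Linux/Mac

C++ demo

Let's try the Python version instead

How demo.py works

Model resources

Taking photos with the camera

Setting up the environment on the Raspberry Pi

ssh

pip install tensorflow error:

FFFFFFFFFFFFFFFFFFFinally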

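How to identify the Raspberry Pi model

You can check the model from the running system, or simply read the markings printed on the board itself: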

Raspberry Pi 3 Model B V1.2

Raspberry 2015

FCC ID:2ABCB-RP132

IC:20953-RP132
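For the "check it from the running system" route, here is a minimal sketch in Python 3. It assumes the board exposes its model string in /proc/device-tree/model, as Raspberry Pi OS normally does. The function name read_pi_model is made up for this example.

```python
from pathlib import Path


def read_pi_model(model_file="/proc/device-tree/model"):
    """Return the board model string, or None if the file is missing."""
    path = Path(model_file)
    if not path.exists():
        return None
    # The device-tree string is NUL-terminated, so strip the trailing "\x00".
    text = path.read_bytes().decode("ascii", errors="replace")
    return text.rstrip("\x00\n ")


if __name__ == "__main__":
    print(read_pi_model() or "model file not found (probably not a Raspberry Pi)")
```

On a machine that is not a Raspberry Pi, the function returns None instead of raising.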

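FCC stands for the Federal Communications Commission. Established by the Communications Act of 1934, it is an independent agency of the U.S. government that reports directly to Congress. The FCC regulates interstate and international communications by radio, television, wire, satellite, and cable, covering the 50 states, the District of Columbia, and U.S. territories. To ensure the safety of radio and wire communication products that affect life and property, the FCC's Office of Engineering and Technology provides technical support to the commission and handles equipment authorization. Many radio, communication, and digital products need FCC approval before they can enter the U.S. market. Based on these markings, the board I have is:

Raspberry Pi 3 Model B

It has a 1.2 GHz CPU, Bluetooth 4.1 with Bluetooth Low Energy, and 1 GB of RAM. For now I only have the board in its case; I am still missing a 5 V/2 A power supply with its cable and an HDMI-to-VGA adapter (not a big deal, since I have an HDMI monitor).

None of this is a real problem, though: the Raspberry Pi has USB ports, and I can connect to it with PuTTY.

I don't know who set up the system currently on the card, and there is no HDMI output on power-up,

so the first step is to flash a fresh system image for the Raspberry Pi from Linux.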

shumeipai.nxez.com/download

shumeipai.nxez.com/2013/12/08/linux-command-line-burn-raspberry-pi-mirror-to-sd-card.html

I started with dd on Linux. Because the SD card's partitions show up as mmcblk0p1 and mmcblk0p2, my first command was

sudo dd bs=4M if=2019-09-26-raspbian-buster.img of=/dev/mmcblk0p

which failed with:

    dd: error writing '/dev/mmcblk0p': No space left on device
    474+0 records in
    473+0 records out
    1988042752 bytes (2.0 GB, 1.9 GiB) copied, 51.1839 s, 38.8 MB/s
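A likely reason for this error is that /dev/mmcblk0p is neither the card nor one of its partitions, so dd probably created an ordinary file with that name under /dev and filled up that filesystem. A full disk image carries its own partition table, so it has to go to the whole device. The sketch below maps a partition name to its parent device; the helper name whole_device and the naming patterns it covers are assumptions for this example.

```python
import re


def whole_device(node):
    """Map a partition node to its parent disk, e.g. /dev/mmcblk0p1 -> /dev/mmcblk0."""
    # mmcblk/nvme/loop style: the partition suffix is "p<number>" after a digit.
    m = re.fullmatch(r"(/dev/(?:mmcblk|nvme\d+n|loop)\d+)p\d+", node)
    if m:
        return m.group(1)
    # sd/hd/vd style: the partition suffix is just a number.
    m = re.fullmatch(r"(/dev/(?:sd|hd|vd)[a-z]+)\d+", node)
    if m:
        return m.group(1)
    return node  # already a whole-disk name, or an unknown naming scheme


if __name__ == "__main__":
    for name in ("/dev/mmcblk0p1", "/dev/mmcblk0p2", "/dev/sdb1", "/dev/mmcblk0"):
        print(name, "->", whole_device(name))
```

Listing the devices with lsblk before running dd is still the safest way to confirm the target.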

So I changed the target to the whole device:

sudo dd bs=4M if=2019-09-26-raspbian-buster.img of=/dev/mmcblk0

This time it succeeded.

    asber@asber-X550VX:~/Downloads$ sudo dd bs=4M if=2019-09-26-raspbian-buster.img of=/dev/mmcblk0
    40+0 records in
    40+0 records out
    167772160 bytes (168 MB, 160 MiB) copied, 9.67998 s, 17.3 MB/s
    913+0 records in
    913+0 records out
    3829399552 bytes (3.8 GB, 3.6 GiB) copied, 340.545 s, 11.2 MB/s

License plate recognition

https://www.cnblogs.com/subconscious/p/3979988.html

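To keep an older OpenCV installed on the system from interfering with the build, CMakeLists.txt needs a change. The original line

    set(CMAKE_PREFIX_PATH ${CMAKE_PREFIX_PATH} "/usr/local/opt/opencv3")

becomes:

    if (CMAKE_SYSTEM_NAME MATCHES "Darwin")
        set(CMAKE_PREFIX_PATH ${CMAKE_PREFIX_PATH} "/home/asber/opencv-3.2.0")
    endif ()

However, make still failed. (Note that the Darwin condition only matches macOS, so on Linux this set() call is skipped.)

That left two options: keep debugging this build and discuss it with the people in the project's chat group, or move on. I chose the project below, which also recognizes more vehicle types.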

HyperLPR

https://github.com/zeusees/HyperLPR

https://github.com/armaab/hyperlpr-train

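High-performance Chinese license plate recognition based on deep learning.

It supports Python 3 and runs on Windows, Mac, Linux, the Raspberry Pi, and more.

C++ dependencies

- OpenCV 3.4 or later

To check which OpenCV version is installed on Linux:

pkg-config opencv --modversion

Mine is 3.2.0.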
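The "3.4 or later" requirement can also be checked from Python. The sketch below compares version strings numerically rather than as text. The helper names parse_version and meets_minimum are made up for this example.

```python
def parse_version(text):
    """Turn '3.4.6' into (3, 4, 6); non-numeric suffixes such as '-dev' are ignored."""
    parts = []
    for piece in text.strip().split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed, minimum="3.4"):
    """True if the installed version is at least the minimum version."""
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    for version in ("3.2.0", "3.4.6"):
        print(version, "ok" if meets_minimum(version) else "too old")
```

When the OpenCV Python bindings are installed, the version string is available as cv2.__version__ and can be passed to meets_minimum directly.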

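So I installed OpenCV 3.4, following these guides:

Multiple OpenCV versions side by side on Ubuntu 16.04

Installing OpenCV 3.4.6 on Ubuntu

Note (if you have Anaconda installed and make fails): https://www.cnblogs.com/dinghongkai/p/11288338.html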

[ 50%] Linking CXX shared library ../../lib/libopencv_objdetect.so

[ 50%] Built target opencv_objdetect

[ 50%] Linking CXX shared library ../../lib/libopencv_stitching.so

[ 50%] Built target opencv_stitching

Makefile:162: recipe for target 'all' failed

make: *** [all] Error 2

make failed. Is it the cmake arguments? The cmake version (I had updated it)? Or Anaconda?

Building on Linux/Mac

- The only dependency is OpenCV 3.4 (the DNN module is required)

cd Prj-Linux

mkdir build

cd build

cmake ../

sudo make -j

C++ demo

    #include "../include/Pipeline.h"

    int main() {
        // Load the model files
        pr::PipelinePR prc("model/cascade.xml",
                           "model/HorizonalFinemapping.prototxt", "model/HorizonalFinemapping.caffemodel",
                           "model/Segmentation.prototxt", "model/Segmentation.caffemodel",
                           "model/CharacterRecognization.prototxt", "model/CharacterRecognization.caffemodel",
                           "model/SegmentationFree.prototxt", "model/SegmentationFree.caffemodel");
        cv::Mat image = cv::imread("test.png");
        // Recognize with the end-to-end model; the results are stored in res
        std::vector<pr::PlateInfo> res = prc.RunPiplineAsImage(image, pr::SEGMENTATION_FREE_METHOD);
        for (auto st : res) {
            if (st.confidence > 0.75) {
                // Print the recognized plate and its confidence
                std::cout << st.getPlateName() << " " << st.confidence << std::endl;
                // Get the plate position
                cv::Rect region = st.getPlateRect();
                // Draw the plate position
                cv::rectangle(image, cv::Point(region.x, region.y),
                              cv::Point(region.x + region.width, region.y + region.height),
                              cv::Scalar(255, 255, 0), 2);
            }
        }
        cv::imshow("image", image);
        cv::waitKey(0);
        return 0;
    }

Also, the project with a UI described in this documentation is Windows-only.

Let's try the Python version instead

Tutorial for using the Python version on Windows; the steps on Linux are similar.

Required dependencies:

pip install Keras

pip install Theano

pip install Numpy

pip install Scipy

pip install opencv-python

pip install scikit-image

pip install pillow

pip install tensorflowsudo cp -r /home/asber/platerecong/HyperPR/HyperLPR/hyperlpr_py3 /home/asber/anaconda3/lib/hyperlpr

然后发现……果然不行阿,到底如何将这个库放到linux下的anaconda呢?

然后我TM惊奇的发现,直接

pip install hyperlpr

就可以用了。。。

但是发现??

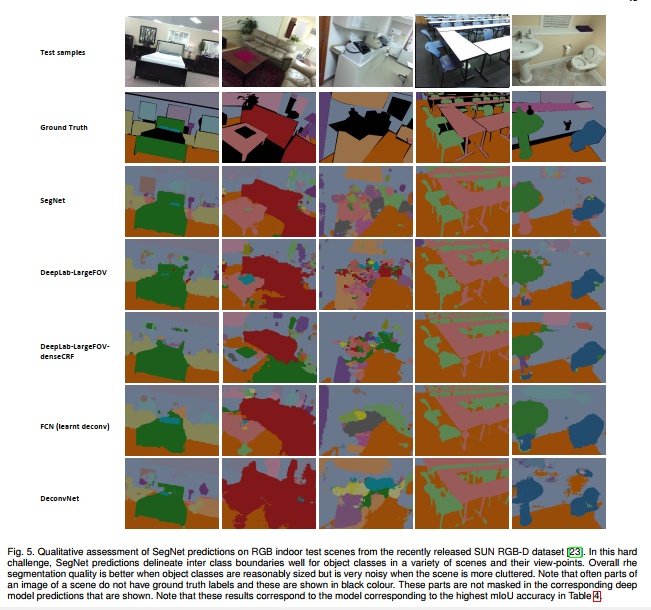

竟然还是没有检测出来,后来群里小伙伴说直接运行里面的demo.py就可以为试了一下,(demo中2.jpg变成了2_.jpg搞得一直抱错)既然跑出来了,我们就来分析一下这个程序的逻辑关系把。
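The 2.jpg versus 2_.jpg mix-up is easy to hit because cv2.imread returns None for a missing file instead of raising, so the failure only shows up later. A small Python 3 sketch of a guard follows. The helper name require_image is made up for this example.

```python
import os


def require_image(path):
    """Return path unchanged if it names an existing file, else raise with a clear message."""
    if not os.path.isfile(path):
        folder = os.path.dirname(path) or "."
        nearby = sorted(os.listdir(folder)) if os.path.isdir(folder) else []
        raise FileNotFoundError(
            "image not found: %s (files in %s: %s)"
            % (path, folder, ", ".join(nearby) or "none")
        )
    return path


# Intended use (needs opencv-python, not imported here):
#   grr = cv2.imread(require_image("images_rec/2_.jpg"))
```

The error message lists the files that are actually in the folder, which makes a renamed image easy to spot.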

How demo.py works

The listing below is demo.py as I ran it (Python 2 syntax):

    import sys
    reload(sys)
    sys.setdefaultencoding("utf-8")

    # Timing function; it also contains the core recognition call
    import time

    def SpeedTest(image_path):
        grr = cv2.imread(image_path)
        model = pr.LPR("model/cascade.xml", "model/model12.h5", "model/ocr_plate_all_gru.h5")
        model.SimpleRecognizePlateByE2E(grr)
        t0 = time.time()
        for x in range(20):
            model.SimpleRecognizePlateByE2E(grr)
        t = (time.time() - t0)/20.0
        print "Image size :" + str(grr.shape[1])+"x"+str(grr.shape[0]) + " need " + str(round(t*1000,2))+"ms"

    # Draw a box around the plate and label it with the plate number
    from PIL import ImageFont
    from PIL import Image
    from PIL import ImageDraw

    fontC = ImageFont.truetype("./Font/platech.ttf", 14, 0)

    def drawRectBox(image,rect,addText):
        cv2.rectangle(image, (int(rect[0]), int(rect[1])), (int(rect[0] + rect[2]), int(rect[1] + rect[3])), (0,0, 255), 2,cv2.LINE_AA)
        cv2.rectangle(image, (int(rect[0]-1), int(rect[1])-16), (int(rect[0] + 115), int(rect[1])), (0, 0, 255), -1,cv2.LINE_AA)
        img = Image.fromarray(image)
        draw = ImageDraw.Draw(img)
        draw.text((int(rect[0]+1), int(rect[1]-16)), addText.decode("utf-8"), (255, 255, 255), font=fontC)
        imagex = np.array(img)
        return imagex

    import HyperLPRLite as pr
    import cv2
    import numpy as np

    grr = cv2.imread(r"images_rec/2.jpg")
    cv2.namedWindow('image',0)
    cv2.imshow('image',grr)
    cv2.waitKey()
    cv2.destroyAllWindows()

    model = pr.LPR(r"model/cascade.xml",r"model/model12.h5","model/ocr_plate_all_gru.h5")
    for pstr,confidence,rect in model.SimpleRecognizePlateByE2E(grr):
        if confidence>0.7:
            image = drawRectBox(grr, rect, pstr+" "+str(round(confidence,3)))
            print "plate_str:"
            print pstr
            print "plate_confidence"
            print confidence

    cv2.imshow("image",image)
    cv2.waitKey(0)

    SpeedTest("images_rec/2_.jpg")

The code is easy to follow. The LPR constructor takes three model files: 1. the detection model (cascade.xml), 2. the left/right boundary regression model (model12.h5), and 3. the sequence recognition model (ocr_plate_all_gru.h5).
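SimpleRecognizePlateByE2E returns a list of [plate string, confidence, rect] entries, and demo.py keeps only those above 0.7. The Python 3 sketch below shows that filtering step on its own, with made-up sample data instead of real model output. The helper name confident_plates is an assumption for this example.

```python
def confident_plates(results, threshold=0.7):
    """Keep results whose confidence exceeds threshold, highest confidence first."""
    kept = [r for r in results if r[1] > threshold]
    return sorted(kept, key=lambda r: r[1], reverse=True)


# Made-up sample data in the [plate, confidence, rect] layout.
sample = [
    ["A12345", 0.93, [120, 80, 140, 40]],
    ["B0000", 0.41, [10, 10, 60, 20]],
    ["C67890", 0.78, [300, 200, 130, 38]],
]

for plate, confidence, rect in confident_plates(sample):
    print(plate, round(confidence, 3), rect)
```

Sorting by confidence is an addition here; demo.py simply processes the results in the order the detector returns them.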

Let's take a look at HyperLPRLite.py:

    #coding=utf-8
    import cv2
    import numpy as np
    from keras import backend as K
    from keras.models import *
    from keras.layers import *

    chars = [u"京", u"沪", u"津", u"渝", u"冀", u"晋", u"蒙", u"辽", u"吉", u"黑", u"苏", u"浙", u"皖", u"闽", u"赣", u"鲁", u"豫", u"鄂", u"湘", u"粤", u"桂",
             u"琼", u"川", u"贵", u"云", u"藏", u"陕", u"甘", u"青", u"宁", u"新", u"0", u"1", u"2", u"3", u"4", u"5", u"6", u"7", u"8", u"9", u"A",
             u"B", u"C", u"D", u"E", u"F", u"G", u"H", u"J", u"K", u"L", u"M", u"N", u"P", u"Q", u"R", u"S", u"T", u"U", u"V", u"W", u"X",
             u"Y", u"Z",u"港",u"学",u"使",u"警",u"澳",u"挂",u"军",u"北",u"南",u"广",u"沈",u"兰",u"成",u"济",u"海",u"民",u"航",u"空"]

    class LPR():
        def __init__(self,model_detection,model_finemapping,model_seq_rec):
            self.watch_cascade = cv2.CascadeClassifier(model_detection)  # detection model
            self.modelFineMapping = self.model_finemapping()
            self.modelFineMapping.load_weights(model_finemapping)  # left/right boundary regression model
            self.modelSeqRec = self.model_seq_rec(model_seq_rec)  # sequence model

        def computeSafeRegion(self,shape,bounding_rect):
            # Clamp bounding_rect so that it stays inside the image
            top = bounding_rect[1] # y
            bottom = bounding_rect[1] + bounding_rect[3] # y + h
            left = bounding_rect[0] # x
            right = bounding_rect[0] + bounding_rect[2] # x + w
            min_top = 0
            max_bottom = shape[0]
            min_left = 0
            max_right = shape[1]
            if top < min_top:
                top = min_top
            if left < min_left:
                left = min_left
            if bottom > max_bottom:
                bottom = max_bottom
            if right > max_right:
                right = max_right
            return [left,top,right-left,bottom-top]

        def cropImage(self,image,rect):
            # Return the image region inside a bounding box
            x, y, w, h = self.computeSafeRegion(image.shape,rect)
            return image[y:y+h,x:x+w]

        def detectPlateRough(self,image_gray,resize_h = 720,en_scale =1.08 ,top_bottom_padding_rate = 0.05):
            # Return all candidate plates in the image
            if top_bottom_padding_rate>0.2:
                print("error:top_bottom_padding_rate > 0.2:",top_bottom_padding_rate)
                exit(1)
            height = image_gray.shape[0]
            padding = int(height*top_bottom_padding_rate)
            scale = image_gray.shape[1]/float(image_gray.shape[0])  # width/height ratio of the original image
            image = cv2.resize(image_gray, (int(scale*resize_h), resize_h))  # resize to the input size the model expects
            image_color_cropped = image[padding:resize_h-padding,0:image_gray.shape[1]]
            image_gray = cv2.cvtColor(image_color_cropped,cv2.COLOR_RGB2GRAY)
            # Multi-scale detection, returns a list of boxes. minSize and maxSize bound the
            # target size; scaleFactor=en_scale is the ratio by which the image shrinks at each step
            watches = self.watch_cascade.detectMultiScale(image_gray, en_scale, 2, minSize=(36, 9),maxSize=(36*40, 9*40))
            cropped_images = []
            for (x, y, w, h) in watches:
                x -= w * 0.14
                w += w * 0.28
                y -= h * 0.15
                h += h * 0.3
                cropped = self.cropImage(image_color_cropped, (int(x), int(y), int(w), int(h)))
                cropped_images.append([cropped,[x, y+padding, w, h]])
            return cropped_images  # cropped images of all plates found, with their boxes

        def fastdecode(self,y_pred):
            results = ""
            confidence = 0.0
            table_pred = y_pred.reshape(-1, len(chars)+1)
            res = table_pred.argmax(axis=1)
            for i,one in enumerate(res):
                if one<len(chars) and (i==0 or (one!=res[i-1])):
                    results+= chars[one]
                    confidence+=table_pred[i][one]
            confidence/= len(results)
            return results,confidence

        def model_seq_rec(self,model_path):
            width, height, n_len, n_class = 164, 48, 7, len(chars)+ 1
            rnn_size = 256
            input_tensor = Input((164, 48, 3))
            x = input_tensor
            base_conv = 32
            for i in range(3):
                x = Conv2D(base_conv * (2 ** (i)), (3, 3))(x)
                x = BatchNormalization()(x)
                x = Activation('relu')(x)
                x = MaxPooling2D(pool_size=(2, 2))(x)
            conv_shape = x.get_shape()
            x = Reshape(target_shape=(int(conv_shape[1]), int(conv_shape[2] * conv_shape[3])))(x)
            x = Dense(32)(x)
            x = BatchNormalization()(x)
            x = Activation('relu')(x)
            gru_1 = GRU(rnn_size, return_sequences=True, kernel_initializer='he_normal', name='gru1')(x)
            gru_1b = GRU(rnn_size, return_sequences=True, go_backwards=True, kernel_initializer='he_normal', name='gru1_b')(x)
            gru1_merged = add([gru_1, gru_1b])
            gru_2 = GRU(rnn_size, return_sequences=True, kernel_initializer='he_normal', name='gru2')(gru1_merged)
            gru_2b = GRU(rnn_size, return_sequences=True, go_backwards=True, kernel_initializer='he_normal', name='gru2_b')(gru1_merged)
            x = concatenate([gru_2, gru_2b])
            x = Dropout(0.25)(x)
            x = Dense(n_class, kernel_initializer='he_normal', activation='softmax')(x)
            base_model = Model(inputs=input_tensor, outputs=x)
            base_model.load_weights(model_path)
            return base_model

        def model_finemapping(self):
            input = Input(shape=[16, 66, 3])  # change this shape to [None,None,3] to enable arbitrary shape input
            x = Conv2D(10, (3, 3), strides=1, padding='valid', name='conv1')(input)
            x = Activation("relu", name='relu1')(x)
            x = MaxPool2D(pool_size=2)(x)
            x = Conv2D(16, (3, 3), strides=1, padding='valid', name='conv2')(x)
            x = Activation("relu", name='relu2')(x)
            x = Conv2D(32, (3, 3), strides=1, padding='valid', name='conv3')(x)
            x = Activation("relu", name='relu3')(x)
            x = Flatten()(x)
            output = Dense(2,name = "dense")(x)
            output = Activation("relu", name='relu4')(output)
            model = Model([input], [output])
            return model

        def finemappingVertical(self,image,rect):
            # Refine the left and right boundaries of the plate image
            resized = cv2.resize(image,(66,16))  # resize to the 66x16 input the model accepts
            resized = resized.astype(np.float)/255
            res_raw= self.modelFineMapping.predict(np.array([resized]))[0]
            res =res_raw*image.shape[1]
            res = res.astype(np.int)
            H,T = res
            H-=3
            if H<0:
                H=0
            T+=2;
            if T>= image.shape[1]-1:
                T= image.shape[1]-1
            rect[2] -= rect[2]*(1-res_raw[1] + res_raw[0])
            rect[0]+=res[0]
            image = image[:,H:T+2]
            image = cv2.resize(image, (int(136), int(36)))
            return image,rect

        def recognizeOne(self,src):
            # Recognize the characters
            x_tempx = src
            x_temp = cv2.resize(x_tempx,( 164,48))
            x_temp = x_temp.transpose(1, 0, 2)
            y_pred = self.modelSeqRec.predict(np.array([x_temp]))
            y_pred = y_pred[:,2:,:]
            return self.fastdecode(y_pred)

        def SimpleRecognizePlateByE2E(self,image):
            # Core function: takes one image as input
            images = self.detectPlateRough(image,image.shape[0],top_bottom_padding_rate=0.1)  # all plate crops and their bounding boxes
            res_set = []
            for j,plate in enumerate(images):
                plate, rect =plate
                image_rgb,rect_refine = self.finemappingVertical(plate,rect)  # boundary refinement
                res,confidence = self.recognizeOne(image_rgb)  # recognized characters and confidence
                res_set.append([res,confidence,rect_refine])  # characters, confidence, and bounding box
            return res_set

Model resources

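- cascade.xml: the best-performing cascade detection model so far

- cascade_lbp.xml: good recall, but too many false detections

- char_chi_sim.h5: Keras model that recognizes 34 classes of digits and uppercase letters, trained on 140,000 samples

- char_rec.h5: Keras model that recognizes 34 classes of digits and uppercase letters, trained on 70,000 samples

- ocr_plate_all_w_rnn_2.h5: CNN-based sequence model

- ocr_plate_all_gru.h5: GRU-based sequence model adapted from an OCR model; currently the most accurate but slower, taking about 20 ms

- plate_type.h5: model for classifying plate color

- model12.h5: left/right boundary regression model

In short, SimpleRecognizePlateByE2E first detects where the plates are, then refines each plate's boundaries, and finally recognizes the characters.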
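The last step, turning the sequence model's per-step probabilities into a plate string, is what fastdecode does: take the most likely class at each step, skip the blank class, and collapse consecutive repeats. Here is a pure-Python sketch of that greedy decoding, using a toy two-letter alphabet instead of the real chars table. The function name greedy_decode is made up for this example.

```python
def greedy_decode(table, alphabet):
    """Greedy CTC-style decoding.

    table is a list of probability rows, one per time step, each of length
    len(alphabet) + 1. The last index is treated as the blank class.
    """
    best = [max(range(len(row)), key=row.__getitem__) for row in table]
    text, total = "", 0.0
    for i, k in enumerate(best):
        is_blank = k >= len(alphabet)
        is_repeat = i > 0 and k == best[i - 1]
        if not is_blank and not is_repeat:
            text += alphabet[k]
            total += table[i][k]
    # Average probability of the kept characters; 0.0 when nothing was decoded.
    confidence = total / len(text) if text else 0.0
    return text, confidence


toy_table = [
    [0.90, 0.05, 0.05],  # A
    [0.80, 0.10, 0.10],  # A again, collapsed as a repeat
    [0.10, 0.10, 0.80],  # blank
    [0.70, 0.20, 0.10],  # A, kept because a blank came in between
    [0.10, 0.60, 0.30],  # B
]
print(greedy_decode(toy_table, "AB"))
```

Unlike the listing, this sketch returns a confidence of 0.0 when no character is decoded, instead of dividing by zero.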

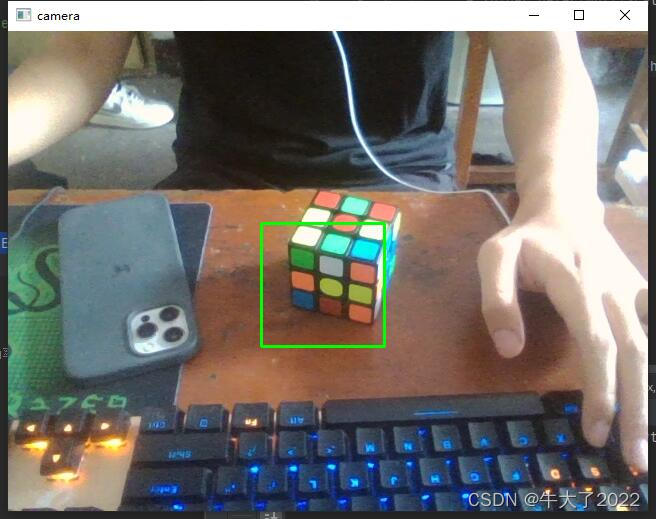
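One detail of the detection step is worth isolating: detectPlateRough enlarges every detected box (14% of the width on each side, 15% of the height above and below), and computeSafeRegion then clamps it to the image. The sketch below combines both steps in plain Python. The function name expand_and_clamp and its keyword arguments are made up for this example.

```python
def expand_and_clamp(rect, image_shape, pad_x=0.14, pad_y=0.15):
    """Grow rect = (x, y, w, h) by the padding ratios, then clamp it to the image.

    image_shape is (height, width, ...), as in a NumPy image array.
    """
    x, y, w, h = rect
    # Grow the box; the right-hand sides use the original w and h.
    x, w = x - w * pad_x, w * (1 + 2 * pad_x)
    y, h = y - h * pad_y, h * (1 + 2 * pad_y)
    x, y, w, h = int(x), int(y), int(w), int(h)
    # Clamp to the image borders, as computeSafeRegion does.
    left, top = max(x, 0), max(y, 0)
    right = min(x + w, image_shape[1])
    bottom = min(y + h, image_shape[0])
    return [left, top, right - left, bottom - top]


if __name__ == "__main__":
    # A box near the top-left corner of a 100x200 image.
    print(expand_and_clamp((10, 5, 100, 40), (100, 200, 3)))
```

Without the clamp, a plate near the image border would produce negative slice indices when the crop is taken.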

Taking photos with the camera

https://blog.csdn.net/xiexu911/article/details/81225456

Tested; it works.
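The linked post has the details. As a rough sketch of the idea only, the helper below discards a few frames and then returns one. It assumes a capture object with a read() method that returns (ok, frame), as cv2.VideoCapture.read() does. The helper name grab_frame and the warm-up count are assumptions for this example.

```python
def grab_frame(capture, warmup=5):
    """Read and discard warmup frames, then return the next frame.

    capture is any object whose read() returns (ok, frame).
    """
    for _ in range(warmup):
        capture.read()  # discard frames taken while the camera is still adjusting
    ok, frame = capture.read()
    if not ok:
        raise RuntimeError("camera returned no frame")
    return frame


# Typical use with OpenCV (not run here; needs opencv-python and a camera):
#   import cv2
#   cap = cv2.VideoCapture(0)          # device index 0 is an assumption
#   cv2.imwrite("photo.jpg", grab_frame(cap))
#   cap.release()
```

Because the function only depends on read(), it can be tried without a camera by passing a stand-in object.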

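Setting up the environment on the Raspberry Pi

ssh

https://blog.csdn.net/perry0418/article/details/80994840

On the morning of 2019/11/2 I came to the club early. With nobody else there the network was very fast, but something still went wrong and TensorFlow did not finish downloading. While I was looking for the cause, the club's network went down again, so I decided to wait and download it at school.

SSH configuration on Ubuntu 16.04 and remote login to the Raspberry Pi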

sudo gedit /etc/ssh/sshd_config

#LoginGraceTime 2m

#PermitRootLogin prohibit-password

PermitRootLogin yes

StrictModes yes

#MaxAuthTries 6

#MaxSessions 10

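I kept getting "Permission denied, please try again". Both machines are on the same LAN (a phone hotspot), and the SSH environment is set up, so sshd_config may be part of the problem.

Option 1: edit /etc/ssh/sshd_config (no effect):

PermitRootLogin yes

StrictModes yes

Both settings were correct, and I ran service ssh restart, but it still did not work.

I then changed the passwords for both root and pi, and login was still refused.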
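When debugging this kind of refusal, it helps to see which settings are actually active, since commented-out lines have no effect and sshd uses the first value it finds for each keyword. The sketch below parses sshd_config text in that way. It is a simplification that ignores Include directives and stops at the first Match block. The function name effective_settings is made up for this example.

```python
def effective_settings(config_text):
    """Return {keyword_in_lowercase: value}, keeping the first occurrence of each keyword."""
    settings = {}
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment
        parts = line.split(None, 1)
        keyword = parts[0].lower()
        if keyword == "match":
            break  # conditional blocks are out of scope for this sketch
        value = parts[1].strip() if len(parts) > 1 else ""
        settings.setdefault(keyword, value)  # first value wins
    return settings


excerpt = """
#LoginGraceTime 2m
#PermitRootLogin prohibit-password
PermitRootLogin yes
StrictModes yes
#MaxAuthTries 6
#MaxSessions 10
"""

for key, value in sorted(effective_settings(excerpt).items()):
    print(key, "=", value)
```

One more setting that may be worth checking with the same helper is PasswordAuthentication, since password logins are refused when it is set to no.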

pip install tensorflow error:

    Traceback (most recent call last):
      File "/usr/lib/python2.7/dist-packages/pip/_internal/cli/base_command.py", line 143, in main
        status = self.run(options, args)
      File "/usr/lib/python2.7/dist-packages/pip/_internal/commands/install.py", line 338, in run
        resolver.resolve(requirement_set)
      File "/usr/lib/python2.7/dist-packages/pip/_internal/resolve.py", line 102, in resolve
        self._resolve_one(requirement_set, req)
      File "/usr/lib/python2.7/dist-packages/pip/_internal/resolve.py", line 256, in _resolve_one
        abstract_dist = self._get_abstract_dist_for(req_to_install)
      File "/usr/lib/python2.7/dist-packages/pip/_internal/resolve.py", line 209, in _get_abstract_dist_for
        self.require_hashes
      File "/usr/lib/python2.7/dist-packages/pip/_internal/operations/prepare.py", line 283, in prepare_linked_requirement
        progress_bar=self.progress_bar
      File "/usr/lib/python2.7/dist-packages/pip/_internal/download.py", line 836, in unpack_url
        progress_bar=progress_bar
      File "/usr/lib/python2.7/dist-packages/pip/_internal/download.py", line 673, in unpack_http_url
        progress_bar)
      File "/usr/lib/python2.7/dist-packages/pip/_internal/download.py", line 897, in _download_http_url
        _download_url(resp, link, content_file, hashes, progress_bar)
      File "/usr/lib/python2.7/dist-packages/pip/_internal/download.py", line 617, in _download_url
        hashes.check_against_chunks(downloaded_chunks)
      File "/usr/lib/python2.7/dist-packages/pip/_internal/utils/hashes.py", line 48, in check_against_chunks
        for chunk in chunks:
      File "/usr/lib/python2.7/dist-packages/pip/_internal/download.py", line 585, in written_chunks
        for chunk in chunks:
      File "/usr/lib/python2.7/dist-packages/pip/_internal/utils/ui.py", line 159, in iter
        for x in it:
      File "/usr/lib/python2.7/dist-packages/pip/_internal/download.py", line 574, in resp_read
        decode_content=False):
      File "/usr/share/python-wheels/urllib3-1.24.1-py2.py3-none-any.whl/urllib3/response.py", line 494, in stream
        data = self.read(amt=amt, decode_content=decode_content)
      File "/usr/share/python-wheels/urllib3-1.24.1-py2.py3-none-any.whl/urllib3/response.py", line 442, in read
        data = self._fp.read(amt)
      File "/usr/share/python-wheels/CacheControl-0.11.7-py2.py3-none-any.whl/cachecontrol/filewrapper.py", line 63, in read
        self._close()
      File "/usr/share/python-wheels/CacheControl-0.11.7-py2.py3-none-any.whl/cachecontrol/filewrapper.py", line 50, in _close
        self.__callback(self.__buf.getvalue())
      File "/usr/share/python-wheels/CacheControl-0.11.7-py2.py3-none-any.whl/cachecontrol/controller.py", line 275, in cache_response
        self.serializer.dumps(request, response, body=body),
      File "/usr/share/python-wheels/CacheControl-0.11.7-py2.py3-none-any.whl/cachecontrol/serialize.py", line 55, in dumps
        "body": _b64_encode_bytes(body),
      File "/usr/share/python-wheels/CacheControl-0.11.7-py2.py3-none-any.whl/cachecontrol/serialize.py", line 12, in _b64_encode_bytes
        return base64.b64encode(b).decode("ascii")

Later I ran pip install --no-cache-dir tensorflow. That got past the failure above, but the run still ended with errors:

pi@raspberrypi:~ $ pip install --no-cache-dir tensorflow

Looking in indexes: https://pypi.org/simple, https://www.piwheels.org/simple

Collecting tensorflowDownloading https://www.piwheels.org/simple/tensorflow/tensorflow-1.14.0-cp27-none-linux_armv7l.whl (100.7MB)100% |████████████████████████████████| 100.7MB 44kB/s

Requirement already satisfied: mock>=2.0.0 in /usr/lib/python2.7/dist-packages (from tensorflow) (2.0.0)

Requirement already satisfied: enum34>=1.1.6 in /usr/lib/python2.7/dist-packages (from tensorflow) (1.1.6)

Requirement already satisfied: numpy<2.0,>=1.14.5 in /usr/lib/python2.7/dist-packages (from tensorflow) (1.16.2)

Collecting absl-py>=0.7.0 (from tensorflow)Downloading https://files.pythonhosted.org/packages/3b/72/e6e483e2db953c11efa44ee21c5fdb6505c4dffa447b4263ca8af6676b62/absl-py-0.8.1.tar.gz (103kB)100% |████████████████████████████████| 112kB 450kB/s

Requirement already satisfied: wheel in /usr/lib/python2.7/dist-packages (from tensorflow) (0.32.3)

Requirement already satisfied: keras-preprocessing>=1.0.5 in ./.local/lib/python2.7/site-packages (from tensorflow) (1.1.0)

Collecting tensorboard<1.15.0,>=1.14.0 (from tensorflow)Downloading https://files.pythonhosted.org/packages/f4/37/e6a7af1c92c5b68fb427f853b06164b56ea92126bcfd87784334ec5e4d42/tensorboard-1.14.0-py2-none-any.whl (3.1MB)100% |████████████████████████████████| 3.2MB 5.1MB/s

Collecting wrapt>=1.11.1 (from tensorflow)Downloading https://files.pythonhosted.org/packages/23/84/323c2415280bc4fc880ac5050dddfb3c8062c2552b34c2e512eb4aa68f79/wrapt-1.11.2.tar.gz

Collecting backports.weakref>=1.0rc1 (from tensorflow)Downloading https://files.pythonhosted.org/packages/88/ec/f598b633c3d5ffe267aaada57d961c94fdfa183c5c3ebda2b6d151943db6/backports.weakref-1.0.post1-py2.py3-none-any.whl

Collecting keras-applications>=1.0.6 (from tensorflow)Downloading https://files.pythonhosted.org/packages/21/56/4bcec5a8d9503a87e58e814c4e32ac2b32c37c685672c30bc8c54c6e478a/Keras_Applications-1.0.8.tar.gz (289kB)100% |████████████████████████████████| 296kB 2.9MB/s

Requirement already satisfied: six>=1.10.0 in /usr/lib/python2.7/dist-packages (from tensorflow) (1.12.0)

Collecting grpcio>=1.8.6 (from tensorflow)Downloading https://files.pythonhosted.org/packages/53/1f/1d43a8a497148e7482aec1652fb8ab18b124b4b744ffff1c5d5fe33e9fba/grpcio-1.24.3-cp27-cp27mu-linux_armv7l.whl (12.6MB)100% |████████████████████████████████| 12.6MB 7.1MB/s

Collecting astor>=0.6.0 (from tensorflow)Downloading https://files.pythonhosted.org/packages/d1/4f/950dfae467b384fc96bc6469de25d832534f6b4441033c39f914efd13418/astor-0.8.0-py2.py3-none-any.whl

Collecting termcolor>=1.1.0 (from tensorflow)Downloading https://files.pythonhosted.org/packages/8a/48/a76be51647d0eb9f10e2a4511bf3ffb8cc1e6b14e9e4fab46173aa79f981/termcolor-1.1.0.tar.gz

Collecting gast>=0.2.0 (from tensorflow)Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ProtocolError('Connection aborted.', BadStatusLine('No status line received - the server has closed the connection',))': /simple/gast/Downloading https://files.pythonhosted.org/packages/1f/04/4e36c33f8eb5c5b6c622a1f4859352a6acca7ab387257d4b3c191d23ec1d/gast-0.3.2.tar.gz

Collecting google-pasta>=0.1.6 (from tensorflow)Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ProtocolError('Connection aborted.', BadStatusLine('No status line received - the server has closed the connection',))': /simple/google-pasta/Downloading https://files.pythonhosted.org/packages/35/95/d41cd87d147742ef72d5d1dc317318486e3fbffdadf24a60e70dedf01d56/google_pasta-0.1.7-py2-none-any.whl (55kB)100% |████████████████████████████████| 61kB 5.0MB/s

Collecting protobuf>=3.6.1 (from tensorflow)Downloading https://files.pythonhosted.org/packages/ad/c2/86c65136e280607ddb2e5dda19e2953c1174f9919b557d1d154574481de4/protobuf-3.10.0-py2.py3-none-any.whl (434kB)100% |████████████████████████████████| 440kB 1.6MB/s

Collecting tensorflow-estimator<1.15.0rc0,>=1.14.0rc0 (from tensorflow)Downloading https://files.pythonhosted.org/packages/3c/d5/21860a5b11caf0678fbc8319341b0ae21a07156911132e0e71bffed0510d/tensorflow_estimator-1.14.0-py2.py3-none-any.whl (488kB)100% |████████████████████████████████| 491kB 1.3MB/s

Collecting futures>=3.1.1; python_version < "3" (from tensorboard<1.15.0,>=1.14.0->tensorflow)Downloading https://files.pythonhosted.org/packages/d8/a6/f46ae3f1da0cd4361c344888f59ec2f5785e69c872e175a748ef6071cdb5/futures-3.3.0-py2-none-any.whl

Collecting setuptools>=41.0.0 (from tensorboard<1.15.0,>=1.14.0->tensorflow)Downloading https://files.pythonhosted.org/packages/d9/de/554b6310ac87c5b921bc45634b07b11394fe63bc4cb5176f5240addf18ab/setuptools-41.6.0-py2.py3-none-any.whl (582kB)100% |████████████████████████████████| 583kB 1.8MB/s

Collecting markdown>=2.6.8 (from tensorboard<1.15.0,>=1.14.0->tensorflow)Downloading https://files.pythonhosted.org/packages/c0/4e/fd492e91abdc2d2fcb70ef453064d980688762079397f779758e055f6575/Markdown-3.1.1-py2.py3-none-any.whl (87kB)100% |████████████████████████████████| 92kB 4.5MB/s

Requirement already satisfied: werkzeug>=0.11.15 in /usr/lib/python2.7/dist-packages (from tensorboard<1.15.0,>=1.14.0->tensorflow) (0.14.1)

Collecting h5py (from keras-applications>=1.0.6->tensorflow)Downloading https://files.pythonhosted.org/packages/5f/97/a58afbcf40e8abecededd9512978b4e4915374e5b80049af082f49cebe9a/h5py-2.10.0.tar.gz (301kB)100% |████████████████████████████████| 307kB 680kB/s

    Installing collected packages: absl-py, futures, setuptools, protobuf, grpcio, markdown, tensorboard, wrapt, backports.weakref, h5py, keras-applications, astor, termcolor, gast, google-pasta, tensorflow-estimator, tensorflow
      Running setup.py install for absl-py ... done
      The script markdown_py is installed in '/home/pi/.local/bin' which is not on PATH.
      Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.
      The script tensorboard is installed in '/home/pi/.local/bin' which is not on PATH.
      Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.
      Running setup.py install for wrapt ... done
      Running setup.py install for h5py ... error
        Complete output from command /usr/bin/python -u -c "import setuptools, tokenize;__file__='/tmp/pip-install-27Z_dY/h5py/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" install --record /tmp/pip-record-Ke61Li/install-record.txt --single-version-externally-managed --compile --user --prefix=:
        Unable to find pgen, not compiling formal grammar.
        Compiling /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Plex/Scanners.py because it changed.
        Compiling /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Plex/Actions.py because it changed.
        Compiling /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Compiler/Scanning.py because it changed.
        Compiling /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Compiler/Visitor.py because it changed.
        Compiling /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Compiler/FlowControl.py because it changed.
        Compiling /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Runtime/refnanny.pyx because it changed.
        Compiling /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Compiler/FusedNode.py because it changed.
        Compiling /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Tempita/_tempita.py because it changed.
        [1/8] Cythonizing /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Compiler/FlowControl.py
        [2/8] Cythonizing /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Compiler/FusedNode.py
        [3/8] Cythonizing /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Compiler/Scanning.py
        [4/8] Cythonizing /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Compiler/Visitor.py
        [5/8] Cythonizing /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Plex/Actions.py
        [6/8] Cythonizing /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Plex/Scanners.py
        [7/8] Cythonizing /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Runtime/refnanny.pyx
        [8/8] Cythonizing /tmp/easy_install-Uedch2/Cython-0.29.14/Cython/Tempita/_tempita.py
        warning: no files found matching 'Doc/*'
        warning: no files found matching '*.pyx' under directory 'Cython/Debugger/Tests'
        warning: no files found matching '*.pxd' under directory 'Cython/Debugger/Tests'
        warning: no files found matching '*.pxd' under directory 'Cython/Utility'
        warning: no files found matching 'pyximport/README'
        Installed /tmp/pip-install-27Z_dY/h5py/.eggs/Cython-0.29.14-py2.7-linux-armv7l.egg
        running install
        running build
        running build_py
        creating build
        creating build/lib.linux-armv7l-2.7
        creating build/lib.linux-armv7l-2.7/h5py
        copying h5py/highlevel.py -> build/lib.linux-armv7l-2.7/h5py
        copying h5py/h5py_warnings.py -> build/lib.linux-armv7l-2.7/h5py
        copying h5py/ipy_completer.py -> build/lib.linux-armv7l-2.7/h5py
        copying h5py/__init__.py -> build/lib.linux-armv7l-2.7/h5py
        copying h5py/version.py -> build/lib.linux-armv7l-2.7/h5py
        creating build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/selections.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/compat.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/dataset.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/dims.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/selections2.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/attrs.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/datatype.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/group.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/__init__.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/vds.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/filters.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/base.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        copying h5py/_hl/files.py -> build/lib.linux-armv7l-2.7/h5py/_hl
        creating build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_dataset_swmr.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_threads.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_attrs_data.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_completions.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_datatype.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_file.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_dimension_scales.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/common.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_selections.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_objects.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_h5f.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_base.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_attrs.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_file_image.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_deprecation.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_dtype.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_dims_dimensionproxy.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_filters.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/__init__.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_h5t.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_attribute_create.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_h5p.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_dataset.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_group.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_h5.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_dataset_getitem.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_slicing.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_file2.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_h5pl.py -> build/lib.linux-armv7l-2.7/h5py/tests
        copying h5py/tests/test_h5d_direct_chunk.py -> build/lib.linux-armv7l-2.7/h5py/tests
        creating build/lib.linux-armv7l-2.7/h5py/tests/test_vds
        copying h5py/tests/test_vds/test_highlevel_vds.py -> build/lib.linux-armv7l-2.7/h5py/tests/test_vds
        copying h5py/tests/test_vds/test_lowlevel_vds.py -> build/lib.linux-armv7l-2.7/h5py/tests/test_vds
        copying h5py/tests/test_vds/__init__.py -> build/lib.linux-armv7l-2.7/h5py/tests/test_vds
        copying h5py/tests/test_vds/test_virtual_source.py -> build/lib.linux-armv7l-2.7/h5py/tests/test_vds
        running build_ext
        ('Loading library to get version:', 'libhdf5.so')
        error: libhdf5.so: cannot open shared object file: No such file or directory
        ----------------------------------------
    Command "/usr/bin/python -u -c "import setuptools, tokenize;__file__='/tmp/pip-install-27Z_dY/h5py/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" install --record /tmp/pip-record-Ke61Li/install-record.txt --single-version-externally-managed --compile --user --prefix=" failed with error code 1 in /tmp/pip-install-27Z_dY/h5py/

The h5py build failed because libhdf5.so was missing. After installing HDF5 and h5py from the system packages with

sudo apt-get install libhdf5-dev

sudo apt-get install python-h5py

TensorFlow finally installed successfully:

pi@raspberrypi:~ $ pip install --no-cache-dir tensorflow

Looking in indexes: https://pypi.org/simple, https://www.piwheels.org/simple

Collecting tensorflowDownloading https://www.piwheels.org/simple/tensorflow/tensorflow-1.14.0-cp27-none-linux_armv7l.whl (100.7MB)100% |████████████████████████████████| 100.7MB 3.6MB/s

Requirement already satisfied: mock>=2.0.0 in /usr/lib/python2.7/dist-packages (from tensorflow) (2.0.0)

Requirement already satisfied: enum34>=1.1.6 in /usr/lib/python2.7/dist-packages (from tensorflow) (1.1.6)

Requirement already satisfied: numpy<2.0,>=1.14.5 in /usr/lib/python2.7/dist-packages (from tensorflow) (1.16.2)

Requirement already satisfied: absl-py>=0.7.0 in ./.local/lib/python2.7/site-packages (from tensorflow) (0.8.1)

Requirement already satisfied: wheel in /usr/lib/python2.7/dist-packages (from tensorflow) (0.32.3)

Requirement already satisfied: keras-preprocessing>=1.0.5 in ./.local/lib/python2.7/site-packages (from tensorflow) (1.1.0)

Requirement already satisfied: tensorboard<1.15.0,>=1.14.0 in ./.local/lib/python2.7/site-packages (from tensorflow) (1.14.0)

Requirement already satisfied: wrapt>=1.11.1 in ./.local/lib/python2.7/site-packages (from tensorflow) (1.11.2)

Requirement already satisfied: backports.weakref>=1.0rc1 in ./.local/lib/python2.7/site-packages (from tensorflow) (1.0.post1)

Collecting keras-applications>=1.0.6 (from tensorflow)Downloading https://files.pythonhosted.org/packages/21/56/4bcec5a8d9503a87e58e814c4e32ac2b32c37c685672c30bc8c54c6e478a/Keras_Applications-1.0.8.tar.gz (289kB)100% |████████████████████████████████| 296kB 3.4MB/s

Requirement already satisfied: six>=1.10.0 in /usr/lib/python2.7/dist-packages (from tensorflow) (1.12.0)

Requirement already satisfied: grpcio>=1.8.6 in ./.local/lib/python2.7/site-packages (from tensorflow) (1.24.3)

Collecting astor>=0.6.0 (from tensorflow)Downloading https://files.pythonhosted.org/packages/d1/4f/950dfae467b384fc96bc6469de25d832534f6b4441033c39f914efd13418/astor-0.8.0-py2.py3-none-any.whl

Collecting termcolor>=1.1.0 (from tensorflow)Downloading https://files.pythonhosted.org/packages/8a/48/a76be51647d0eb9f10e2a4511bf3ffb8cc1e6b14e9e4fab46173aa79f981/termcolor-1.1.0.tar.gz

Collecting gast>=0.2.0 (from tensorflow)Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ProtocolError('Connection aborted.', BadStatusLine('No status line received - the server has closed the connection',))': /simple/gast/Downloading https://files.pythonhosted.org/packages/1f/04/4e36c33f8eb5c5b6c622a1f4859352a6acca7ab387257d4b3c191d23ec1d/gast-0.3.2.tar.gz

Collecting google-pasta>=0.1.6 (from tensorflow)Downloading https://files.pythonhosted.org/packages/35/95/d41cd87d147742ef72d5d1dc317318486e3fbffdadf24a60e70dedf01d56/google_pasta-0.1.7-py2-none-any.whl (55kB)100% |████████████████████████████████| 61kB 5.8MB/s

Requirement already satisfied: protobuf>=3.6.1 in ./.local/lib/python2.7/site-packages (from tensorflow) (3.10.0)

Collecting tensorflow-estimator<1.15.0rc0,>=1.14.0rc0 (from tensorflow)

Downloading https://files.pythonhosted.org/packages/3c/d5/21860a5b11caf0678fbc8319341b0ae21a07156911132e0e71bffed0510d/tensorflow_estimator-1.14.0-py2.py3-none-any.whl (488kB)

100% |████████████████████████████████| 491kB 2.5MB/s

Requirement already satisfied: futures>=3.1.1; python_version < "3" in ./.local/lib/python2.7/site-packages (from tensorboard<1.15.0,>=1.14.0->tensorflow) (3.3.0)

Requirement already satisfied: setuptools>=41.0.0 in ./.local/lib/python2.7/site-packages (from tensorboard<1.15.0,>=1.14.0->tensorflow) (41.6.0)

Requirement already satisfied: markdown>=2.6.8 in ./.local/lib/python2.7/site-packages (from tensorboard<1.15.0,>=1.14.0->tensorflow) (3.1.1)

Requirement already satisfied: werkzeug>=0.11.15 in /usr/lib/python2.7/dist-packages (from tensorboard<1.15.0,>=1.14.0->tensorflow) (0.14.1)

Requirement already satisfied: h5py in /usr/lib/python2.7/dist-packages (from keras-applications>=1.0.6->tensorflow) (2.8.0)

Installing collected packages: keras-applications, astor, termcolor, gast, google-pasta, tensorflow-estimator, tensorflow

Running setup.py install for keras-applications ... done

Running setup.py install for termcolor ... done

Running setup.py install for gast ... done

The scripts freeze_graph, saved_model_cli, tensorboard, tf_upgrade_v2, tflite_convert, toco and toco_from_protos are installed in '/home/pi/.local/bin' which is not on PATH.

Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.

Successfully installed astor-0.8.0 gast-0.3.2 google-pasta-0.1.7 keras-applications-1.0.8 tensorflow-1.14.0 tensorflow-estimator-1.14.0 termcolor-1.1.0
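The pip output above warns that '/home/pi/.local/bin' is not on PATH, so tools such as tensorboard will not be found by name. A minimal sketch for checking this from Python follows; the helper name `is_on_path` is hypothetical, and the suggested `export` line assumes a Bourne-style shell reading `~/.profile`.

```python
import os


def is_on_path(directory, path_env):
    """Return True if `directory` is one of the entries of a PATH-style string."""
    target = os.path.normpath(os.path.expanduser(directory))
    entries = [os.path.normpath(os.path.expanduser(p))
               for p in path_env.split(os.pathsep) if p]
    return target in entries


if __name__ == "__main__":
    # Directory named in the pip warning above.
    scripts_dir = "/home/pi/.local/bin"
    if is_on_path(scripts_dir, os.environ.get("PATH", "")):
        print(scripts_dir + " is already on PATH")
    else:
        # Assumption: appending this line to ~/.profile is how PATH is extended here.
        print('export PATH="$PATH:' + scripts_dir + '"')
```

The check only compares normalized path strings; it does not resolve symlinks.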

After this I tried to install Keras, but pip failed while building its scipy dependency:

C compiler: arm-linux-gnueabihf-gcc -pthread -DNDEBUG -g -fwrapv -O2 -Wall -Wstrict-prototypes -fno-strict-aliasing -Wdate-time -D_FORTIFY_SOURCE=2 -g -fdebug-prefix-map=/build/python2.7-9NJ3qw/python2.7-2.7.16=. -fstack-protector-strong -Wformat -Werror=format-security -fPIC

compile options: '-I/usr/include/python2.7 -c'

arm-linux-gnueabihf-gcc: _configtest.c

arm-linux-gnueabihf-gcc -pthread _configtest.o -o _configtest

success!

removing: _configtest.c _configtest.o _configtest.o.d _configtest

building data_files sources

build_src: building npy-pkg config files

running build_py

copying scipy/version.py -> build/lib.linux-armv7l-2.7/scipy

copying build/src.linux-armv7l-2.7/scipy/__config__.py -> build/lib.linux-armv7l-2.7/scipy

running build_clib

customize UnixCCompiler

customize UnixCCompiler using build_clib

building 'dfftpack' library

error: library dfftpack has Fortran sources but no Fortran compiler found

----------------------------------------

Command "/usr/bin/python -u -c "import setuptools, tokenize;__file__='/tmp/pip-install-lzYfrA/scipy/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" install --record /tmp/pip-record-EIWwon/install-record.txt --single-version-externally-managed --compile --user --prefix=" failed with error code 1 in /tmp/pip-install-lzYfrA/scipy/

Running pip install scipy on its own fails the same way:

install --record /tmp/pip-record-XgluiL/install-record.txt --single-version-externally-managed --compile --user --prefix=" failed with error code 1 in /tmp/pip-install-B9SdP1/scipy

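So it seems scipy cannot be installed directly with pip here: pip tries to build it from source, and that build needs a Fortran compiler.

So I used sudo apt-get install python-scipy instead (I think I had already done this once before).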
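The build error above says no Fortran compiler was found. As a rough sketch, one can check whether a Fortran compiler is visible on PATH before attempting a source build. The helper names and the candidate list ("gfortran", "f95", "f77") are assumptions for illustration, not the exact search scipy's build performs.

```python
import os


def find_executable(name, path_env):
    """Return the full path of `name` if an executable file with that name
    exists in one of the PATH-style directories, else None."""
    for directory in path_env.split(os.pathsep):
        if not directory:
            continue
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


def find_fortran_compiler(path_env, names=("gfortran", "f95", "f77")):
    """Return the first Fortran compiler found on the given PATH, else None.
    The candidate names are an illustrative assumption."""
    for name in names:
        found = find_executable(name, path_env)
        if found is not None:
            return found
    return None


if __name__ == "__main__":
    compiler = find_fortran_compiler(os.environ.get("PATH", ""))
    if compiler is None:
        print("No Fortran compiler on PATH; a pip source build of scipy will likely fail.")
    else:
        print("Fortran compiler found: " + compiler)
```

If nothing is found, installing the distribution's prebuilt package (as done above with apt-get) avoids the source build entirely.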

Keras:

pi@raspberrypi:~ $ pip install Keras

Looking in indexes: https://pypi.org/simple, https://www.piwheels.org/simple

Collecting Keras

Using cached https://files.pythonhosted.org/packages/ad/fd/6bfe87920d7f4fd475acd28500a42482b6b84479832bdc0fe9e589a60ceb/Keras-2.3.1-py2.py3-none-any.whl

Requirement already satisfied: numpy>=1.9.1 in /usr/lib/python2.7/dist-packages (from Keras) (1.16.2)

Requirement already satisfied: pyyaml in ./.local/lib/python2.7/site-packages (from Keras) (5.1.2)

Requirement already satisfied: keras-preprocessing>=1.0.5 in ./.local/lib/python2.7/site-packages (from Keras) (1.1.0)

Requirement already satisfied: six>=1.9.0 in /usr/lib/python2.7/dist-packages (from Keras) (1.12.0)

Requirement already satisfied: h5py in /usr/lib/python2.7/dist-packages (from Keras) (2.8.0)

Requirement already satisfied: keras-applications>=1.0.6 in ./.local/lib/python2.7/site-packages (from Keras) (1.0.8)

Requirement already satisfied: scipy>=0.14 in /usr/lib/python2.7/dist-packages (from Keras) (1.1.0)

Installing collected packages: Keras

Successfully installed Keras-2.3.1

pi@raspberrypi:~ $ python

Python 2.7.16 (default, Apr 6 2019, 01:42:57)

[GCC 8.2.0] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import keras

Using TensorFlow backend.

>>> exit()
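The interactive `import keras` above confirms the install by hand. The same check can be scripted for all three packages at once. This is a minimal sketch; `probe_modules` is a hypothetical helper name, and it reports "unknown" for any module that does not expose `__version__`.

```python
import importlib


def probe_modules(names):
    """Try to import each module; map name -> version string,
    or None if the import fails."""
    results = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            results[name] = None
        else:
            results[name] = str(getattr(module, "__version__", "unknown"))
    return results


if __name__ == "__main__":
    # The three packages installed in this section.
    report = probe_modules(["scipy", "tensorflow", "keras"])
    for name in sorted(report):
        version = report[name]
        print("%-12s %s" % (name, version if version is not None else "NOT INSTALLED"))
```

Importing keras this way still prints its "Using TensorFlow backend." line, as in the session above.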

FFFFFFFFFFFFFFFFFFFinally

Other references:

https://www.cnblogs.com/charlotte77/p/8431077.html

https://cloud.tencent.com/developer/article/1480222

https://github.com/GuiltyNeuron/ANPR

https://blog.csdn.net/weixin_43648821/article/details/97016769

https://blog.csdn.net/ShadowN1ght/article/details/78571187