1. 问题:

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

20/11/05 21:03:21 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

java.lang.IllegalArgumentException: Error while instantiating 'org.apache.spark.sql.hive.HiveSessionStateBuilder':at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$$instantiateSessionState(SparkSession.scala:1053)at org.apache.spark.sql.SparkSession$$anonfun$sessionState$2.apply(SparkSession.scala:130)at org.apache.spark.sql.SparkSession$$anonfun$sessionState$2.apply(SparkSession.scala:130)at scala.Option.getOrElse(Option.scala:121)at org.apache.spark.sql.SparkSession.sessionState$lzycompute(SparkSession.scala:129)at org.apache.spark.sql.SparkSession.sessionState(SparkSession.scala:126)at org.apache.spark.sql.SparkSession$Builder$$anonfun$getOrCreate$5.apply(SparkSession.scala:938)at org.apache.spark.sql.SparkSession$Builder$$anonfun$getOrCreate$5.apply(SparkSession.scala:938)at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:99)at scala.collection.mutable.HashMap$$anonfun$foreach$1.apply(HashMap.scala:99)at scala.collection.mutable.HashTable$class.foreachEntry(HashTable.scala:230)at scala.collection.mutable.HashMap.foreachEntry(HashMap.scala:40)at scala.collection.mutable.HashMap.foreach(HashMap.scala:99)at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:938)at org.apache.spark.repl.Main$.createSparkSession(Main.scala:97)... 47 elided

Caused by: org.apache.spark.sql.AnalysisException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot create directory /tmp/hive/root/1af1f42a-4233-4abd-b335-62a580f200e5. Name node is in safe mode.

The reported blocks 122 needs additional 5 blocks to reach the threshold 0.9990 of total blocks 127.

The number of live datanodes 1 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1366)at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4258)at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4233)at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:853)at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:600)at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:619)at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:975)at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2040)at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2036)at java.security.AccessController.doPrivileged(Native Method)at javax.security.auth.Subject.doAs(Subject.java:422)at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1656)at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2034)

;at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:106)at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:193)at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:105)at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:93)at org.apache.spark.sql.hive.HiveSessionStateBuilder.externalCatalog(HiveSessionStateBuilder.scala:39)at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog$lzycompute(HiveSessionStateBuilder.scala:54)at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:52)at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:35)at org.apache.spark.sql.internal.BaseSessionStateBuilder.build(BaseSessionStateBuilder.scala:289)at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$$instantiateSessionState(SparkSession.scala:1050)... 61 more

Caused by: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot create directory /tmp/hive/root/1af1f42a-4233-4abd-b335-62a580f200e5. Name node is in safe mode.

The reported blocks 122 needs additional 5 blocks to reach the threshold 0.9990 of total blocks 127.

The number of live datanodes 1 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1366)at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4258)at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4233)at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:853)at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:600)at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:619)at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:975)at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2040)at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2036)at java.security.AccessController.doPrivileged(Native Method)at javax.security.auth.Subject.doAs(Subject.java:422)at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1656)at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2034)at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:522)at org.apache.spark.sql.hive.client.HiveClientImpl.<init>(HiveClientImpl.scala:191)at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)at java.lang.reflect.Constructor.newInstance(Constructor.java:423)at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:264)at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:362)at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:266)at org.apache.spark.sql.hive.HiveExternalCatalog.client$lzycompute(HiveExternalCatalog.scala:66)at org.apache.spark.sql.hive.HiveExternalCatalog.client(HiveExternalCatalog.scala:65)at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply$mcZ$sp(HiveExternalCatalog.scala:194)at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)at org.apache.spark.sql.hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)... 70 more

Caused by: org.apache.hadoop.ipc.RemoteException: Cannot create directory /tmp/hive/root/1af1f42a-4233-4abd-b335-62a580f200e5. Name node is in safe mode.

The reported blocks 122 needs additional 5 blocks to reach the threshold 0.9990 of total blocks 127.

The number of live datanodes 1 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1366)at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4258)at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4233)at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:853)at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:600)at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:619)at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:975)at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2040)at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2036)at java.security.AccessController.doPrivileged(Native Method)at javax.security.auth.Subject.doAs(Subject.java:422)at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1656)at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2034)at org.apache.hadoop.ipc.Client.call(Client.java:1470)at org.apache.hadoop.ipc.Client.call(Client.java:1401)at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)at com.sun.proxy.$Proxy21.mkdirs(Unknown Source)at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.mkdirs(ClientNamenodeProtocolTranslatorPB.java:539)at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)at java.lang.reflect.Method.invoke(Method.java:498)at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)at com.sun.proxy.$Proxy22.mkdirs(Unknown Source)at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:2742)at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:2713)at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:870)at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:866)at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirsInternal(DistributedFileSystem.java:866)at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:859)at org.apache.hadoop.hive.ql.session.SessionState.createPath(SessionState.java:639)at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:574)at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:508)... 84 more

<console>:14: error: not found: value sparkimport spark.implicits._^

<console>:14: error: not found: value sparkimport spark.sql^

Welcome to____ __/ __/__ ___ _____/ /___\ \/ _ \/ _ `/ __/ '_//___/ .__/\_,_/_/ /_/\_\ version 2.2.0/_/Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_202)

Type in expressions to have them evaluated.

Type :help for more information.2. 执行:

NameNode启动时会进入该模式进行检测,检查数据块的完整性,处于该模式下的集群无法对HDFS进行操作,可以手动离开安全模式

hdfs dfsadmin -safemode leave而进入安全模式是

# hadoop dfsadmin -saftmode enter 获取当前安全模式的状态

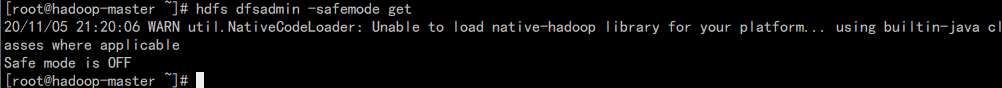

hdfs dfsadmin -safemode get