import socket
import os
import sys
import time
import requests
from bs4 import BeautifulSoup
from urllib.parse import urlparse

def get_ip(ip):
    data = socket.gethostbyname(ip)
    print(data)
    return data

'''
21/tcp   FTP       File Transfer Protocol
22/tcp   SSH       Secure login, file transfer (SCP), and port forwarding
23/tcp   Telnet    Insecure text communication
25/tcp   SMTP      Simple Mail Transfer Protocol (e-mail)
69/udp   TFTP      Trivial File Transfer Protocol
79/tcp   finger    Finger
80/tcp   HTTP      Hypertext Transfer Protocol (WWW)
88/tcp   Kerberos  Authenticating agent
110/tcp  POP3      Post Office Protocol (e-mail)
113/tcp  ident     Old identification server system
119/tcp  NNTP      Used for Usenet newsgroups
220/tcp  IMAP3
443/tcp  HTTPS     Used for securely transferring web pages
'''

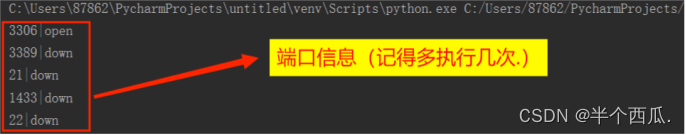

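def get_port(add):
    print(add)
    # Note: 69 is listed as UDP (TFTP) in the table above; a TCP connect only tests 69/tcp
    ports = ['21', '22', '23', '25', '69', '79', '80', '88', '110', '113', '119', '220', '443']
    for port in ports:
        # A socket cannot be reused reliably after a connect attempt, so open a new one per port
        server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        server.settimeout(1)  # avoid hanging on filtered ports
        result = server.connect_ex((add, int(port)))
        server.close()
        if result == 0:
            print(port + ":open")
        else:
            print(port + ":close")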

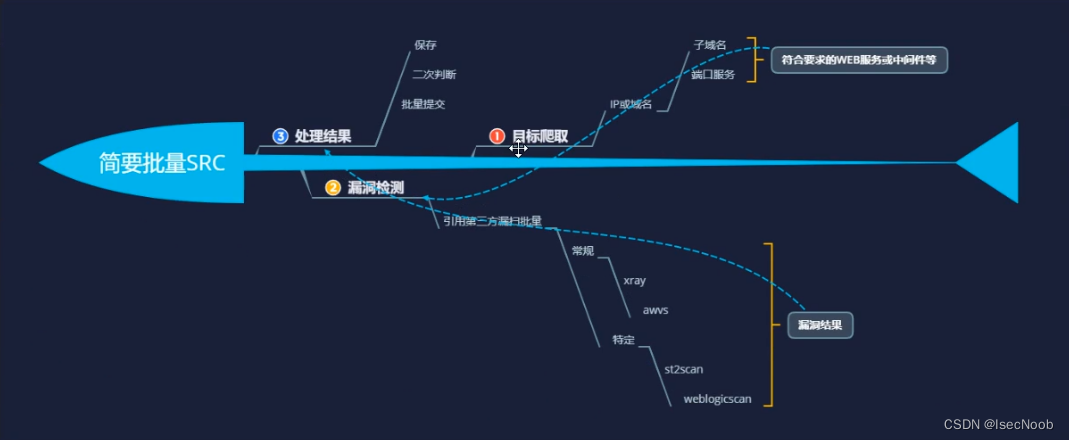

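def get_CDN(add):
    parm = 'nslookup ' + add
    result = os.popen(parm).read()
    # Rough heuristic: more than 8 dots in the nslookup output suggests several IP addresses were returned
    if result.count(".") > 8:
        print(add + " CDN detected")
    else:
        print(add + " no CDN detected")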
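Counting dots in `nslookup` output depends on the exact text the tool prints. A minimal alternative sketch, assuming that several distinct A records are a usable hint of a CDN (they can equally indicate plain load balancing), resolves the name with `socket.getaddrinfo` and counts unique addresses. The names `resolve_ipv4`, `looks_like_cdn`, and `threshold` are illustrative and not part of the original script.

```python
import socket


def resolve_ipv4(host):
    """Return the sorted, de-duplicated IPv4 addresses that host resolves to."""
    infos = socket.getaddrinfo(host, None, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port)
    return sorted({info[4][0] for info in infos})


def looks_like_cdn(addresses, threshold=2):
    """Heuristic only: `threshold` or more distinct addresses hint at a CDN."""
    return len(set(addresses)) >= threshold
```

A call such as `looks_like_cdn(resolve_ipv4(add))` could stand in for the dot-count test, with the same caveat that the result is a guess rather than proof.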

def zym_list_check(url):
    # Each line of zym_list.txt is used as a subdomain prefix for url
    with open('zym_list.txt') as f:
        for zym_list in f:
            zym_list = zym_list.replace('\n', "")
            zym_url = zym_list + "." + url
            try:
                ip = socket.gethostbyname(zym_url)
                print(zym_url + "-->" + ip)
                time.sleep(0.1)
            except Exception as e:
                # ip is not set when resolution fails, so it must not be printed here
                print(zym_url + "-->" + "error")
                time.sleep(0.1)

def bing_search(site, pages):
    Subdomain = []
    headers = {
        'Accept': '*/*',
        'Accept-Language': 'en-US,en;q=0.8',
        'Cache-Control': 'max-age=0',
        'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36',
        'Connection': 'keep-alive',
        'Referer': 'http://www.baidu.com/'
    }
    for i in range(1, int(pages) + 1):
        url = "https://cn.bing.com/search?q=site%3A" + site + "&go=Search&qs=ds&first=" + str((int(i) - 1) * 10) + "&FORM=PERE"
        conn = requests.session()
        conn.get('http://cn.bing.com', headers=headers)
        html = conn.get(url, stream=True, headers=headers)
        soup = BeautifulSoup(html.content, 'html.parser')
        job_bt = soup.findAll('h2')
        # Use a separate loop variable so the page counter i is not shadowed
        for item in job_bt:
            # Skip headings that carry no link
            if item.a is None or not item.a.get('href'):
                continue
            link = item.a.get('href')
            domain = str(urlparse(link).scheme + "://" + urlparse(link).netloc)
            if domain in Subdomain:
                pass
            else:
                Subdomain.append(domain)
                print(domain)
    # Return the collected list so the caller's assignment receives it
    return Subdomain


if __name__ == '__main__':
    if len(sys.argv) == 3:
        site = sys.argv[1]
        page = sys.argv[2]
    else:
        print("usage:%s baidu.com 10" % sys.argv[0])
        sys.exit(-1)
    Subdomain = bing_search(site, page)
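The de-duplication step inside `bing_search` can be tested without any network access if it is pulled into its own function. The sketch below is one possible way to do that; `unique_origins` is a hypothetical helper name, and skipping links that lack a scheme or host is an added assumption rather than behavior of the original script.

```python
from urllib.parse import urlparse


def unique_origins(links):
    """Return scheme://host for each link, in first-seen order, without duplicates."""
    seen = set()
    origins = []
    for link in links:
        parts = urlparse(link)
        if not parts.scheme or not parts.netloc:
            continue  # skip relative or malformed links
        origin = parts.scheme + "://" + parts.netloc
        if origin not in seen:
            seen.add(origin)
            origins.append(origin)
    return origins
```

Tracking seen values in a set keeps each membership check constant-time, while the separate list preserves the order in which hosts first appeared.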