Environment

KubeSphere: v3.3.1

Docker: 20.10.8

Fluent-Bit: 2.0.6-2.0.8

ES + Kibana: 7.9.3

Sample Docker logs

{"log":"2023-01-10 11:32:50.021 - INFO --- [scheduling-1] traceId: p6spy : 1|conn-0|statement|SELECT fd_id AS id,fd_user_id AS userId,fd_specific_user AS specificUser,fd_home_assessment AS homeAssessment,fd_home_assessment_time AS homeAssessmentTime,fd_end_home_assessment AS endHomeAssessment,fd_end_home_assessment_time AS endHomeAssessmentTime,fd_daily_assessment AS dailyAssessment,fd_daily_count AS dailyCount,fd_daily_assessment_time AS dailyAssessmentTime,fd_sort AS sort,fd_delete_flag AS deleteFlag,fd_update_time AS updateTime,fd_create_time AS createTime,fd_version AS version,fd_org_id AS orgId FROM t_patient_assessment WHERE (fd_daily_assessment = 1) \n","stream":"stdout","time":"2023-01-10T03:32:50.021311904Z"}

{"log":"2023-01-10 11:32:50.022 - INFO --- [scheduling-1] traceId: com.gjwlyy.covid.core.job.DailyReminderJob : \u003c\u003c\u003c 当前是用户1gkfb79bq39pe8mi37jknh81crt5k82m隔离的-23天 \n","stream":"stdout","time":"2023-01-10T03:32:50.023079165Z"}

{"log":"2023-01-10 11:32:50.025 - ERROR --- [scheduling-1] traceId: org.springframework.scheduling.support.TaskUtils$LoggingErrorHandler : Unexpected error occurred in scheduled task java.lang.NumberFormatException: For input string: \"[{\"relation\":\"or\",\"rule\":\"=\",\"value\":\"0\"}]\"\n","stream":"stdout","time":"2023-01-10T03:32:50.025703129Z"}

{"log":"\u0009at java.lang.NumberFormatException.forInputString(NumberFormatException.java:65)\n","stream":"stdout","time":"2023-01-10T03:32:50.025727938Z"}

{"log":"\u0009at java.lang.Long.parseLong(Long.java:589)\n","stream":"stdout","time":"2023-01-10T03:32:50.025733862Z"}

{"log":"\u0009at java.lang.Long.parseLong(Long.java:631)\n","stream":"stdout","time":"2023-01-10T03:32:50.025737051Z"}

{"log":"\u0009at com.gjwlyy.covid.core.job.DailyReminderJob.lambda$dealPatientDailyJob$1(DailyReminderJob.java:116)\n","stream":"stdout","time":"2023-01-10T03:32:50.025740047Z"}

{"log":"\u0009at java.util.ArrayList.forEach(ArrayList.java:1257)\n","stream":"stdout","time":"2023-01-10T03:32:50.025743184Z"}

{"log":"\u0009at com.gjwlyy.covid.core.job.DailyReminderJob.lambda$dealPatientDailyJob$2(DailyReminderJob.java:110)\n","stream":"stdout","time":"2023-01-10T03:32:50.025747023Z"}

{"log":"\u0009at java.util.ArrayList.forEach(ArrayList.java:1257)\n","stream":"stdout","time":"2023-01-10T03:32:50.025750286Z"}

{"log":"\u0009at com.gjwlyy.covid.core.job.DailyReminderJob.lambda$dealPatientDailyJob$3(DailyReminderJob.java:109)\n","stream":"stdout","time":"2023-01-10T03:32:50.025753216Z"}

{"log":"\u0009at java.util.ArrayList.forEach(ArrayList.java:1257)\n","stream":"stdout","time":"2023-01-10T03:32:50.025756256Z"}

{"log":"\u0009at com.gjwlyy.covid.core.job.DailyReminderJob.dealPatientDailyJob(DailyReminderJob.java:106)\n","stream":"stdout","time":"2023-01-10T03:32:50.025759298Z"}

{"log":"\u0009at com.gjwlyy.covid.core.job.DailyReminderJob.dailyJob(DailyReminderJob.java:61)\n","stream":"stdout","time":"2023-01-10T03:32:50.025762317Z"}

{"log":"\u0009at com.gjwlyy.covid.core.job.DailyReminderJob$$FastClassBySpringCGLIB$$34fb91e0.invoke(\u003cgenerated\u003e)\n","stream":"stdout","time":"2023-01-10T03:32:50.025765223Z"}

{"log":"\u0009at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218)\n","stream":"stdout","time":"2023-01-10T03:32:50.025768376Z"}

{"log":"\u0009at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:688)\n","stream":"stdout","time":"2023-01-10T03:32:50.025771336Z"}

{"log":"\u0009at com.gjwlyy.covid.core.job.DailyReminderJob$$EnhancerBySpringCGLIB$$7af1cd70.dailyJob(\u003cgenerated\u003e)\n","stream":"stdout","time":"2023-01-10T03:32:50.025774261Z"}

{"log":"\u0009at sun.reflect.GeneratedMethodAccessor167.invoke(Unknown Source)\n","stream":"stdout","time":"2023-01-10T03:32:50.025777303Z"}

{"log":"\u0009at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)\n","stream":"stdout","time":"2023-01-10T03:32:50.025780146Z"}

{"log":"\u0009at java.lang.reflect.Method.invoke(Method.java:498)\n","stream":"stdout","time":"2023-01-10T03:32:50.025783075Z"}

{"log":"\u0009at org.springframework.scheduling.support.ScheduledMethodRunnable.run(ScheduledMethodRunnable.java:84)\n","stream":"stdout","time":"2023-01-10T03:32:50.025785872Z"}

{"log":"\u0009at org.springframework.scheduling.support.DelegatingErrorHandlingRunnable.run(DelegatingErrorHandlingRunnable.java:54)\n","stream":"stdout","time":"2023-01-10T03:32:50.025788727Z"}

{"log":"\u0009at org.springframework.scheduling.concurrent.ReschedulingRunnable.run(ReschedulingRunnable.java:93)\n","stream":"stdout","time":"2023-01-10T03:32:50.025798209Z"}

{"log":"\u0009at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)\n","stream":"stdout","time":"2023-01-10T03:32:50.02580237Z"}

{"log":"\u0009at java.util.concurrent.FutureTask.run(FutureTask.java:266)\n","stream":"stdout","time":"2023-01-10T03:32:50.02580537Z"}

{"log":"\u0009at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)\n","stream":"stdout","time":"2023-01-10T03:32:50.025808239Z"}

{"log":"\u0009at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)\n","stream":"stdout","time":"2023-01-10T03:32:50.025811169Z"}

{"log":"\u0009at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)\n","stream":"stdout","time":"2023-01-10T03:32:50.025814181Z"}

{"log":"\u0009at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)\n","stream":"stdout","time":"2023-01-10T03:32:50.025817118Z"}

{"log":"\u0009at java.lang.Thread.run(Thread.java:748)\n","stream":"stdout","time":"2023-01-10T03:32:50.025820003Z"}

{"log":"\n","stream":"stdout","time":"2023-01-10T03:32:50.025822812Z"}

{"log":"2023-01-10 11:33:00.001 - INFO --- [scheduling-1] traceId: com.gjwlyy.covid.core.job.DailyReminderJob : \u003c\u003c\u003c dailyJob 患者日程定时任务开始.请求参数 \n","stream":"stdout","time":"2023-01-10T03:33:00.001296014Z"}

{"log":"2023-01-10 11:33:00.007 - INFO --- [scheduling-1] traceId: p6spy : 1|conn-0|statement|SELECT fd_id AS id,fd_schedule_name AS scheduleName,fd_schedule_trigger_time AS scheduleTriggerTime,fd_status AS status,fd_sort AS sort,fd_delete_flag AS deleteFlag,fd_update_time AS updateTime,fd_create_time AS createTime,fd_version AS version,fd_org_id AS orgId FROM t_management_schedule WHERE (fd_delete_flag = false AND fd_status = true) \n","stream":"stdout","time":"2023-01-10T03:33:00.007959022Z"}

{"log":"2023-01-10 11:33:00.008 - INFO --- [scheduling-1] traceId: com.gjwlyy.covid.core.job.DailyReminderJob : \u003c\u003c\u003c 定时任务涉及的管理日程记录id:[100099] \n","stream":"stdout","time":"2023-01-10T03:33:00.008512022Z"}

Install Fluent-Bit

kubectl create ns fluent-bit

helm repo add fluent https://fluent.github.io/helm-charts

helm upgrade --install fluent-bit fluent/fluent-bit -n fluent-bit

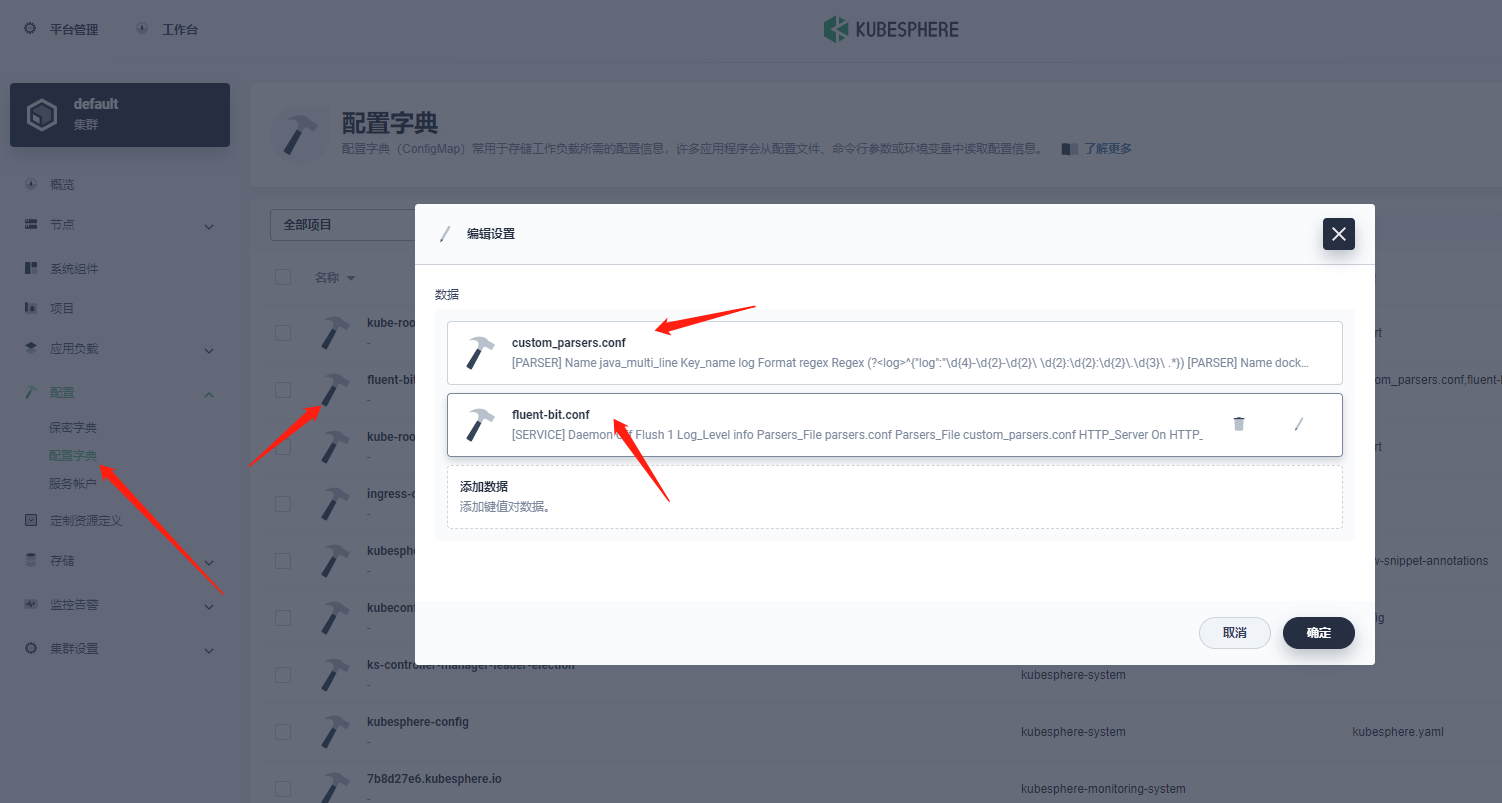

Configure Fluent-Bit

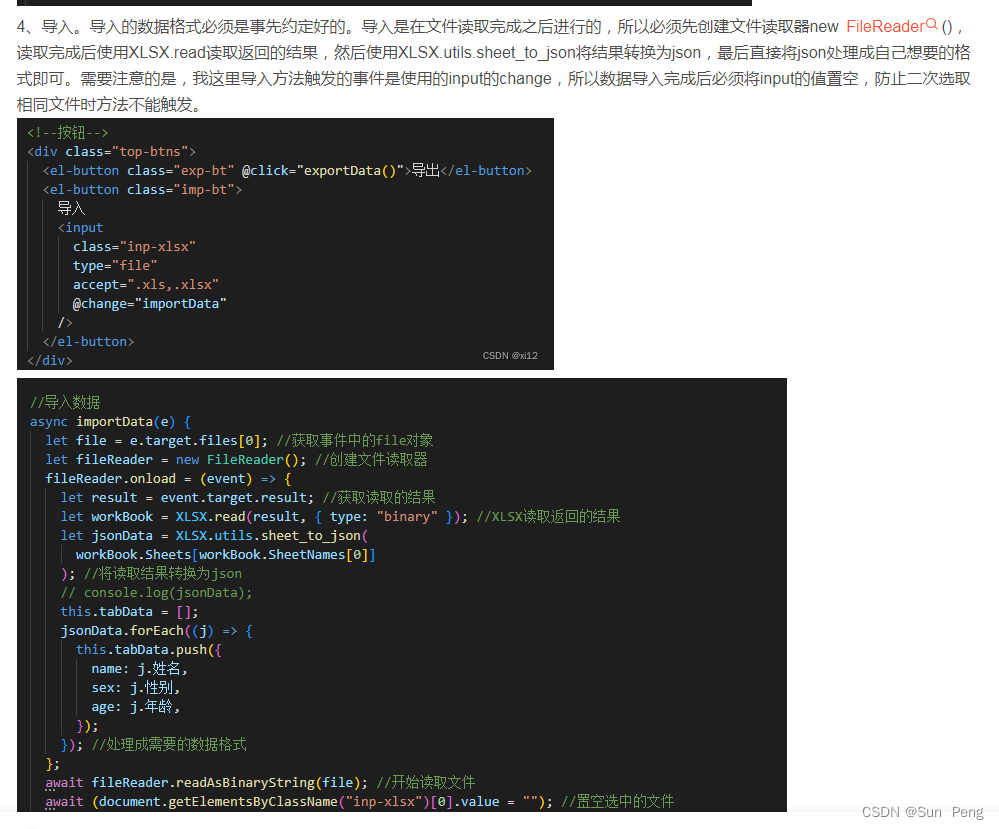

Create the file custom_parsers.conf

[PARSER]
    # Parser name (any name you like)
    Name        java_multi_line
    # The default Key_name is log
    Key_name    log
    # Fixed value
    Format      regex
    # Matches a log field that begins with a timestamp, e.g. 2023-01-09 03:59:24.617
    Regex       (?<log>^{"log":"\d{4}-\d{2}-\d{2}\ \d{2}:\d{2}:\d{2}\.\d{3}\ .*})
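To see why the java_multi_line pattern separates log records from stack-trace lines, here is a minimal Python sketch of the idea. It is a simplified model, not Fluent Bit's actual implementation: a raw Docker JSON line that matches the pattern starts a new record, and every other line is appended to the record before it. The sample lines are shortened, hypothetical variants of the logs shown earlier, and the helper names `group_records` and `merge_group` are made up for illustration.

```python
import json
import re

# Python spelling of the java_multi_line idea: a raw Docker JSON line whose
# "log" value begins with a timestamp such as 2023-01-09 03:59:24.617.
# Python does not accept the (?<log>...) syntax, so the named group is dropped.
START_OF_RECORD = re.compile(
    r'^\{"log":"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3} .*\}'
)


def group_records(raw_lines):
    """Group raw Docker JSON lines: a matching line opens a new group."""
    groups = []
    for line in raw_lines:
        if START_OF_RECORD.match(line) or not groups:
            groups.append([line])
        else:
            groups[-1].append(line)
    return groups


def merge_group(group):
    """Decode each JSON line and concatenate the "log" values."""
    return "".join(json.loads(line)["log"] for line in group)


# Shortened, hypothetical sample lines modeled on the logs above.
raw_lines = [
    r'{"log":"2023-01-10 11:32:50.025 - ERROR --- [scheduling-1] java.lang.NumberFormatException\n","stream":"stdout","time":"2023-01-10T03:32:50.025703129Z"}',
    r'{"log":"\u0009at java.lang.Long.parseLong(Long.java:589)\n","stream":"stdout","time":"2023-01-10T03:32:50.025733862Z"}',
    r'{"log":"\u0009at java.lang.Thread.run(Thread.java:748)\n","stream":"stdout","time":"2023-01-10T03:32:50.025820003Z"}',
    r'{"log":"2023-01-10 11:33:00.001 - INFO --- [scheduling-1] dailyJob started\n","stream":"stdout","time":"2023-01-10T03:33:00.001296014Z"}',
]

messages = [merge_group(group) for group in group_records(raw_lines)]
for message in messages:
    print(repr(message))
```

In this sketch the ERROR line and its two "at ..." lines end up in one message, while the following INFO line starts a new one. This is the grouping the parser is meant to produce for Java stack traces.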

[PARSER]
    Name        docker_no_time
    Format      json
    Time_Keep   Off
    Time_Key    time
    Time_Format %Y-%m-%dT%H:%M:%S.%L

Create the file fluent-bit.conf

[SERVICE]
    Daemon        Off
    Flush         1
    Log_Level     info
    Parsers_File  parsers.conf
    Parsers_File  custom_parsers.conf
    HTTP_Server   On
    HTTP_Listen   0.0.0.0
    HTTP_Port     2020
    Health_Check  On

[INPUT]
    Name                tail
    # Path of the log files to collect
    Path                /var/log/containers/*.log
    # Logs to exclude from collection
    Exclude_Path        /var/log/containers/*_kube*-system_*.log
    # If enabled, the plugin recombines split Docker log lines before passing them
    # to any parser configured above. This mode cannot be used together with
    # Multiline mode. (Important)
    Docker_Mode         On
    # Wait time, in seconds, to process queued multiline messages (Important)
    Docker_Mode_Flush   5
    # Name of the parser to use, as defined in custom_parsers.conf (Important)
    Docker_Mode_Parser  java_multi_line
    Parser              docker
    # multiline.parser  docker, cri
    Tag                 kube.*
    Mem_Buf_Limit       5MB
    Skip_Long_Lines     On
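To check which container log files the Path and Exclude_Path patterns select, here is a rough Python sketch using the standard `fnmatch` module. Python's glob matching is only an approximation of Fluent Bit's own matching, and the file names below are hypothetical examples of the `<pod>_<namespace>_<container>-<id>.log` layout.

```python
from fnmatch import fnmatchcase

PATH = "/var/log/containers/*.log"
EXCLUDE_PATH = "/var/log/containers/*_kube*-system_*.log"


def is_collected(filename):
    """True if the file matches Path and is not hit by Exclude_Path."""
    return fnmatchcase(filename, PATH) and not fnmatchcase(filename, EXCLUDE_PATH)


# Hypothetical file names: <pod>_<namespace>_<container>-<id>.log
samples = [
    "/var/log/containers/covid-core-7d9f_covid_app-0123abcd.log",
    "/var/log/containers/coredns-abc_kube-system_coredns-0123abcd.log",
    "/var/log/containers/ks-apiserver-abc_kubesphere-system_ks-apiserver-0123abcd.log",
]

collected = [name for name in samples if is_collected(name)]
print(collected)
```

Under these assumptions only the application pod in the `covid` namespace is collected. The `kube*-system` part of the pattern covers both `kube-system` and `kubesphere-system`.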

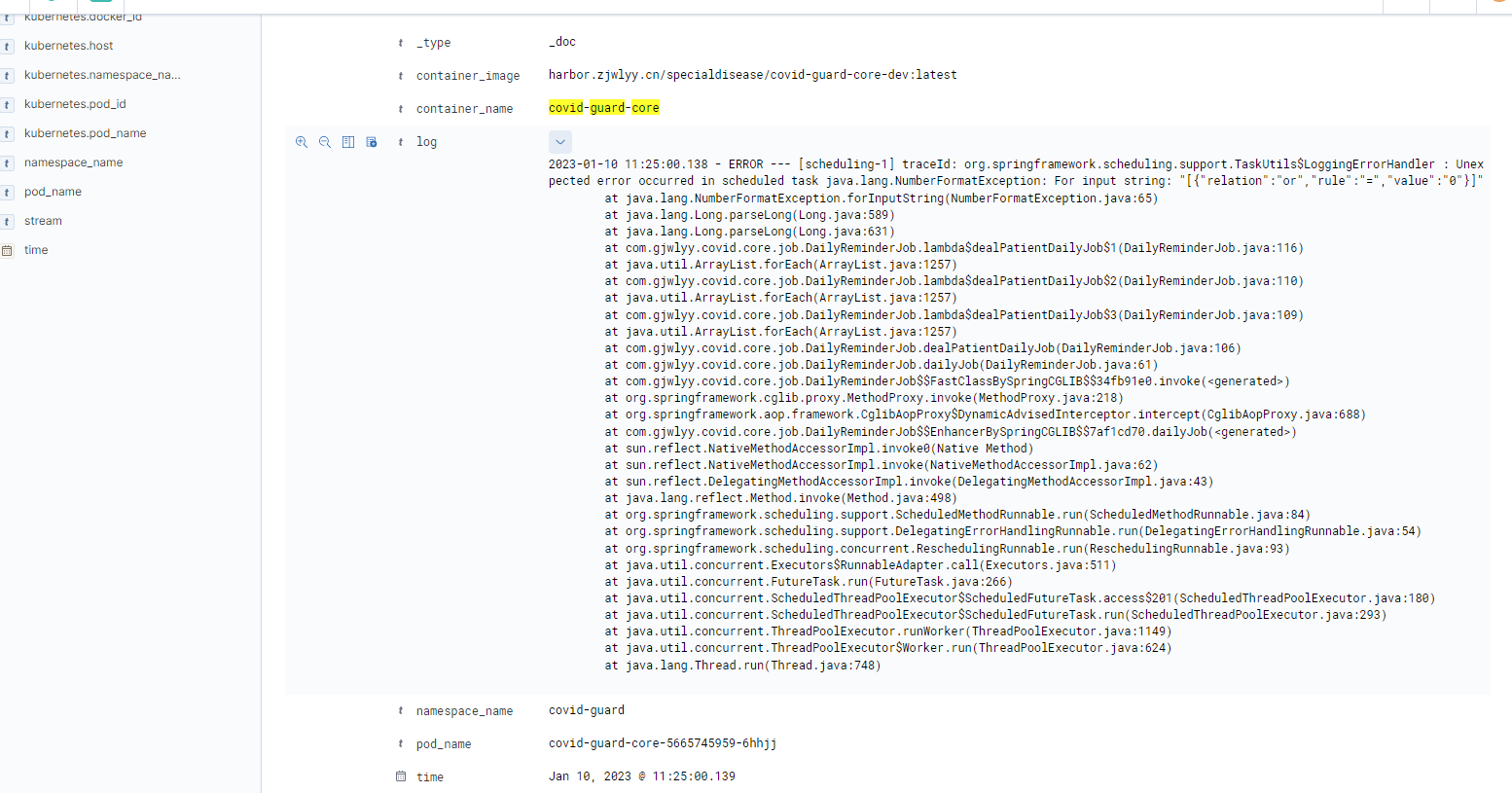
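The nest and modify filters configured below reshape each record before it is sent to Elasticsearch. As a rough illustration only, the following Python sketch imitates that reshaping on a plain dictionary. The functions `lift` and `modify` and the sample record are hypothetical and do not reproduce Fluent Bit's internals.

```python
def lift(record, nested_under="kubernetes", add_prefix="kubernetes_"):
    """Imitate 'Operation lift': move the nested map's keys up one level, prefixed."""
    out = {k: v for k, v in record.items() if k != nested_under}
    for key, value in record.get(nested_under, {}).items():
        out[add_prefix + key] = value
    return out


REMOVE = [
    "stream",
    "kubernetes_docker_id",
    "kubernetes_pod_id",
    "kubernetes_host",
    "kubernetes_container_hash",
]
RENAME = {
    "kubernetes_namespace_name": "namespace_name",
    "kubernetes_pod_name": "pod_name",
    "kubernetes_container_name": "container_name",
    "kubernetes_container_image": "container_image",
}


def modify(record):
    """Imitate the modify filter: drop the Remove keys, then apply the Rename rules."""
    out = {k: v for k, v in record.items() if k not in REMOVE}
    for old, new in RENAME.items():
        # Rename only when the source key exists and the target key does not.
        if old in out and new not in out:
            out[new] = out.pop(old)
    return out


# Hypothetical record as it might look after the kubernetes filter.
record = {
    "log": "2023-01-10 11:33:00.001 - INFO --- dailyJob started\n",
    "stream": "stdout",
    "kubernetes": {
        "pod_name": "covid-core-0",
        "namespace_name": "covid",
        "container_name": "app",
        "container_image": "example/covid-core:1.0",
        "host": "node1",
        "docker_id": "abc",
        "pod_id": "def",
        "container_hash": "sha256:123",
    },
}

result = modify(lift(record))
print(sorted(result))
```

The resulting record keeps only `log` plus the four renamed fields, which keeps the documents stored in Elasticsearch flat and small.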

[FILTER]
    Name                 kubernetes
    Match                kube.*
    # Do not collect annotations
    Annotations          Off
    # Do not collect labels
    Labels               Off
    # When enabled, checks whether the log field content is a JSON string map;
    # if so, the map fields are appended as part of the log structure.
    Merge_Log            Off
    Keep_Log             Off
    # Allow Kubernetes Pods to suggest a predefined parser
    K8S-Logging.Parser   On
    # Allow Kubernetes Pods to exclude their logs from the log processor
    K8S-Logging.Exclude  On

[FILTER]
    # Operate on nested data
    Name          nest
    Match         kube.*
    # In lift mode, the key/value pairs of the specified map are extracted
    # and placed one level up in the record
    Operation     lift
    # Name of the map to lift
    Nested_under  kubernetes
    # Prefix to add to the lifted keys
    Add_prefix    kubernetes_

[FILTER]
    Name    modify
    Match   kube.*
    # Remove the stream field
    Remove  stream
    Remove  kubernetes_docker_id
    Remove  kubernetes_pod_id
    Remove  kubernetes_host
    Remove  kubernetes_container_hash
    # Rename fields
    Rename  kubernetes_namespace_name   namespace_name
    Rename  kubernetes_pod_name         pod_name
    Rename  kubernetes_container_name   container_name
    Rename  kubernetes_container_image  container_image

[OUTPUT]
    Name             es
    Host             192.168.31.253
    Port             9200
    HTTP_User        elastic
    HTTP_Passwd      6ygXXXXXXXXXXXXX
    Logstash_Format  On
    # Replace "." with "_"
    Replace_Dots     On
    # Index name prefix
    Logstash_Prefix  kubesphere
    Retry_Limit      False

Results