1.隐马尔可夫模型是关于时序的概率模型,描述由一个隐藏的马尔可夫链随机生成不可观测的状态的序列,再由各个状态随机生成一个观测而产生观测的序列的过程。

隐马尔可夫模型由初始状态概率向量 π \pi π、状态转移概率矩阵 A A A和观测概率矩阵 B B B决定。因此,隐马尔可夫模型可以写成 λ = ( A , B , π ) \lambda=(A, B, \pi) λ=(A,B,π)。

隐马尔可夫模型是一个生成模型,表示状态序列和观测序列的联合分布,但是状态序列是隐藏的,不可观测的。

隐马尔可夫模型可以用于标注,这时状态对应着标记。标注问题是给定观测序列预测其对应的标记序列。

2.概率计算问题。给定模型 λ = ( A , B , π ) \lambda=(A, B, \pi) λ=(A,B,π)和观测序列 O = ( o 1 , o 2 , … , o T ) O=(o_1,o_2,…,o_T) O=(o1,o2,…,oT),计算在模型 λ \lambda λ下观测序列 O O O出现的概率 P ( O ∣ λ ) P(O|\lambda) P(O∣λ)。前向-后向算法是通过递推地计算前向-后向概率可以高效地进行隐马尔可夫模型的概率计算。

3.学习问题。已知观测序列 O = ( o 1 , o 2 , … , o T ) O=(o_1,o_2,…,o_T) O=(o1,o2,…,oT),估计模型 λ = ( A , B , π ) \lambda=(A, B, \pi) λ=(A,B,π)参数,使得在该模型下观测序列概率 P ( O ∣ λ ) P(O|\lambda) P(O∣λ)最大。即用极大似然估计的方法估计参数。Baum-Welch算法,也就是EM算法可以高效地对隐马尔可夫模型进行训练。它是一种非监督学习算法。

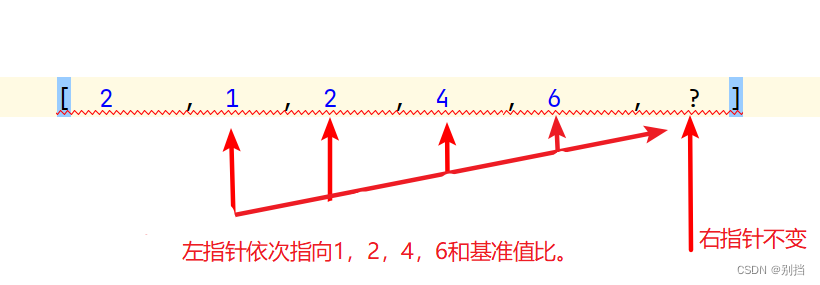

4.预测问题。已知模型 λ = ( A , B , π ) \lambda=(A, B, \pi) λ=(A,B,π)和观测序列 O = ( o 1 , o 2 , … , o T ) O=(o_1,o_2,…,o_T) O=(o1,o2,…,oT),求对给定观测序列条件概率 P ( I ∣ O ) P(I|O) P(I∣O)最大的状态序列 I = ( i 1 , i 2 , … , i T ) I=(i_1,i_2,…,i_T) I=(i1,i2,…,iT)。维特比算法应用动态规划高效地求解最优路径,即概率最大的状态序列。

python">import numpy as npclass HiddenMarkov:def __init__(self):self.alphas = Noneself.forward_P = Noneself.betas = Noneself.backward_P = None# 前向算法def forward(self, Q, V, A, B, O, PI):# 状态序列的大小N = len(Q)# 观测序列的大小M = len(O)# 初始化前向概率alpha值alphas = np.zeros((N, M))# 时刻数=观测序列数T = M# 遍历每一个时刻,计算前向概率alpha值for t in range(T):# 得到序列对应的索引indexOfO = V.index(O[t])# 遍历状态序列for i in range(N):# 初始化alpha初值if t == 0:# 公式(10.15)alphas[i][t] = PI[t][i] * B[i][indexOfO]print('alpha1(%d) = p%db%db(o1) = %f' %(i + 1, i, i, alphas[i][t]))else:# 公式(10.16)alphas[i][t] = np.dot([alpha[t - 1] for alpha in alphas],[a[i] for a in A]) * B[i][indexOfO]print('alpha%d(%d) = [sigma alpha%d(i)ai%d]b%d(o%d) = %f' %(t + 1, i + 1, t - 1, i, i, t, alphas[i][t]))# 公式(10.17)self.forward_P = np.sum([alpha[M - 1] for alpha in alphas])self.alphas = alphas# 后向算法def backward(self, Q, V, A, B, O, PI):# 状态序列的大小N = len(Q)# 观测序列的大小M = len(O)# 初始化后向概率beta值,公式(10.19)betas = np.ones((N, M))#for i in range(N):print('beta%d(%d) = 1' % (M, i + 1))# 对观测序列逆向遍历for t in range(M - 2, -1, -1):# 得到序列对应的索引indexOfO = V.index(O[t + 1])# 遍历状态序列for i in range(N):# 公式(10.20)betas[i][t] = np.dot(np.multiply(A[i], [b[indexOfO] for b in B]),[beta[t + 1] for beta in betas])realT = t + 1realI = i + 1print('beta%d(%d) = sigma[a%djbj(o%d)beta%d(j)] = (' %(realT, realI, realI, realT + 1, realT + 1),end='')for j in range(N):print("%.2f * %.2f * %.2f + " %(A[i][j], B[j][indexOfO], betas[j][t + 1]),end='')print("0) = %.3f" % betas[i][t])# 取出第一个值indexOfO = V.index(O[0])self.betas = betas# 公式(10.21)P = np.dot(np.multiply(PI, [b[indexOfO] for b in B]),[beta[0] for beta in betas])self.backward_P = Pprint("P(O|lambda) = ", end="")for i in range(N):print("%.1f * %.1f * %.5f + " %(PI[0][i], B[i][indexOfO], betas[i][0]),end="")print("0 = %f" % P)# 维特比算法def viterbi(self, Q, V, A, B, O, PI):# 状态序列的大小N = len(Q)# 观测序列的大小M = len(O)# 初始化daltasdeltas = np.zeros((N, M))# 初始化psispsis = np.zeros((N, M))# 初始化最优路径矩阵,该矩阵维度与观测序列维度相同I = np.zeros((1, M))# 遍历观测序列for t in range(M):# 递推从t=2开始realT = t + 1# 得到序列对应的索引indexOfO = V.index(O[t])for i in range(N):realI = i + 1if t == 0:# 算法10.5 步骤(1)deltas[i][t] = PI[0][i] * B[i][indexOfO]psis[i][t] = 0print('delta1(%d) = pi%d * b%d(o1) = %.2f * %.2f = %.2f' %(realI, realI, realI, PI[0][i], B[i][indexOfO],deltas[i][t]))print('psis1(%d) = 0' % (realI))else:# 算法10.5 步骤(2)deltas[i][t] = np.max(np.multiply([delta[t - 1] for delta in deltas],[a[i] for a in A])) * B[i][indexOfO]print('delta%d(%d) = max[delta%d(j)aj%d]b%d(o%d) = %.2f * %.2f = %.5f'% (realT, realI, realT - 1, realI, realI, realT,np.max(np.multiply([delta[t - 1] for delta in deltas],[a[i] for a in A])), B[i][indexOfO],deltas[i][t]))psis[i][t] = np.argmax(np.multiply([delta[t - 1] for delta in deltas],[a[i] for a in A]))print('psis%d(%d) = argmax[delta%d(j)aj%d] = %d' %(realT, realI, realT - 1, realI, psis[i][t]))#print(deltas)#print(psis)# 得到最优路径的终结点I[0][M - 1] = np.argmax([delta[M - 1] for delta in deltas])print('i%d = argmax[deltaT(i)] = %d' % (M, I[0][M - 1] + 1))# 递归由后向前得到其他结点for t in range(M - 2, -1, -1):I[0][t] = psis[int(I[0][t + 1])][t + 1]print('i%d = psis%d(i%d) = %d' %(t + 1, t + 2, t + 2, I[0][t] + 1))# 输出最优路径print('最优路径是:', "->".join([str(int(i + 1)) for i in I[0]]))

习题10.1

给定盒子和球组成的隐马尔可夫模型 λ = ( A , B , π ) \lambda=(A,B,\pi) λ=(A,B,π),其中, A = [ 0.5 0.2 0.3 0.3 0.5 0.2 0.2 0.3 0.5 ] , B = [ 0.5 0.5 0.4 0.6 0.7 0.3 ] , π = ( 0.2 , 0.4 , 0.4 ) T A=\left[\begin{array}{ccc}0.5&0.2&0.3\\0.3&0.5&0.2\\0.2&0.3&0.5\end{array}\right], \quad B=\left[\begin{array}{cc}0.5&0.5\\0.4&0.6\\0.7&0.3\end{array}\right], \quad \pi=(0.2,0.4,0.4)^T A= 0.50.30.20.20.50.30.30.20.5 ,B= 0.50.40.70.50.60.3 ,π=(0.2,0.4,0.4)T设 T = 4 , O = ( 红 , 白 , 红 , 白 ) T=4,O=(红,白,红,白) T=4,O=(红,白,红,白),试用后向算法计算 P ( O ∣ λ ) P(O|\lambda) P(O∣λ)。

解答:

python">Q = [1, 2, 3]

V = ['红', '白']

A = [[0.5, 0.2, 0.3], [0.3, 0.5, 0.2], [0.2, 0.3, 0.5]]

B = [[0.5, 0.5], [0.4, 0.6], [0.7, 0.3]]

# O = ['红', '白', '红', '红', '白', '红', '白', '白']

O = ['红', '白', '红', '白']

PI = [[0.2, 0.4, 0.4]]

HMM = HiddenMarkov()

# HMM.forward(Q, V, A, B, O, PI)

HMM.backward(Q, V, A, B, O, PI)

HMM.viterbi(Q, V, A, B, O, PI)

beta4(1) = 1

beta4(2) = 1

beta4(3) = 1

beta3(1) = sigma[a1jbj(o4)beta4(j)] = (0.50 * 0.50 * 1.00 + 0.20 * 0.60 * 1.00 + 0.30 * 0.30 * 1.00 + 0) = 0.460

beta3(2) = sigma[a2jbj(o4)beta4(j)] = (0.30 * 0.50 * 1.00 + 0.50 * 0.60 * 1.00 + 0.20 * 0.30 * 1.00 + 0) = 0.510

beta3(3) = sigma[a3jbj(o4)beta4(j)] = (0.20 * 0.50 * 1.00 + 0.30 * 0.60 * 1.00 + 0.50 * 0.30 * 1.00 + 0) = 0.430

beta2(1) = sigma[a1jbj(o3)beta3(j)] = (0.50 * 0.50 * 0.46 + 0.20 * 0.40 * 0.51 + 0.30 * 0.70 * 0.43 + 0) = 0.246

beta2(2) = sigma[a2jbj(o3)beta3(j)] = (0.30 * 0.50 * 0.46 + 0.50 * 0.40 * 0.51 + 0.20 * 0.70 * 0.43 + 0) = 0.231

beta2(3) = sigma[a3jbj(o3)beta3(j)] = (0.20 * 0.50 * 0.46 + 0.30 * 0.40 * 0.51 + 0.50 * 0.70 * 0.43 + 0) = 0.258

beta1(1) = sigma[a1jbj(o2)beta2(j)] = (0.50 * 0.50 * 0.25 + 0.20 * 0.60 * 0.23 + 0.30 * 0.30 * 0.26 + 0) = 0.112

beta1(2) = sigma[a2jbj(o2)beta2(j)] = (0.30 * 0.50 * 0.25 + 0.50 * 0.60 * 0.23 + 0.20 * 0.30 * 0.26 + 0) = 0.122

beta1(3) = sigma[a3jbj(o2)beta2(j)] = (0.20 * 0.50 * 0.25 + 0.30 * 0.60 * 0.23 + 0.50 * 0.30 * 0.26 + 0) = 0.105

P(O|lambda) = 0.2 * 0.5 * 0.11246 + 0.4 * 0.4 * 0.12174 + 0.4 * 0.7 * 0.10488 + 0 = 0.060091

delta1(1) = pi1 * b1(o1) = 0.20 * 0.50 = 0.10

psis1(1) = 0

delta1(2) = pi2 * b2(o1) = 0.40 * 0.40 = 0.16

psis1(2) = 0

delta1(3) = pi3 * b3(o1) = 0.40 * 0.70 = 0.28

psis1(3) = 0

delta2(1) = max[delta1(j)aj1]b1(o2) = 0.06 * 0.50 = 0.02800

psis2(1) = argmax[delta1(j)aj1] = 2

delta2(2) = max[delta1(j)aj2]b2(o2) = 0.08 * 0.60 = 0.05040

psis2(2) = argmax[delta1(j)aj2] = 2

delta2(3) = max[delta1(j)aj3]b3(o2) = 0.14 * 0.30 = 0.04200

psis2(3) = argmax[delta1(j)aj3] = 2

delta3(1) = max[delta2(j)aj1]b1(o3) = 0.02 * 0.50 = 0.00756

psis3(1) = argmax[delta2(j)aj1] = 1

delta3(2) = max[delta2(j)aj2]b2(o3) = 0.03 * 0.40 = 0.01008

psis3(2) = argmax[delta2(j)aj2] = 1

delta3(3) = max[delta2(j)aj3]b3(o3) = 0.02 * 0.70 = 0.01470

psis3(3) = argmax[delta2(j)aj3] = 2

delta4(1) = max[delta3(j)aj1]b1(o4) = 0.00 * 0.50 = 0.00189

psis4(1) = argmax[delta3(j)aj1] = 0

delta4(2) = max[delta3(j)aj2]b2(o4) = 0.01 * 0.60 = 0.00302

psis4(2) = argmax[delta3(j)aj2] = 1

delta4(3) = max[delta3(j)aj3]b3(o4) = 0.01 * 0.30 = 0.00220

psis4(3) = argmax[delta3(j)aj3] = 2

i4 = argmax[deltaT(i)] = 2

i3 = psis4(i4) = 2

i2 = psis3(i3) = 2

i1 = psis2(i2) = 3

最优路径是: 3->2->2->2

习题10.2

给定盒子和球组成的隐马尔可夫模型 λ = ( A , B , π ) \lambda=(A,B,\pi) λ=(A,B,π),其中, A = [ 0.5 0.1 0.4 0.3 0.5 0.2 0.2 0.2 0.6 ] , B = [ 0.5 0.5 0.4 0.6 0.7 0.3 ] , π = ( 0.2 , 0.3 , 0.5 ) T A=\left[\begin{array}{ccc}0.5&0.1&0.4\\0.3&0.5&0.2\\0.2&0.2&0.6\end{array}\right], \quad B=\left[\begin{array}{cc}0.5&0.5\\0.4&0.6\\0.7&0.3\end{array}\right], \quad \pi=(0.2,0.3,0.5)^T A= 0.50.30.20.10.50.20.40.20.6 ,B= 0.50.40.70.50.60.3 ,π=(0.2,0.3,0.5)T设 T = 8 , O = ( 红 , 白 , 红 , 红 , 白 , 红 , 白 , 白 ) T=8,O=(红,白,红,红,白,红,白,白) T=8,O=(红,白,红,红,白,红,白,白),试用前向-后向概率计算 P ( i 4 = q 3 ∣ O , λ ) P(i_4=q_3|O,\lambda) P(i4=q3∣O,λ)

解答:

python">Q = [1, 2, 3]

V = ['红', '白']

A = [[0.5, 0.1, 0.4], [0.3, 0.5, 0.2], [0.2, 0.2, 0.6]]

B = [[0.5, 0.5], [0.4, 0.6], [0.7, 0.3]]

O = ['红', '白', '红', '红', '白', '红', '白', '白']

PI = [[0.2, 0.3, 0.5]]

HMM.forward(Q, V, A, B, O, PI)

HMM.backward(Q, V, A, B, O, PI)

alpha1(1) = p0b0b(o1) = 0.100000

alpha1(2) = p1b1b(o1) = 0.120000

alpha1(3) = p2b2b(o1) = 0.350000

alpha2(1) = [sigma alpha0(i)ai0]b0(o1) = 0.078000

alpha2(2) = [sigma alpha0(i)ai1]b1(o1) = 0.084000

alpha2(3) = [sigma alpha0(i)ai2]b2(o1) = 0.082200

alpha3(1) = [sigma alpha1(i)ai0]b0(o2) = 0.040320

alpha3(2) = [sigma alpha1(i)ai1]b1(o2) = 0.026496

alpha3(3) = [sigma alpha1(i)ai2]b2(o2) = 0.068124

alpha4(1) = [sigma alpha2(i)ai0]b0(o3) = 0.020867

alpha4(2) = [sigma alpha2(i)ai1]b1(o3) = 0.012362

alpha4(3) = [sigma alpha2(i)ai2]b2(o3) = 0.043611

alpha5(1) = [sigma alpha3(i)ai0]b0(o4) = 0.011432

alpha5(2) = [sigma alpha3(i)ai1]b1(o4) = 0.010194

alpha5(3) = [sigma alpha3(i)ai2]b2(o4) = 0.011096

alpha6(1) = [sigma alpha4(i)ai0]b0(o5) = 0.005497

alpha6(2) = [sigma alpha4(i)ai1]b1(o5) = 0.003384

alpha6(3) = [sigma alpha4(i)ai2]b2(o5) = 0.009288

alpha7(1) = [sigma alpha5(i)ai0]b0(o6) = 0.002811

alpha7(2) = [sigma alpha5(i)ai1]b1(o6) = 0.002460

alpha7(3) = [sigma alpha5(i)ai2]b2(o6) = 0.002535

alpha8(1) = [sigma alpha6(i)ai0]b0(o7) = 0.001325

alpha8(2) = [sigma alpha6(i)ai1]b1(o7) = 0.001211

alpha8(3) = [sigma alpha6(i)ai2]b2(o7) = 0.000941

beta8(1) = 1

beta8(2) = 1

beta8(3) = 1

beta7(1) = sigma[a1jbj(o8)beta8(j)] = (0.50 * 0.50 * 1.00 + 0.10 * 0.60 * 1.00 + 0.40 * 0.30 * 1.00 + 0) = 0.430

beta7(2) = sigma[a2jbj(o8)beta8(j)] = (0.30 * 0.50 * 1.00 + 0.50 * 0.60 * 1.00 + 0.20 * 0.30 * 1.00 + 0) = 0.510

beta7(3) = sigma[a3jbj(o8)beta8(j)] = (0.20 * 0.50 * 1.00 + 0.20 * 0.60 * 1.00 + 0.60 * 0.30 * 1.00 + 0) = 0.400

beta6(1) = sigma[a1jbj(o7)beta7(j)] = (0.50 * 0.50 * 0.43 + 0.10 * 0.60 * 0.51 + 0.40 * 0.30 * 0.40 + 0) = 0.186

beta6(2) = sigma[a2jbj(o7)beta7(j)] = (0.30 * 0.50 * 0.43 + 0.50 * 0.60 * 0.51 + 0.20 * 0.30 * 0.40 + 0) = 0.241

beta6(3) = sigma[a3jbj(o7)beta7(j)] = (0.20 * 0.50 * 0.43 + 0.20 * 0.60 * 0.51 + 0.60 * 0.30 * 0.40 + 0) = 0.176

beta5(1) = sigma[a1jbj(o6)beta6(j)] = (0.50 * 0.50 * 0.19 + 0.10 * 0.40 * 0.24 + 0.40 * 0.70 * 0.18 + 0) = 0.106

beta5(2) = sigma[a2jbj(o6)beta6(j)] = (0.30 * 0.50 * 0.19 + 0.50 * 0.40 * 0.24 + 0.20 * 0.70 * 0.18 + 0) = 0.101

beta5(3) = sigma[a3jbj(o6)beta6(j)] = (0.20 * 0.50 * 0.19 + 0.20 * 0.40 * 0.24 + 0.60 * 0.70 * 0.18 + 0) = 0.112

beta4(1) = sigma[a1jbj(o5)beta5(j)] = (0.50 * 0.50 * 0.11 + 0.10 * 0.60 * 0.10 + 0.40 * 0.30 * 0.11 + 0) = 0.046

beta4(2) = sigma[a2jbj(o5)beta5(j)] = (0.30 * 0.50 * 0.11 + 0.50 * 0.60 * 0.10 + 0.20 * 0.30 * 0.11 + 0) = 0.053

beta4(3) = sigma[a3jbj(o5)beta5(j)] = (0.20 * 0.50 * 0.11 + 0.20 * 0.60 * 0.10 + 0.60 * 0.30 * 0.11 + 0) = 0.043

beta3(1) = sigma[a1jbj(o4)beta4(j)] = (0.50 * 0.50 * 0.05 + 0.10 * 0.40 * 0.05 + 0.40 * 0.70 * 0.04 + 0) = 0.026

beta3(2) = sigma[a2jbj(o4)beta4(j)] = (0.30 * 0.50 * 0.05 + 0.50 * 0.40 * 0.05 + 0.20 * 0.70 * 0.04 + 0) = 0.023

beta3(3) = sigma[a3jbj(o4)beta4(j)] = (0.20 * 0.50 * 0.05 + 0.20 * 0.40 * 0.05 + 0.60 * 0.70 * 0.04 + 0) = 0.027

beta2(1) = sigma[a1jbj(o3)beta3(j)] = (0.50 * 0.50 * 0.03 + 0.10 * 0.40 * 0.02 + 0.40 * 0.70 * 0.03 + 0) = 0.015

beta2(2) = sigma[a2jbj(o3)beta3(j)] = (0.30 * 0.50 * 0.03 + 0.50 * 0.40 * 0.02 + 0.20 * 0.70 * 0.03 + 0) = 0.012

beta2(3) = sigma[a3jbj(o3)beta3(j)] = (0.20 * 0.50 * 0.03 + 0.20 * 0.40 * 0.02 + 0.60 * 0.70 * 0.03 + 0) = 0.016

beta1(1) = sigma[a1jbj(o2)beta2(j)] = (0.50 * 0.50 * 0.01 + 0.10 * 0.60 * 0.01 + 0.40 * 0.30 * 0.02 + 0) = 0.006

beta1(2) = sigma[a2jbj(o2)beta2(j)] = (0.30 * 0.50 * 0.01 + 0.50 * 0.60 * 0.01 + 0.20 * 0.30 * 0.02 + 0) = 0.007

beta1(3) = sigma[a3jbj(o2)beta2(j)] = (0.20 * 0.50 * 0.01 + 0.20 * 0.60 * 0.01 + 0.60 * 0.30 * 0.02 + 0) = 0.006

P(O|lambda) = 0.2 * 0.5 * 0.00633 + 0.3 * 0.4 * 0.00685 + 0.5 * 0.7 * 0.00578 + 0 = 0.003477

可知, P ( i 4 = q 3 ∣ O , λ ) = P ( i 4 = q 3 , O ∣ λ ) P ( O ∣ λ ) = α 4 ( 3 ) β 4 ( 3 ) P ( O ∣ λ ) \displaystyle P(i_4=q_3|O,\lambda)=\frac{P(i_4=q_3,O|\lambda)}{P(O|\lambda)}=\frac{\alpha_4(3)\beta_4(3)}{P(O|\lambda)} P(i4=q3∣O,λ)=P(O∣λ)P(i4=q3,O∣λ)=P(O∣λ)α4(3)β4(3)

python">print("alpha4(3)=", HMM.alphas[3 - 1][4 - 1])

print("beta4(3)=", HMM.betas[3 - 1][4 - 1])

print("P(O|lambda)=", HMM.backward_P[0])

result = (HMM.alphas[3 - 1][4 - 1] *HMM.betas[3 - 1][4 - 1]) / HMM.backward_P[0]

print("P(i4=q3|O,lambda) =", result)

alpha4(3)= 0.043611119999999996

beta4(3)= 0.04280618

P(O|lambda)= 0.0034767094492824

P(i4=q3|O,lambda) = 0.5369518160647322

习题10.3

在习题10.1中,试用维特比算法求最优路径 I ∗ = ( i 1 ∗ , i 2 ∗ , i 3 ∗ , i 4 ∗ ) I^*=(i_1^*,i_2^*,i_3^*,i_4^*) I∗=(i1∗,i2∗,i3∗,i4∗)。

解答: 见习题10.1的结果。

习题10.4

试用前向概率和后向概率推导 P ( O ∣ λ ) = ∑ i = 1 N ∑ j = 1 N α t ( i ) a i j b j ( o t + 1 ) β t + 1 ( j ) , t = 1 , 2 , ⋯ , T − 1 P(O|\lambda)=\sum_{i=1}^N\sum_{j=1}^N\alpha_t(i)a_{ij}b_j(o_{t+1})\beta_{t+1}(j),\quad t=1,2,\cdots,T-1 P(O∣λ)=i=1∑Nj=1∑Nαt(i)aijbj(ot+1)βt+1(j),t=1,2,⋯,T−1

解答:

P ( O ∣ λ ) = P ( o 1 , o 2 , . . . , o T ∣ λ ) = ∑ i = 1 N P ( o 1 , . . , o t , i t = q i ∣ λ ) P ( o t + 1 , . . , o T ∣ i t = q i , λ ) = ∑ i = 1 N ∑ j = 1 N P ( o 1 , . . , o t , i t = q i ∣ λ ) P ( o t + 1 , i t + 1 = q j ∣ i t = q i , λ ) P ( o t + 2 , . . , o T ∣ i t + 1 = q j , λ ) = ∑ i = 1 N ∑ j = 1 N [ P ( o 1 , . . , o t , i t = q i ∣ λ ) P ( o t + 1 ∣ i t + 1 = q j , λ ) P ( i t + 1 = q j ∣ i t = q i , λ ) P ( o t + 2 , . . , o T ∣ i t + 1 = q j , λ ) ] = ∑ i = 1 N ∑ j = 1 N α t ( i ) a i j b j ( o t + 1 ) β t + 1 ( j ) , t = 1 , 2 , . . . , T − 1 \begin{aligned} P(O|\lambda) &= P(o_1,o_2,...,o_T|\lambda) \\ &= \sum_{i=1}^N P(o_1,..,o_t,i_t=q_i|\lambda) P(o_{t+1},..,o_T|i_t=q_i,\lambda) \\ &= \sum_{i=1}^N \sum_{j=1}^N P(o_1,..,o_t,i_t=q_i|\lambda) P(o_{t+1},i_{t+1}=q_j|i_t=q_i,\lambda)P(o_{t+2},..,o_T|i_{t+1}=q_j,\lambda) \\ &= \sum_{i=1}^N \sum_{j=1}^N [P(o_1,..,o_t,i_t=q_i|\lambda) P(o_{t+1}|i_{t+1}=q_j,\lambda) P(i_{t+1}=q_j|i_t=q_i,\lambda) \\ & \quad \quad \quad \quad P(o_{t+2},..,o_T|i_{t+1}=q_j,\lambda)] \\ &= \sum_{i=1}^N \sum_{j=1}^N \alpha_t(i) a_{ij} b_j(o_{t+1}) \beta_{t+1}(j),{\quad}t=1,2,...,T-1 \end{aligned} P(O∣λ)=P(o1,o2,...,oT∣λ)=i=1∑NP(o1,..,ot,it=qi∣λ)P(ot+1,..,oT∣it=qi,λ)=i=1∑Nj=1∑NP(o1,..,ot,it=qi∣λ)P(ot+1,it+1=qj∣it=qi,λ)P(ot+2,..,oT∣it+1=qj,λ)=i=1∑Nj=1∑N[P(o1,..,ot,it=qi∣λ)P(ot+1∣it+1=qj,λ)P(it+1=qj∣it=qi,λ)P(ot+2,..,oT∣it+1=qj,λ)]=i=1∑Nj=1∑Nαt(i)aijbj(ot+1)βt+1(j),t=1,2,...,T−1

命题得证。

习题10.5

比较维特比算法中变量 δ \delta δ的计算和前向算法中变量 α \alpha α的计算的主要区别。

解答:

前向算法:

- 初值 α 1 ( i ) = π i b i ( o i ) , i = 1 , 2 , ⋯ , N \alpha_1(i)=\pi_ib_i(o_i),i=1,2,\cdots,N α1(i)=πibi(oi),i=1,2,⋯,N

- 递推,对 t = 1 , 2 , ⋯ , T − 1 t=1,2,\cdots,T-1 t=1,2,⋯,T−1: α t + 1 ( i ) = [ ∑ j = 1 N α t ( j ) a j i ] b i ( o t + 1 ) , i = 1 , 2 , ⋯ , N \alpha_{t+1}(i)=\left[\sum_{j=1}^N \alpha_t(j) a_{ji} \right]b_i(o_{t+1}),i=1,2,\cdots,N αt+1(i)=[j=1∑Nαt(j)aji]bi(ot+1),i=1,2,⋯,N

维特比算法:

- 初始化 δ 1 ( i ) = π i b i ( o 1 ) , i = 1 , 2 , ⋯ , N \delta_1(i)=\pi_ib_i(o_1),i=1,2,\cdots,N δ1(i)=πibi(o1),i=1,2,⋯,N

- 递推,对 t = 2 , 3 , ⋯ , T t=2,3,\cdots,T t=2,3,⋯,T δ t ( i ) = max 1 ⩽ j ⩽ N [ δ t − 1 ( j ) a j i ] b i ( o t ) , i = 1 , 2 , ⋯ , N \delta_t(i)=\max_{1 \leqslant j \leqslant N} [\delta_{t-1}(j)a_{ji}]b_i(o_t), i=1,2,\cdots,N δt(i)=1⩽j⩽Nmax[δt−1(j)aji]bi(ot),i=1,2,⋯,N

由上面算法可知,计算变量 α \alpha α的时候直接对上个的结果进行数值计算,而计算变量 δ \delta δ需要在上个结果计算的基础上选择最大值

HMM_350">多元高斯隐马尔可夫模型(GMM-HMM)

多元高斯隐马尔可夫模型(Gaussian Hidden Markov Model, GMM-HMM)是隐马尔可夫模型(HMM)的一种变体,用于建模连续观测数据。它结合了隐马尔可夫模型的离散隐藏状态和多元高斯分布的连续观测值模型特性。

关键概念

-

隐藏状态(Hidden States):这些是模型内部的不可见状态,表示系统在不同时间点的状态。通常假设为一个离散状态空间。

-

观测值(Observations):观测值 O t O_t Ot 是在给定隐藏状态 Q t Q_t Qt 的条件下生成的。在GMM-HMM中,假设每个隐藏状态 Q t Q_t Qt 对应一个多元高斯分布,其观测值服从该分布。

-

模型参数:

- 转移概率(Transition Probability):表示从一个隐藏状态转移到另一个隐藏状态的概率。

- 观测概率(Emission Probability):在GMM-HMM中,观测概率被建模为多元高斯分布。

数学描述

在 GMM-HMM 中,观测序列 O = ( O 1 , O 2 , … , O T ) O = (O_1, O_2, \ldots, O_T) O=(O1,O2,…,OT) 被假设为由隐藏状态序列 Q = ( Q 1 , Q 2 , … , Q T ) Q = (Q_1, Q_2, \ldots, Q_T) Q=(Q1,Q2,…,QT) 生成,其中:

-

初始状态分布(Initial State Distribution): π = { π i } \pi = \{ \pi_i \} π={πi} 表示初始时每个状态的概率分布。

-

状态转移概率矩阵(State Transition Probability Matrix): A = { a i j } A = \{ a_{ij} \} A={aij} 表示从状态 i i i 转移到状态 j j j 的概率。

-

观测概率分布(Observation Probability Distribution):在 GMM-HMM 中,给定状态 i i i,观测值的概率分布是多元高斯分布: B i ( O t ) = N ( O t ∣ μ i , Σ i ) B_i(O_t) = \mathcal{N}(O_t | \mu_i, \Sigma_i) Bi(Ot)=N(Ot∣μi,Σi),其中 μ i \mu_i μi 是均值向量, Σ i \Sigma_i Σi 是协方差矩阵。

应用领域

GMM-HMM 在以下领域有广泛应用:

- 语音识别:分段语音信号并应用 GMM-HMM 识别言语内容。

- 时间序列分析:建模和预测数据趋势,如股票价格或气象模式。

- 生物信息学:分析基因序列等生物数据。

python">import numpy as np

from hmmlearn import hmm# 创建一个多元高斯隐马尔可夫模型,隐藏状态数设置为2,协方差矩阵类型设置为 "full",表示每个隐藏状态都有自己的协方差矩阵

model = hmm.GaussianHMM(n_components=2, covariance_type="full", n_iter=500)# 生成一些示例数据,这些数据是二维的观测值,每一行代表一个观测向量

X = np.array([[0.5, 1.0], [0.7, 1.1], [0.6, 1.2], [3.0, 3.5], [3.2, 3.6], [3.1, 3.7], [5.5, 6.0], [5.7, 6.1], [5.6, 6.2],[2.3, 2.5]])# 拟合模型

model.fit(X)# 预测隐藏状态

hidden_states = model.predict(X)print("Hidden states:")

print(hidden_states)# 打印模型的转移矩阵,转移矩阵描述了隐藏状态之间的转移概率

print("Transition matrix:")

print(model.transmat_)# 打印每个隐藏状态的均值和方差

print("Means and variances of each hidden state:")

for i in range(model.n_components):print(f"Hidden state {i}")print(f"Mean = {model.means_[i]}")print(f"Variance = {np.diag(model.covars_[i])}")Hidden states:

[1 0 1 0 0 0 0 0 0 1]

Transition matrix:

[[7.22727769e-01 2.77272231e-01][9.99999444e-01 5.56018679e-07]]

Means and variances of each hidden state:

Hidden state 0

Mean = [3.80824226 4.29438185]

Variance = [3.02061731 3.10719472]

Hidden state 1

Mean = [1.13841536 1.56988913]

Variance = [0.68976917 0.44903693]

参考书籍:李航《机器学习方法》

代码来自:https://github.com/fengdu78/lihang-code

习题解答:https://github.com/datawhalechina/statistical-learning-method-solutions-manual