Spring Boot Tutorial (32) | Integrating SkyWalking Distributed Tracing with Spring Boot

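- What is SkyWalking?

- SkyWalking and JDK version compatibility

- Downloading SkyWalking

- SkyWalking data storage

- Starting SkyWalking

- Deploying the agent

- Method 1: Deploying the agent in IDEA

- Method 2: Starting from the Java command line

- Method 3: Starting with a shell script (Linux)

- Starting Spring Boot

- Starting with the agent deployed in IDEA

- Configuring logging with SkyWalking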

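What is SkyWalking?

SkyWalking is an open-source platform for observing distributed systems, especially microservice, cloud-native, and containerized applications.

It provides tracing, monitoring, and diagnostics for distributed systems.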

SkyWalking and JDK version compatibility

SkyWalking 8.x requires Java 8 (JDK 1.8) or later.

SkyWalking 9.x requires Java 11 (JDK 11) or later.

Check your JDK version before choosing a SkyWalking release.
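As a quick sanity check before downloading, the rule above can be sketched in a few lines of Java. This is only an illustration of the two version requirements stated in this article; the class and method names are made up for the example, and releases other than 8.x and 9.x are deliberately rejected because the article does not cover them.

```java
/** Sketch: compare the running JVM with the minimum Java version this article lists per SkyWalking major version. */
class SkyWalkingJdkCheck {

    /** Minimum Java version as stated in the article: 8 for SkyWalking 8.x, 11 for SkyWalking 9.x. */
    static int minimumJavaFor(int skyWalkingMajor) {
        switch (skyWalkingMajor) {
            case 8:
                return 8;
            case 9:
                return 11;
            default:
                // Hypothetical guard: other major versions are not covered by the article.
                throw new IllegalArgumentException("Not covered here: " + skyWalkingMajor + ".x");
        }
    }

    /** Turns "1.8" into 8 and "11" or "17" into 11 or 17 (format of java.specification.version). */
    static int parseFeatureVersion(String specVersion) {
        String v = specVersion.startsWith("1.") ? specVersion.substring(2) : specVersion;
        return Integer.parseInt(v);
    }

    public static void main(String[] args) {
        int running = parseFeatureVersion(System.getProperty("java.specification.version"));
        for (int major : new int[] {8, 9}) {
            boolean ok = running >= minimumJavaFor(major);
            System.out.println("SkyWalking " + major + ".x on Java " + running + ": "
                    + (ok ? "OK" : "JDK too old"));
        }
    }
}
```

Running it on the machine that will host SkyWalking shows which of the two release lines the installed JDK can run.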

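Downloading SkyWalking

Official SkyWalking download page: https://skywalking.apache.org/downloads/

Other APM versions:

https://archive.apache.org/dist/skywalking/

Other versions of the Java agents:

https://archive.apache.org/dist/skywalking/java-agent/

Note:

In 7.x and earlier, the APM package includes the agents. In the newer 8.x releases used here (8.9.x), the agents are packaged separately, so they must be downloaded as well.

This article uses APM 8.9.1 and Agents 8.9.0. Download both and extract them.

Then copy the extracted agent folder into the APM folder. The paths later in this article assume it sits at apache-skywalking-apm-bin\skywalking-agent.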

SkyWalking data storage

SkyWalking supports several storage backends:

- H2 (the default; data is lost on restart)

- Elasticsearch (the most commonly used backend)

- MySQL

- TiDB

- …
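In the OAP configuration file, the storage choice is written as `selector: ${SW_STORAGE:h2}`. The `${NAME:default}` form means: use the value of the environment variable `NAME` if it is set, otherwise fall back to `default`. The following is a minimal sketch of that lookup rule, not SkyWalking's own implementation; the class name and the map standing in for the environment are assumptions made for the example, and the simple regular expression does not handle defaults that themselves contain `}` (such as the JSON analyzer defaults).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Sketch of how a ${NAME:default} placeholder resolves; not SkyWalking's actual code. */
class PlaceholderDemo {

    // Matches ${NAME:default}, where the default must not contain '}'.
    private static final Pattern PLACEHOLDER =
            Pattern.compile("\\$\\{([A-Za-z0-9_]+):([^}]*)\\}");

    /** Replaces each placeholder with env.get(NAME) if present, otherwise with its default. */
    static String resolve(String value, Map<String, String> env) {
        Matcher m = PLACEHOLDER.matcher(value);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String fromEnv = env.get(m.group(1));
            String replacement = fromEnv != null ? fromEnv : m.group(2);
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> env = new HashMap<>();
        // Nothing set: the default after the colon wins.
        System.out.println(resolve("${SW_STORAGE:h2}", env));
        // Variable set: its value overrides the default.
        env.put("SW_STORAGE", "elasticsearch");
        System.out.println(resolve("${SW_STORAGE:h2}", env));
    }
}
```

In practice this means the storage backend can be switched either by editing the default in the file or by setting `SW_STORAGE` before starting the OAP server.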

The relevant file is the OAP configuration file (config/application.yml).

Only the part that configures storage is shown here:

    storage:
      selector: ${SW_STORAGE:h2}
      elasticsearch:
        namespace: ${SW_NAMESPACE:""}
        clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}
        protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}
        connectTimeout: ${SW_STORAGE_ES_CONNECT_TIMEOUT:500}
        socketTimeout: ${SW_STORAGE_ES_SOCKET_TIMEOUT:30000}
        numHttpClientThread: ${SW_STORAGE_ES_NUM_HTTP_CLIENT_THREAD:0}
        user: ${SW_ES_USER:""}
        password: ${SW_ES_PASSWORD:""}
        trustStorePath: ${SW_STORAGE_ES_SSL_JKS_PATH:""}
        trustStorePass: ${SW_STORAGE_ES_SSL_JKS_PASS:""}
        secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.
        dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.
        indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:1} # Shard number of new indexes
        indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:1} # Replicas number of new indexes
        # Super data set has been defined in the codes, such as trace segments.The following 3 config would be improve es performance when storage super size data in es.
        superDatasetDayStep: ${SW_SUPERDATASET_STORAGE_DAY_STEP:-1} # Represent the number of days in the super size dataset record index, the default value is the same as dayStep when the value is less than 0
        superDatasetIndexShardsFactor: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_SHARDS_FACTOR:5} # This factor provides more shards for the super data set, shards number = indexShardsNumber * superDatasetIndexShardsFactor. Also, this factor effects Zipkin and Jaeger traces.
        superDatasetIndexReplicasNumber: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_REPLICAS_NUMBER:0} # Represent the replicas number in the super size dataset record index, the default value is 0.
        indexTemplateOrder: ${SW_STORAGE_ES_INDEX_TEMPLATE_ORDER:0} # the order of index template
        bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:5000} # Execute the async bulk record data every ${SW_STORAGE_ES_BULK_ACTIONS} requests
        # flush the bulk every 10 seconds whatever the number of requests
        # INT(flushInterval * 2/3) would be used for index refresh period.
        flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:15}
        concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests
        resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}
        metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}
        segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}
        profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}
        oapAnalyzer: ${SW_STORAGE_ES_OAP_ANALYZER:"{\"analyzer\":{\"oap_analyzer\":{\"type\":\"stop\"}}}"} # the oap analyzer.
        oapLogAnalyzer: ${SW_STORAGE_ES_OAP_LOG_ANALYZER:"{\"analyzer\":{\"oap_log_analyzer\":{\"type\":\"standard\"}}}"} # the oap log analyzer. It could be customized by the ES analyzer configuration to support more language log formats, such as Chinese log, Japanese log and etc.
        advanced: ${SW_STORAGE_ES_ADVANCED:""}
      h2:
        driver: ${SW_STORAGE_H2_DRIVER:org.h2.jdbcx.JdbcDataSource}
        url: ${SW_STORAGE_H2_URL:jdbc:h2:mem:skywalking-oap-db;DB_CLOSE_DELAY=-1}
        user: ${SW_STORAGE_H2_USER:sa}
        metadataQueryMaxSize: ${SW_STORAGE_H2_QUERY_MAX_SIZE:5000}
        maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}
        numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}
        maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:100}
        asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:1}
      mysql:
        properties:
          jdbcUrl: ${SW_JDBC_URL:"jdbc:mysql://localhost:3306/swtest?rewriteBatchedStatements=true"}
          dataSource.user: ${SW_DATA_SOURCE_USER:root}
          dataSource.password: ${SW_DATA_SOURCE_PASSWORD:root@1234}
          dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}
          dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}
          dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}
          dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}
        metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}
        maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}
        numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}
        maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}
        asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}
      tidb:
        properties:
          jdbcUrl: ${SW_JDBC_URL:"jdbc:mysql://localhost:4000/tidbswtest?rewriteBatchedStatements=true"}
          dataSource.user: ${SW_DATA_SOURCE_USER:root}
          dataSource.password: ${SW_DATA_SOURCE_PASSWORD:""}
          dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}
          dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}
          dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}
          dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}
          dataSource.useAffectedRows: ${SW_DATA_SOURCE_USE_AFFECTED_ROWS:true}
        metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}
        maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}
        numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}
        maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}
        asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}
      influxdb:
        # InfluxDB configuration
        url: ${SW_STORAGE_INFLUXDB_URL:http://localhost:8086}
        user: ${SW_STORAGE_INFLUXDB_USER:root}
        password: ${SW_STORAGE_INFLUXDB_PASSWORD:}
        database: ${SW_STORAGE_INFLUXDB_DATABASE:skywalking}
        actions: ${SW_STORAGE_INFLUXDB_ACTIONS:1000} # the number of actions to collect
        duration: ${SW_STORAGE_INFLUXDB_DURATION:1000} # the time to wait at most (milliseconds)
        batchEnabled: ${SW_STORAGE_INFLUXDB_BATCH_ENABLED:true}
        fetchTaskLogMaxSize: ${SW_STORAGE_INFLUXDB_FETCH_TASK_LOG_MAX_SIZE:5000} # the max number of fetch task log in a request
        connectionResponseFormat: ${SW_STORAGE_INFLUXDB_CONNECTION_RESPONSE_FORMAT:MSGPACK} # the response format of connection to influxDB, cannot be anything but MSGPACK or JSON.
      postgresql:
        properties:
          jdbcUrl: ${SW_JDBC_URL:"jdbc:postgresql://localhost:5432/skywalking"}
          dataSource.user: ${SW_DATA_SOURCE_USER:postgres}
          dataSource.password: ${SW_DATA_SOURCE_PASSWORD:123456}
          dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}
          dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}
          dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}
          dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}
        metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}
        maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}
        numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}
        maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}
        asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}
      zipkin-elasticsearch:
        namespace: ${SW_NAMESPACE:""}
        clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}
        protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}
        trustStorePath: ${SW_STORAGE_ES_SSL_JKS_PATH:""}
        trustStorePass: ${SW_STORAGE_ES_SSL_JKS_PASS:""}
        dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.
        indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:1} # Shard number of new indexes
        indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:1} # Replicas number of new indexes
        # Super data set has been defined in the codes, such as trace segments.The following 3 config would be improve es performance when storage super size data in es.
        superDatasetDayStep: ${SW_SUPERDATASET_STORAGE_DAY_STEP:-1} # Represent the number of days in the super size dataset record index, the default value is the same as dayStep when the value is less than 0
        superDatasetIndexShardsFactor: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_SHARDS_FACTOR:5} # This factor provides more shards for the super data set, shards number = indexShardsNumber * superDatasetIndexShardsFactor. Also, this factor effects Zipkin and Jaeger traces.
        superDatasetIndexReplicasNumber: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_REPLICAS_NUMBER:0} # Represent the replicas number in the super size dataset record index, the default value is 0.
        user: ${SW_ES_USER:""}
        password: ${SW_ES_PASSWORD:""}
        secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.
        bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:5000} # Execute the async bulk record data every ${SW_STORAGE_ES_BULK_ACTIONS} requests
        # flush the bulk every 10 seconds whatever the number of requests
        # INT(flushInterval * 2/3) would be used for index refresh period.
        flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:15}
        concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests
        resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}
        metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}
        segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}
        profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}
        oapAnalyzer: ${SW_STORAGE_ES_OAP_ANALYZER:"{\"analyzer\":{\"oap_analyzer\":{\"type\":\"stop\"}}}"} # the oap analyzer.
        oapLogAnalyzer: ${SW_STORAGE_ES_OAP_LOG_ANALYZER:"{\"analyzer\":{\"oap_log_analyzer\":{\"type\":\"standard\"}}}"} # the oap log analyzer. It could be customized by the ES analyzer configuration to support more language log formats, such as Chinese log, Japanese log and etc.
        advanced: ${SW_STORAGE_ES_ADVANCED:""}
      iotdb:
        host: ${SW_STORAGE_IOTDB_HOST:127.0.0.1}
        rpcPort: ${SW_STORAGE_IOTDB_RPC_PORT:6667}
        username: ${SW_STORAGE_IOTDB_USERNAME:root}
        password: ${SW_STORAGE_IOTDB_PASSWORD:root}
        storageGroup: ${SW_STORAGE_IOTDB_STORAGE_GROUP:root.skywalking}
        sessionPoolSize: ${SW_STORAGE_IOTDB_SESSIONPOOL_SIZE:16}
        fetchTaskLogMaxSize: ${SW_STORAGE_IOTDB_FETCH_TASK_LOG_MAX_SIZE:1000} # the max number of fetch task log in a request

Starting SkyWalking

Go to D:\apache-skywalking-apm-8.9.1\apache-skywalking-apm-bin\bin and run startup.bat as administrator. Two command-line windows open:

- (1) Skywalking-Collector: the trace collector, which receives data from clients over gRPC or HTTP. The default HTTP port is 12800 and the default gRPC port is 11800. (To change them, edit apache-skywalking-apm-bin\config\application.yml.)

- (2) Skywalking-Webapp: the management UI, on port 8080 by default. (To change it, edit apache-skywalking-apm-bin\webapp\webapp.yml.)
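If it is unclear whether both windows started correctly, one option is to probe the three default ports listed above. The snippet below is a small sketch using only the JDK; the class name, the one-second timeout, and the assumption that everything runs on localhost are choices made for this example.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Sketch: check whether the default SkyWalking ports accept TCP connections. */
class SkyWalkingPortCheck {

    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Default ports from the article; adjust them if application.yml or webapp.yml was changed.
        int[] ports = {11800, 12800, 8080};
        String[] labels = {"Collector gRPC", "Collector HTTP", "Web UI"};
        for (int i = 0; i < ports.length; i++) {
            boolean up = isListening("127.0.0.1", ports[i], 1000);
            System.out.println(labels[i] + " (" + ports[i] + "): " + (up ? "listening" : "not reachable"));
        }
    }
}
```

A port reported as "not reachable" usually means the corresponding window failed to start or the port was changed in its configuration file.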

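Once both windows are running, open the SkyWalking UI in a browser: http://localhost:8080/

There is an auto-refresh button on the right side of the page. Make sure to turn it on.

Otherwise, after the Spring Boot project starts, it will look as if the connection failed, because pressing F5 to reload the page does not refresh the data.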

Deploying the agent

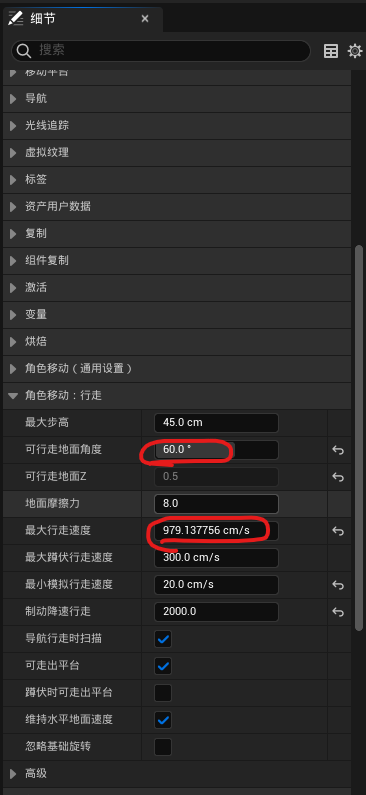

Method 1: Deploying the agent in IDEA

Edit the VM options of the run configuration for the application's main class.

Set the following JVM options:

-javaagent:D:\apache-skywalking-apm-8.9.1\apache-skywalking-apm-bin\skywalking-agent\skywalking-agent.jar

-DSW_AGENT_NAME=woqu-ndy

-DSW_AGENT_COLLECTOR_BACKEND_SERVICES=127.0.0.1:11800

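- -javaagent: the local disk path of skywalking-agent.jar

- -DSW_AGENT_NAME: the service name displayed in SkyWalking

- -DSW_AGENT_COLLECTOR_BACKEND_SERVICES: the IP address and port of the SkyWalking collector service

- Note: -DSW_AGENT_COLLECTOR_BACKEND_SERVICES may point to a remote address, but -javaagent must point to a skywalking-agent.jar on the local machine's own disk.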
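To confirm that IDEA really passed these options to the JVM, the application can inspect its own startup arguments. The following is a minimal sketch using the standard `java.lang.management` API; the class name is made up for the example, and it only reports what the JVM received, not whether the agent connected to the collector.

```java
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

/** Sketch: list the -javaagent options and SkyWalking system properties of the running JVM. */
class AgentArgsCheck {

    /** Returns the agent jar paths found in the given JVM arguments. */
    static List<String> findJavaAgents(List<String> jvmArgs) {
        String prefix = "-javaagent:";
        List<String> agents = new ArrayList<>();
        for (String arg : jvmArgs) {
            if (arg.startsWith(prefix)) {
                agents.add(arg.substring(prefix.length()));
            }
        }
        return agents;
    }

    public static void main(String[] args) {
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        List<String> agents = findJavaAgents(jvmArgs);
        System.out.println(agents.isEmpty() ? "No -javaagent option found" : "Agents: " + agents);
        // The two -D options from the VM options box become ordinary system properties.
        System.out.println("SW_AGENT_NAME = " + System.getProperty("SW_AGENT_NAME"));
        System.out.println("SW_AGENT_COLLECTOR_BACKEND_SERVICES = "
                + System.getProperty("SW_AGENT_COLLECTOR_BACKEND_SERVICES"));
    }
}
```

If the agent path is missing from the output, the VM options were most likely entered in the wrong field (for example under program arguments).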

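Method 2: Starting from the Java command line

Pass the agent jar with -javaagent and the agent settings as -D system properties prefixed with skywalking.:

java -javaagent:C:\Users\ke\Desktop\apache-skywalking-apm-6.6.0\apache-skywalking-apm-bin\agent\skywalking-agent.jar -Dskywalking.agent.service_name=service-myapp -Dskywalking.collector.backend_service=localhost:11800 -jar service-myapp.jar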

Method 3: Starting with a shell script (Linux)

    #!/bin/bash

    # Path to the SkyWalking agent directory
    AGENT_PATH="/home/yourusername/Desktop/apache-skywalking-apm-6.6.0/apache-skywalking-apm-bin/agent"

    # Path to the Java application's JAR file
    JAR_PATH="/path/to/your/service-myapp.jar"

    # SkyWalking service name and collector backend address
    SERVICE_NAME="service-myapp"
    COLLECTOR_BACKEND_SERVICE="localhost:11800"

    # Build the Java agent options
    JAVA_AGENT="-javaagent:$AGENT_PATH/skywalking-agent.jar \
      -Dskywalking.agent.service_name=$SERVICE_NAME \
      -Dskywalking.collector.backend_service=$COLLECTOR_BACKEND_SERVICE"

    # Start the Java application
    # (JAVA_AGENT is left unquoted on purpose so that it splits into separate options)
    java $JAVA_AGENT -jar "$JAR_PATH"
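The same launch command can also be assembled from Java, for example in a small launcher or a test harness. The sketch below mirrors the script's variables; the class and method names and the placeholder paths in `main` are assumptions for illustration, and the actual process start is left commented out so the example only prints the command.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: build the java command line that attaches the SkyWalking agent. */
class AgentLauncher {

    /** Assembles the command as a list of separate arguments, which avoids shell quoting issues. */
    static List<String> buildCommand(String agentJar, String serviceName,
                                     String backendService, String appJar) {
        List<String> cmd = new ArrayList<>();
        cmd.add("java");
        cmd.add("-javaagent:" + agentJar);
        cmd.add("-Dskywalking.agent.service_name=" + serviceName);
        cmd.add("-Dskywalking.collector.backend_service=" + backendService);
        cmd.add("-jar");
        cmd.add(appJar);
        return cmd;
    }

    public static void main(String[] args) {
        // Placeholder paths; replace them with the real agent and application locations.
        List<String> cmd = buildCommand(
                "/path/to/agent/skywalking-agent.jar",
                "service-myapp",
                "localhost:11800",
                "/path/to/your/service-myapp.jar");
        System.out.println(String.join(" ", cmd));
        // To actually start the application:
        // new ProcessBuilder(cmd).inheritIO().start();
    }
}
```

Keeping each option as its own list element means paths containing spaces work without extra quoting.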

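Starting Spring Boot

Starting with the agent deployed in IDEA

After the application starts, log records from the SkyWalking agent at the very beginning of the console output indicate that it has connected to SkyWalking.

Back on the SkyWalking page (http://localhost:8080/), the service now shows up, which confirms the connection.

Next, call one of the Controller endpoints. The request is captured and appears in SkyWalking.

(At this point, however, there are still no detailed logs of the request or response parameters for the endpoint.)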

Configuring logging with SkyWalking

Add the SkyWalking traceId (trace ID) to each log line to make troubleshooting easier.

First, add the Maven dependency:

    <!-- skywalking-logback: writes the SkyWalking traceId into Logback logs -->
    <dependency>
        <groupId>org.apache.skywalking</groupId>
        <artifactId>apm-toolkit-logback-1.x</artifactId>
        <version>9.0.0</version>
    </dependency>

Next, create a logback-spring.xml file in the resources folder:

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration debug="false">
        <!-- Directory where log files are stored. Do not use a relative path in the Logback configuration. -->
        <property name="LOG_HOME" value="D:/logs/" ></property>
        <!-- Colored logs -->
        <conversionRule conversionWord="clr" converterClass="org.springframework.boot.logging.logback.ColorConverter" />
        <!-- Console log output -->
        <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
            <encoder class="ch.qos.logback.core.encoder.LayoutWrappingEncoder">
                <layout class="org.apache.skywalking.apm.toolkit.log.logback.v1.x.mdc.TraceIdMDCPatternLogbackLayout">
                    <!-- Format: %d is the date, %thread the thread name, %-5level the level left-aligned to 5 characters, %msg the log message, %n a newline -->
                    <pattern>%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} [%X{tid}] %clr([%-10.10thread]){faint} %clr(%-5level) %clr(%-50.50logger{50}:%-3L){cyan} %clr(-){faint} %msg%n</pattern>
                </layout>
            </encoder>
        </appender>
        <!-- File log, one log file per day (only messages logged through Logger or LoggerFactory) -->
        <!-- The file pattern below omits the colors to avoid ANSI escape sequences in the file -->
        <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <!-- Name pattern of the log files -->
                <FileNamePattern>${LOG_HOME}/%d{yyyy-MM-dd}/pro.log</FileNamePattern>
                <!-- Number of days to keep log files -->
                <MaxHistory>30</MaxHistory>
            </rollingPolicy>
            <encoder class="ch.qos.logback.core.encoder.LayoutWrappingEncoder">
                <layout class="org.apache.skywalking.apm.toolkit.log.logback.v1.x.mdc.TraceIdMDCPatternLogbackLayout">
                    <!-- Format: %d is the date, %thread the thread name, %-5level the level left-aligned to 5 characters, %msg the log message, %n a newline -->
                    <!-- <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n</pattern>-->
                    <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%X{tid}] [%-10.10thread] %-5level %-50.50logger{50}:%-3L - %msg%n</pattern>
                </layout>
            </encoder>
            <!-- Maximum size of a log file -->
            <triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">
                <MaxFileSize>10MB</MaxFileSize>
            </triggeringPolicy>
        </appender>
        <!-- SkyWalking gRPC log collection -->
        <appender name="grpc" class="org.apache.skywalking.apm.toolkit.log.logback.v1.x.log.GRPCLogClientAppender">
            <encoder class="ch.qos.logback.core.encoder.LayoutWrappingEncoder">
                <layout class="org.apache.skywalking.apm.toolkit.log.logback.v1.x.mdc.TraceIdMDCPatternLogbackLayout">
                    <Pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%X{tid}] [%thread] %-5level %logger{36} -%msg%n</Pattern>
                </layout>
            </encoder>
        </appender>
        <!-- Log output level -->
        <root level="INFO">
            <appender-ref ref="STDOUT" ></appender-ref>
            <appender-ref ref="FILE" ></appender-ref>
            <appender-ref ref="grpc"/>
        </root>
    </configuration>

Now call an endpoint, and the TID appears in the log output.

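(In this example it is 882c67dc859046c398fbfc5725df9de0.109.17288962842340001.)

Paste it into the trace ID field of the Trace tab to find the matching trace record.

Paste it into the trace ID field of the Log tab to find the matching log records.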
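When working from log files rather than the console, it can be handy to pull the trace ID out of a log line programmatically. The sketch below assumes that `%X{tid}` is rendered as `TID:` followed by the ID (and as `TID: N/A` when no agent is active), so the patterns above produce `[TID:...]` in each line; the class name and the sample log line are hypothetical, only the trace ID itself is taken from this article.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Sketch: extract the SkyWalking trace ID from a log line containing [TID:...]. */
class TraceIdExtractor {

    // Assumes the layout prints the MDC value as "TID:" followed by the ID, wrapped in brackets by the pattern.
    private static final Pattern TID = Pattern.compile("\\[TID:\\s*([^\\]\\s]+)\\]");

    /** Returns the trace ID, or empty if the line has no TID or the tracer was inactive (N/A). */
    static Optional<String> extract(String logLine) {
        Matcher m = TID.matcher(logLine);
        if (m.find() && !"N/A".equals(m.group(1))) {
            return Optional.of(m.group(1));
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Hypothetical log line shaped like the FILE appender pattern above.
        String line = "2024-01-01 12:00:00.000 "
                + "[TID:882c67dc859046c398fbfc5725df9de0.109.17288962842340001] "
                + "[nio-8080-exec-1] INFO  com.example.DemoController:21  - hello";
        System.out.println(extract(line).orElse("no trace id"));
    }
}
```

The extracted value is what goes into the trace ID search fields of the Trace and Log tabs.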
