Reference configuration tutorial: Configure Zeppelin for a Kerberos-Enabled Cluster

Problems encountered when using Zeppelin with Spark and Kerberos

When Ambari creates the Zeppelin service, it also creates a Kerberos account and keytab.

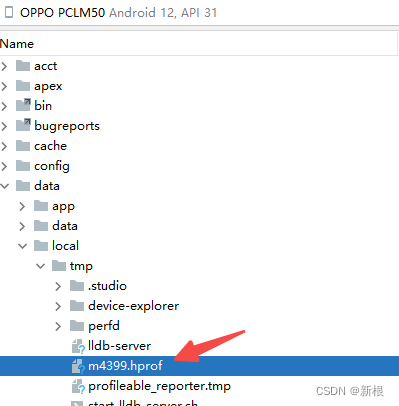

/etc/security/keytabs/zeppelin.server.kerberos.keytab

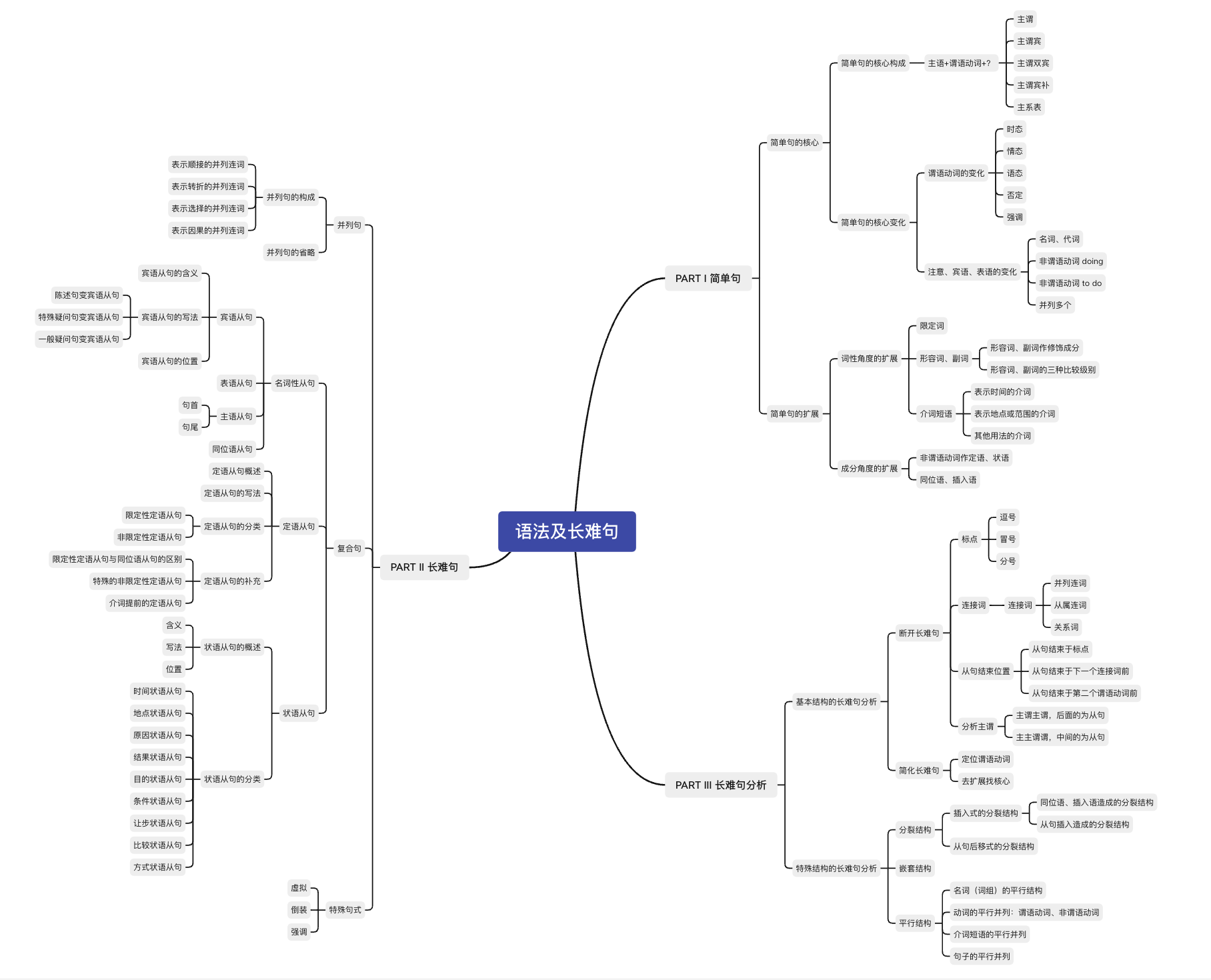

Configuring the keytab for each interpreter

| Interpreter | Keytab Property | Principal Property |
| --- | --- | --- |
| %jdbc | zeppelin.jdbc.keytab.location | zeppelin.jdbc.principal |
| %livy | zeppelin.livy.keytab | zeppelin.livy.principal |
| %sh | zeppelin.shell.keytab.location | zeppelin.shell.principal |
| %spark | spark.yarn.keytab | spark.yarn.principal |

spark.yarn.keytab = /etc/security/keytabs/zeppelin.server.kerberos.keytab

spark.yarn.principal = zeppelin-cluster1@EXAMPLE.COM
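Before saving the interpreter settings, it can help to confirm that the keytab path is readable and that it contains the expected principal. The following is a minimal sketch, not part of the original notes: `check_keytab` is a hypothetical helper name, and it assumes the MIT Kerberos `klist` tool, skipping the principal check when `klist` is not installed.

```shell
# Hypothetical helper: verify a keytab file before pointing an interpreter at it.
# Usage: check_keytab <keytab-path> <principal>
check_keytab() {
  keytab="$1"
  principal="$2"
  # The file must exist and be readable by the current user.
  if [ ! -r "$keytab" ]; then
    echo "keytab not readable: $keytab" >&2
    return 1
  fi
  # If klist is available, confirm the principal appears in the keytab.
  if command -v klist >/dev/null 2>&1; then
    klist -kt "$keytab" | grep -F -q "$principal"
  fi
}
```

For example, `check_keytab /etc/security/keytabs/zeppelin.server.kerberos.keytab zeppelin-cluster1@EXAMPLE.COM` returns a non-zero status if the path is wrong, which is the situation behind the "Key table file ... not found" error.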

Error 0: kinit: command not found

Install the Kerberos client packages:

sudo apt-get install -y krb5-user libpam-krb5 libpam-ccreds libkrb5-dev

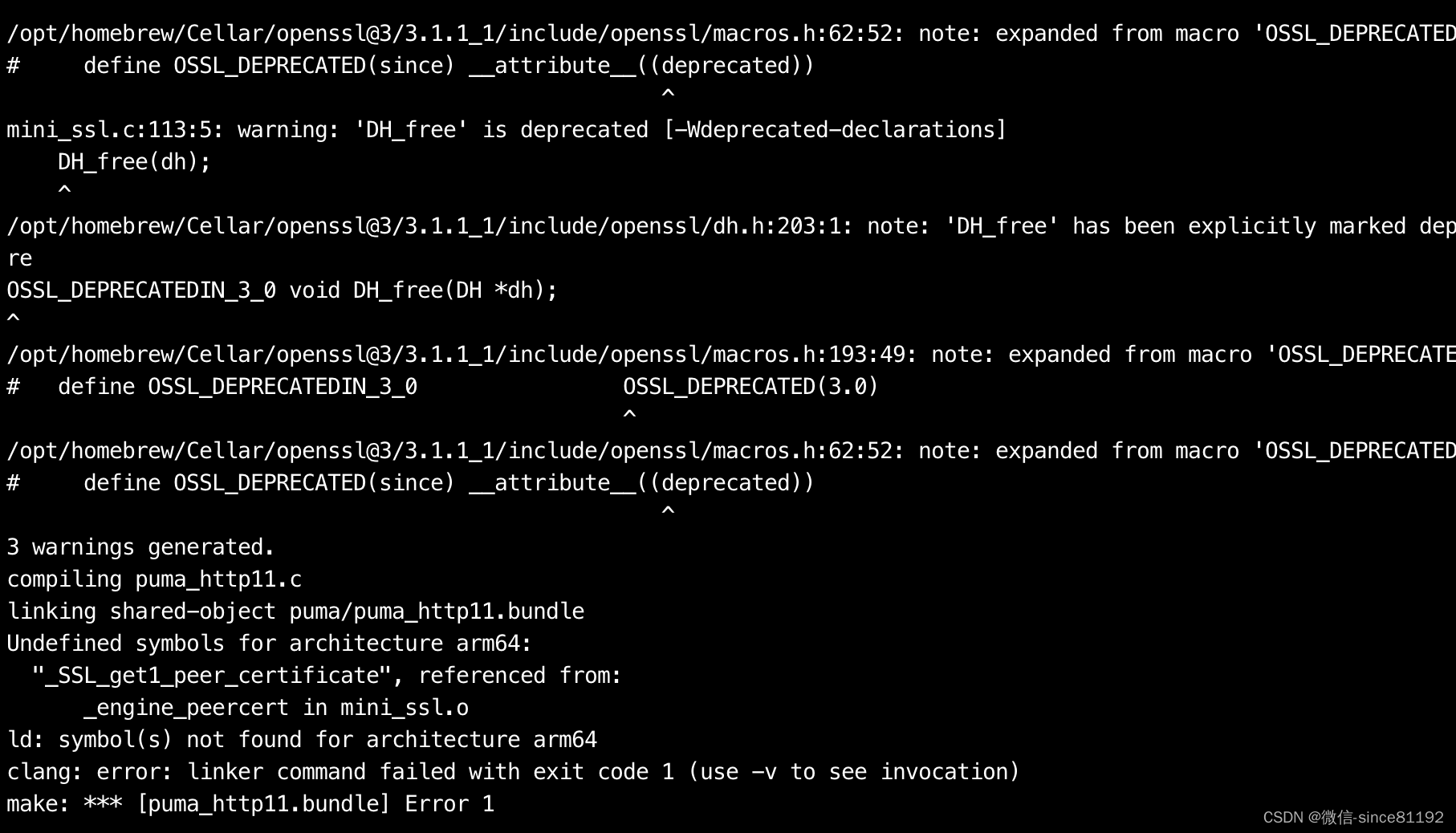
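After installing the packages, a quick check that the client tools are actually on the PATH can rule out this error before going further. This is a small sketch; `missing_commands` is a hypothetical helper name, and the list of tools (`kinit`, `klist`) is an assumption about what the later steps need.

```shell
# Hypothetical helper: print each named command that is not found on PATH.
missing_commands() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

# Prints nothing when both Kerberos client tools are installed.
missing_commands kinit klist
```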

Error 1: kinit: Cannot find KDC for realm "EXAMPLE.COM" while getting initial credentials

Edit the Kerberos client configuration:

sudo vi /etc/krb5.conf

At the bottom of the realms section, add the EXAMPLE.COM realm, and set it as the default realm under [libdefaults]:

    EXAMPLE.COM = {
        admin_server = hadoop0003
        kdc = hadoop0003
    }

    [libdefaults]
    default_realm = EXAMPLE.COM

Error: kinit: Key table file 'spark.yarn.keytab' not found while getting initial credentials

The spark.yarn.keytab value in the interpreter settings was filled in incorrectly. It should be: /etc/security/keytabs/zeppelin.server.kerberos.keytab

Error 2: Caused by: org.apache.spark.SparkException: Master must either be yarn or start with spark, mesos, k8s, or local

In the Spark interpreter settings, set:

spark.master=yarn

spark.submit.deployMode=client

If dependencies fail to load, see Error 8: use packages (spark.jars.packages) together with a local Maven repository.

sudo apt install maven

mvn install:install-file -DgroupId=com.databricks -DartifactId=spark-avro_2.11 -Dversion=4.0.0 -Dfile=/home/gaosong/jars/spark-avro_2.11-4.0.0.jar -Dpackaging=jar

In Maven's settings.xml, change the local repository to the one on host "20" and leave the repo address unchanged. Zeppelin uses the local repository by default.

Time synchronization (Kerberos authentication is sensitive to clock differences between hosts):

sudo apt install ntpdate

sudo ntpdate 172.16.20.20

Check and change the time zone:

timedatectl

sudo timedatectl set-timezone Asia/Shanghai

Error 3: Caused by: org.apache.zeppelin.interpreter.InterpreterException: Fail to open SparkInterpreter

Solution

The underlying exception is: Caused by: java.io.FileNotFoundException: File file:/etc/spark/conf/spark-thrift-fairscheduler.xml does not exist

The default Spark configuration cannot be found on the Zeppelin host. On 172.16.21.30, copy it over from 172.16.20.21:

sudo mkdir -p /etc/spark/conf

cd /etc/spark/conf/

sudo scp root@172.16.20.21:/etc/spark/conf/* ./

Error 4: Caused by: java.lang.IllegalArgumentException: Server has invalid Kerberos principal: rm/hadoop0002@ATHENA.MIT.EDU, expecting: rm/hadoop0002@EXAMPLE.COM

Solution

The default_realm in /etc/krb5.conf is not configured correctly. Change it to the realm in use, EXAMPLE.COM.

Error 4-1: Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=zeppelin, access=WRITE, inode="/user":hdfs:hdfs:drwxr-xr-x

Solution

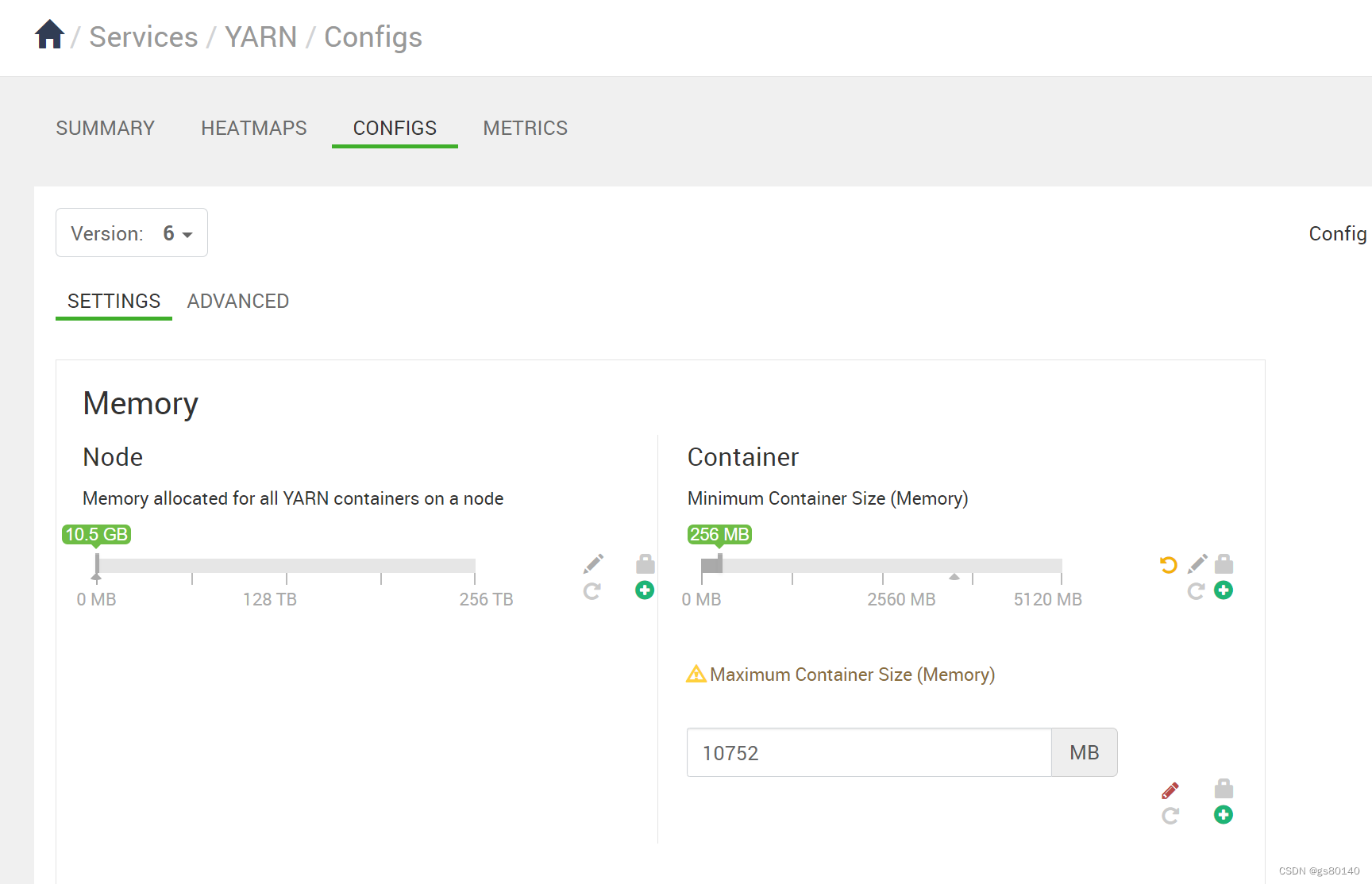

hadoop fs -chmod 777 /user

Error 5: Application is added to the scheduler and is not yet activated. Queue's AM resource limit exceeded. Details : AM Partition = ; AM Resource Request = ; Queue Resource Limit for AM = ; User AM Resource Limit of the queue = ; Queue AM Resource Usage = ;

YARN does not have enough resources.

Solution: edit zeppelin-env.sh

export SPARK_SUBMIT_OPTIONS="--queue default --driver-memory 1G --executor-memory 1G --num-executors 1 --executor-cores 1"

Start: bin/zeppelin-daemon.sh start

Stop: bin/zeppelin-daemon.sh stop

Interpreter properties:

    spark.driver.cores = 1
    spark.driver.memory = 512m
    spark.executor.cores = 1
    spark.executor.memory = 512m
    spark.executor.instances = 1

Zeppelin settings, as a %spark.conf paragraph:

    %spark.conf
    SPARK_HOME /usr/bigtop/3.2.0/usr/lib/spark
    # set driver memory to 512m
    spark.driver.memory 512m
    # set executor number to be 1
    spark.executor.instances 1
    # set executor memory 512m
    spark.executor.memory 512m
    # Any other spark properties can be set here. Here's available spark configuration you can set. (http://spark.apache.org/docs/latest/configuration.html)

None of the changes above had any effect; the error persisted.

Change the Container size to 256M.

This change worked.
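The fields in the Error 5 message suggest a simple comparison: a new ApplicationMaster (AM) is activated only while the AM memory already in use plus the new request stays within the queue's AM limit. The sketch below is a simplified reading of that rule, not YARN's actual implementation. It assumes the Capacity Scheduler, where the limit is derived from `yarn.scheduler.capacity.maximum-am-resource-percent`; the function names and all numbers are hypothetical.

```shell
# Assumed rule: AM limit (MB) = queue memory (MB) * AM percentage / 100.
am_limit_mb() {
  queue_mb="$1"
  am_percent="$2"
  echo $(( queue_mb * am_percent / 100 ))
}

# Succeeds when a new AM request still fits under the limit.
# Usage: am_fits <request-mb> <limit-mb> <used-mb>
am_fits() {
  request_mb="$1"
  limit_mb="$2"
  used_mb="$3"
  [ $(( used_mb + request_mb )) -le "$limit_mb" ]
}

# Hypothetical 4 GB queue with 10 percent reserved for AMs, one 256 MB AM running.
limit=$(am_limit_mb 4096 10)
am_fits 256 "$limit" 256 || echo "a second 256 MB AM would exceed the ${limit} MB limit"
```

Under this reading, smaller containers or a higher AM percentage both make it easier for a request to fit, which is consistent with the smaller Container size resolving the error here.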

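Error 6: main : run as user is admin main : requested yarn user is admin User admin not found

Solution

Add the user on every node:

useradd admin

Error 7: Caused by: java.io.IOException: Failed to connect to server:37861

This problem is not resolved. Troubleshooting so far:

"server" is the former hostname of the Zeppelin machine, which was later renamed to gpuserver. On the Zeppelin machine, 172.16.21.30, inspect the hosts file:

cat /etc/hosts

Suspected cause: the entry 172.16.21.30 server. It was changed to:

172.16.21.30 gpuserver

Error: Caused by: io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection refused: server/172.16.21.30:44419

Firewall: sudo ufw status shows that it is inactive. To disable it: sudo ufw disable. To enable it: sudo ufw enable.

None of the above solved the problem. master yarn-client was then changed to master yarn-cluster, after which the next error appeared.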
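The hosts-file check for Error 7 can be sketched as a small lookup that lists every name a hosts file still maps to a given IP address, which makes a stale name such as "server" easy to spot. `names_for_ip` is a hypothetical helper name, and the second argument defaults to /etc/hosts.

```shell
# Hypothetical helper: print every hostname a hosts file maps to the given IP.
# Usage: names_for_ip <ip> [hosts-file]
names_for_ip() {
  ip="$1"
  hosts_file="${2:-/etc/hosts}"
  awk -v ip="$ip" '
    { sub(/#.*/, "") }                                   # strip trailing comments
    $1 == ip { for (i = 2; i <= NF; i++) print $i }      # names after the address
  ' "$hosts_file"
}
```

Running `names_for_ip 172.16.21.30` on the Zeppelin machine and on the cluster nodes shows whether any of them still resolve the address to the old hostname.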

Error 8: Caused by: java.lang.ClassNotFoundException: org.apache.spark.sql.avro.AvroFileFormat.DefaultSource

Solution:

Comment out spark.jars (#spark.jars) and set:

spark.jars.packages com.databricks:spark-avro_2.11:4.0.0,org.apache.spark:spark-avro_2.12:2.4.5

spark.jars.repositories http://172.16.20.20:8081/repository/maven-public/

Error 9: Path does not exist: hdfs://hadoop0001:8020/user/admin/users.avro

Solution

The file needs to be uploaded to the /user/admin/ directory in HDFS. From the command line, log in first:

kinit hdfs/admin@EXAMPLE.COM

Enter the password 9LDrv2XS, then copy the file:

hdfs dfs -copyFromLocal /usr/bigtop/3.2.0/usr/lib/spark/examples/src/main/resources/users.avro /user/admin/
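The upload step can be made repeatable by checking for the file first. The following sketch assumes a valid Kerberos ticket is already in place (for example from the kinit step) and uses only `hdfs dfs -test -e` and `hdfs dfs -copyFromLocal`; `upload_if_missing` is a hypothetical helper name.

```shell
# Hypothetical helper: copy a local file into an HDFS directory unless it is already there.
# Usage: upload_if_missing <local-file> <hdfs-dir>
upload_if_missing() {
  src="$1"
  dest_dir="$2"
  # Build the target path, tolerating a trailing slash on the directory.
  target="${dest_dir%/}/$(basename "$src")"
  if hdfs dfs -test -e "$target"; then
    echo "already present: $target"
  else
    hdfs dfs -copyFromLocal "$src" "$dest_dir" && echo "uploaded: $target"
  fi
}
```

For the file in Error 9, this would be called as `upload_if_missing /usr/bigtop/3.2.0/usr/lib/spark/examples/src/main/resources/users.avro /user/admin/`.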