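Today's scraping target is Baidu Wenku doc documents, but the requirement is 10 million random documents, each saved as a txt file. Open-source projects for downloading individual Baidu Wenku documents already exist, so the real questions are where to find the URLs of 10 million Baidu Wenku doc documents, and how to fetch them all in a short time.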

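A crawler is an IO-bound workload: most of its time is spent waiting on the network. Coroutines are therefore the most efficient fit, since one process can keep many requests in flight while each one waits, and gevent was the first thing that came to mind.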

First, let's analyze the structure of a Baidu Wenku search URL:

https://wenku.baidu.com/search?word=%BD%CC%CA%A6&org=0&fd=0&lm=0&od=0&pn=10

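It is easy to see that `word` is the search keyword and `pn` is the result offset of the current page (it advances in steps of 10). In testing, `pn` tops out at 760: beyond that value Baidu Wenku shows no further results, which means a single keyword yields at most 760 URLs.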
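To check how the query string is put together, the sample URL can be taken apart with the standard library. A minimal sketch: `inspect_search_url` is a hypothetical helper, and it assumes the keyword is percent-encoded in GBK, which is why `parse_qs` is given `encoding='gbk'`. It also lists the `pn` offsets that the crawler below requests.

```python
from urllib.parse import urlsplit, parse_qs

SAMPLE_URL = ('https://wenku.baidu.com/search?word=%BD%CC%CA%A6'
              '&org=0&fd=0&lm=0&od=0&pn=10')


def inspect_search_url(url):
    """Hypothetical helper: return (decoded keyword, pn offset) of a search URL."""
    query = urlsplit(url).query
    # The keyword bytes are GBK, so decode the percent-escapes with that codec
    params = parse_qs(query, encoding='gbk')
    return params['word'][0], int(params['pn'][0])


keyword, offset = inspect_search_url(SAMPLE_URL)
print(keyword, offset)  # 教师 10

# pn steps by 10 from 0 up to 750: 76 result pages of 10 results each
offsets = list(range(0, 760, 10))
print(len(offsets), offsets[-1])  # 76 750
```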

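Each results page can then be scraped for document links with a regular expression:

```python
url_list = re.findall(r'<a href="([^"]+?)\?from=search', html)  # extract the document URLs from a keyword's results page
```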
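Why the capture group matters: a greedy `(.*)` runs to the last `?from=search` on the line, so when several links share one line of HTML it swallows everything between the first and last link, whereas a lazy capture restricted to non-quote characters stays inside a single `href`. A quick check on a hypothetical HTML fragment (the real Wenku markup and document IDs may differ):

```python
import re

# Hypothetical fragment of a results page with two links on one line
SAMPLE_HTML = (
    '<a href="https://wenku.baidu.com/view/abc123.html?from=search" target="_blank">A</a>'
    '<a href="https://wenku.baidu.com/view/def456.html?from=search" target="_blank">B</a>'
)

GREEDY = re.compile(r'<a href="(.*)\?from=search')    # over-captures across links
SAFE = re.compile(r'<a href="([^"]+?)\?from=search')  # stops inside one href

print(GREEDY.findall(SAMPLE_HTML))  # one long, wrong match
print(SAFE.findall(SAMPLE_HTML))    # the two document URLs
```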

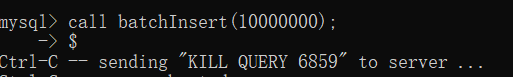

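By looping over the numbers from 1 up to 25,000 and using each number as a keyword to build the search URLs, we can collect well over 10 million URLs to download.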
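As a back-of-the-envelope check, assume every keyword returns all 76 result pages (`pn` = 0, 10, ..., 750) with 10 results each, which many keywords will not. The loop over `range(1, 25000)` covers keywords 1 to 24,999, giving an upper bound of:

$$
\begin{aligned}
\text{search-page requests} &= 24\,999 \times 76 = 1\,899\,924 \\
\text{doc URLs (upper bound)} &= 1\,899\,924 \times 10 = 18\,999\,240
\end{aligned}
$$

So the 10 million target needs only the equivalent of a little over half of the keywords to return full result sets.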

```python
urls = []
for i in range(1, 25000):  # keywords 1 .. 24999
    for x in range(0, 760, 10):  # pn = 0, 10, ..., 750
        url = 'https://wenku.baidu.com/search/main?word={0}&org=0&fd=0&lm=1&od=0&pn={1}'.format(i, x)  # lm=1 returns doc files only
        urls.append(url)
```
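Building all of these strings up front means holding roughly 1.9 million URLs in a list. A minimal alternative sketch, assuming the same query parameters as above: a hypothetical generator `search_urls` that yields the URLs lazily and lets `urllib.parse.urlencode` assemble the query string.

```python
from urllib.parse import urlencode

BASE = 'https://wenku.baidu.com/search/main'


def search_urls(first_kw, last_kw, max_pn=760, step=10):
    """Hypothetical helper: lazily yield search URLs for keywords first_kw .. last_kw - 1."""
    for kw in range(first_kw, last_kw):
        for pn in range(0, max_pn, step):
            # lm=1 restricts results to doc files; dict order fixes the parameter order
            query = urlencode({'word': kw, 'org': 0, 'fd': 0, 'lm': 1, 'od': 0, 'pn': pn})
            yield f'{BASE}?{query}'


gen = search_urls(1, 3)
print(next(gen))  # https://wenku.baidu.com/search/main?word=1&org=0&fd=0&lm=1&od=0&pn=0
```

Iterating with `for url in search_urls(1, 25000): ...` produces the same URLs one at a time, so memory use stays flat regardless of the keyword range.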

OK, next we run the crawler with gevent coroutines:

```python
from gevent import monkey
monkey.patch_all()  # patch the stdlib (sockets etc.) before other modules use it

import re
import time
from urllib.request import urlopen

import gevent

# lm=1 restricts the search results to doc files
SEARCH_URL = 'https://wenku.baidu.com/search/main?word={0}&org=0&fd=0&lm=1&od=0&pn={1}'
# Lazy, quote-bounded capture so one match cannot span several links
DOC_LINK = re.compile(r'<a href="([^"]+?)\?from=search')


def myspider(range1):
    """Crawl keywords [range1 - 50, range1) and write the doc URLs to {range1 // 50}.txt."""
    # Jobs are spaced 50 apart, so each job must cover exactly 50 keywords;
    # a window of 100 would make neighbouring jobs overlap and crawl keywords twice.
    range2 = max(1, range1 - 50)
    wenku_urls = []
    for i in range(range2, range1):
        for x in range(0, 760, 10):
            url = SEARCH_URL.format(i, x)
            try:
                # The timeout keeps a stalled connection from blocking this greenlet forever
                with urlopen(url, timeout=10) as resp:
                    html = resp.read().decode('gb18030')
            except Exception as e:
                print(url, e)
                continue
            wenku_urls += DOC_LINK.findall(html)
    print(range1, len(wenku_urls))
    with open('{0}.txt'.format(range1 // 50), 'w', encoding='utf-8') as f:  # save the URLs
        for doc_url in set(wenku_urls):  # deduplicate before writing
            f.write(doc_url + '\n')


async_time_start = time.time()
jobs = list(range(50, 25001, 50))  # 50, 100, ..., 25000: 500 jobs
print(jobs)
greenlets = [gevent.spawn(myspider, range1) for range1 in jobs]
gevent.joinall(greenlets)
print("Async elapsed time:", time.time() - async_time_start)
```
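Because the jobs are spaced 50 apart (`range(50, 25001, 50)`), each job should cover exactly the 50 keywords below its label; a 100-keyword window makes neighbouring jobs overlap, so most keywords get crawled twice. A quick stdlib check of both partitions, where `keyword_range` and `overlapping_range` are hypothetical helpers that mirror the two window sizes:

```python
def keyword_range(range1, chunk=50):
    """Keywords for the job labelled range1 when the window matches the job spacing."""
    return range(max(1, range1 - chunk), range1)


def overlapping_range(range1):
    """Keywords for the job labelled range1 with a 100-keyword window."""
    return range(1, 50) if range1 == 50 else range(range1 - 100, range1)


jobs = list(range(50, 25001, 50))
covered = [kw for job in jobs for kw in keyword_range(job)]
overlapping = [kw for job in jobs for kw in overlapping_range(job)]

print(len(jobs), len(covered), len(set(covered)))  # 500 24999 24999
print(len(overlapping), len(set(overlapping)))     # 49949 25000
```

With the 50-keyword window every keyword from 1 to 24,999 is crawled exactly once; the 100-keyword window issues about twice as many keyword crawls for the same set of keywords (plus keyword 0).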

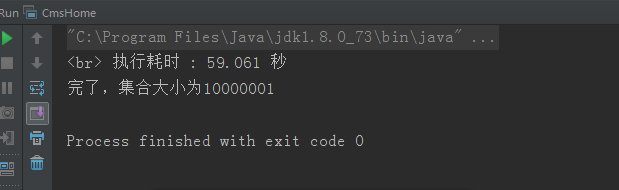

Launching 500 greenlets maxed out the bandwidth instantly, and after an 8-hour slog my potato of a computer finally got through all the URLs.
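If saturating the link like this is undesirable, one option is to cap the number of simultaneous requests with `gevent.pool.Pool`. A minimal sketch, assuming gevent is installed: `fetch_page` is a hypothetical stand-in that sleeps instead of calling `urlopen`, and the pool size of 20 is an arbitrary assumption to tune against your bandwidth.

```python
import gevent
from gevent.pool import Pool

active = 0  # greenlets currently "downloading"
peak = 0    # highest concurrency observed


def fetch_page(pn):
    """Hypothetical stand-in for one HTTP request: track concurrency, then yield."""
    global active, peak
    active += 1
    peak = max(peak, active)
    gevent.sleep(0.01)  # cooperative wait, like a monkey-patched socket read
    active -= 1
    return pn


pool = Pool(20)  # at most 20 greenlets run at the same time
results = pool.map(fetch_page, range(0, 760, 10))  # results come back in input order
print(len(results), peak)  # 76 results; peak never exceeds 20
```

In the crawler, the same pattern would wrap the per-page `urlopen` calls, so total concurrency is set by the pool size rather than by the number of jobs.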