Zero2Hero 4 - Gradient

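- Create a Value class whose attributes hold a variable's value and its gradient, and which supports gradient computation.

- Walk through examples of how gradients are computed in the backward pass.

- Build an MLP model on top of the Value class and implement parameter updates.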

import math

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

The Value class

Supports gradient computation for addition, multiplication, and tanh.

class Value:

    def __init__(self, data, _children=(), _op='', label=''):
        self.data = data
        self.grad = 0.0
        self._backward = lambda: None
        self._prev = set(_children)
        self._op = _op
        self.label = label

    def __repr__(self):
        return f"Value(data={self.data})"

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other), '+')

        def _backward():
            self.grad += 1.0 * out.grad
            other.grad += 1.0 * out.grad
        out._backward = _backward

        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other), '*')

        def _backward():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward = _backward

        return out

    def tanh(self):
        x = self.data
        t = (math.exp(2*x) - 1)/(math.exp(2*x) + 1)
        out = Value(t, (self, ), 'tanh')

        def _backward():
            self.grad += (1 - t**2) * out.grad
        out._backward = _backward

        return out

    def backward(self):
        # topological order all of the children in the graph
        topo = []
        visited = set()

        def build_topo(v):
            if v not in visited:
                visited.add(v)
                for child in v._prev:
                    build_topo(child)
                topo.append(v)
        build_topo(self)

        self.grad = 1.0
        for node in reversed(topo):
            node._backward()
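One detail in the class above is that every _backward accumulates with += instead of assigning. The sketch below illustrates why, using a stripped-down stand-in class (Tiny is a hypothetical name, not part of the notebook) that supports only addition. When a node feeds into an expression twice, both contributions have to be summed.

```python
class Tiny:
    """Stripped-down stand-in for Value that supports only addition (illustrative)."""

    def __init__(self, data, children=()):
        self.data = data
        self.grad = 0.0
        self._backward = lambda: None
        self._prev = set(children)

    def __add__(self, other):
        out = Tiny(self.data + other.data, (self, other))

        def _backward():
            # += sums the contribution of every use of a node
            self.grad += 1.0 * out.grad
            other.grad += 1.0 * out.grad

        out._backward = _backward
        return out

    def backward(self):
        topo, visited = [], set()

        def build_topo(v):
            if v not in visited:
                visited.add(v)
                for child in v._prev:
                    build_topo(child)
                topo.append(v)

        build_topo(self)
        self.grad = 1.0
        for node in reversed(topo):
            node._backward()


a = Tiny(3.0)
b = a + a          # a is used twice, so db/da = 2
b.backward()
print(a.grad)      # 2.0
```

With plain assignment in _backward, the second write would overwrite the first and a.grad would come out as 1.0.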

Example 1: Addition

a = Value(-4.0)

b = Value(2.0)

c = a + b

c.backward()

print("a.grad : ",a.grad)

print("b.grad : ",b.grad)

a.grad : 1.0

b.grad : 1.0

Computation graph

A brief analysis of the backpropagation and gradient computation process:

c._prev : (Value(data=-4.0), Value(data=2.0))

a.grad = 0.0

b.grad = 0.0

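- Create an empty list topo and an empty set visited.

- Call build_topo(c). Since c is not yet in visited, it is added to the set.

- Iterate over the Value objects in c._prev, (Value(data=-4.0), Value(data=2.0)), and call build_topo() on each:

  - Check whether the Value is already in visited; if not, add it.

  - If its _prev is not empty, keep recursing into its children.

  - Once all of its children have been handled, append the Value to topo.

- c itself is appended to topo last, after a and b, so topo ends up as [a, b, c] (the order of a and b may vary because _prev is a set).

- Once every Value in the graph has been visited, initialize c.grad = 1.0.

- Traverse topo in reverse order and call _backward() on each Value to compute its gradient:

  - c._backward() runs a.grad += 1.0 * c.grad and b.grad += 1.0 * c.grad.

  - a._backward and b._backward are the default lambda: None, so they do nothing.

Result: a.grad: 1.0, b.grad: 1.0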
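The traversal described above can be sketched in isolation. In this minimal illustration the graph is a plain dict mapping each node name to its children; the dict and the helper name topo_order are assumptions made for the example, not part of the Value class.

```python
# Each node name maps to the list of its children (its inputs).
graph = {"c": ["a", "b"], "a": [], "b": []}


def topo_order(root, graph):
    """Return the nodes so that every child appears before its parent."""
    topo, visited = [], set()

    def build_topo(v):
        if v not in visited:
            visited.add(v)
            for child in graph[v]:
                build_topo(child)
            topo.append(v)  # appended only after all children are done

    build_topo(root)
    return topo


order = topo_order("c", graph)
print(order)                  # ['a', 'b', 'c']
print(list(reversed(order)))  # ['c', 'b', 'a']
```

Reversing the list puts the output node first, which is why backward() can safely call _backward() node by node: a node's own gradient is complete before it is passed on to its children.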

Example 2: Mixed addition and multiplication

a = Value(2.0, label='a')

b = Value(-3.0, label='b')

c = Value(10.0, label='c')

e = a*b; e.label = 'e'

d = e + c; d.label = 'd'

f = Value(-2.0, label='f')

L = d * f; L.label = 'L'

L

Value(data=-8.0)

Forward pass:

Backward pass:

L.backward()

print("d.grad : ",d.grad)

print("f.grad : ",f.grad)

print("c.grad : ",c.grad)

print("e.grad : ",e.grad)

print("a.grad : ",a.grad)

print("b.grad : ",b.grad)

d.grad : -2.0

f.grad : 4.0

c.grad : -2.0

e.grad : -2.0

a.grad : 6.0

b.grad : -4.0
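The gradients printed above can be cross-checked without any autograd machinery. The following is a minimal sketch that uses central finite differences on plain floats; the helper names forward and numeric_grad are hypothetical and exist only for this check.

```python
def forward(a, b, c, f):
    # Same expression as Example 2: L = (a*b + c) * f
    return (a * b + c) * f


def numeric_grad(fn, args, i, h=1e-6):
    """Central-difference estimate of the derivative of fn w.r.t. args[i]."""
    hi = list(args)
    lo = list(args)
    hi[i] += h
    lo[i] -= h
    return (fn(*hi) - fn(*lo)) / (2 * h)


args = [2.0, -3.0, 10.0, -2.0]  # a, b, c, f
grads = {name: numeric_grad(forward, args, i) for i, name in enumerate("abcf")}

for name, g in grads.items():
    print(name, round(g, 4))
```

The estimates should agree with a.grad = 6.0, b.grad = -4.0, c.grad = -2.0 and f.grad = 4.0 up to floating-point rounding.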

Example 3: MLP

The MLP structure is as follows:

# inputs x1,x2

x1 = Value(2.0, label='x1')

x2 = Value(0.0, label='x2')

# weights w

w1 = Value(-3.0, label='w1')

w2 = Value(1.0, label='w2')

# bias

b = Value(6.8813735870195432, label='b')

# x1*w1 + x2*w2 + b

o1 = x1*w1; o1.label = 'o1'

o2 = x2*w2; o2.label = 'o2'

ho = o1 + o2; ho.label = 'ho'

n = ho + b; n.label='n'

o = n.tanh(); o.label = 'o'

topo = []

visited = set()

def build_topo(v):
    if v not in visited:
        visited.add(v)
        for child in v._prev:
            build_topo(child)
        topo.append(v)

build_topo(o)

for val in reversed(topo):
    print(val.label, ":", val, val.grad)

o : Value(data=0.7071067811865476) 0.0

n : Value(data=0.8813735870195432) 0.0

b : Value(data=6.881373587019543) 0.0

ho : Value(data=-6.0) 0.0

o2 : Value(data=0.0) 0.0

w2 : Value(data=1.0) 0.0

x2 : Value(data=0.0) 0.0

o1 : Value(data=-6.0) 0.0

x1 : Value(data=2.0) 0.0

w1 : Value(data=-3.0) 0.0

Compute the gradient of every node in the computation graph, step by step:

o.grad = 1.0

n.grad = n.grad + (1.0 - o.data**2) * o.grad

n.grad

0.4999999999999999

ho.grad = ho.grad + 1.0 * n.grad

b.grad = b.grad + 1.0 * n.grad

ho.grad, b.grad

(0.4999999999999999, 0.4999999999999999)

o1.grad = o1.grad + 1.0 * ho.grad

o2.grad = o2.grad + 1.0 * ho.grad

o1.grad, o2.grad

(0.4999999999999999, 0.4999999999999999)

x1.grad = x1.grad + w1.data * o1.grad

w1.grad = w1.grad + x1.data * o1.grad

x1.grad, w1.grad

(-1.4999999999999996, 0.9999999999999998)

x2.grad = x2.grad + w2.data * o2.grad

w2.grad = w2.grad + x2.data * o2.grad

x2.grad, w2.grad

(0.4999999999999999, 0.0)

for val in reversed(topo):
    print(val.label, ":", val, val.grad)

o : Value(data=0.7071067811865476) 1.0

n : Value(data=0.8813735870195432) 0.4999999999999999

b : Value(data=6.881373587019543) 0.4999999999999999

ho : Value(data=-6.0) 0.4999999999999999

o2 : Value(data=0.0) 0.4999999999999999

w2 : Value(data=1.0) 0.0

x2 : Value(data=0.0) 0.4999999999999999

o1 : Value(data=-6.0) 0.4999999999999999

x1 : Value(data=2.0) -1.4999999999999996

w1 : Value(data=-3.0) 0.9999999999999998
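The value 0.4999999999999999 for n.grad comes from the local derivative of tanh, 1 - tanh(n)**2. As a small sanity check, the sketch below compares that formula with a central finite difference using only the standard math module; the step size h is an arbitrary choice for illustration.

```python
import math

n = 0.8813735870195432      # pre-activation value from Example 3
o = math.tanh(n)            # forward value of the output node

analytic = 1.0 - o ** 2     # local derivative used in tanh's _backward
h = 1e-6
numeric = (math.tanh(n + h) - math.tanh(n - h)) / (2 * h)

print(o, analytic, numeric)
```

Both derivative values should be close to 0.5, since tanh(n) is 1/sqrt(2) for this particular bias.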

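Extending the Value class

Adds subtraction and exponentiation, along with negation, division, and integer/float powers.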

class Value:

    def __init__(self, data, _children=(), _op='', label=''):
        self.data = data
        self.grad = 0.0
        self._backward = lambda: None
        self._prev = set(_children)
        self._op = _op
        self.label = label

    def __repr__(self):
        return f"Value(data={self.data})"

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other), '+')

        def _backward():
            self.grad += 1.0 * out.grad
            other.grad += 1.0 * out.grad
        out._backward = _backward

        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other), '*')

        def _backward():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward = _backward

        return out

    def __pow__(self, other):
        assert isinstance(other, (int, float)), "only supporting int/float powers for now"
        out = Value(self.data**other, (self,), f'**{other}')

        def _backward():
            self.grad += other * (self.data ** (other - 1)) * out.grad
        out._backward = _backward

        return out

    def __rmul__(self, other): # other * self
        return self * other

    def __truediv__(self, other): # self / other
        return self * other**-1

    def __neg__(self): # -self
        return self * -1

    def __sub__(self, other): # self - other
        return self + (-other)

    def __radd__(self, other): # other + self
        return self + other

    def tanh(self):
        x = self.data
        t = (math.exp(2*x) - 1)/(math.exp(2*x) + 1)
        out = Value(t, (self, ), 'tanh')

        def _backward():
            self.grad += (1 - t**2) * out.grad
        out._backward = _backward

        return out

    def exp(self):
        x = self.data
        out = Value(math.exp(x), (self, ), 'exp')

        def _backward():
            self.grad += out.data * out.grad
        out._backward = _backward

        return out

    def backward(self):
        topo = []
        visited = set()

        def build_topo(v):
            if v not in visited:
                visited.add(v)
                for child in v._prev:
                    build_topo(child)
                topo.append(v)
        build_topo(self)

        self.grad = 1.0
        for node in reversed(topo):
            node._backward()

Example 4

# inputs x1,x2

x1 = Value(2.0, label='x1')

x2 = Value(0.0, label='x2')

# weights w1,w2

w1 = Value(-3.0, label='w1')

w2 = Value(1.0, label='w2')

# bias of the neuron

b = Value(6.8813735870195432, label='b')

# x1*w1 + x2*w2 + b

h1 = x1*w1; h1.label = 'h1'

h2 = x2*w2; h2.label = 'h2'

h = h1 + h2; h.label = 'h'

n = h + b; n.label = 'n'

# ----

e = (2*n).exp()

o = (e - 1) / (e + 1)

# ----

o.label = 'o'

o.backward()
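Example 4 replaces the single tanh node with the expression (e - 1) / (e + 1), where e = exp(2n). The sketch below checks on plain floats that this expression matches math.tanh; the helper name tanh_via_exp and the sample inputs are illustrative assumptions.

```python
import math


def tanh_via_exp(x):
    # Same decomposition as in Example 4: e = exp(2x), o = (e - 1) / (e + 1)
    e = math.exp(2 * x)
    return (e - 1) / (e + 1)


samples = [-2.0, 0.0, 0.8813735870195432, 3.0]
for x in samples:
    print(x, tanh_via_exp(x), math.tanh(x))
```

Because the two forms compute the same function, backpropagating through the decomposed graph should give the same input gradients as Example 3, just spread over more nodes.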

Computation graph

A simple MLP implementation

class Neuron:

    def __init__(self, nin):
        self.w = [Value(np.random.uniform(-1,1)) for _ in range(nin)]
        self.b = Value(np.random.uniform(-1,1))

    def __call__(self, x):
        # w * x + b
        act = sum((wi*xi for wi, xi in zip(self.w, x)), self.b)
        out = act.tanh()
        return out

    def parameters(self):
        return self.w + [self.b]

class Layer:

    def __init__(self, nin, nout):
        self.neurons = [Neuron(nin) for _ in range(nout)]

    def __call__(self, x):
        outs = [n(x) for n in self.neurons]
        return outs[0] if len(outs) == 1 else outs

    def parameters(self):
        return [p for neuron in self.neurons for p in neuron.parameters()]

class MLP:

    def __init__(self, nin, nouts):
        sz = [nin] + nouts
        self.layers = [Layer(sz[i], sz[i+1]) for i in range(len(nouts))]

    def __call__(self, x):
        for layer in self.layers:
            x = layer(x)
        return x

    def parameters(self):
        return [p for layer in self.layers for p in layer.parameters()]
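Since each Neuron holds nin weights plus one bias, the number of parameters follows directly from the layer sizes. The sketch below computes it from the same sz list that MLP builds; the function name count_params is hypothetical.

```python
def count_params(nin, nouts):
    # Each layer has sz[i+1] neurons, each with sz[i] weights and 1 bias.
    sz = [nin] + nouts
    return sum((sz[i] + 1) * sz[i + 1] for i in range(len(nouts)))


total = count_params(3, [4, 4, 1])
print(total)  # 41
```

For MLP(3, [4, 4, 1]) this gives 16 + 20 + 5 = 41, which should equal the length of the list returned by parameters().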

# initialize the model

m = MLP(3, [4, 4, 1])

# training data

xs = [
    [2.0, 3.0, -1.0],
    [3.0, -1.0, 0.5],
    [0.5, 1.0, 1.0],
    [1.0, 1.0, -1.0],
]

ys = [1.0, -1.0, -1.0, 1.0] # desired targets

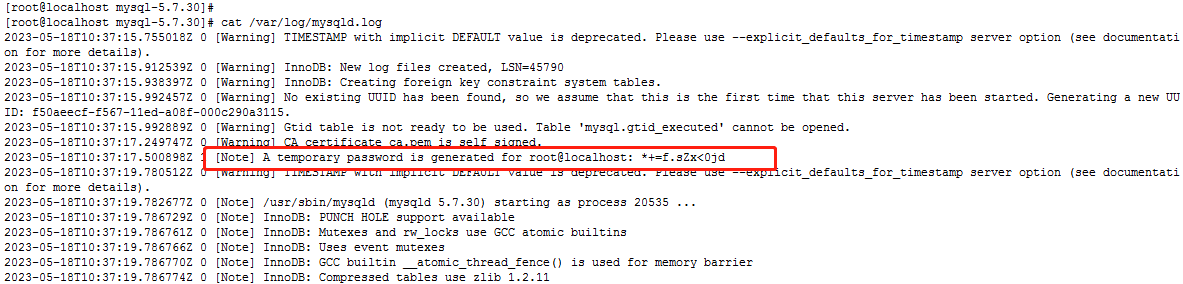

Training the MLP

loss_history = []

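for k in range(10000):
    # forward pass (m is the MLP created above)
    ypred = [m(x) for x in xs]
    loss = sum((yout - ygt)**2 for ygt, yout in zip(ys, ypred))

    # backward pass: reset gradients first, since _backward accumulates with +=
    for p in m.parameters():
        p.grad = 0.0
    loss.backward()

    # update: gradient descent step with learning rate 0.1
    for p in m.parameters():
        p.data += -0.1 * p.grad

    loss_history.append(loss.data)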

plt.figure(figsize=(5,3))

plt.plot(loss_history)
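The update line in the training loop, p.data += -0.1 * p.grad, is plain gradient descent. To show the rule on its own, here is a minimal sketch on a one-parameter quadratic loss; the loss (w - 3)**2, the starting point, and the step count are assumptions chosen only for illustration.

```python
w = 0.0      # single parameter, arbitrary starting point
lr = 0.1     # same learning rate as the training loop
history = []

for step in range(50):
    loss = (w - 3.0) ** 2      # forward pass
    grad = 2.0 * (w - 3.0)     # derivative of the loss w.r.t. w
    w += -lr * grad            # same update rule as p.data += -0.1 * p.grad
    history.append(loss)

print(w, history[0], history[-1])
```

Each step shrinks the distance to the minimum at w = 3 by a factor of 0.8, so the recorded loss decreases monotonically, much like the curve plotted from loss_history is expected to.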