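Whatever model we plug this backbone into, the work is generally built on top of this base structure. For example, when EfficientNet replaces ResNet in Yolact, some sources online claim the model size drops to roughly 1/4 to 1/5 of the original at comparable accuracy.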

The backbone structure (as printed for efficientnet-b0) is listed below.

self._conv_stem

Conv2dStaticSamePadding((conv): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), bias=False) )

self._blocks

The model parameters are defined in utils.py -> efficientnet() -> blocks_args; all blocks are derived automatically from these parameters:

blocks_args = ['r1_k3_s11_e1_i32_o16_se0.25', 'r2_k3_s22_e6_i16_o24_se0.25','r2_k5_s22_e6_i24_o40_se0.25', 'r3_k3_s22_e6_i40_o80_se0.25','r3_k5_s11_e6_i80_o112_se0.25', 'r4_k5_s22_e6_i112_o192_se0.25','r1_k3_s11_e6_i192_o320_se0.25',]

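Each string encodes its fields as follows (r = number of repeats, k = kernel size, s = stride, e = expansion ratio, i/o = input/output channels; the trailing se0.25 is the squeeze-and-excitation ratio):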

args = ['r%d' % block.num_repeat,'k%d' % block.kernel_size,'s%d%d' % (block.strides[0], block.strides[1]),'e%s' % block.expand_ratio,'i%d' % block.input_filters,'o%d' % block.output_filters]
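To make the string format concrete, here is a minimal parsing sketch. `decode_block_string` and the `BlockArgs` tuple are hypothetical simplifications written for illustration, not the library's own decoder in utils.py, and the sketch keeps only the first digit of the `s` field, which is enough for the strings listed above (all are `s11` or `s22`).

```python
import re
from typing import NamedTuple


class BlockArgs(NamedTuple):
    # Illustrative stand-in for the library's block arguments.
    num_repeat: int
    kernel_size: int
    stride: int
    expand_ratio: int
    input_filters: int
    output_filters: int
    se_ratio: float


def decode_block_string(s: str) -> BlockArgs:
    """Parse e.g. 'r1_k3_s11_e1_i32_o16_se0.25' into its fields."""
    opts = {}
    for part in s.split('_'):
        # Split each token into its alphabetic key and numeric value.
        m = re.match(r'([a-z]+)([\d.]+)$', part)
        if m is None:
            raise ValueError(f'unrecognised token {part!r} in {s!r}')
        opts[m.group(1)] = m.group(2)
    return BlockArgs(
        num_repeat=int(opts['r']),
        kernel_size=int(opts['k']),
        stride=int(opts['s'][0]),  # 's22' -> 2 (sketch keeps the first digit only)
        expand_ratio=int(opts['e']),
        input_filters=int(opts['i']),
        output_filters=int(opts['o']),
        se_ratio=float(opts.get('se', 0)),
    )


if __name__ == '__main__':
    print(decode_block_string('r2_k5_s22_e6_i24_o40_se0.25'))
```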

When the model is built, a set of (width, depth, resolution, dropout) coefficients is selected according to the model name passed in (see utils.py); for example, efficientnet-b0 uses (1.0, 1.0, 224, 0.2):

def efficientnet_params(model_name):
    """ Map EfficientNet model name to parameter coefficients. """
    params_dict = {
        # Coefficients:   width, depth, res, dropout
        'efficientnet-b0': (1.0, 1.0, 224, 0.2),
        'efficientnet-b1': (1.0, 1.1, 240, 0.2),
        'efficientnet-b2': (1.1, 1.2, 260, 0.3),
        'efficientnet-b3': (1.2, 1.4, 300, 0.3),
        'efficientnet-b4': (1.4, 1.8, 380, 0.4),
        'efficientnet-b5': (1.6, 2.2, 456, 0.4),
        'efficientnet-b6': (1.8, 2.6, 528, 0.5),
        'efficientnet-b7': (2.0, 3.1, 600, 0.5),
        'efficientnet-b8': (2.2, 3.6, 672, 0.5),
        'efficientnet-l2': (4.3, 5.3, 800, 0.5),
    }
    return params_dict[model_name]

When the blocks are generated later, depth is multiplied by num_repeat from blocks_args to decide how many sub-blocks each stage gets, and width is multiplied by the filters (i.e. channels) to decide the number of output filters. The two functions responsible are:

def round_filters(filters, global_params):
    """ Calculate and round number of filters based on width multiplier. """
    multiplier = global_params.width_coefficient
    if not multiplier:
        return filters
    divisor = global_params.depth_divisor
    min_depth = global_params.min_depth
    filters *= multiplier
    min_depth = min_depth or divisor
    new_filters = max(min_depth, int(filters + divisor / 2) // divisor * divisor)
    if new_filters < 0.9 * filters:  # prevent rounding by more than 10%
        new_filters += divisor
    return int(new_filters)

import math

def round_repeats(repeats, global_params):
    """ Round number of repeats based on depth multiplier. """
    multiplier = global_params.depth_coefficient
    if not multiplier:
        return repeats
    return int(math.ceil(multiplier * repeats))

For efficientnet-b0 (depth coefficient 1.0) the ModuleList therefore contains (r1+r2+r2+r3+r3+r4+r1) = 1+2+2+3+3+4+1 = 16 blocks.
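The scaling rules above can be checked end to end with a small sketch that expands the base stage table into one entry per MBConvBlock. It re-implements round_filters and round_repeats in simplified form (coefficients passed directly instead of global_params, depth_divisor assumed to be 8, min_depth unset); `expand_blocks` and `BASE_STAGES` are hypothetical names for illustration. It also encodes two details visible in the printout: only the first block of a stage uses the stage's stride and input channels, and the SE bottleneck width is computed from the block's input channels, not from the expanded ones.

```python
import math

# Base (b0) stage table transcribed from blocks_args:
# (repeats, kernel, stride, expand, in_ch, out_ch, se_ratio)
BASE_STAGES = [
    (1, 3, 1, 1, 32, 16, 0.25),
    (2, 3, 2, 6, 16, 24, 0.25),
    (2, 5, 2, 6, 24, 40, 0.25),
    (3, 3, 2, 6, 40, 80, 0.25),
    (3, 5, 1, 6, 80, 112, 0.25),
    (4, 5, 2, 6, 112, 192, 0.25),
    (1, 3, 1, 6, 192, 320, 0.25),
]


def round_filters(filters, width, divisor=8):
    """Scale a channel count by width and round to a multiple of divisor."""
    filters *= width
    new_filters = max(divisor, int(filters + divisor / 2) // divisor * divisor)
    if new_filters < 0.9 * filters:  # never round down by more than 10%
        new_filters += divisor
    return int(new_filters)


def round_repeats(repeats, depth):
    """Scale a stage's repeat count by depth, always rounding up."""
    return int(math.ceil(depth * repeats))


def expand_blocks(width, depth):
    """Return one dict per MBConvBlock, in ModuleList order."""
    blocks = []
    for r, k, s, e, cin, cout, se in BASE_STAGES:
        cin, cout = round_filters(cin, width), round_filters(cout, width)
        for i in range(round_repeats(r, depth)):
            # Repeats after the first use stride 1 and input == output channels.
            block_in = cin if i == 0 else cout
            blocks.append({
                'in': block_in,
                'expanded': block_in * e,          # _expand_conv output (no expand conv when e == 1)
                'se': max(1, int(block_in * se)),  # _se_reduce output, based on input channels
                'out': cout,
                'kernel': k,
                'stride': s if i == 0 else 1,
            })
    return blocks
```

`expand_blocks(1.0, 1.0)` yields 16 entries that line up with the b0 ModuleList printed below (block 1, for example, goes 16 -> 96 channels with SE width 4, outputs 24 channels and has stride 2), while the b4 coefficients `expand_blocks(1.4, 1.8)` yield 32 blocks.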

ModuleList(

(0): MBConvBlock(

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1,), groups=32, bias=False) )

(_bn1): BatchNorm2d(32, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(32, 8, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(8, 32, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(32, 16, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(16, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(1): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(16, 96, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(96, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(96, 96, kernel_size=(3, 3), stride=(2,), groups=96, bias=False) )

(_bn1): BatchNorm2d(96, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(96, 4, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(4, 96, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(96, 24, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(24, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(2): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(24, 144, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(144, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(144, 144, kernel_size=(3, 3), stride=(1, 1), groups=144, bias=False) )

(_bn1): BatchNorm2d(144, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(144, 6, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(6, 144, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(144, 24, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(24, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(3): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(24, 144, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(144, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(144, 144, kernel_size=(5, 5), stride=(2,), groups=144, bias=False) )

(_bn1): BatchNorm2d(144, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(144, 6, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(6, 144, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(144, 40, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(40, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(4): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(40, 240, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(240, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(240, 240, kernel_size=(5, 5), stride=(1, 1), groups=240, bias=False) )

(_bn1): BatchNorm2d(240, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(240, 10, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(10, 240, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(240, 40, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(40, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(5): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(40, 240, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(240, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(240, 240, kernel_size=(3, 3), stride=(2,), groups=240, bias=False) )

(_bn1): BatchNorm2d(240, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(240, 10, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(10, 240, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(240, 80, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(80, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(6): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(80, 480, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(480, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(480, 480, kernel_size=(3, 3), stride=(1, 1), groups=480, bias=False) )

(_bn1): BatchNorm2d(480, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(480, 20, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(20, 480, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(480, 80, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(80, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(7): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(80, 480, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(480, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(480, 480, kernel_size=(3, 3), stride=(1, 1), groups=480, bias=False) )

(_bn1): BatchNorm2d(480, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(480, 20, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(20, 480, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(480, 80, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(80, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(8): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(80, 480, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(480, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(480, 480, kernel_size=(5, 5), stride=(1,), groups=480, bias=False) )

(_bn1): BatchNorm2d(480, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(480, 20, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(20, 480, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(480, 112, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(112, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(9): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(112, 672, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(672, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(672, 672, kernel_size=(5, 5), stride=(1, 1), groups=672, bias=False) )

(_bn1): BatchNorm2d(672, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(672, 28, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(28, 672, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(672, 112, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(112, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(10): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(112, 672, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(672, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(672, 672, kernel_size=(5, 5), stride=(1, 1), groups=672, bias=False) )

(_bn1): BatchNorm2d(672, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(672, 28, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(28, 672, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(672, 112, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(112, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(11): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(112, 672, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(672, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(672, 672, kernel_size=(5, 5), stride=(2,), groups=672, bias=False) )

(_bn1): BatchNorm2d(672, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(672, 28, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(28, 672, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(672, 192, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(192, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(12): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(192, 1152, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(1152, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(1152, 1152, kernel_size=(5, 5), stride=(1, 1), groups=1152, bias=False) )

(_bn1): BatchNorm2d(1152, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(1152, 48, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(48, 1152, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(1152, 192, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(192, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(13): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(192, 1152, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(1152, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(1152, 1152, kernel_size=(5, 5), stride=(1, 1), groups=1152, bias=False) )

(_bn1): BatchNorm2d(1152, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(1152, 48, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(48, 1152, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(1152, 192, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(192, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(14): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(192, 1152, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(1152, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(1152, 1152, kernel_size=(5, 5), stride=(1, 1), groups=1152, bias=False) )

(_bn1): BatchNorm2d(1152, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(1152, 48, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(48, 1152, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(1152, 192, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(192, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

(15): MBConvBlock(

(_expand_conv): Conv2dStaticSamePadding( (conv): Conv2d(192, 1152, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn0): BatchNorm2d(1152, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_depthwise_conv): Conv2dStaticSamePadding( (conv): Conv2d(1152, 1152, kernel_size=(3, 3), stride=(1,), groups=1152, bias=False) )

(_bn1): BatchNorm2d(1152, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_se_reduce): Conv2dStaticSamePadding( (conv): Conv2d(1152, 48, kernel_size=(1, 1), stride=(1, 1)) )

(_se_expand): Conv2dStaticSamePadding( (conv): Conv2d(48, 1152, kernel_size=(1, 1), stride=(1, 1)) )

(_project_conv): Conv2dStaticSamePadding( (conv): Conv2d(1152, 320, kernel_size=(1, 1), stride=(1, 1), bias=False) )

(_bn2): BatchNorm2d(320, eps=0.001, momentum=0.010000000000000009, affine=True, track_running_stats=True)

(_swish): MemoryEfficientSwish()

)

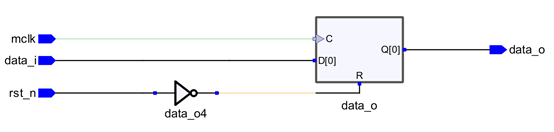

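)

Note that blocks 1, 3, 5 and 11 all have stride [2, 2], i.e. they are the blocks that halve the spatial resolution. Feature maps are usually tapped at these transitions: the output of the block just before each of them (blocks 0, 2, 4 and 10) is the last map at the old resolution. Adding the output of the final block (block 15) gives 5 levels in total, the multi-scale inputs for an FPN. The backbone exposes these 5 levels, but EfficientDet actually uses only the last 3 (P3, P4, P5); P6 and P7 are generated by further downsampling P5. These 5 levels (P3 to P7) then pass through the BiFPN and finally feed the class and box prediction layers. That part is not covered in detail here; see backbone.py in GitHub - zylo117/Yet-Another-EfficientDet-Pytorch: The pytorch re-implement of the official efficientdet with SOTA performance in real time and pretrained weights. The remaining _conv_head, _fc and some layers not listed here, such as the average pooling, are not used in EfficientDet and have simply been deleted.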
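The tapping rule can be checked against the printout with a short sketch. The stride list is transcribed by hand from the b0 depthwise convolutions above; `feature_taps` and `pyramid_strides` are hypothetical helpers written for illustration, not functions from zylo117's repository, and the P6/P7 entries only model the fact that each extra level halves the resolution of the one before it.

```python
# Depthwise-conv strides of the 16 b0 blocks, read off the printout.
B0_BLOCK_STRIDES = [1, 2, 1, 2, 1, 2, 1, 1, 1, 1, 1, 2, 1, 1, 1, 1]
STEM_STRIDE = 2  # _conv_stem uses stride (2, 2)


def feature_taps(block_strides, stem_stride=STEM_STRIDE):
    """Return (block_index, output_stride) for each tapped feature map."""
    taps = []
    total = stem_stride
    for i, s in enumerate(block_strides):
        if s == 2 and i > 0:
            # Take the map just before the resolution drops,
            # i.e. the output of the previous block.
            taps.append((i - 1, total))
        total *= s
    taps.append((len(block_strides) - 1, total))  # output of the final block
    return taps


def pyramid_strides(taps, extra_levels=2):
    """P3..P5 from the last three taps; P6, P7 by halving resolution again."""
    strides = [stride for _, stride in taps[-3:]]
    for _ in range(extra_levels):
        strides.append(strides[-1] * 2)
    return strides


if __name__ == '__main__':
    taps = feature_taps(B0_BLOCK_STRIDES)
    print(taps)
    print(pyramid_strides(taps))
```

For a 512×512 input, output strides 8 to 128 mean P3 to P7 maps of 64, 32, 16, 8 and 4 pixels per side. For b0 the tapped P3, P4 and P5 blocks (4, 10 and 15) output 40, 112 and 320 channels, matching their _project_conv layers in the printout.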

self._conv_head

Conv2dStaticSamePadding((conv): Conv2d(320, 1280, kernel_size=(1, 1), stride=(1, 1), bias=False) )

self._fc

Linear(in_features=1280, out_features=1000, bias=True)

Main reference implementations:

GitHub - zylo117/Yet-Another-EfficientDet-Pytorch: The pytorch re-implement of the official efficientdet with SOTA performance in real time and pretrained weights.

GitHub - lukemelas/EfficientNet-PyTorch: A PyTorch implementation of EfficientNet and EfficientNetV2 (coming soon!)