深度相机和激光雷达是智能汽车上常用的传感器。但深度相机具有特征难以提取,容易受到视角影响。激光雷达存在数据不够直观且容易被吸收,从而丢失信息。因此在自动驾驶领域,需要对于不同传感器做数据的融合和传感器的标定。

相机内参标定

内参标定的原理和方法比较简单,由于只有焦距是未知量,因此计算焦距,求得内参。

相机的畸变

畸变属于成像的几何失真,它是由于焦平面上不同区域对影像的放大率不同而形成的画面扭曲变形现象。在内参标定时需要获取相机的畸变向量矩阵。

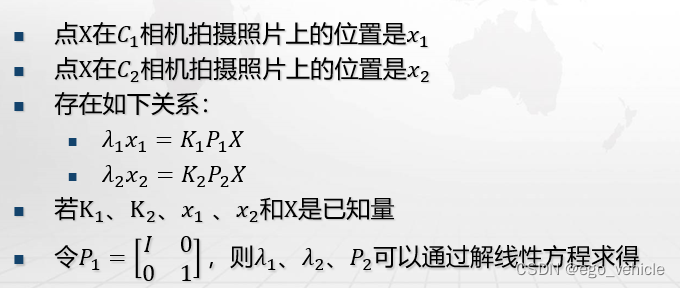

相机的外参标定

利用Atuoware获取融合标定参数

- 启动16线激光雷达和深度相机

- 录制标定过程bag包(过程中定时改变标定板位置)

- 编译标定工具箱calibration_camera_lidar

- 编译非线性库nlopt

- 利用calibration_camera_lidar进行联合标定

- 保存外参矩阵、内参矩阵和畸变矩阵

- 编写点云投影融合图像程序

- 完成标定与验证

具体的操作可以参考:【Autoware】激光雷达-摄像头联合标定1- Calibration Tool Kit - 简书 (jianshu.com)![]() https://www.jianshu.com/p/5e5902b46f3d

https://www.jianshu.com/p/5e5902b46f3d

编写雷达相机融合标定程序

以下是基于松灵小车的深度相机和激光雷达融合代码:

创建.h文件,包含头文件,初始化发布、订阅者和点云图像融合类。

#pragma once#include <vector>

#include <deque>#include "ros/ros.h"

#include <sensor_msgs/PointCloud2.h>#include <image_transport/image_transport.h>

#include "opencv2/opencv.hpp"

#include <cv_bridge/cv_bridge.h>#include <pcl/point_types.h>//支持点类型头文件

#include <pcl/point_cloud.h> //支持点类型头文件

#include <pcl_conversions/pcl_conversions.h>

#include <pcl/filters/passthrough.h>

#include <pcl/common/transforms.h>using namespace std;class ILProjection {private:// 节点句柄ros::NodeHandle nh;// 图像订阅发布image_transport::ImageTransport it;// 图像订阅者image_transport::Subscriber image_sub;// 雷达订阅者ros::Subscriber laser_sub;// 图像发布者image_transport::Publisher image_pub;// 雷达发布者ros::Publisher laser_pub;// 图像融合显示订阅者image_transport::Subscriber imageShow_sub;//使用队列实现同步std::deque<sensor_msgs::ImageConstPtr> imageDeque;std::deque<sensor_msgs::PointCloud2ConstPtr> laserDeque;cv::Mat extrinsic_mat; // 外参矩阵cv::Mat camera_mat; // 内参矩阵cv::Mat dist_coeff; // 畸变矩阵cv::Mat rotate_mat; // 旋转矩阵cv::Mat transform_vec; // 平移向量Eigen::Matrix4d laserToImageTransform; // 坐标变化矩阵int color[21][3] = {{255, 0, 0}, {255, 69, 0}, {255, 99, 71}, {255, 140, 0}, {255, 165, 0}, {238, 173, 14},{255, 193, 37}, {255, 255, 0}, {255, 236, 139},{202, 255, 112}, {0, 255, 0}, {84, 255, 159},{127, 255, 212}, {0, 229, 238}, {152, 245, 255},{178, 223, 238}, {126, 192, 238}, {28, 134, 238},{0, 0, 255}, {72, 118, 255}, {122, 103, 238} };float color_distance; //根据平面距离对激光雷达点进行着色的步长(z)public:ILProjection();~ILProjection();void imageCb(const sensor_msgs::ImageConstPtr &msg);void laserCb(const sensor_msgs::PointCloud2ConstPtr &msg);// 加载相机参数//加载相机参数void loadProjectionParam();// 判断是否能进行映射void doProjection();// 实现映射void projection(pcl::PointCloud<pcl::PointXYZI>::Ptr&ccloud,cv::Mat&img);// 激光雷达滤波void filterCloudPoint(pcl::PointCloud<pcl::PointXYZI>::Ptr& cloud_in,pcl::PointCloud<pcl::PointXYZI>::Ptr& cloud_out);//openCV显示图像void imageShowCb(const sensor_msgs::ImageConstPtr &msg);void imageShow();

};创建主函数文件,写融合标定类的实现方法

#include "ILProjection.h"

#include <iostream>// TODO 相机参数文件路径需要修改 (绝对路径)

// 小车路径: /home/agilex/songling-car-task2/src/image_laser_projection/param/Calibrate-data

const string path = "/home/ubuntu/songling-car-task2/src/image_laser_projection/param/Calibrate-data";ILProjection::ILProjection():it(nh) {color_distance = 1.2;loadProjectionParam();image_sub = it.subscribe("/camera/color/image_raw", 1, &ILProjection::imageCb, this);laser_sub = nh.subscribe<sensor_msgs::PointCloud2>("/rslidar_points",1,&ILProjection::laserCb, this);image_pub = it.advertise("/image_laser_projection/image", 1);laser_pub = nh.advertise<sensor_msgs::PointCloud2>("/image_laser_projection/laser", 1);

}ILProjection::~ILProjection() {}void ILProjection::imageCb(const sensor_msgs::ImageConstPtr &msg) {imageDeque.push_back(msg);doProjection();

}void ILProjection::laserCb(const sensor_msgs::PointCloud2ConstPtr &msg) {laserDeque.push_back(msg);doProjection();

}void ILProjection::loadProjectionParam(){//打开标定结果文件cv::FileStorage fs_read(path, cv::FileStorage::READ);fs_read["CameraExtrinsicMat"] >> extrinsic_mat; //从文件里读取4x4外参矩阵fs_read["CameraMat"] >> camera_mat; //从文件里读取3x3相机内参矩阵fs_read["DistCoeff"] >> dist_coeff; //从文件里读取1x5畸变矩阵fs_read.release(); //关闭文件// 下列代码段从外参矩阵中读取旋转矩阵、平移向量rotate_mat=cv::Mat(3, 3, cv::DataType<double>::type); // 将旋转矩阵赋值成3x3矩阵// 取前三行前三列for(int i=0;i<3;i++){for(int j=0;j<3;j++){rotate_mat.at<double>(i,j)=extrinsic_mat.at<double>(j,i);}}transform_vec=cv::Mat(3, 1, cv::DataType<double>::type); //将平移向量赋值成3x1矩阵transform_vec.at<double>(0)=extrinsic_mat.at<double>(1,3);transform_vec.at<double>(1)=extrinsic_mat.at<double>(2,3);transform_vec.at<double>(2)=-extrinsic_mat.at<double>(0,3);for(int i=0;i<4;i++){for(int j=0;j<4;j++){laserToImageTransform(i,j) = extrinsic_mat.at<double>(j,i);}}

}void ILProjection::doProjection() {// 当图像或雷达数据队列为空时,直接退出。if(imageDeque.empty() || laserDeque.empty()) {return;}// 从队列中获取图像消息sensor_msgs::ImageConstPtr imageMsgPtr = imageDeque.front();// 定义图像接收指针cv_bridge::CvImagePtr cv_ptr;try {// 接收消息cv_ptr = cv_bridge::toCvCopy(imageMsgPtr, sensor_msgs::image_encodings::BGR8);}catch (cv_bridge::Exception &e) {ROS_ERROR("cv_bridge exception: %s", e.what());return;}//从队列中获取激光雷达消息sensor_msgs::PointCloud2ConstPtr laserMsgPtr = laserDeque.front();// 定义点云pcl::PointCloud<pcl::PointXYZI>::Ptr lasercloud (new pcl::PointCloud<pcl::PointXYZI>);pcl::PointCloud<pcl::PointXYZI>::Ptr imageCloud (new pcl::PointCloud<pcl::PointXYZI>);pcl::PointCloud<pcl::PointXYZI>::Ptr laserFilteredCloud (new pcl::PointCloud<pcl::PointXYZI>);// 将接受到的雷达点云消息转化为接收点云数据类型pcl::fromROSMsg(*laserMsgPtr,*lasercloud);// 点云滤波filterCloudPoint(lasercloud,laserFilteredCloud);// 将激光雷达点云由激光雷达坐标转为摄像机坐标pcl::transformPointCloud(*laserFilteredCloud, *imageCloud, laserToImageTransform);// 删除较老的数据 (时间戳转为浮点数后,越大则越新)double imageTime = imageMsgPtr->header.stamp.toSec();double laserTime = laserMsgPtr->header.stamp.toSec();if(imageTime > laserTime) {laserDeque.pop_front();}else {imageDeque.pop_front();}// 调用融合函数projection(imageCloud,cv_ptr->image);

}void ILProjection::projection(pcl::PointCloud<pcl::PointXYZI>::Ptr&ccloud,cv::Mat&img) {std::vector<cv::Point3d> lidar_points;std::vector<cv::Scalar> dis_color;lidar_points.reserve(ccloud->size()+1);dis_color.reserve(ccloud->size()+1);//保留相机正前方的点(z>0)for(int i=0;i<=ccloud->points.size();i++){if(ccloud->points[i].z > 0) {lidar_points.push_back(cv::Point3d(ccloud->points[i].x, ccloud->points[i].y, ccloud->points[i].z));int color_order = int(ccloud->points[i].z / color_distance);if(color_order > 20) {color_order = 20;}dis_color.push_back(cv::Scalar(color[color_order][2], color[color_order][1], color[color_order][0]));}}vector<cv::Point2d> projectedPoints; //该vector用来存储投影过后的二维点// 投影核心函数// 第一个参数为原始3d点// 第二个参数为旋转矩阵// 第三个参数为平移向量// 第四个参数为相机内参矩阵// 第五个参数为投影结果cv::Mat rMat = cv::Mat::eye(3, 3, CV_64FC1);cv::Mat tVec = cv::Mat::zeros(3, 1, CV_64FC1);cv::projectPoints(lidar_points,rMat,tVec,camera_mat,dist_coeff,projectedPoints);//遍历投影结果for (int i = 0; i<projectedPoints.size(); i++){cv::Point2d p = projectedPoints[i];// 由于图像尺寸为480x640,所以投影后坐标处于图像范围内的点才在图像中进行标示if (p.y<480&&p.y>=0&&p.x<640&&p.x>=0){//cout << p.x << " " << p.y << " " << dis_color[i] << endl;//标示的方式是在对应位置画圆圈cv::circle(img, p, 1, dis_color[i], 2, 8, 0);}}//利用cv_bridge将opencv图像转为ros图像sensor_msgs::ImagePtr msg=cv_bridge::CvImage(std_msgs::Header(),"bgr8",img).toImageMsg(); image_pub.publish(msg); //image_pub是在函数外定义的一个ros图像发布器,将标示后的图像发布

}void ILProjection::filterCloudPoint(pcl::PointCloud<pcl::PointXYZI>::Ptr& cloud_in,pcl::PointCloud<pcl::PointXYZI>::Ptr& cloud_out) {pcl::PointCloud<pcl::PointXYZI>::Ptr temp (new pcl::PointCloud<pcl::PointXYZI>);//创建X轴滤波器对象pcl::PassThrough<pcl::PointXYZI> xPass;//输入点云xPass.setInputCloud(cloud_in); //设置在Z轴方向上进行滤波xPass.setFilterFieldName("x");//设置滤波范围为0~1,在范围之外的点会被剪除xPass.setFilterLimits(0.0, 1000.0);//是否反向过滤,也就是保留范围以外的点,默认为false//xPass.setFilterLimitsNegative (true);xPass.setFilterLimitsNegative(false);//执行滤波xPass.filter(*temp);//创建Y轴滤波器对象pcl::PassThrough<pcl::PointXYZI> yPass;//输入点云yPass.setInputCloud(temp); //设置在Z轴方向上进行滤波yPass.setFilterFieldName("y");//设置滤波范围为0~1,在范围之外的点会被剪除yPass.setFilterLimits(-0.6, 0.6);//是否反向过滤,也就是保留范围以外的点,默认为false//yPass.setFilterLimitsNegative (true);yPass.setFilterLimitsNegative(false);//执行滤波yPass.filter(*cloud_out);

}void ILProjection::imageShowCb(const sensor_msgs::ImageConstPtr &msg){cv_bridge::CvImagePtr image_msg_bridge;image_msg_bridge = cv_bridge::toCvCopy(msg,sensor_msgs::image_encodings::BGR8);cv::Mat image_cv = image_msg_bridge->image;cv::imshow("view",image_cv);cv::waitKey(3);

}void ILProjection::imageShow(){imageShow_sub = it.subscribe("/image_laser_projection/image",1, &ILProjection::imageShowCb, this);

}

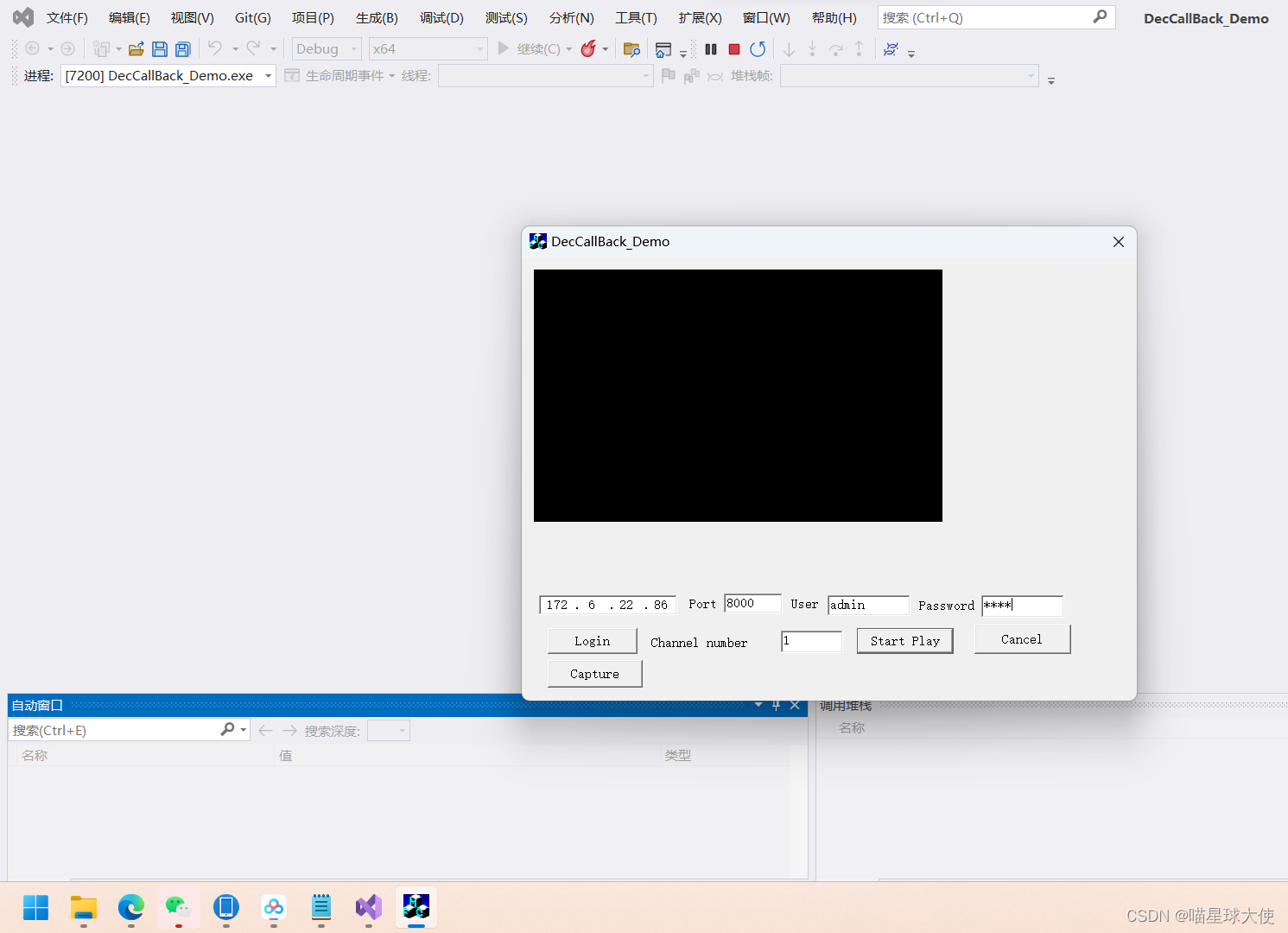

利用弹窗,直接显示融合标定后的结果

#include "ILProjection.h"int main(int argc,char *argv[])

{// 初始化节点ros::init(argc,argv,"image_laser_projection");// 创建句柄ros::NodeHandle nh;ILProjection imageLaserProjection;imageLaserProjection.imageShow();ros::spin();return 0;

}配置库文件,运行。

实现效果

代码仓库参考:

git@e.coding.net:cqu-top-one/SmartCarSoftware/songling-car-task2.git