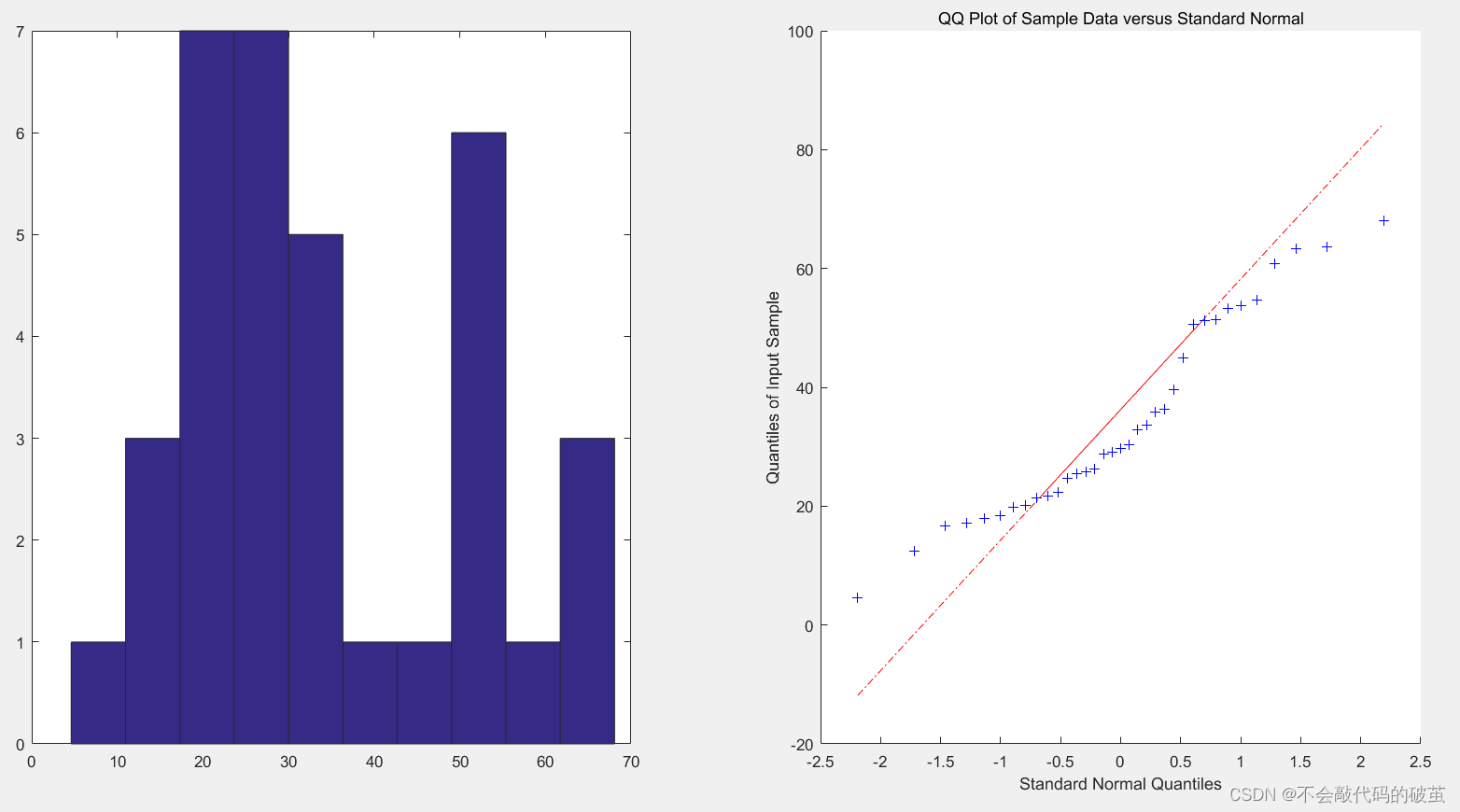

Multivariate regression with gradient descent (SGD optimization, randomly generated dataset)

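The code is below. I ran quite a few experiments, and how well it works depends heavily on three things: the quality of the dataset, the choice of learning rate, and the batch size used for SGD.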
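As a rough illustration of why the learning rate matters so much when the features are on a scale of about 100 (as in the data generated in this post), here is a minimal sketch in plain Python. It is separate from the post's script: the four input values, the true weight 3 and the three candidate learning rates are all assumptions picked for the illustration, and it uses full-batch gradient descent on a single feature so the arithmetic stays visible.

```python
# Tiny one-feature regression: y = 3 * x, with inputs on a scale of roughly 100.
# All values here are illustrative assumptions, not taken from the post's data.
xs = [-150.0, -50.0, 50.0, 150.0]
ys = [3.0 * x for x in xs]


def fit(lr, steps=100):
    """Full-batch gradient descent on (mean squared error) / 2, starting from w = 0."""
    w = 0.0
    for _ in range(steps):
        # d/dw of mean((w*x - y)^2) / 2  is  mean((w*x - y) * x)
        grad = sum((w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w


results = {lr: fit(lr) for lr in (1e-6, 1e-4, 3e-4)}
for lr, w in results.items():
    print(f"lr={lr:g}  w after 100 steps = {w:.4g}")
```

With these inputs the mean of x² is 12500, so the iteration is only stable for learning rates below 2 / 12500 = 1.6e-4. The smallest rate is still well short of 3 after 100 steps, the middle one lands on it, and the largest one diverges.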

Let's take a look at the code:

import torch

from torch.autograd import Variable

from torch.utils import data

X =torch.normal(0,100,(100,4))

w=torch.tensor([1,2,3,4])
Y =torch.matmul(X, w.type(dtype=torch.float)) + torch.normal(0, 1, (100, ))

print(Y)

Y=Y.reshape((-1, 1))

print(Y.type())

print(w.type())

print(X.type())

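# Wrap X and Y in a DataLoader that yields shuffled mini-batches of 32 samples
def load_array(data_arrays, batch_size, is_train=True):
    dataset = data.TensorDataset(*data_arrays)
    return data.DataLoader(dataset, batch_size, shuffle=is_train)

# Note: data_iter and model are created here but not used by the hand-written loop further down
data_iter = load_array((X, Y), 32)
model = torch.nn.Sequential(torch.nn.Linear(4, 1))

# Initial guess for the weights (this rebinds w, which held the true weights [1, 2, 3, 4] above)
w=torch.tensor([1,0.1,0.2,0.3])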

b=torch.randn(1)

w=Variable(w,requires_grad=True)
b=Variable(b,requires_grad=True)

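print(w)

def loss_function(w,x,y,choice,b):
    # choice == 1: absolute error (with bias b); anything else: squared error (without bias)
    if choice==1:
        return torch.abs(torch.sum(w@x)+b-y)
    else:
        # Square the residual so positive and negative errors do not cancel out
        return torch.pow(torch.sum(w@x)-y,2)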

index=0
n=1000

batch=32

learning_rating=0.000001

def SGD(batch):
    # Average the negative gradient of the squared error over `batch` consecutive samples
    global index
    grad=torch.zeros(4)
    loss=torch.zeros(1)
    for j in range(batch):
        if index>=len(X):
            index=0  # wrap around to the start of the dataset
        residual=torch.sum(w.data@X[index])-Y[index]
        grad=grad-residual*X[index]
        loss=loss+loss_function(w.data,X[index],Y[index],2,b)
        index=index+1
    return grad/batch,loss/batch

while n:
    n=n-1
    grad,loss=SGD(batch)
    # grad already holds the negative gradient, so adding it moves w downhill
    w.data=w.data+learning_rating*grad
    print("w:",w)
    print("loss:",loss)
    # b is not updated: the generated data has no intercept term
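For comparison, the same regression can be sketched with PyTorch's own autograd, `DataLoader`, and `torch.optim.SGD` instead of a hand-written gradient. This is not the script above, just one possible way to do it under stated assumptions: the fixed seed, `bias=False` (the generated data has no intercept), `MSELoss`, and 200 epochs are choices made for this illustration, and the names `true_w`, `loader`, and `learned_w` are hypothetical.

```python
import torch
from torch.utils import data

torch.manual_seed(0)  # fixed seed so the run is repeatable (an assumption)

# Same synthetic setup as the post: 100 samples, 4 features, unit-variance noise.
true_w = torch.tensor([1.0, 2.0, 3.0, 4.0])
X = torch.normal(0, 100, (100, 4))
Y = (X @ true_w + torch.normal(0, 1, (100,))).reshape(-1, 1)

# Shuffled mini-batches of 32 samples.
loader = data.DataLoader(data.TensorDataset(X, Y), batch_size=32, shuffle=True)

# bias=False is an assumption: the data above was generated without an intercept.
model = torch.nn.Linear(4, 1, bias=False)
loss_fn = torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=1e-6)

for epoch in range(200):
    for xb, yb in loader:
        optimizer.zero_grad()          # clear gradients from the previous step
        loss = loss_fn(model(xb), yb)  # mean squared error on this mini-batch
        loss.backward()                # autograd computes d(loss)/d(weights)
        optimizer.step()               # w <- w - lr * gradient

learned_w = model.weight.detach().flatten()
print("learned w:", learned_w)
print("last batch loss:", loss.item())
```

Because the features have a standard deviation of 100, the small learning rate of 1e-6 is what keeps the updates stable here. The learned weights should end up close to `[1, 2, 3, 4]`.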