目录

内容

Python代码

C++ 代码

workspace 文件

BUILD文件

Java 代码

maven文件

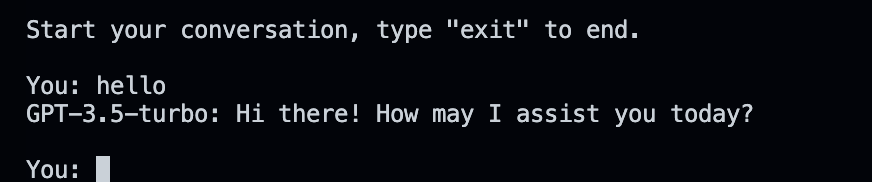

执行效果

内容

This post implements a looping GPT question-and-answer session on top of the OpenAI API and records the conversation in a file.

Python code

```python
# -*- coding: utf-8 -*-
# Note: openai.ChatCompletion is the legacy interface (openai < 1.0).
import time
from pathlib import Path

import openai

# Set the OpenAI API key
openai.api_key = ""


class ChatBot:
    def __init__(self, model):
        self.user = "\nYou: "
        self.bot = "GPT-3.5-turbo: "
        self.model = model
        self.question_list = []
        self.answer_list = []
        self.text = ''
        self.turns = []
        self.last_result = ''

    def save_dialogue(self):
        # Generate a timestamp and create a filename for saving the dialogue
        timestamp = time.strftime("%Y%m%d-%H%M-%S", time.localtime())
        file_name = 'output/Chat_' + timestamp + '.md'
        Path(file_name).parent.mkdir(parents=True, exist_ok=True)
        # Save the dialogue to the file
        with open(file_name, "w", encoding="utf-8") as f:
            for q, a in zip(self.question_list, self.answer_list):
                f.write(f"You: {q}\nGPT-3.5-turbo: {a}\n\n")
        print("Dialogue content saved to file: " + file_name)

    def generate(self):
        print('\nStart your conversation, type "exit" to end.')
        while True:
            question = input(self.user)
            if question == 'exit':
                break
            # Prefix the question with the context built from recent turns
            prompt = self.bot + self.text + self.user + question
            try:
                response = openai.ChatCompletion.create(
                    model=self.model,
                    messages=[{"role": "user", "content": prompt}],
                )
                result = response["choices"][0]["message"]["content"].strip()
                print(result)
                # Record the pair only on success so both lists stay aligned
                self.question_list.append(question)
                self.answer_list.append(result)
                self.last_result = result
                self.turns += [question, result]
                # Keep only the last 10 turns as context
                self.text = " ".join(self.turns[-10:])
            except Exception as exc:
                print(exc)
        self.save_dialogue()


if __name__ == '__main__':
    bot = ChatBot('gpt-3.5-turbo')
    bot.generate()
```

This code implements a chatbot that converses with the GPT-3.5-turbo model. It runs as follows:

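- First, we import the libraries we need: `openai`, `time`, and `pathlib`.

- We set the OpenAI API key so that the GPT-3.5-turbo model can be called.

- We define a class named `ChatBot` that handles the interaction with the GPT-3.5-turbo model.

- The class's `__init__` method initializes the chatbot: the prompt prefixes for the user and the bot (`self.user` and `self.bot`), plus other state such as the question list and the answer list.

- The `save_dialogue` method saves the chat history to a file. It first builds a file name containing a timestamp, then writes the contents of the question and answer lists to that file.

- The `generate` method drives the conversation. It reads the user's questions in a loop, builds a prompt from the recent turns and the new question, calls GPT-3.5-turbo through the OpenAI API, and records the question and the model's answer in their lists. The loop runs until the user types "exit", at which point `save_dialogue` is called to write the conversation to the file.

- In the main block (`if __name__ == '__main__'`), we create a `ChatBot` instance and call `generate` to start the conversation with GPT-3.5-turbo.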
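The flow in the list above can be exercised without network access. The following is a minimal sketch, not the code from this post: `build_prompt`, `format_dialogue`, `run_chat`, and the `ask_model` callback are hypothetical names introduced here, and `ask_model` stands in for the `openai.ChatCompletion.create` call so that no API key is needed. It illustrates three things: the context window of the last 10 turns, recording a question only once its answer has arrived, and the `You:` / `GPT-3.5-turbo:` layout of the saved file.

```python
import time
from pathlib import Path


def build_prompt(turns, question, max_turns=10):
    """Join the most recent turns and the new question into one prompt string."""
    context = " ".join(turns[-max_turns:])
    return "GPT-3.5-turbo: " + context + "\nYou: " + question


def format_dialogue(questions, answers):
    """Render paired questions and answers in the saved-file layout."""
    return "".join(
        f"You: {q}\nGPT-3.5-turbo: {a}\n\n" for q, a in zip(questions, answers)
    )


def run_chat(ask_model, user_inputs, max_turns=10):
    """Drive the loop over scripted inputs; ask_model stands in for the API call."""
    questions, answers, turns = [], [], []
    for question in user_inputs:
        if question == "exit":
            break
        try:
            answer = ask_model(build_prompt(turns, question, max_turns))
        except Exception as exc:
            print(exc)
            continue  # skip failed turns so the two lists stay aligned
        questions.append(question)
        answers.append(answer)
        turns += [question, answer]
    return questions, answers


def save_dialogue(questions, answers, out_dir="output"):
    """Write the transcript to out_dir/Chat_<timestamp>.md and return the path."""
    timestamp = time.strftime("%Y%m%d-%H%M-%S", time.localtime())
    path = Path(out_dir) / f"Chat_{timestamp}.md"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(format_dialogue(questions, answers), encoding="utf-8")
    return path


if __name__ == "__main__":
    # echo_model is a hypothetical stand-in, not a real model call
    def echo_model(prompt):
        return "echo: " + prompt.rsplit("You: ", 1)[-1]

    qs, ans = run_chat(echo_model, ["hello", "how are you", "exit", "ignored"])
    print(format_dialogue(qs, ans), end="")
```

Passing the model call in as a function keeps the loop testable. Skipping failed turns means the question and answer lists always have the same length when the transcript is written.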

C++ code

```cpp
// main.cpp
#include <cpprest/http_client.h>
#include <cpprest/filestream.h>
#include <cpprest/json.h>

#include <ctime>
#include <fstream>
#include <iomanip>
#include <iostream>
#include <sstream>
#include <string>
#include <vector>

using namespace utility;
using namespace web;
using namespace web::http;
using namespace web::http::client;
using namespace concurrency::streams;

namespace {
const std::string api_key = "";
}

class ChatBot {
public:
    /**
     * @brief Construct a new ChatBot object
     *
     * @param model The OpenAI GPT model to use (e.g., "gpt-3.5-turbo")
     */
    explicit ChatBot(std::string model) : model_(model) {}

    /**
     * @brief Save the dialogue content to a Markdown file
     *
     * @note This function saves the generated dialogue to a Markdown file named
     *       "Chat_timestamp.md" in the "output" directory. std::ofstream does
     *       not create directories, so "output" must already exist.
     */
    void SaveDialogue() {
        auto timestamp = std::time(nullptr);
        std::stringstream ss;
        ss << "output/Chat_" << std::put_time(std::localtime(&timestamp), "%Y%m%d-%H%M-%S") << ".md";
        std::string file_name = ss.str();
        std::ofstream out(file_name);
        if (!out) {
            std::cerr << "Could not create the output file.\n";
            return;
        }
        for (size_t i = 0; i < question_list_.size(); ++i) {
            out << "You: " << question_list_[i] << "\nGPT-3.5-turbo: " << answer_list_[i] << "\n\n";
        }
        out.close();
        std::cout << "Dialogue content saved to file: " << file_name << std::endl;
    }

    /**
     * @brief Generate answers to the user's questions using the GPT model
     *
     * @note This function prompts the user for their questions, generates answers
     *       using the GPT model, and saves the dialogue to a file when the user exits.
     */
    void Generate() {
        std::string question;
        std::cout << "\nStart your conversation, type \"exit\" to end." << std::endl;
        while (true) {
            std::cout << "\nYou: ";
            // Stop on end of input as well as on "exit"
            if (!std::getline(std::cin, question) || question == "exit") {
                break;
            }
            auto answer = GetAnswer(question);
            if (!answer.empty()) {
                std::cout << "GPT-3.5-turbo: " << answer << std::endl;
                // Store the pair together so both lists keep the same length
                question_list_.push_back(question);
                answer_list_.push_back(answer);
            }
        }
        SaveDialogue();
    }

private:
    /**
     * @brief Get the GPT model's answer to the given question
     *
     * @param question The user's question
     * @return The GPT model's answer as a string
     *
     * @note This function sends the user's question to the GPT model and
     *       retrieves the model's answer as a string. If an error occurs, it
     *       returns an empty string.
     */
    std::string GetAnswer(const std::string& question) {
        // gpt-3.5-turbo is a chat model, so the request goes to the chat completions endpoint
        http_client client(U("https://api.openai.com/v1/chat/completions"));
        http_request request(methods::POST);
        request.headers().add(U("Authorization"), utility::conversions::to_string_t("Bearer " + api_key));
        request.headers().add(U("Content-Type"), U("application/json"));

        // A chat request carries a list of messages instead of a single prompt
        json::value message;
        message[U("role")] = json::value::string(U("user"));
        message[U("content")] = json::value::string(utility::conversions::to_string_t(question));

        json::value body;
        body[U("model")] = json::value::string(utility::conversions::to_string_t(model_));
        body[U("messages")] = json::value::array(std::vector<json::value>{message});
        body[U("max_tokens")] = json::value::number(50);
        body[U("n")] = json::value::number(1);
        body[U("stop")] = json::value::array(std::vector<json::value>{json::value::string(U("\n"))});
        request.set_body(body);

        try {
            auto response = client.request(request).get();
            auto response_body = response.extract_json().get();
            auto result = response_body.at(U("choices")).as_array()[0]
                              .at(U("message")).at(U("content")).as_string();
            return utility::conversions::to_utf8string(result);
        } catch (const std::exception& e) {
            std::cerr << "Error: " << e.what() << std::endl;
            return "";
        }
    }

    std::string model_;
    std::vector<std::string> question_list_;
    std::vector<std::string> answer_list_;
};

int main() {
    ChatBot bot("gpt-3.5-turbo");
    bot.Generate();
    return 0;
}
```

Since I have been using Bazel a lot lately, here is a Bazel-based way to build the C++ code.

WORKSPACE file

```starlark
load("@bazel_tools//tools/build_defs/repo:http.bzl", "http_archive")

# Add cpprestsdk as a dependency
http_archive(
    name = "cpprestsdk",
    build_file = "@//:cpprestsdk.BUILD",
    sha256 = "106e8a5a4f9667f329b8f277f8f25e1f2d31f1a7c7f9e1f366c5b1e3af2f8c4c",
    strip_prefix = "cpprestsdk-2.10.18",
    urls = ["https://github.com/microsoft/cpprestsdk/archive/v2.10.18.zip"],
)
```

BUILD file

```starlark
cc_binary(
    name = "chatbot",
    srcs = ["main.cpp"],
    deps = [
        "@cpprestsdk//:cpprest",
    ],
    linkopts = [
        # Required for cpprestsdk
        "-lboost_system",
        "-lssl",
        "-lcrypto",
    ],
)
```

cpprestsdk.BUILD file (create this file in the same directory as WORKSPACE)

```starlark
load("@rules_cc//cc:defs.bzl", "cc_library")

# Paths are relative to the archive root after strip_prefix is applied;
# the cpprestsdk sources live under Release/.
cc_library(
    name = "cpprest",
    hdrs = glob(["Release/include/**/*.h"]),
    srcs = glob([
        "Release/src/http/client/*.cpp",
        "Release/src/http/common/*.cpp",
        "Release/src/http/listener/*.cpp",
        "Release/src/json/*.cpp",
        "Release/src/pplx/*.cpp",
        "Release/src/uri/*.cpp",
        "Release/src/utilities/*.cpp",
        "Release/src/websockets/client/*.cpp",
    ]),
    includes = ["Release/include"],
    copts = ["-std=c++11"],
    linkopts = [
        "-lboost_system",
        "-lssl",
        "-lcrypto",
    ],
    visibility = ["//visibility:public"],
)
```

Java code

```java
// ChatBot.java
package com.example; // matches the mainClass configured in the Maven file

import okhttp3.*;
import com.google.gson.*;

import java.io.*;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class ChatBot {
    private static final String API_KEY = "your_openai_api_key_here";

    private String model;
    private ArrayList<String> questionList;
    private ArrayList<String> answerList;

    public ChatBot(String model) {
        this.model = model;
        this.questionList = new ArrayList<>();
        this.answerList = new ArrayList<>();
    }

    public void saveDialogue() {
        LocalDateTime timestamp = LocalDateTime.now();
        DateTimeFormatter formatter = DateTimeFormatter.ofPattern("yyyyMMdd-HHmm-ss");
        String fileName = "output/Chat_" + timestamp.format(formatter) + ".md";
        // Create the output directory if it does not exist yet
        new File("output").mkdirs();
        try (BufferedWriter writer = new BufferedWriter(new FileWriter(fileName))) {
            for (int i = 0; i < questionList.size(); ++i) {
                writer.write("You: " + questionList.get(i) + "\nGPT-3.5-turbo: " + answerList.get(i) + "\n\n");
            }
            System.out.println("Dialogue content saved to file: " + fileName);
        } catch (IOException e) {
            System.err.println("Could not create the output file.");
            e.printStackTrace();
        }
    }

    public void generate() {
        System.out.println("\nStart your conversation, type \"exit\" to end.");
        BufferedReader reader = new BufferedReader(new InputStreamReader(System.in));
        String question;
        while (true) {
            System.out.print("\nYou: ");
            try {
                question = reader.readLine();
            } catch (IOException e) {
                System.err.println("Error reading input.");
                e.printStackTrace();
                break;
            }
            // readLine() returns null at end of input
            if (question == null || "exit".equalsIgnoreCase(question)) {
                break;
            }
            String answer = getAnswer(question);
            if (!answer.isEmpty()) {
                System.out.println("GPT-3.5-turbo: " + answer);
                // Store the pair together so both lists keep the same length
                questionList.add(question);
                answerList.add(answer);
            }
        }
        saveDialogue();
    }

    private String getAnswer(String question) {
        OkHttpClient client = new OkHttpClient();
        Gson gson = new Gson();
        String requestBodyJson = gson.toJson(new ChatRequest(model, question, 50, 1, "\n"));
        RequestBody requestBody = RequestBody.create(requestBodyJson, MediaType.parse("application/json"));
        // gpt-3.5-turbo is a chat model, so the request goes to the chat completions endpoint
        Request request = new Request.Builder()
                .url("https://api.openai.com/v1/chat/completions")
                .addHeader("Authorization", "Bearer " + API_KEY)
                .addHeader("Content-Type", "application/json")
                .post(requestBody)
                .build();
        try (Response response = client.newCall(request).execute()) {
            if (!response.isSuccessful()) {
                throw new IOException("Unexpected code " + response);
            }
            String responseBodyJson = response.body().string();
            ChatResponse chatResponse = gson.fromJson(responseBodyJson, ChatResponse.class);
            return chatResponse.choices.get(0).message.content.trim();
        } catch (IOException e) {
            System.err.println("Error: " + e.getMessage());
            e.printStackTrace();
            return "";
        }
    }

    public static void main(String[] args) {
        ChatBot bot = new ChatBot("gpt-3.5-turbo");
        bot.generate();
    }

    // The JSON model classes are named ChatRequest/ChatResponse so they do not
    // shadow okhttp3.RequestBody and okhttp3.ResponseBody.
    private static class Message {
        String role;
        String content;

        Message(String role, String content) {
            this.role = role;
            this.content = content;
        }
    }

    private static class ChatRequest {
        String model;
        List<Message> messages;
        int max_tokens;
        int n;
        String stop;

        ChatRequest(String model, String question, int max_tokens, int n, String stop) {
            this.model = model;
            this.messages = Collections.singletonList(new Message("user", question));
            this.max_tokens = max_tokens;
            this.n = n;
            this.stop = stop;
        }
    }

    private static class ChatResponse {
        ArrayList<Choice> choices;

        private static class Choice {
            Message message;
        }
    }
}
```

Maven file

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.example</groupId>
    <artifactId>gpt-chatbot</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
    </properties>

    <dependencies>
        <dependency>
            <groupId>com.squareup.okhttp3</groupId>
            <artifactId>okhttp</artifactId>
            <version>4.9.3</version>
        </dependency>
        <dependency>
            <groupId>com.google.code.gson</groupId>
            <artifactId>gson</artifactId>
            <version>2.8.9</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.8.0</version>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-jar-plugin</artifactId>
                <version>3.1.0</version>
                <configuration>
                    <archive>
                        <manifest>
                            <addClasspath>true</addClasspath>
                            <classpathPrefix>lib/</classpathPrefix>
                            <mainClass>com.example.ChatBot</mainClass>
                        </manifest>
                    </archive>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-dependency-plugin</artifactId>
                <version>3.1.1</version>
                <executions>
                    <execution>
                        <id>copy-dependencies</id>
                        <phase>package</phase>
                        <goals>
                            <goal>copy-dependencies</goal>
                        </goals>
                        <configuration>
                            <outputDirectory>${project.build.directory}/lib</outputDirectory>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>
```

Execution result