Problems configuring HBase with Kerberos

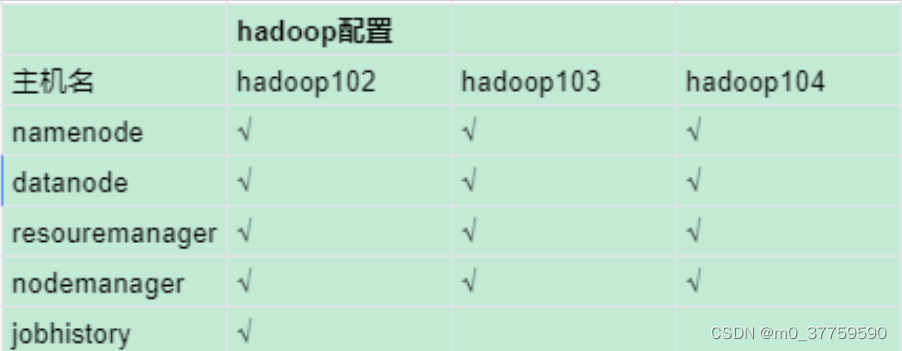

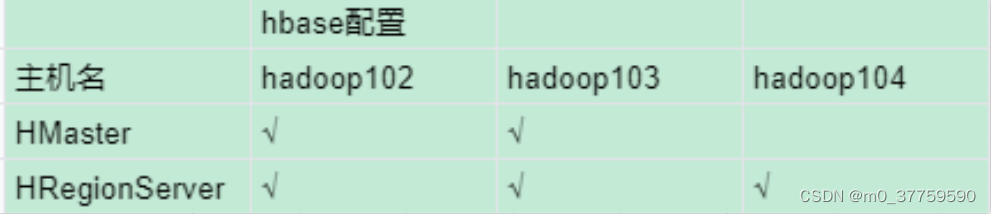

Environment:

Problem description

I wanted to configure HBase HA with Kerberos on top of a Hadoop HA cluster, and ran into the following error:

org.apache.zookeeper.KeeperException$NoAuthException: KeeperErrorCode = NoAuth for /hbase/running
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:113)
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)
    at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212)
    at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataInternal(ZKUtil.java:661)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataAndWatch(ZKUtil.java:637)
    at org.apache.hadoop.hbase.zookeeper.ZKNodeTracker.nodeCreated(ZKNodeTracker.java:199)
    at org.apache.hadoop.hbase.zookeeper.ZKWatcher.process(ZKWatcher.java:460)
    at org.apache.zookeeper.ClientCnxn$EventThread.processEvent(ClientCnxn.java:530)
    at org.apache.zookeeper.ClientCnxn$EventThread.run(ClientCnxn.java:505)

2023-06-23 16:19:56,035 ERROR [main-EventThread] zookeeper.ZKWatcher: regionserver:16020-0x3029dc0d4ec0021, quorum=hadoop102:2181,hadoop103:2181,hadoop104:2181, baseZNode=/hbase Received unexpected KeeperException, re-throwing exception

org.apache.zookeeper.KeeperException$NoAuthException: KeeperErrorCode = NoAuth for /hbase/running
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:113)
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)
    at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212)
    at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataInternal(ZKUtil.java:661)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataAndWatch(ZKUtil.java:637)
    at org.apache.hadoop.hbase.zookeeper.ZKNodeTracker.nodeCreated(ZKNodeTracker.java:199)
    at org.apache.hadoop.hbase.zookeeper.ZKWatcher.process(ZKWatcher.java:460)
    at org.apache.zookeeper.ClientCnxn$EventThread.processEvent(ClientCnxn.java:530)
    at org.apache.zookeeper.ClientCnxn$EventThread.run(ClientCnxn.java:505)

2023-06-23 16:19:56,038 ERROR [main-EventThread] regionserver.HRegionServer: ***** ABORTING region server hadoop102,16020,1687508213386: Unexpected exception handling nodeCreated event *****

org.apache.zookeeper.KeeperException$NoAuthException: KeeperErrorCode = NoAuth for /hbase/running
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:113)
    at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)
    at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1212)
    at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.getData(RecoverableZooKeeper.java:340)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataInternal(ZKUtil.java:661)
    at org.apache.hadoop.hbase.zookeeper.ZKUtil.getDataAndWatch(ZKUtil.java:637)
    at org.apache.hadoop.hbase.zookeeper.ZKNodeTracker.nodeCreated(ZKNodeTracker.java:199)
    at org.apache.hadoop.hbase.zookeeper.ZKWatcher.process(ZKWatcher.java:460)
    at org.apache.zookeeper.ClientCnxn$EventThread.processEvent(ClientCnxn.java:530)
    at org.apache.zookeeper.ClientCnxn$EventThread.run(ClientCnxn.java:505)

2023-06-23 16:19:56,041 ERROR [main-EventThread] regionserver.HRegionServer: RegionServer abort: loaded coprocessors are: []

2023-06-23 16:19:56,060 INFO [main-EventThread] regionserver.HRegionServer:"exceptions.ScannerResetException" : 0,

Root cause analysis:

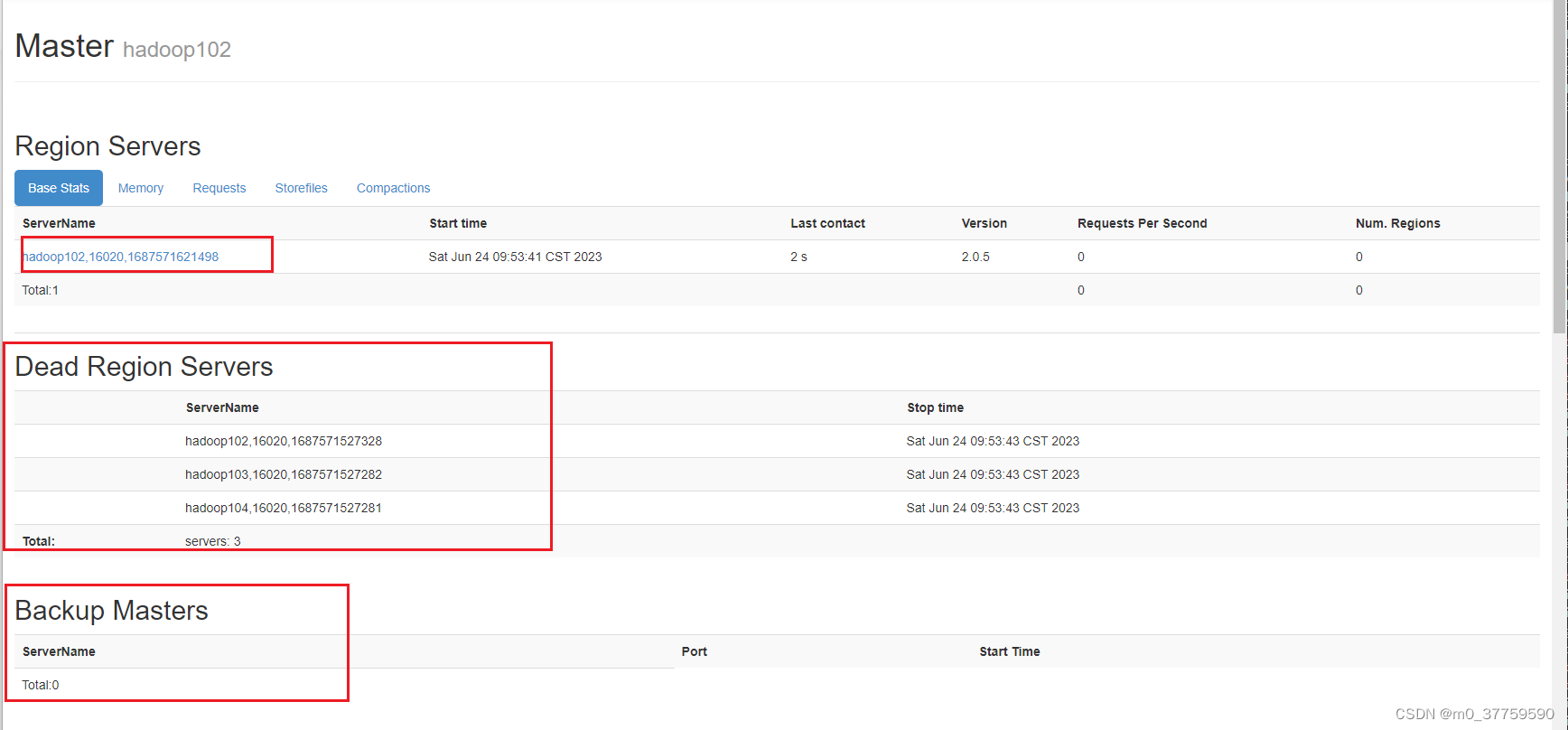

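This problem kept me stuck for the whole of yesterday. I found that the master and regionserver had started only on the hadoop102 node. When I opened the web UI at hadoop102:16010, the regionserver was shown as dead, so everything stayed stuck at this point.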
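Before digging into the configuration, it can help to see exactly which znodes the NoAuth errors refer to. The following is only a small sketch: `noauth_znodes` is a helper name I made up, and it assumes the log has one stack frame per line, as in the trace above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the distinct znodes that NoAuth errors refer to.
# Reads HBase log text on stdin and prints one znode path per line.
noauth_znodes() {
  # Keep only the "KeeperErrorCode = NoAuth for <path>" fragment,
  # strip the fixed prefix, and de-duplicate the remaining paths.
  grep -o 'KeeperErrorCode = NoAuth for /[A-Za-z0-9_/.-]*' \
    | sed 's/^KeeperErrorCode = NoAuth for //' \
    | sort -u
}
```

It would be used as `noauth_znodes < regionserver.log`, where `regionserver.log` stands for whatever regionserver log file is being inspected. For the log in this post it should report only /hbase/running.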

The error log shows that this is a Kerberos authentication and permission problem, so I looked at the current configuration files:

hadoop102 : vim hbase-jaas.conf

Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/etc/security/keytab/hbase.service.keytab"
    useTicketCache=false
    principal="hbase/hadoop102@EXAMPLE.COM";
};

hadoop103 : vim hbase-jaas.conf

Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/etc/security/keytab/hbase.service.keytab"
    useTicketCache=false
    principal="hbase/hadoop103@EXAMPLE.COM";
};

hadoop104 : vim hbase-jaas.conf

Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/etc/security/keytab/hbase.service.keytab"
    useTicketCache=false
    principal="hbase/hadoop104@EXAMPLE.COM";
};
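The three files above differ only in the host part of the principal. As a rough sketch, they could be produced from one template. `render_jaas` is a name I made up for illustration, and the realm and keytab defaults are simply the values shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a JAAS Client section for one host.
# Arguments: host, then optionally realm and keytab path
# (the defaults are the values used in this post).
render_jaas() {
  local host="$1"
  local realm="${2:-EXAMPLE.COM}"
  local keytab="${3:-/etc/security/keytab/hbase.service.keytab}"
  cat <<EOF
Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="${keytab}"
    useTicketCache=false
    principal="hbase/${host}@${realm}";
};
EOF
}
```

For example, `render_jaas hadoop103` prints the file shown for hadoop103. The principal line is the one that matters here, because it decides which identity ZooKeeper sees when HBase on that node connects.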

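I noticed that only the master and regionserver on hadoop102 had started, while those on hadoop103 and hadoop104 had not. So I instinctively changed hbase-jaas.conf on hadoop103 and hadoop104 to be identical to the one on hadoop102 and restarted HBase. After that all services could start, but HBase create and put statements still failed: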

hbase(main):002:0> create 'student','info'
ERROR: org.apache.hadoop.hbase.PleaseHoldException: Master is initializing
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:2946)
    at org.apache.hadoop.hbase.master.HMaster.createTable(HMaster.java:1942)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createTable(MasterRpcServices.java:603)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:413)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:130)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:324)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:304)

Creates a table. Pass a table name, and a set of column family
specifications (at least one), and, optionally, table configuration.
Column specification can be a simple string (name), or a dictionary
(dictionaries are described below in main help output), necessarily
including NAME attribute.

Examples:

Create a table with namespace=ns1 and table qualifier=t1

  hbase> create 'ns1:t1', {NAME => 'f1', VERSIONS => 5}

Create a table with namespace=default and table qualifier=t1

  hbase> create 't1', {NAME => 'f1'}, {NAME => 'f2'}, {NAME => 'f3'}
  hbase> # The above in shorthand would be the following:
  hbase> create 't1', 'f1', 'f2', 'f3'
  hbase> create 't1', {NAME => 'f1', VERSIONS => 1, TTL => 2592000, BLOCKCACHE => true}
  hbase> create 't1', {NAME => 'f1', CONFIGURATION => {'hbase.hstore.blockingStoreFiles' => '10'}}
  hbase> create 't1', {NAME => 'f1', IS_MOB => true, MOB_THRESHOLD => 1000000, MOB_COMPACT_PARTITION_POLICY => 'weekly'}

Table configuration options can be put at the end.

Examples:

  hbase> create 'ns1:t1', 'f1', SPLITS => ['10', '20', '30', '40']
  hbase> create 't1', 'f1', SPLITS => ['10', '20', '30', '40']
  hbase> create 't1', 'f1', SPLITS_FILE => 'splits.txt', OWNER => 'johndoe'
  hbase> create 't1', {NAME => 'f1', VERSIONS => 5}, METADATA => { 'mykey' => 'myvalue' }
  hbase> # Optionally pre-split the table into NUMREGIONS, using
  hbase> # SPLITALGO ("HexStringSplit", "UniformSplit" or classname)
  hbase> create 't1', 'f1', {NUMREGIONS => 15, SPLITALGO => 'HexStringSplit'}
  hbase> create 't1', 'f1', {NUMREGIONS => 15, SPLITALGO => 'HexStringSplit', REGION_REPLICATION => 2, CONFIGURATION => {'hbase.hregion.scan.loadColumnFamiliesOnDem
  hbase> create 't1', {NAME => 'f1', DFS_REPLICATION => 1}

You can also keep around a reference to the created table:

  hbase> t1 = create 't1', 'f1'

Which gives you a reference to the table named 't1', on which you can then
call methods.

Took 8.8778 seconds

hbase(main):003:0> put 'student','1001','info:sex','male'
ERROR: org.apache.hadoop.hbase.NotServingRegionException: hbase:meta,,1 is not online on hadoop102,16020,1687510685378
    at org.apache.hadoop.hbase.regionserver.HRegionServer.getRegionByEncodedName(HRegionServer.java:3272)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.getRegion(HRegionServer.java:3249)
    at org.apache.hadoop.hbase.regionserver.RSRpcServices.getRegion(RSRpcServices.java:1414)
    at org.apache.hadoop.hbase.regionserver.RSRpcServices.get(RSRpcServices.java:2429)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.ClientProtos$ClientService$2.callBlockingMethod(ClientProtos.java:41998)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:413)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:130)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:324)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:304)

Put a cell 'value' at specified table/row/column and optionally
timestamp coordinates. To put a cell value into table 'ns1:t1' or 't1'
at row 'r1' under column 'c1' marked with the time 'ts1', do:

  hbase> put 'ns1:t1', 'r1', 'c1', 'value'
  hbase> put 't1', 'r1', 'c1', 'value'
  hbase> put 't1', 'r1', 'c1', 'value', ts1
  hbase> put 't1', 'r1', 'c1', 'value', {ATTRIBUTES=>{'mykey'=>'myvalue'}}
  hbase> put 't1', 'r1', 'c1', 'value', ts1, {ATTRIBUTES=>{'mykey'=>'myvalue'}}
  hbase> put 't1', 'r1', 'c1', 'value', ts1, {VISIBILITY=>'PRIVATE|SECRET'}

The same commands also can be run on a table reference. Suppose you had a reference
t to table 't1', the corresponding command would be:

  hbase> t.put 'r1', 'c1', 'value', ts1, {ATTRIBUTES=>{'mykey'=>'myvalue'}}

Solution:


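At this point I saw that the regionservers on all nodes had failed to start properly and were all in the dead state. I guessed that the HBase data stored in ZooKeeper was corrupted, so I decided to delete the HBase information from ZooKeeper: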

[zk: hadoop102:2181(CONNECTED) 0] ls

ls [-s] [-w] [-R] path

[zk: hadoop102:2181(CONNECTED) 1] ls /

[dolphinscheduler, hadoop-ha, hbase, rmstore, yarn-leader-election, zookeeper]

[zk: hadoop102:2181(CONNECTED) 2] deleteall /hbase

Authentication is not valid : /hbase/replication

[zk: hadoop102:2181(CONNECTED) 3] getAcl /hbase

'sasl,'hbase/hadoop102@EXAMPLE.COM

: cdrwa
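The getAcl output above says that only the SASL identity hbase/hadoop102@EXAMPLE.COM holds cdrwa on /hbase. That would be consistent with the processes that logged in as hbase/hadoop103 and hbase/hadoop104 getting NoAuth earlier. As a small sketch for checking this, the two made-up helpers below (`parse_acl` and `acl_grants`) parse the two-line getAcl format and test whether a given principal appears in it. They only look at the text, and assume the id must match the principal exactly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read zkCli `getAcl` output on stdin and print
# one "scheme id perms" line per ACL entry.
parse_acl() {
  local line scheme id
  while IFS= read -r line; do
    case "$line" in
      "'"*)                     # entry line, e.g. 'sasl,'hbase/hadoop102@EXAMPLE.COM
        line="${line#\'}"       # drop the leading quote
        scheme="${line%%,*}"    # text before the first comma
        id="${line#*,\'}"       # text after the comma and second quote
        ;;
      ": "*)                    # permission line, e.g. ": cdrwa"
        printf '%s %s %s\n' "$scheme" "$id" "${line#: }"
        ;;
    esac
  done
}

# Hypothetical helper: succeed if the ACL on stdin has an entry whose id
# is exactly the given principal.
acl_grants() {
  parse_acl | awk -v p="$1" '$2 == p { found = 1 } END { exit !found }'
}
```

With the getAcl output saved to a file (call it `acl.txt`), `acl_grants hbase/hadoop103@EXAMPLE.COM < acl.txt` fails while the same check for hbase/hadoop102@EXAMPLE.COM succeeds.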

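The deletion failed and kept reporting `Authentication is not valid : /hbase/replication`. This is because ACLs are enabled in ZooKeeper: the getAcl output shows that /hbase grants cdrwa only to the SASL principal hbase/hadoop102@EXAMPLE.COM. The fix I ended up with was to add one line, skipACL=yes, to the ZooKeeper configuration file zoo.cfg: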

# Kerberos authentication configuration

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

jaasLoginRenew=3600000

sessionRequireClientSASLAuth=true

skipACL=yes

Distribute zoo.cfg to all ZooKeeper nodes, restart ZooKeeper, and then delete the /hbase znode data:

[zk: hadoop102:2181(CONNECTED) 0] ls /

[dolphinscheduler, hadoop-ha, hbase, rmstore, yarn-leader-election, zookeeper]

[zk: hadoop102:2181(CONNECTED) 1] deleteall /hbase

[zk: hadoop102:2181(CONNECTED) 2] ls /

[dolphinscheduler, hadoop-ha, rmstore, yarn-leader-election, zookeeper]

[zk: hadoop102:2181(CONNECTED) 3] quit;

ZooKeeper -server host:port cmd args

Deleted successfully!

At this point the problem was basically solved.

To be on the safe side, I also deleted all HBase files on HDFS:

hadoop fs -rm -r -f /hbase/*

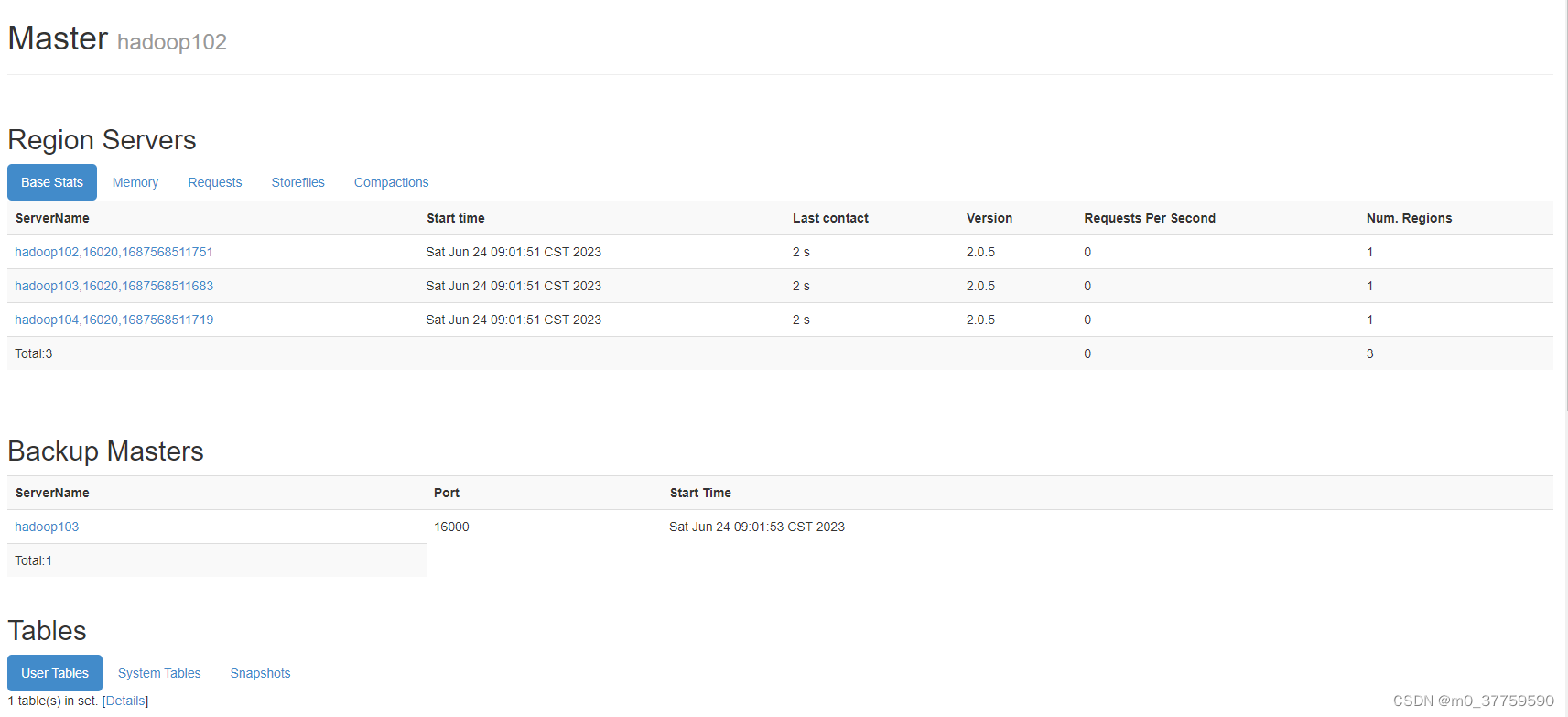

Remove skipACL=yes from zoo.cfg, restart ZooKeeper, restart HBase, and open the web UI at hadoop102:16010.

There are no longer any dead regionservers.
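Since skipACL=yes turns off ACL checking for every client, it should stay in zoo.cfg only for as long as the cleanup takes. Below is a minimal sketch of adding and removing the line without editing the file by hand. The function names are made up, and copying zoo.cfg to the other nodes and restarting ZooKeeper are left out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add skipACL=yes to the given zoo.cfg unless it is
# already present.
enable_skip_acl() {
  local cfg="$1"
  if grep -qx 'skipACL=yes' "$cfg"; then
    return 0
  fi
  # If the file does not end with a newline, add one first so the new
  # setting does not get glued onto the last existing line.
  if [ -n "$(tail -c 1 "$cfg")" ]; then
    printf '\n' >> "$cfg"
  fi
  printf 'skipACL=yes\n' >> "$cfg"
}

# Hypothetical helper: remove every skipACL line from the given zoo.cfg.
disable_skip_acl() {
  local cfg="$1" tmp
  tmp="$(mktemp)"
  grep -v '^skipACL=' "$cfg" > "$tmp" || true   # grep exits 1 when no line is left
  cat "$tmp" > "$cfg"                           # rewrite in place, keeping owner and mode
  rm -f "$tmp"
}
```

Calling `enable_skip_acl` twice on the same file still leaves a single skipACL=yes line, and `disable_skip_acl` leaves the other settings untouched.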

Then run the HBase create and put statements again:

hbase(main):001:0> create 'student','info'

Created table student

Took 2.6728 seconds

=> Hbase::Table - student

hbase(main):002:0> put 'student','1001','info:sex','male'

Took 0.1907 seconds

hbase(main):003:0> put 'student','1001','info:age','18'

Took 0.0055 seconds

hbase(main):004:0> scan 'student'

ROW COLUMN+CELL
1001 column=info:age, timestamp=1687568561569, value=18
1001 column=info:sex, timestamp=1687568556688, value=male

1 row(s)

Took 0.0611 seconds

hbase(main):005:0> scan 'student',{STARTROW => '1001', STOPROW => '1001'}

ROW COLUMN+CELL
1001 column=info:age, timestamp=1687568561569, value=18
1001 column=info:sex, timestamp=1687568556688, value=male

1 row(s)

Took 0.0131 seconds

hbase(main):006:0> describe 'student'

Table student is ENABLED

student

COLUMN FAMILIES DESCRIPTION

{NAME => 'info', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}

1 row(s)

Took 0.0590 seconds

hbase(main):007:0> quit

With that, the bug is resolved.

Summary:

Resolving the bug came down to the following steps:

1. Change hbase-jaas.conf on all nodes so that it matches the one on hadoop102

hadoop102 : vim hbase-jaas.conf

Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/etc/security/keytab/hbase.service.keytab"
    useTicketCache=false
    principal="hbase/hadoop102@EXAMPLE.COM";
};

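2. Delete the /hbase data in ZooKeeper

Add skipACL=yes to zoo.cfg, restart ZooKeeper, then delete /hbase. Afterwards remove skipACL=yes again and restart ZooKeeper.

3. Delete the old HBase data on HDFS

hadoop fs -rm -r -f /hbase/*

4. Restart HBase and run the create-table and put statements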

Reference: https://zhuanlan.zhihu.com/p/396007109