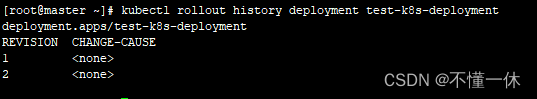

Deploying Pods to Specific Nodes in k8s

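In some business scenarios, certain pods need to be deployed to specific nodes. Under the default scheduling rules, pods are preferentially assigned to nodes with lower load, so multiple pods can still end up competing for resources on the same node.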

k8s offers two common ways to assign a given pod to a given node.

- nodeName

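In the deployment yaml file, set the pod spec's nodeName field (xxx.spec.nodeName; in a Deployment this is spec.template.spec.nodeName) to a node name, and the pod will be deployed only on that node: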

    $ vim websvr1.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: websvr1-deployment
    spec:
      selector:
        matchLabels:
          app: websvr1
      replicas: 3
      template:
        metadata:
          labels:
            app: websvr1
        spec:
          nodeName: k8s-node1 # specify the node name
          containers:
          - name: websvr1
            image: websvr:v1
            ports:
            - containerPort: 3000
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: websvr1-service
    spec:
      selector:
        app: websvr1
      ports:
      - protocol: TCP
        port: 3000
        targetPort: 3000

    $ kubectl apply -f websvr1.yaml
    $ kubectl get pods -o wide
    NAME                                  READY   STATUS    RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
    websvr1-deployment-54cffcc8b4-jw9fl   1/1     Running   0          15s   10.244.36.90   k8s-node1   <none>           <none>
    websvr1-deployment-54cffcc8b4-s97ln   1/1     Running   0          15s   10.244.36.92   k8s-node1   <none>           <none>
    websvr1-deployment-54cffcc8b4-wglpb   1/1     Running   0          15s   10.244.36.91   k8s-node1   <none>           <none>

As shown, websvr1 is deployed only on k8s-node1. Now suppose there are three nodes and a pod needs to be deployed across two of them: do we have to specify two node names? nodeName takes only a single node name, so this scenario is better handled by the second method.
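For orientation, nodeName is a field of the pod spec itself, so in a bare Pod manifest it sits directly under spec rather than under spec.template.spec as in the Deployment above. The following is only a minimal sketch: the pod name websvr1-pinned is a made-up example, while the image and node name are reused from the manifest above. A pod with nodeName set is ignored by the scheduler and handed straight to the kubelet on that node, so the usual scheduling checks do not apply to it.

```yaml
# Minimal sketch: a bare Pod pinned to one node via spec.nodeName.
# "websvr1-pinned" is a hypothetical name used only for illustration.
apiVersion: v1
kind: Pod
metadata:
  name: websvr1-pinned
spec:
  nodeName: k8s-node1   # the kubelet on this node runs the pod; the scheduler is not involved
  containers:
  - name: websvr1
    image: websvr:v1
    ports:
    - containerPort: 3000
```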

- nodeSelector

Unlike nodeName, nodeSelector assigns pods to a category of nodes rather than to a single named node. In k8s such a category is expressed with a label.
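Labels are plain key/value pairs stored under metadata.labels of the Node object. As a rough illustration, the relevant part of a node definition might look like the fragment below once the type=websvr label used in this walkthrough has been applied. The kubernetes.io/* entries are well-known labels that the kubelet normally sets on its own, and the exact set on a real node will differ.

```yaml
# Abbreviated, illustrative view of a Node object (not captured from a real cluster).
apiVersion: v1
kind: Node
metadata:
  name: k8s-node1
  labels:
    kubernetes.io/hostname: k8s-node1   # well-known label, normally set by the kubelet
    kubernetes.io/os: linux             # well-known label, normally set by the kubelet
    type: websvr                        # custom label that nodeSelector can match on
```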

    # List the current nodes
    $ kubectl get node -o wide
    NAME                STATUS   ROLES                  AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE         KERNEL-VERSION                 CONTAINER-RUNTIME
    k8s-elasticsearch   Ready    <none>                 16h   v1.21.0   172.16.66.167   <none>        CentOS Linux 8   4.18.0-305.19.1.el8_4.x86_64   docker://20.10.9
    k8s-master          Ready    control-plane,master   43h   v1.21.0   172.16.66.169   <none>        CentOS Linux 8   4.18.0-305.19.1.el8_4.x86_64   docker://20.10.9
    k8s-node1           Ready    <none>                 43h   v1.21.0   172.16.66.168   <none>        CentOS Linux 8   4.18.0-305.19.1.el8_4.x86_64   docker://20.10.9
    k8s-node2           Ready    <none>                 43h   v1.21.0   172.16.66.170   <none>        CentOS Linux 8   4.18.0-305.19.1.el8_4.x86_64   docker://20.10.9

    # Label k8s-node1 and k8s-node2 with type=websvr
    $ kubectl label nodes k8s-node1 k8s-node2 type=websvr

    # List the nodes labeled type=websvr
    $ kubectl get node -l type=websvr
    NAME        STATUS   ROLES    AGE   VERSION
    k8s-node1   Ready    <none>   43h   v1.21.0
    k8s-node2   Ready    <none>   43h   v1.21.0

    # Other label operations, for reference:

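    # Change a label
    $ kubectl label nodes k8s-node1 k8s-node2 type=webtest --overwrite

    # Show node labels
    $ kubectl get nodes k8s-node1 k8s-node2 --show-labels

    # Remove a label
    $ kubectl label nodes k8s-node1 k8s-node2 type-

Note that the change and remove commands alter the type=websvr label that the deployment relies on, so skip them (or re-apply the label afterwards) when following along.

Modify the deployment yaml: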

    $ vim websvr1.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: websvr1-deployment
    spec:
      selector:
        matchLabels:
          app: websvr1
      replicas: 3
      template:
        metadata:
          labels:
            app: websvr1
        spec:
          nodeSelector: # deploy to nodes labeled type=websvr
            type: websvr
          containers:
          - name: websvr1
            image: websvr:v1
            ports:
            - containerPort: 3000
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: websvr1-service
    spec:
      selector:
        app: websvr1
      ports:
      - protocol: TCP
        port: 3000
        targetPort: 3000

Modify websvr2.yaml in the same way:

    $ vim websvr2.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: websvr2-deployment
    spec:
      selector:
        matchLabels:
          app: websvr2
      replicas: 3
      template:
        metadata:
          labels:
            app: websvr2
        spec:
          nodeSelector: # deploy to nodes labeled type=websvr
            type: websvr
          containers:
          - name: websvr2
            image: websvr:v2
            ports:
            - containerPort: 3001
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: websvr2-service
    spec:
      selector:
        app: websvr2
      ports:
      - protocol: TCP
        port: 3001
        targetPort: 3001

    $ kubectl apply -f websvr1.yaml
    $ kubectl apply -f websvr2.yaml
    $ kubectl get pods -o wide
    NAME                                  READY   STATUS    RESTARTS   AGE     IP               NODE        NOMINATED NODE   READINESS GATES
    websvr1-deployment-67fd6cf9d4-cfstc   1/1     Running   0          2m22s   10.244.36.93     k8s-node1   <none>           <none>
    websvr1-deployment-67fd6cf9d4-lx6tr   1/1     Running   0          2m22s   10.244.169.151   k8s-node2   <none>           <none>
    websvr1-deployment-67fd6cf9d4-zxznp   1/1     Running   0          2m22s   10.244.169.152   k8s-node2   <none>           <none>
    websvr2-deployment-67dfc4f674-dz44b   1/1     Running   0          2m10s   10.244.36.95     k8s-node1   <none>           <none>
    websvr2-deployment-67dfc4f674-wjg5x   1/1     Running   0          2m10s   10.244.169.153   k8s-node2   <none>           <none>
    websvr2-deployment-67dfc4f674-xdk9m   1/1     Running   0          2m10s   10.244.36.94     k8s-node1   <none>           <none>

As shown, both websvr deployments are now running only on the designated nodes, k8s-node1 and k8s-node2.
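nodeSelector is a map, so it can list more than one label, and a node then has to carry every listed label to be eligible. The fragment below is a sketch of the pod template portion only; disk=ssd is a hypothetical second label that does not appear anywhere else in this walkthrough. If no node matches all entries, the pods stay Pending until one does.

```yaml
# Pod template fragment (sketch): a node must carry BOTH labels to be selected.
spec:
  template:
    spec:
      nodeSelector:
        type: websvr
        disk: ssd   # hypothetical extra label, e.g. set with: kubectl label nodes <node> disk=ssd
      containers:
      - name: websvr1
        image: websvr:v1
```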

If anything here is incorrect, corrections are welcome.