Version information

Android Studio 3.5.2

OpenCV 4.1.2

OpenGL ES 2.0

What is OpenCV

Wikipedia

In this demo, OpenCV provides the face detection.

What is OpenGL

Wikipedia

In this demo, OpenGL provides the beauty filter.

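Configuring the OpenCV environment

Create a new C++ project in Android Studio.

Download the Android version of OpenCV.

Download the Windows version of OpenCV.

After extracting the Android package, copy the contents of F:\OpenCV-android-sdk\sdk\native\libs into the project. This directory contains the .so files.

After extracting the Windows package, copy the include directory under F:\opencv\opencv\build into the project. This directory contains the header files.

From the extracted Windows package, copy lbpcascade_frontalface.xml from F:\opencv\opencv\sources\samples\android\face-detection\res\raw into assets. This file is the model data for face detection.

After copying, the project looks roughly like this. I copied only the armeabi-v7a libraries and skipped the other ABIs.

Edit CMakeLists.txt so that native-lib.so links against the OpenCV library.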

    cmake_minimum_required(VERSION 3.4.1)

    add_library(
            native-lib
            SHARED
            native-lib.cpp
            FaceTracker.cpp)

    include_directories(include)
    add_subdirectory(facealigenment)

    # The location of jniLibs must be declared in the app's build.gradle:
    # sourceSets {
    #     main {
    #         // JNI libraries are looked up as .so files under the libs folder
    #         jniLibs.srcDirs = ['libs']
    #     }
    # }
    set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -L${CMAKE_SOURCE_DIR}/../../../libs/${ANDROID_ABI}")

    find_library(log-lib log)

    target_link_libraries(
            native-lib
            opencv_java4
            android
            seeta_fa_lib
            ${log-lib})

If the project rebuilds successfully, OpenCV is configured correctly.

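Configuring OpenGL. Android supports OpenGL ES out of the box, so the only requirement is to declare the version in the manifest.

Add the following to AndroidManifest.xml:

    <uses-feature android:glEsVersion="0x00020000" android:required="true" />

Rendering the camera data with OpenGL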

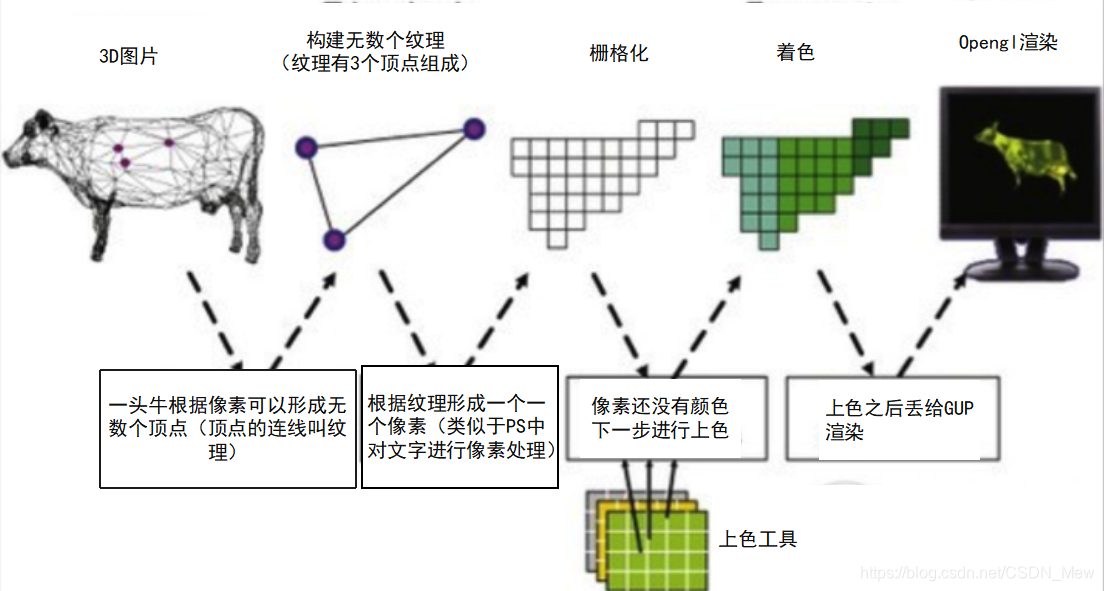

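The OpenGL rendering pipeline

The vertex shader positions the vertices of the shape that the image (texture) is mapped onto. The shape is then rasterized into fragments, the fragment shader assigns a color to each fragment, and the result is written to the framebuffer for display.

The pipeline itself runs entirely on the GPU, so the CPU has to exchange data with the GPU: vertex data, textures and uniforms are all uploaded from the CPU side.

GLSL

OpenGL shading language: GLSL basics

Create MyGLSurfaceView, which extends GLSurfaceView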

    import android.content.Context;
    import android.opengl.GLSurfaceView;
    import android.util.AttributeSet;

    /**
     * Author: ZGQ
     * Created: 2019/11/19 11:26
     */
    public class MyGLSurfaceView extends GLSurfaceView {

        private MyRenderer renderer;

        public MyGLSurfaceView(Context context) {
            // Delegate so that a view created from code also gets a renderer.
            this(context, null);
        }

        public MyGLSurfaceView(Context context, AttributeSet attrs) {
            super(context, attrs);
            setEGLContextClientVersion(2);
            setRenderer(renderer = new MyRenderer(this));
            // Render on demand: each call to requestRender() asks the GLThread to
            // call onDrawFrame once. (Continuous mode would call onDrawFrame repeatedly.)
            setRenderMode(RENDERMODE_WHEN_DIRTY);
        }

        @Override
        protected void onDetachedFromWindow() {
            super.onDetachedFromWindow();
            if (renderer != null) {
                renderer.release();
            }
        }

        public void swichCamera() {
            if (renderer != null) {
                renderer.swichCamera();
            }
        }
    }

Create MyRenderer, which implements GLSurfaceView.Renderer

    import android.graphics.SurfaceTexture;
    import android.hardware.Camera;
    import android.opengl.GLES20;
    import android.opengl.GLSurfaceView;

    import com.bigeye.demo.face.OpenCv;
    import com.bigeye.demo.filter.BeautyFilter;
    import com.bigeye.demo.filter.BigEyesFilter;
    import com.bigeye.demo.filter.CameraFilter;
    import com.bigeye.demo.filter.ScreenFilter;
    import com.bigeye.demo.filter.StickerFilter;
    import com.bigeye.demo.util.CameraHelper;
    import com.bigeye.demo.util.Utils;

    import java.io.File;

    import javax.microedition.khronos.egl.EGLConfig;
    import javax.microedition.khronos.opengles.GL10;

    /**
     * Author: ZGQ
     * Created: 2019/11/19 11:29
     */
    public class MyRenderer implements GLSurfaceView.Renderer,
            SurfaceTexture.OnFrameAvailableListener, Camera.PreviewCallback {

        private MyGLSurfaceView myGLSurfaceView;
        private CameraHelper mCameraHelper;
        private SurfaceTexture mSurfaceTexture;
        private int[] mTextures;
        private float[] mtx = new float[16];
        private CameraFilter mCameraFilter;
        private ScreenFilter mScreenFilter;
        private BigEyesFilter mBigEyesFilter;
        private StickerFilter mStickerFilter;
        private BeautyFilter beautyFilter;
        private File faceCascade;
        private File eyeCascade;
        private OpenCv openCv;

        public void swichCamera() {
            if (mCameraHelper != null) {
                mCameraHelper.switchCamera();
            }
        }

        public MyRenderer(MyGLSurfaceView glSurfaceView) {
            myGLSurfaceView = glSurfaceView;
        }

        @Override
        public void onSurfaceCreated(GL10 gl, EGLConfig config) {
            // All GLES calls must be made on the GL thread, otherwise they fail.
            mCameraFilter = new CameraFilter(myGLSurfaceView.getContext());
            mScreenFilter = new ScreenFilter(myGLSurfaceView.getContext());
            mBigEyesFilter = new BigEyesFilter(myGLSurfaceView.getContext());
            mStickerFilter = new StickerFilter(myGLSurfaceView.getContext());
            beautyFilter = new BeautyFilter(myGLSurfaceView.getContext());

            mTextures = new int[1];
            GLES20.glGenTextures(mTextures.length, mTextures, 0);
            mSurfaceTexture = new SurfaceTexture(mTextures[0]);
            mSurfaceTexture.setOnFrameAvailableListener(this);
            mSurfaceTexture.getTransformMatrix(mtx);
            mCameraFilter.setMatrix(mtx);

            faceCascade = Utils.copyAssets(myGLSurfaceView.getContext(), "lbpcascade_frontalface.xml");
            eyeCascade = Utils.copyAssets(myGLSurfaceView.getContext(), "seeta_fa_v1.1.bin");
            openCv = new OpenCv(faceCascade.getAbsolutePath(), eyeCascade.getAbsolutePath());
        }

        @Override
        public void onSurfaceChanged(GL10 gl, int width, int height) {
            mCameraHelper = new CameraHelper(Camera.CameraInfo.CAMERA_FACING_BACK, width, height);
            mCameraHelper.startPreview(mSurfaceTexture);
            mCameraHelper.setPreviewCallback(this);
            mCameraFilter.onReady(width, height);
            mScreenFilter.onReady(width, height);
            mBigEyesFilter.onReady(width, height);
            mStickerFilter.onReady(width, height);
            beautyFilter.onReady(width, height);
            openCv.startTrack();
            openCv.setCameraHelper(mCameraHelper);
        }

        @Override
        public void onDrawFrame(GL10 gl) {
            GLES20.glClearColor(0, 0, 0, 0);
            // Clear the color buffer
            GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT);
            mSurfaceTexture.updateTexImage();
            mSurfaceTexture.getTransformMatrix(mtx);
            mCameraFilter.setMatrix(mtx);
            // Chain of responsibility: each filter consumes the previous filter's texture
            int textureId = mCameraFilter.onDrawFrame(mTextures[0]);
            // if (openCv != null) {
            //     mBigEyesFilter.setFace(openCv.getFace());
            //     mStickerFilter.setFace(openCv.getFace());
            // }
            // textureId = mBigEyesFilter.onDrawFrame(textureId);
            // textureId = mStickerFilter.onDrawFrame(textureId);
            textureId = beautyFilter.onDrawFrame(textureId);
            mScreenFilter.onDrawFrame(textureId);
        }

        @Override
        public void onFrameAvailable(SurfaceTexture surfaceTexture) {
            // The camera delivered a frame: request a GL draw
            myGLSurfaceView.requestRender();
        }

        public void release() {
            if (mCameraHelper != null) {
                mCameraHelper.release();
            }
        }

        @Override
        public void onPreviewFrame(byte[] data, Camera camera) {
            if (openCv != null) {
                // openCv.dector(data);
            }
        }
    }
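The "chain of responsibility" in onDrawFrame comes down to this: each filter receives a texture ID and returns the ID of the texture the next filter should read. The following is a minimal plain-Java sketch of that hand-off with no OpenGL calls. The `TextureStage` interface and the fake texture IDs are hypothetical stand-ins for illustration, not classes from this demo.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java sketch of the filter chain; no OpenGL involved. */
final class FilterChainSketch {

    /** Hypothetical stand-in for a filter's onDrawFrame(int textureId). */
    interface TextureStage {
        int onDrawFrame(int textureId);
    }

    /** Feeds the texture ID returned by each stage into the next stage. */
    static int run(List<TextureStage> stages, int inputTextureId) {
        int textureId = inputTextureId;
        for (TextureStage stage : stages) {
            textureId = stage.onDrawFrame(textureId);
        }
        return textureId;
    }

    public static void main(String[] args) {
        List<TextureStage> stages = new ArrayList<>();
        stages.add(id -> 10); // like CameraFilter: draws into its FBO, returns the FBO texture (fake ID 10)
        stages.add(id -> 20); // like BeautyFilter: draws into its own FBO (fake ID 20)
        stages.add(id -> id); // like ScreenFilter: draws to the screen, returns its input unchanged
        System.out.println(run(stages, 1));
    }
}
```

Under this reading, enabling or disabling a filter only means adding or removing one stage, which is what the commented-out BigEyesFilter and StickerFilter lines in onDrawFrame do.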

AbstractFilter, the base class of all filters

    import android.content.Context;
    import android.opengl.GLES20;

    import com.bigeye.demo.util.OpenGLUtils;

    import java.nio.ByteBuffer;
    import java.nio.ByteOrder;
    import java.nio.FloatBuffer;

    /**
     * Author: ZGQ
     * Created: 2019/11/19 11:57
     */
    public abstract class AbstractFilter {

        // Resource ID of the vertex shader
        protected int mVertexShaderId;
        // Resource ID of the fragment shader
        protected int mFragmentShaderId;
        // Texture coordinates passed to the GPU
        protected FloatBuffer mTextureBuffer;
        // Vertex coordinates passed to the GPU
        protected FloatBuffer mVertexBuffer;
        protected int mProgram;
        // Location of the sampler uniform
        protected int vTexture;
        // Location of the matrix uniform, used for rotating and mirroring the image
        protected int vMatrix;
        protected int vCoord;
        protected int vPosition;
        protected int mWidth;
        protected int mHeight;

        public AbstractFilter(Context context, int vertexShaderId, int fragmentShaderId) {
            this.mVertexShaderId = vertexShaderId;
            this.mFragmentShaderId = fragmentShaderId;

            // The camera image is 2D: 4 vertices * 2 floats * 4 bytes per float
            mVertexBuffer = ByteBuffer.allocateDirect(4 * 2 * 4)
                    .order(ByteOrder.nativeOrder())
                    .asFloatBuffer();
            mVertexBuffer.clear();
            float[] VERTEX = {
                    -1.0f, -1.0f,
                    1.0f, -1.0f,
                    -1.0f, 1.0f,
                    1.0f, 1.0f
            };
            mVertexBuffer.put(VERTEX);

            mTextureBuffer = ByteBuffer.allocateDirect(4 * 2 * 4)
                    .order(ByteOrder.nativeOrder())
                    .asFloatBuffer();
            mTextureBuffer.clear();
            float[] TEXTURE = {
                    0.0f, 1.0f,
                    1.0f, 1.0f,
                    0.0f, 0.0f,
                    1.0f, 0.0f
            };
            mTextureBuffer.put(TEXTURE);

            init(context);
            initCoordinate();
        }

        public void onReady(int width, int height) {
            this.mWidth = width;
            this.mHeight = height;
        }

        private void init(Context context) {
            String vertexShader = OpenGLUtils.readRawTextFile(context, mVertexShaderId);
            String fragmentShader = OpenGLUtils.readRawTextFile(context, mFragmentShaderId);
            mProgram = OpenGLUtils.loadProgram(vertexShader, fragmentShader);
            vPosition = GLES20.glGetAttribLocation(mProgram, "vPosition");
            vCoord = GLES20.glGetAttribLocation(mProgram, "vCoord");
            vMatrix = GLES20.glGetUniformLocation(mProgram, "vMatrix");
            vTexture = GLES20.glGetUniformLocation(mProgram, "vTexture");
        }

        protected abstract void initCoordinate();

        public int onDrawFrame(int textureId) {
            // Set the viewport
            GLES20.glViewport(0, 0, mWidth, mHeight);
            // Use the shader program
            GLES20.glUseProgram(mProgram);

            mVertexBuffer.position(0);
            GLES20.glVertexAttribPointer(vPosition, 2, GLES20.GL_FLOAT, false, 0, mVertexBuffer);
            GLES20.glEnableVertexAttribArray(vPosition);

            mTextureBuffer.position(0);
            GLES20.glVertexAttribPointer(vCoord, 2, GLES20.GL_FLOAT, false, 0, mTextureBuffer);
            GLES20.glEnableVertexAttribArray(vCoord);

            GLES20.glActiveTexture(GLES20.GL_TEXTURE0);
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureId);
            GLES20.glUniform1i(vTexture, 0);
            GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, 0);
            return textureId;
        }
    }
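The expression `4 * 2 * 4` in the constructor is 4 vertices × 2 floats per vertex × 4 bytes per float. The sketch below uses only `java.nio` to show how such a buffer is filled, and why `position(0)` is called before the buffer is handed to `glVertexAttribPointer`: `put` leaves the position at the end of the data. The class and method names are illustrative and not part of the demo.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

/** Sketch of the direct-buffer setup used for vertex and texture coordinates. */
final class QuadBufferSketch {

    static final int BYTES_PER_FLOAT = 4;

    /** Copies coords into a native-order direct buffer and rewinds it for reading. */
    static FloatBuffer toDirectBuffer(float[] coords) {
        FloatBuffer buffer = ByteBuffer
                .allocateDirect(coords.length * BYTES_PER_FLOAT)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        buffer.put(coords);  // advances the position to the end of the data
        buffer.position(0);  // rewind so a consumer reads from the first float
        return buffer;
    }

    public static void main(String[] args) {
        // Same full-screen quad as VERTEX in AbstractFilter (triangle strip order).
        float[] vertex = {-1.0f, -1.0f, 1.0f, -1.0f, -1.0f, 1.0f, 1.0f, 1.0f};
        FloatBuffer buffer = toDirectBuffer(vertex);
        System.out.println(buffer.capacity() + " floats, position " + buffer.position());
    }
}
```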

CameraFilter, which draws the camera data into an FBO

    import android.content.Context;
    import android.opengl.GLES11Ext;
    import android.opengl.GLES20;

    import com.bigeye.demo.R;
    import com.bigeye.demo.util.OpenGLUtils;

    /**
     * Author: ZGQ
     * Created: 2019/11/19 14:42
     * Notes: differs from the parent class in that
     * 1. the coordinates are transformed: rotated by 90 degrees and mirrored
     */
    public class CameraFilter extends AbstractFilter {

        // FBO handle
        protected int[] mFrameBuffer;
        protected int[] mFrameBufferTextures;
        private float[] mMatrix;

        public CameraFilter(Context context) {
            super(context, R.raw.camera_vertex, R.raw.camera_frag);
        }

        @Override
        protected void initCoordinate() {
            mTextureBuffer.clear();
            // The raw camera image is rotated 90 degrees counter-clockwise and mirrored
            // float[] TEXTURE = {
            //         0.0f, 0.0f,
            //         1.0f, 0.0f,
            //         0.0f, 1.0f,
            //         1.0f, 1.0f
            // };
            // Corrected coordinates
            float[] TEXTURE = {
                    0.0f, 0.0f,
                    0.0f, 1.0f,
                    1.0f, 0.0f,
                    1.0f, 1.0f
            };
            mTextureBuffer.put(TEXTURE);
        }

        @Override
        public void onReady(int width, int height) {
            super.onReady(width, height);
            mFrameBuffer = new int[1];
            // Generate the FBO
            GLES20.glGenFramebuffers(mFrameBuffer.length, mFrameBuffer, 0);
            // Create a texture to attach to the FBO, so that we work with the
            // texture instead of the FBO directly
            mFrameBufferTextures = new int[1];
            OpenGLUtils.glGenTextures(mFrameBufferTextures);
            // Bind the texture and the FBO (global GL state)
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, mFrameBufferTextures[0]);
            GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, mFrameBuffer[0]);
            // Allocate the texture storage
            GLES20.glTexImage2D(GLES20.GL_TEXTURE_2D, 0, GLES20.GL_RGBA, mWidth, mHeight, 0,
                    GLES20.GL_RGBA, GLES20.GL_UNSIGNED_BYTE, null);
            // Attach the texture to the FBO
            GLES20.glFramebufferTexture2D(GLES20.GL_FRAMEBUFFER, GLES20.GL_COLOR_ATTACHMENT0,
                    GLES20.GL_TEXTURE_2D, mFrameBufferTextures[0], 0);
            // Done: unbind so that GL_TEXTURE_2D and the framebuffer are free for others
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, 0);
            GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, 0);
        }

        /**
         * Differences from the parent implementation are marked below.
         *
         * @param textureId the camera texture
         * @return the texture attached to the FBO
         */
        @Override
        public int onDrawFrame(int textureId) {
            // Set the viewport
            GLES20.glViewport(0, 0, mWidth, mHeight);
            // Difference 1
            // Without this call we would draw into the GLSurfaceView's default
            // framebuffer, i.e. onto the screen.
            // Here we only draw into the FBO (as a cache).
            GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, mFrameBuffer[0]);
            // Use the shader program
            GLES20.glUseProgram(mProgram);

            mVertexBuffer.position(0);
            GLES20.glVertexAttribPointer(vPosition, 2, GLES20.GL_FLOAT, false, 0, mVertexBuffer);
            GLES20.glEnableVertexAttribArray(vPosition);

            mTextureBuffer.position(0);
            GLES20.glVertexAttribPointer(vCoord, 2, GLES20.GL_FLOAT, false, 0, mTextureBuffer);
            GLES20.glEnableVertexAttribArray(vCoord);

            // Difference 2
            // Transform matrix
            GLES20.glUniformMatrix4fv(vMatrix, 1, false, mMatrix, 0);

            // Difference 3
            // The data comes from the camera, so the texture target must be
            // GLES11Ext.GL_TEXTURE_EXTERNAL_OES rather than GL_TEXTURE_2D.
            // Activate the texture unit
            GLES20.glActiveTexture(GLES20.GL_TEXTURE0);
            GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, textureId);
            GLES20.glUniform1i(vTexture, 0);
            // Draw
            GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);
            // Unbind
            GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, 0);
            GLES20.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, 0);
            return mFrameBufferTextures[0];
        }

        public void setMatrix(float[] mtx) {
            this.mMatrix = mtx;
        }
    }
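Comparing the two TEXTURE arrays in initCoordinate shows that the corrected array is the commented-out one with x and y swapped at every vertex. Swapping the axes is a transpose, which is the same as a 90° rotation combined with a mirror. A small sketch that reproduces the corrected array from the original one follows. The helper name `swapAxes` is made up for illustration.

```java
import java.util.Arrays;

/** Sketch: derive the corrected texture coordinates by swapping x and y. */
final class TexCoordSketch {

    /** Returns a copy of the (x, y) pairs with the two components swapped. */
    static float[] swapAxes(float[] coords) {
        float[] out = new float[coords.length];
        for (int i = 0; i + 1 < coords.length; i += 2) {
            out[i] = coords[i + 1];
            out[i + 1] = coords[i];
        }
        return out;
    }

    public static void main(String[] args) {
        // The commented-out coordinates from CameraFilter.initCoordinate().
        float[] original = {0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 1.0f, 1.0f, 1.0f};
        System.out.println(Arrays.toString(swapAxes(original)));
    }
}
```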

ScreenFilter, which renders the result to the screen (the surface of the GLSurfaceView)

    import android.content.Context;

    import com.bigeye.demo.R;

    /**
     * Author: ZGQ
     * Created: 2019/11/19 14:42
     */
    public class ScreenFilter extends AbstractFilter {

        public ScreenFilter(Context context) {
            super(context, R.raw.base_vertex, R.raw.base_frag);
        }

        @Override
        protected void initCoordinate() {
        }
    }

Face detection

Initializing OpenCV

    extern "C"
    JNIEXPORT jlong JNICALL
    Java_com_bigeye_demo_face_OpenCv_init_1face_1track(JNIEnv *env, jobject thiz,
                                                       jstring face_cascade_,
                                                       jstring eye_cascade_) {
        // Absolute paths of the model files, copied from assets to local storage
        const char *face_cascade = env->GetStringUTFChars(face_cascade_, 0);
        const char *eye_cascade = env->GetStringUTFChars(eye_cascade_, 0);
        FaceTracker *faceTracker = new FaceTracker(face_cascade, eye_cascade);
        env->ReleaseStringUTFChars(face_cascade_, face_cascade);
        env->ReleaseStringUTFChars(eye_cascade_, eye_cascade);
        return reinterpret_cast<jlong>(faceTracker);
    }

The frames captured by the camera are passed to OpenCV for detection

    extern "C"
    JNIEXPORT jobject JNICALL
    Java_com_bigeye_demo_face_OpenCv_native_1detector(JNIEnv *env, jobject thiz,
                                                      jlong native_facetrack,
                                                      jbyteArray data_, jint camera_id,
                                                      jint width, jint height) {
        if (native_facetrack == 0) {
            return nullptr;
        }
        FaceTracker *faceTracker = reinterpret_cast<FaceTracker *>(native_facetrack);
        jbyte *data = env->GetByteArrayElements(data_, NULL);
        // src is NV21 at this point
        Mat src(height + height / 2, width, CV_8UC1, data);
        // Convert src from NV21 to RGBA
        cvtColor(src, src, COLOR_YUV2RGBA_NV21);
        if (camera_id == 1) {
            // Front camera: rotate 90 degrees counter-clockwise
            rotate(src, src, ROTATE_90_COUNTERCLOCKWISE);
            // 1 = horizontal flip, 0 = vertical flip
            flip(src, src, 1);
        } else {
            // Back camera: rotate 90 degrees clockwise
            rotate(src, src, ROTATE_90_CLOCKWISE);
        }
        Mat gray;
        cvtColor(src, gray, COLOR_RGBA2GRAY);
        equalizeHist(gray, gray);
        // Detect the face; returns the face rectangle followed by 5 facial landmarks
        std::vector<cv::Rect2f> rects = faceTracker->dector(gray);
        env->ReleaseByteArrayElements(data_, data, 0);

        int imgWidth = src.cols;
        int imgHeight = src.rows;
        int ret = rects.size();
        if (ret) {
            jclass clazz = env->FindClass("com/bigeye/demo/face/Face");
            jmethodID construct = env->GetMethodID(clazz, "<init>", "(IIII[F)V");
            int size = ret * 2;
            jfloatArray jfloatArray1 = env->NewFloatArray(size);
            // Store each point as an (x, y) pair
            for (int j = 0; j < ret; j++) {
                float f[2] = {rects[j].x, rects[j].y};
                // The values are floats, so log them with %f
                LOGE("rects[j].x = %f , rects[j].y = %f , x = %f , y = %f", rects[j].x,
                     rects[j].y, rects[j].x / imgWidth, rects[j].y / imgHeight);
                env->SetFloatArrayRegion(jfloatArray1, j * 2, 2, f);
            }
            // From here on, width and height hold the size of the face rectangle
            Rect2f faceRect = rects[0];
            width = faceRect.width;
            height = faceRect.height;
            jobject face = env->NewObject(clazz, construct, width, height, imgWidth, imgHeight,
                                          jfloatArray1);
            return face;
        }
        return nullptr;
    }
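The `Mat src(height + height / 2, width, CV_8UC1, data)` line follows from the NV21 layout: a full-resolution Y plane of width × height bytes, followed by an interleaved VU plane subsampled by two in both directions, which adds another width × height / 2 bytes. Viewed as a single-channel image that is `height + height / 2` rows of `width` bytes. The sketch below just does this arithmetic, assuming even dimensions. The helper names are hypothetical.

```java
/** Sketch of the NV21 buffer layout arithmetic (assumes even width and height). */
final class Nv21LayoutSketch {

    /** Bytes in the Y (luma) plane: one byte per pixel. */
    static int lumaSize(int width, int height) {
        return width * height;
    }

    /** Bytes in the interleaved VU plane: one V and one U byte per 2x2 pixel block. */
    static int chromaSize(int width, int height) {
        return width * height / 2;
    }

    /** Total bytes in one NV21 frame. */
    static int frameSize(int width, int height) {
        return lumaSize(width, height) + chromaSize(width, height);
    }

    /** Row count when the whole frame is viewed as a one-channel matrix of the given width. */
    static int matRows(int height) {
        return height + height / 2;
    }

    public static void main(String[] args) {
        int width = 640;
        int height = 480;
        System.out.println(frameSize(width, height) + " bytes, "
                + matRows(height) + " rows of " + width + " bytes");
    }
}
```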

    std::vector<cv::Rect2f> FaceTracker::dector(Mat src) {
        std::vector<cv::Rect> faces;
        std::vector<cv::Rect2f> rects;
        // Run face detection
        tracker->process(src);
        // Fetch the detection results
        tracker->getObjects(faces);
        seeta::FacialLandmark points[5];
        if (faces.size() > 0) {
            cv::Rect face = faces[0];
            rects.push_back(cv::Rect2f(face.x, face.y, face.width, face.height));

            ImageData image_data(src.cols, src.rows);
            image_data.data = src.data;

            seeta::Rect bbox;
            bbox.x = face.x;
            bbox.y = face.y;
            bbox.width = face.width;
            bbox.height = face.height;

            seeta::FaceInfo faceInfo;
            faceInfo.bbox = bbox;
            faceAlignment->PointDetectLandmarks(image_data, faceInfo, points);
            // 5 landmarks: the two eye centers, the nose, and the two mouth corners
            for (int i = 0; i < 5; ++i) {
                rects.push_back(cv::Rect2f(points[i].x, points[i].y, 0, 0));
            }
        }
        return rects;
    }
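On the Java side, the native detector builds a `Face` through the constructor signature `(IIII[F)V`: the face width and height, the image width and height, and a float array of (x, y) pairs. Given how the array is filled, the first pair is the top-left corner of the face rectangle and the next five pairs are the landmarks. The real `Face` class is not shown in this article, so the class below is only a guess at a compatible shape. Every field and method name in it is an assumption.

```java
/** Hypothetical shape of a class compatible with the (IIII[F)V constructor. */
final class FaceSketch {
    final int width;      // face rectangle width
    final int height;     // face rectangle height
    final int imgWidth;   // width of the (rotated) camera image
    final int imgHeight;  // height of the (rotated) camera image
    final float[] points; // [faceX, faceY, p0x, p0y, ..., p4x, p4y]

    FaceSketch(int width, int height, int imgWidth, int imgHeight, float[] points) {
        this.width = width;
        this.height = height;
        this.imgWidth = imgWidth;
        this.imgHeight = imgHeight;
        this.points = points;
    }

    float faceX() { return points[0]; }

    float faceY() { return points[1]; }

    /** x of landmark i (0..4); landmarks start after the face-rectangle pair. */
    float landmarkX(int i) { return points[2 * (i + 1)]; }

    /** y of landmark i (0..4). */
    float landmarkY(int i) { return points[2 * (i + 1) + 1]; }

    /** Landmark x scaled to the 0..1 range, as a shader would want it. */
    float normalizedX(int i) { return landmarkX(i) / imgWidth; }

    /** Landmark y scaled to the 0..1 range. */
    float normalizedY(int i) { return landmarkY(i) / imgHeight; }
}
```

Normalizing by the image size mirrors the `rects[j].x / imgWidth` values that the native code logs.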

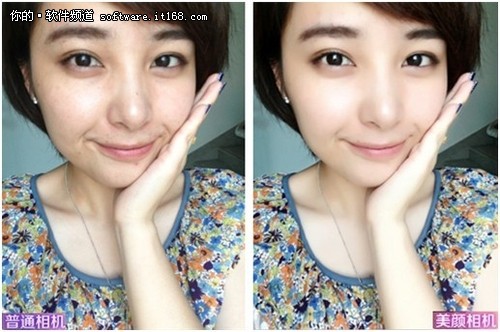

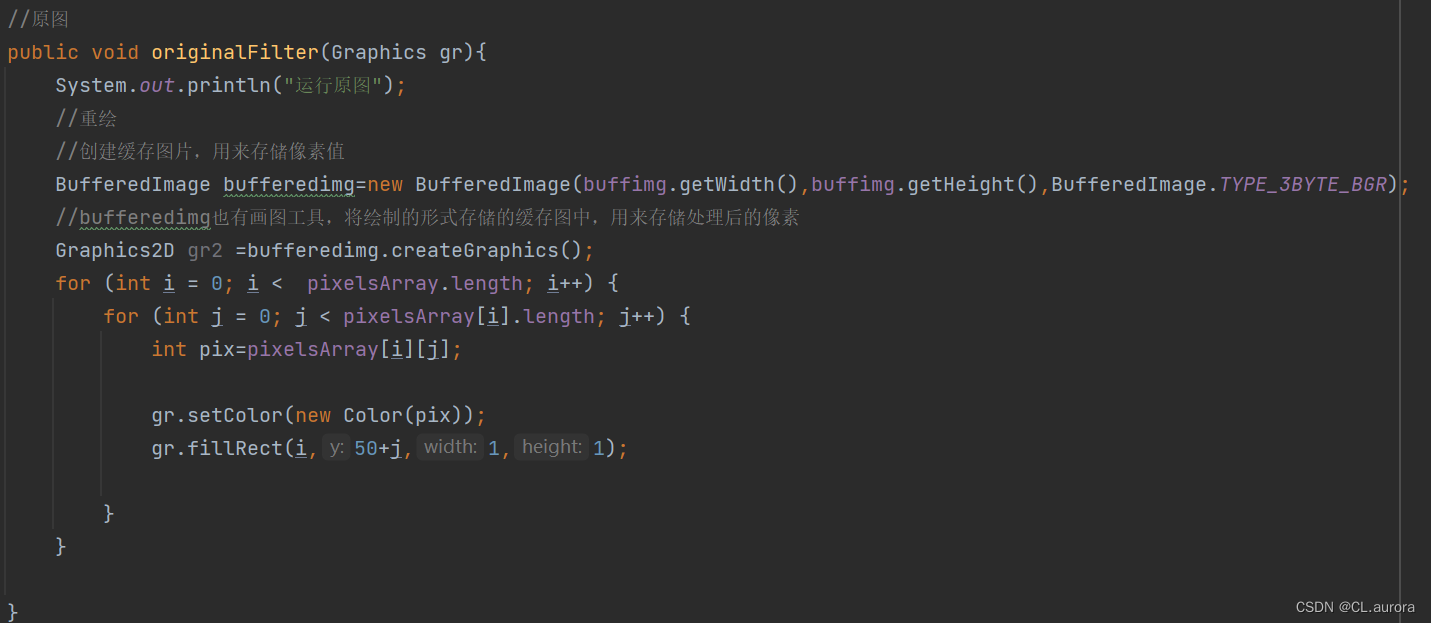

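Next, write the fragment shader for the beauty filter in GLSL. The beautification is based on:

- inversion

- high-pass filtering (high-contrast retention)

- Gaussian blur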

    precision mediump float;

    varying vec2 aCoord;
    uniform sampler2D vTexture;
    uniform int width;
    uniform int height;

    vec2 blurCoordinates[20];

    void main() {
        // 1. Blur (smoothing). Step size of one texel in texture coordinates
        vec2 singleStepOffest = vec2(1.0 / float(width), 1.0 / float(height));
        // Note: indices 5 and 11, and 7 and 9, sample the same offset.
        blurCoordinates[0] = aCoord.xy + singleStepOffest * vec2(0.0, -10.0);
        blurCoordinates[1] = aCoord.xy + singleStepOffest * vec2(0.0, 10.0);
        blurCoordinates[2] = aCoord.xy + singleStepOffest * vec2(-10.0, 0.0);
        blurCoordinates[3] = aCoord.xy + singleStepOffest * vec2(10.0, 0.0);
        blurCoordinates[4] = aCoord.xy + singleStepOffest * vec2(5.0, -8.0);
        blurCoordinates[5] = aCoord.xy + singleStepOffest * vec2(5.0, 8.0);
        blurCoordinates[6] = aCoord.xy + singleStepOffest * vec2(-8.0, 5.0);
        blurCoordinates[7] = aCoord.xy + singleStepOffest * vec2(8.0, 5.0);
        blurCoordinates[8] = aCoord.xy + singleStepOffest * vec2(8.0, -5.0);
        blurCoordinates[9] = aCoord.xy + singleStepOffest * vec2(8.0, 5.0);
        blurCoordinates[10] = aCoord.xy + singleStepOffest * vec2(-5.0, 8.0);
        blurCoordinates[11] = aCoord.xy + singleStepOffest * vec2(5.0, 8.0);
        blurCoordinates[12] = aCoord.xy + singleStepOffest * vec2(0.0, -6.0);
        blurCoordinates[13] = aCoord.xy + singleStepOffest * vec2(0.0, 6.0);
        blurCoordinates[14] = aCoord.xy + singleStepOffest * vec2(-6.0, 0.0);
        blurCoordinates[15] = aCoord.xy + singleStepOffest * vec2(6.0, 0.0);
        blurCoordinates[16] = aCoord.xy + singleStepOffest * vec2(-4.0, -4.0);
        blurCoordinates[17] = aCoord.xy + singleStepOffest * vec2(-4.0, 4.0);
        blurCoordinates[18] = aCoord.xy + singleStepOffest * vec2(4.0, -4.0);
        blurCoordinates[19] = aCoord.xy + singleStepOffest * vec2(4.0, 4.0);

        // Color at the center point
        vec4 currentColor = texture2D(vTexture, aCoord);
        vec3 totalRGB = currentColor.rgb;
        for (int i = 0; i < 20; i++) {
            totalRGB += texture2D(vTexture, blurCoordinates[i].xy).rgb;
        }
        // Average of the center and the 20 surrounding samples
        vec4 blur = vec4(totalRGB * 1.0 / 21.0, currentColor.a);

        // High-pass color: the original minus the blurred version
        vec4 highPassColor = currentColor - blur;
        // Amplify the high-pass detail (computed here, but not used in the final color below)
        highPassColor.r = clamp(2.0 * highPassColor.r * highPassColor.r * 24.0, 0.0, 1.0);
        highPassColor.g = clamp(2.0 * highPassColor.g * highPassColor.g * 24.0, 0.0, 1.0);
        highPassColor.b = clamp(2.0 * highPassColor.b * highPassColor.b * 24.0, 0.0, 1.0);

        // Blend 20% of the blurred color into the original
        vec3 rgb = mix(currentColor.rgb, blur.rgb, 0.2);
        gl_FragColor = vec4(rgb, 1.0);
    }
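To make the shader arithmetic easier to follow, here is the same per-channel math on the CPU: the blur is the average of the center sample and its 20 neighbors, the high-pass term is the squared and amplified difference between the center and the blur, and the output blends 20% of the blur into the original color. This is a plain-Java sketch for a single color channel. The class and method names are illustrative and do not come from the demo.

```java
/** CPU sketch of the per-channel math in the beauty fragment shader. */
final class BeautyMathSketch {

    /** Average of the center sample and its neighbors (20 neighbors in the shader). */
    static float boxBlur(float center, float[] neighbours) {
        float total = center;
        for (float n : neighbours) {
            total += n;
        }
        return total / (neighbours.length + 1);
    }

    /** Equivalent of GLSL clamp(v, lo, hi). */
    static float clamp(float v, float lo, float hi) {
        return Math.max(lo, Math.min(hi, v));
    }

    /** Equivalent of GLSL mix(a, b, t): linear interpolation from a to b. */
    static float mix(float a, float b, float t) {
        return a * (1.0f - t) + b * t;
    }

    /** Amplified high-pass term: clamp(2 * d * d * 24, 0, 1) with d = center - blur. */
    static float highPass(float center, float blur) {
        float d = center - blur;
        return clamp(2.0f * d * d * 24.0f, 0.0f, 1.0f);
    }

    /** Final channel value written by the shader: 80% original, 20% blur. */
    static float shade(float center, float[] neighbours) {
        return mix(center, boxBlur(center, neighbours), 0.2f);
    }

    public static void main(String[] args) {
        float[] darkNeighbours = new float[20]; // all zeros
        System.out.println(shade(1.0f, darkNeighbours));
    }
}
```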

The beauty filter, BeautyFilter

    import android.content.Context;
    import android.opengl.GLES20;

    import com.bigeye.demo.R;

    /**
     * Author: ZGQ
     * Created: 2019/11/25 15:52
     * Notes: AbstractFrameFilter is not listed in this article. It is expected to
     * provide the FBO fields mFrameBuffer and mFrameBufferTextures used below.
     */
    public class BeautyFilter extends AbstractFrameFilter {

        // Locations of the "width" and "height" uniforms in the shader
        private int width;
        private int height;

        public BeautyFilter(Context context) {
            super(context, R.raw.base_vertex, R.raw.beauty_frag);
            width = GLES20.glGetUniformLocation(mProgram, "width");
            height = GLES20.glGetUniformLocation(mProgram, "height");
        }

        @Override
        public int onDrawFrame(int textureId) {
            // Set the viewport
            GLES20.glViewport(0, 0, mWidth, mHeight);
            // Difference 1
            // Without this call we would draw into the GLSurfaceView's default
            // framebuffer, i.e. onto the screen.
            // Here we only draw into the FBO (as a cache).
            GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, mFrameBuffer[0]);
            // Use the shader program
            GLES20.glUseProgram(mProgram);
            GLES20.glUniform1i(width, mWidth);
            GLES20.glUniform1i(height, mHeight);

            mVertexBuffer.position(0);
            GLES20.glVertexAttribPointer(vPosition, 2, GLES20.GL_FLOAT, false, 0, mVertexBuffer);
            GLES20.glEnableVertexAttribArray(vPosition);

            mTextureBuffer.position(0);
            GLES20.glVertexAttribPointer(vCoord, 2, GLES20.GL_FLOAT, false, 0, mTextureBuffer);
            GLES20.glEnableVertexAttribArray(vCoord);

            // Activate the texture unit
            GLES20.glActiveTexture(GLES20.GL_TEXTURE0);
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textureId);
            GLES20.glUniform1i(vTexture, 0);
            // Draw
            GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);
            // Unbind
            GLES20.glBindFramebuffer(GLES20.GL_FRAMEBUFFER, 0);
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, 0);
            return mFrameBufferTextures[0];
        }
    }
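BeautyFilter uploads `width` and `height` so that the shader can turn pixel offsets into normalized texture coordinates: one texel is 1/width wide and 1/height tall, so an offset of 10 pixels to the right moves the sampling coordinate by 10/width. A small sketch of that conversion follows, assuming coordinates in the 0..1 range. The helper name `offsetCoord` is hypothetical.

```java
/** Sketch: converting a pixel offset into a normalized texture coordinate. */
final class TexelStepSketch {

    /**
     * Returns the (u, v) coordinate reached by moving dxPixels and dyPixels
     * away from (u, v) in a texture of the given size.
     */
    static float[] offsetCoord(float u, float v, int width, int height,
                               float dxPixels, float dyPixels) {
        float stepX = 1.0f / width;  // width of one texel
        float stepY = 1.0f / height; // height of one texel
        return new float[]{u + stepX * dxPixels, v + stepY * dyPixels};
    }

    public static void main(String[] args) {
        // Like blurCoordinates[3]: 10 pixels to the right of the center.
        float[] coord = offsetCoord(0.5f, 0.5f, 100, 200, 10.0f, 0.0f);
        System.out.println(coord[0] + ", " + coord[1]);
    }
}
```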