背景:

爬取目标:(你懂得)

url: h t t p s : / / w w w . j p x g y w . c o m 为什么要用scrapy-redis:

为什么用scrapy-redis,个人原因喜欢只爬取符合自己口味的,这样我只要开启爬虫,碰到喜欢的写真集,把url lpush到redis,爬虫就检测到url并开始运行,这样爬取就比较有针对性。说白了自己最后看的都是精选的,那岂不是美滋滋

爬取思路:

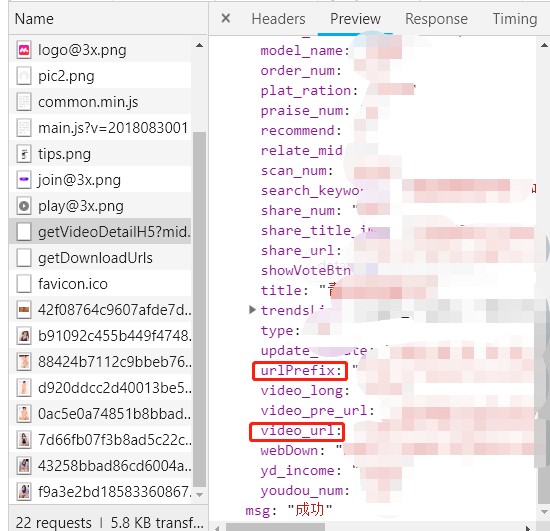

进入一个喜欢的写真集,我们的目标就是将下一页的url和图片的url提取出来

这里我试过,response.css和response.xpath都提取不到图片url,所以我们这里用selenium控制Chrome或PhantomJS获取源码来提取我们想要的url

开干!:

1、环境:

个人环境是python3.6.4+scrapy1.5.1

scrapy环境搭建我就不啰嗦了,pip3安装scrapy,网上教程一大堆。这里多说一句,我们既然爬取的是图片,Pillow这个库是必须要安装的,selenium这个库也需要,还有redis,如果没有,手动pip3 install Pillow/pip3 install selenium/pip3 install redis一下

附上个人虚拟环境库列表:

Scrapy 1.5.1

Pillow 5.2.0

pywin32 223

requests 2.19.1

selenium 3.14.0

redis2、创建爬虫

我们先创建一个scrapy项目,进入虚拟环境

scrapy startproject ScrapyRedisTest下一步就是搞到scrapy-redis的源码,访问github: https://github.com/rmax/scrapy-redis,下载项目

解压后我们把 src 中的scrapy_redis整个复制到刚刚创建的ScrapyRedisTest根目录下

在根目录下的ScrapyRedisTest中创建一个images文件夹作为图片存放文件

这是当前目录结构:

3、编写爬虫

环境搞定了,我们开始写爬虫

编写settings.py文件:(带注释)

童鞋们可以直接复制代码替换自动生成的settings.py

# -*- coding: utf-8 -*-import os,sys# Scrapy settings for ScrapyRedisTest ChatRoom

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://doc.scrapy.org/en/latest/topics/settings.html

# https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

# https://doc.scrapy.org/en/latest/topics/spider-middleware.htmlBOT_NAME = 'ScrapyRedisTest'SPIDER_MODULES = ['ScrapyRedisTest.spiders']

NEWSPIDER_MODULE = 'ScrapyRedisTest.spiders'# Crawl responsibly by identifying yourself (and your website) on the user-agent#scrapy自带的UserAgentMiddleware需要设置的参数,我们这里设置一个chrome的UserAgent

USER_AGENT = "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3112.90 Safari/537.36"# Obey robots.txt rules

ROBOTSTXT_OBEY = False #不遵循ROBOT协议# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32# Configure a delay for requests for the same website (default: 0)

# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16# Disable cookies (enabled by default)

#COOKIES_ENABLED = False# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}# Enable or disable spider middlewares

# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'ScrapyRedisTest.middlewares.ScrapyredistestSpiderMiddleware': 543,

#}

SCHEDULER = "scrapy_redis.scheduler.Scheduler" #格式:scrapy-redis调度器替换成scrapy_redis的

DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter" #格式:scrapy-redis去重器替换成scrapy_redis的

#ITEM_PIPELINES = {

# 'scrapy_redis.pipelines.RedisPipeline': 300,

#}BASE_DIR=os.path.dirname(os.path.abspath(os.path.dirname(__file__)))

sys.path.insert(0,os.path.join(BASE_DIR,'ScrapyRedisTest'))# Enable or disable downloader middlewares

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'ScrapyRedisTest.middlewares.ScrapyredistestDownloaderMiddleware': 543,

#}# Enable or disable extensions

# See https://doc.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}# Configure item pipelines

# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html

#ITEM_PIPELINES = {

# 'ScrapyRedisTest.pipelines.ScrapyredistestPipeline': 300,

#}

IMAGES_URLS_FIELD="front_image_url" #scrapy 自带的 ImagesPipeline根据这个字段判断从哪个item下载图片

project_dir=os.path.abspath(os.path.dirname(__file__))

IMAGES_STORE=os.path.join(project_dir,'images') #下载的图片保存在哪个文件夹# Enable and configure the AutoThrottle extension (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/autothrottle.html

AUTOTHROTTLE_ENABLED = True #scrapy会自动帮我们调整下载速度

# The initial download delay

AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

AUTOTHROTTLE_DEBUG = False

这里多说一句,个人喜欢设置AUTOTHROTTLE_ENABLED=True这个属性,虽然爬取速度可能会慢一点,但是能减少被反爬虫的几率,(爬取拉钩网的时候不设置这个就会被302)。其次,我们不限制速度爬的辣么快给人家网站整挂了也不好嘛

编写middlewares.py文件:

我们的目标是只访问特定的url使用selenium,所以我们编写一个middleware

童鞋们将代码拷贝到自动生成的middlewares.py文件里面,不要覆盖原有的

import time

from scrapy.http import HtmlResponseclass JSPageMiddleware_for_jp(object):def process_request(self, request, spider):if request.url.startswith("https://www.jpxgyw.com"): #特定url才使用selenium下载spider.driver.get(request.url)time.sleep(1)print("currentUrl", spider.driver.current_url)return HtmlResponse(url=spider.driver.current_url,body=spider.driver.page_source,encoding="utf-8",request=request)

编写spider文件:

在spiders文件下创建一个jp.py作为我们爬虫的spidier文件

这里我们将selenium.webdriver的初始化工作放到__init__中是为了每次爬取新的网站不用重复打开浏览器,(这招我是跟慕课网的bobby老师学的,老师666,为他打call)

# -*- coding: utf-8 -*-

import re

import scrapy

from scrapy_redis.spiders import RedisSpider

from selenium import webdriver

from scrapy.loader import ItemLoader

from scrapy.xlib.pydispatch import dispatcher

from scrapy import signalsfrom ScrapyRedisTest.items import JPItemclass JpSpider(RedisSpider):name = 'jp'allowed_domains = ['www.jpxgyw.com','img.xingganyouwu.com']redis_key = 'jp:start_urls' #redis的key值custom_settings = {"AUTOTHROTTLE_ENABLED": True, #开启自动调节爬取速度的插件"DOWNLOADER_MIDDLEWARES": {'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': 1, #使用scrapy自带的middleware模拟user-agent'ScrapyRedisTest.middlewares.JSPageMiddleware_for_jp': 2 #使用自己编写的middleware},"ITEM_PIPELINES": {'scrapy.pipelines.images.ImagesPipeline': 1, #使用scrapy自带的middleware下载图片}}current_page=0 #控制当前爬取到第几页的类变量# max_page=17@staticmethoddef judgeFinalPage(body): #判断是否已爬取到最后一页ma = re.search(r'性感尤物提醒你,访问页面出错了', body)return not madef __init__(self,**kwargs):#这里我使用了PhantomJS,童鞋可以替换成chromedriver,executable_path为我的电脑存放phantomjs的路径,童鞋自行替换,另外在linux上不用设置executable_pathself.driver=webdriver.PhantomJS(executable_path="D:\\phantomjs-2.1.1-windows\\bin\\phantomjs.exe")super(JpSpider,self).__init__()dispatcher.connect(self.spider_closed,signals.spider_closed)def spider_closed(self,spider): #当爬虫退出时关闭PhantomJSprint("spider closed")self.driver.quit()def parse(self, response):if "_" in response.url: #如果start_urls不是从第一页开始爬ma_url_num = re.search(r'_(\d+?).html', response.url) #提取当前数字self.current_page=int(ma_url_num.group(1)) #写到global变量self.current_page = self.current_page + 1ma_url = re.search(r'(.*)_\d+?.html', response.url) #提取当前urlnextUrl=ma_url.group(1)+"_"+str(self.current_page)+".html" #拼接下一页的urlprint("nextUrl", nextUrl)else: #如果start_urls是从第一页开始爬self.current_page=0 #重置next_page_num=self.current_page+1self.current_page=self.current_page+1nextUrl=response.url[:-5]+"_"+str(next_page_num)+".html" #拼接下一页的urlprint("nextUrl",nextUrl)ma = re.findall(r'src="/uploadfile(.*?).jpg', bytes.decode(response.body))imgUrls=[] #提取当前页所有图片url放到列表中for i in ma:imgUrl="http://img.xingganyouwu.com/uploadfile/"+i+".jpg"imgUrls.append(imgUrl)print("imgUrl",imgUrl)item_loader = ItemLoader(item=JPItem(), response=response)item_loader.add_value("front_image_url", imgUrls) #放到item中jp_item = item_loader.load_item()yield jp_item #交给pipline下载图片if self.judgeFinalPage(bytes.decode(response.body)): #如果判断不是最后一页,继续下载yield scrapy.Request(nextUrl, callback=self.parse, dont_filter=True)else:print("最后一页了!")编写items.py文件:

别忘了在items.py文件中加入下面代码,为scrapy图片下载器指定item

class JPItem(scrapy.Item):front_image_url=scrapy.Field()开始爬:

我是将项目放在自己的阿里云服务器上运行的(因为家里网速太慢。。)

首先要开启 redis-server 和 redis-cli windows,linux开启方法都很简单,这里偷懒不写了,请自行百度

之后cd 到爬虫根目录下 scrapy crawl jp 开启爬虫,下图显示爬虫正在执行并等待redis中的jp:start_urls

说明爬虫已经正常运行了,我们去redis,lpush一个url

之后我们就可以看到爬虫开始工作了

爬完这个url之后不需要关闭爬虫,因为它一直监听着redis,我们只要看到中意的url,lpush到redis中就可以了

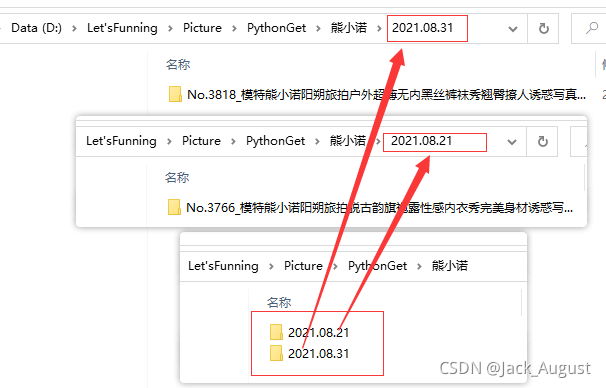

测试效果:稳定爬取321个url,894张图片

ok,剩下的我就不管了,在根目录下的images欣赏图片吧