Docker安装分布式Hadoop集群

一、准备环境

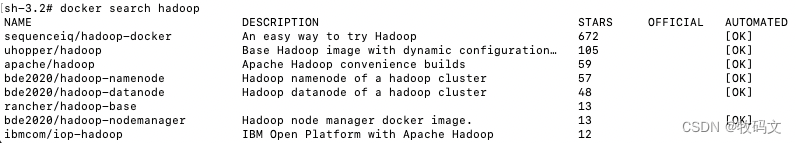

1. 查看docker的hadoop镜像

docker search hadoop

2. 拉取stars最多的镜像

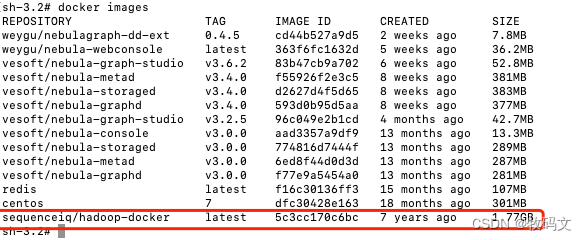

docker pull sequenceiq/hadoop-docker

3. 拉取完成后查看镜像是否已到本地

docker images

4. 运行第一个容器hadoop102

docker run --name hadoop102 -d -h hadoop102 -p 9870:9870 -p 19888:19888 -v /Users/anjuke/opt/data/hadoop:/opt/data/hadoop sequenceiq/hadoop-docker

5. 进入该容器

docker exec -it hadoop102 bash

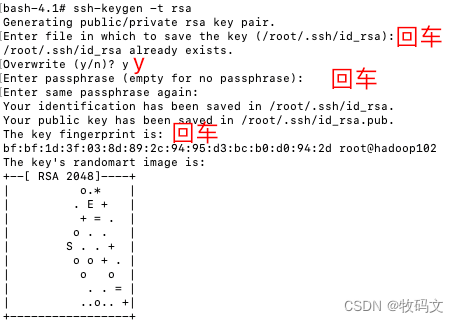

6. 配置ssh生成秘钥,所有的节点都要配置

/etc/init.d/sshd start

7. 生成密钥

ssh-keygen -t rsa

8. 复制公钥到authorized_keys中

cd /root/.ssh/

cat id_rsa.pub > authorized_keys

9. 运行hadoop103容器

docker run --name hadoop103 -d -h hadoop103 -p 8088:8088 sequenceiq/hadoop-docker

10. 运行hadoop104容器

docker run --name hadoop104 -d -h hadoop104 sequenceiq/hadoop-docker

11. 分别进入hadoop103、hadoop104容器执行ssh私钥配置,步骤5-8

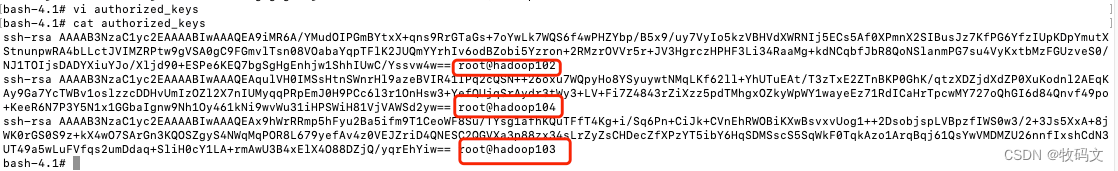

12. 将三个密钥全部复制到authorized_keys文件

13. 配置地址映射

vi /etc/hosts

172.17.0.2 hadoop102

172.17.0.3 hadoop103

172.17.0.4 hadoop104

14. 检查ssh是否成功

ssh hadoop102

ssh hadoop103

ssh hadoop104

二、配置Hadoop

hadoop目录安装在:/usr/local/hadoop-2.7.0/etc/hadoop

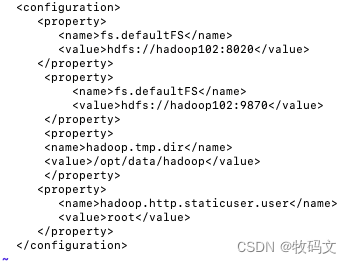

1. core-site.xml

<configuration><property> <name>fs.defaultFS</name> <value>hdfs://hadoop102:8020</value> </property> <property><name>hadoop.tmp.dir</name><value>/opt/data/hadoop</value></property><property> <name>hadoop.http.staticuser.user</name> <value>root</value> </property>

</configuration>

2. hdfs-site.xml

<property> <name>dfs.namenode.http-address</name> <value>hadoop102:9870</value> </property> 3. yarn-site.xml

<property> <name>yarn.resourcemanager.hostname</name> <value>hadoop103</value> </property>

4. mapred-site.xml

<configuration><property><name>mapreduce.framework.name</name><value>yarn</value></property>

</configuration>

5. 分发文件

scp /usr/local/hadoop-2.7.0/etc/hadoop/core-site.xml hadoop103:/usr/local/hadoop-2.7.0/etc/hadoop

scp /usr/local/hadoop-2.7.0/etc/hadoop/hdfs-site.xml hadoop103:/usr/local/hadoop-2.7.0/etc/hadoop

scp /usr/local/hadoop-2.7.0/etc/hadoop/yarn-site.xml hadoop103:/usr/local/hadoop-2.7.0/etc/hadoopscp /usr/local/hadoop-2.7.0/etc/hadoop/core-site.xml hadoop104:/usr/local/hadoop-2.7.0/etc/hadoop

scp /usr/local/hadoop-2.7.0/etc/hadoop/hdfs-site.xml hadoop104:/usr/local/hadoop-2.7.0/etc/hadoop

scp /usr/local/hadoop-2.7.0/etc/hadoop/yarn-site.xml hadoop104:/usr/local/hadoop-2.7.0/etc/hadoop

三、启动集群

1. 配置slaves文件

hadoop102

hadoop103

hadoop104

2. 发送到其他节点

scp /usr/local/hadoop-2.7.0/etc/hadoop/slaves hadoop103:/usr/local/hadoop-2.7.0/etc/hadoop

scp /usr/local/hadoop-2.7.0/etc/hadoop/slaves hadoop104:/usr/local/hadoop-2.7.0/etc/hadoop

3. 格式化文件系统

hdfs namenode -format

4. 在hadoop102启动hdfs

sbin/start-dfs.sh

5. 在hadoop103启动yarn

sbin/start-yarn.sh

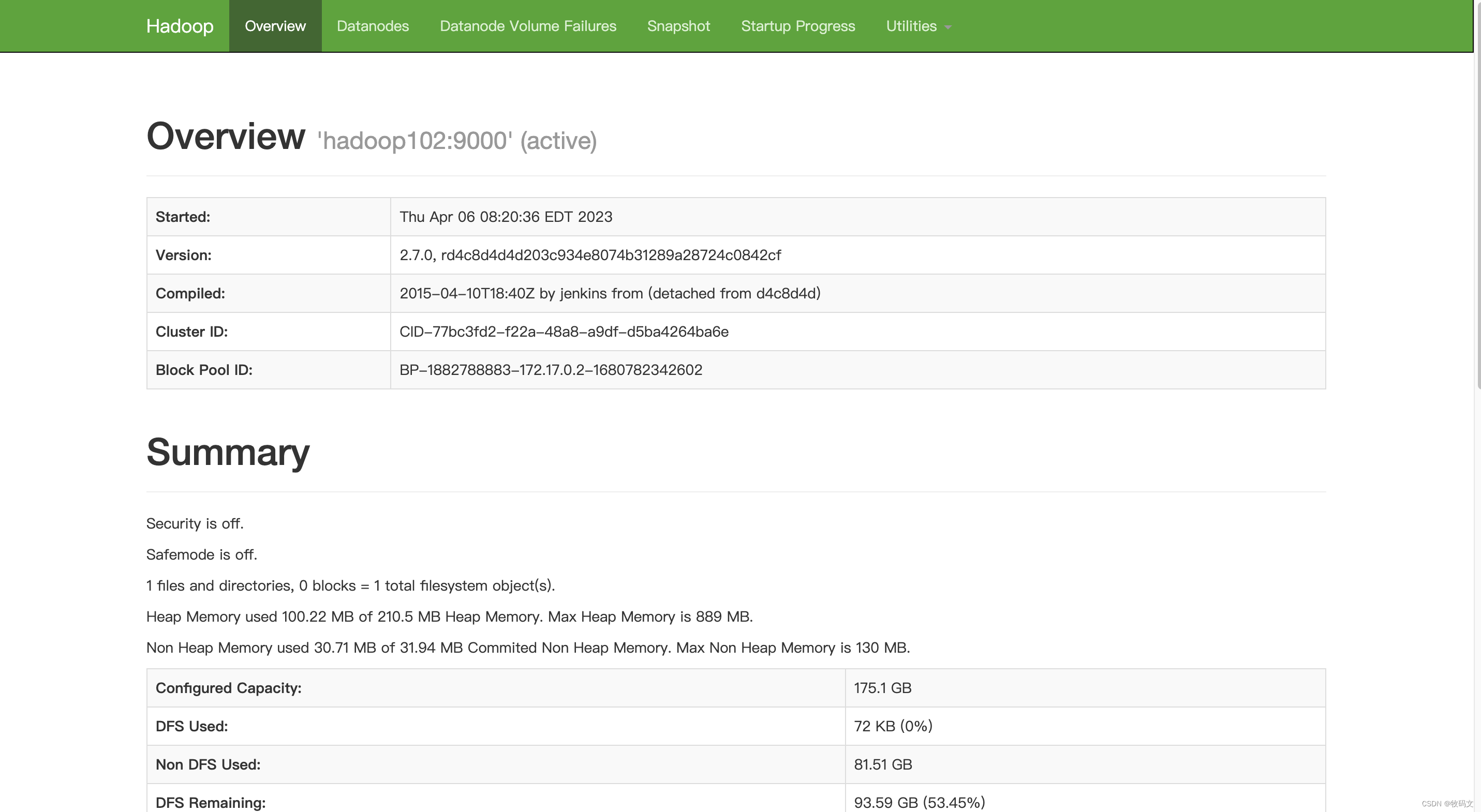

访问Hadoop102:9870,查看是否能够看到hdfs界面

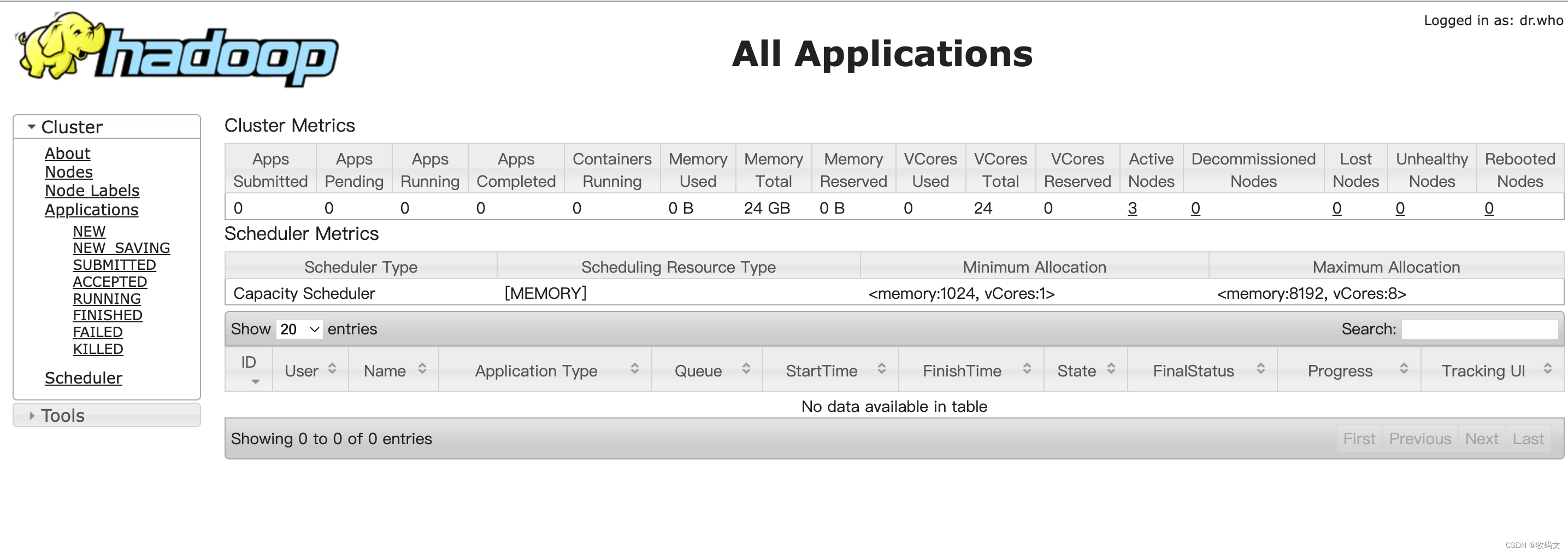

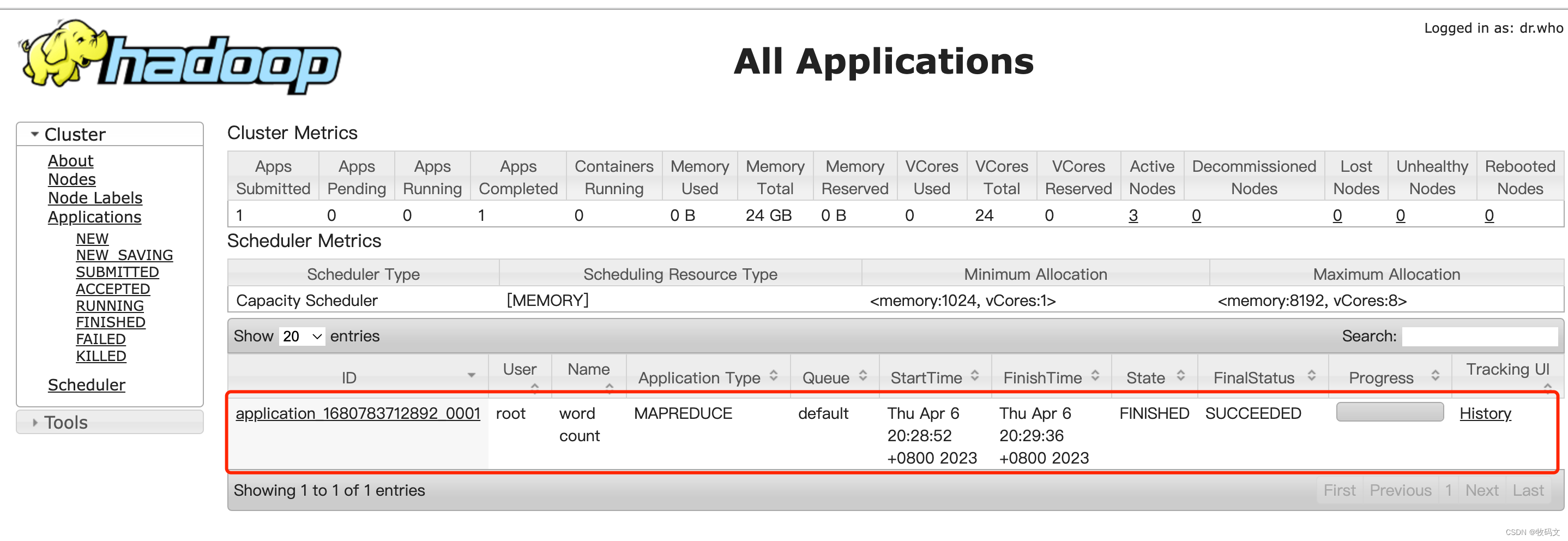

访问hadoop103:8088,查看能够看到yarn界面

集群搭建成功

四 案例

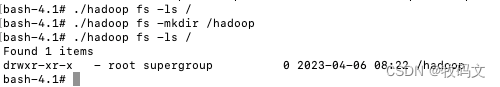

1. 执行一些hdfs命令

hadoop fs -ls /

hadoop fs -mkdir /hadoop

hadoop fs -ls /

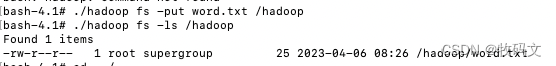

2. 上传文件到hdfs上

hadoop fs -put word.txt /hadoop

hadoop fs -ls /hadoop

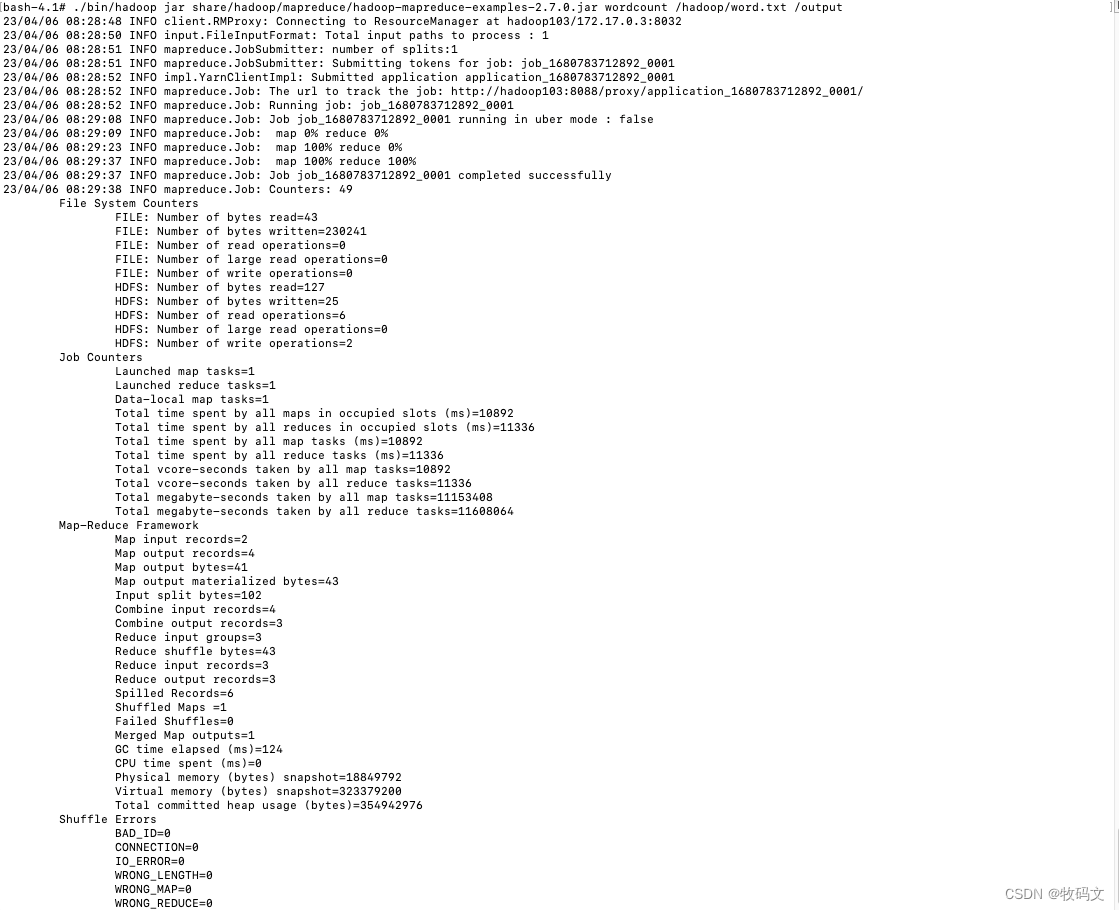

3. 执行wordcount案例

./bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.0.jar wordcount /hadoop/word.txt /output

在yarn上可以看到执行情况

五、关闭集群

hadoop102上:

stop-dfs.sh

hadoop103上:

stop-yarn.sh