Building the utility modules

- A User-Agent module that hides identity information, so the target server cannot identify the client.

import random

# Pool of browser User-Agent headers to choose from
user_agent_data = [
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3314.0 Safari/537.36 SE 2.X MetaSr 1.0"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.18362"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36 QIHU 360SE"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.162 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.106 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3722.400 QQBrowser/10.5.3751.400"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.18363"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3765.400 QQBrowser/10.6.4153.400"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3765.400 QQBrowser/10.6.4153.400"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.106 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.204 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.18362"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.18362"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.132 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.18362"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; ServiceUI 14) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36 Edge/18.18362"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.106 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; …) Gecko/20100101 Firefox/77.0"},
]


def get_headers():
    """Return a randomly chosen header dict."""
    # Return a copy so callers can add keys without changing the pool
    return dict(random.choice(user_agent_data))


if __name__ == '__main__':
    headers = get_headers()
    print("Randomly selected UA:", headers)
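Callers later add keys such as `Referer` to the returned dictionary, so returning a copy keeps the shared pool unchanged. A minimal sketch of that idea, assuming a shortened two-entry pool (`UA_POOL` and `pick_headers` are hypothetical names used only for illustration):

```python
import random

# Hypothetical, shortened pool used only for this illustration.
UA_POOL = [
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.106 Safari/537.36"},
    {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.162 Safari/537.36"},
]


def pick_headers(pool=UA_POOL):
    """Return a copy of a randomly chosen header dict."""
    # dict(...) makes a shallow copy, so the caller can add keys freely.
    return dict(random.choice(pool))


headers = pick_headers()
headers["Referer"] = "https://kanbook.net/328/3/1/1"  # does not touch UA_POOL
```

Each call hands out a fresh dictionary, so headers set for one request do not leak into later ones.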

- A rotating IP proxy pool that keeps the crawler's IP from being banned; the working proxies are already stored in ippool.json.

import json
import random


def get_proxies():
    """Return a randomly chosen proxy from the pool file."""
    # Read the proxy list from the JSON file
    with open("./ipfile/ippool.json", "r", encoding="utf-8") as rfile:
        proxy_lists = json.load(rfile)
    # Pick one entry at random
    return random.choice(proxy_lists)


if __name__ == '__main__':
    proxies = get_proxies()
    print("Randomly selected proxy:", proxies)
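The layout of ippool.json is not shown in this post. The sketch below assumes it is a JSON list of mappings in the form that `requests` accepts for its `proxies` argument (scheme to proxy URL); the addresses are placeholders and `load_proxy_pool` is a hypothetical helper name:

```python
import json
import os
import random
import tempfile


def load_proxy_pool(path):
    """Read the JSON file and return the list of proxy entries."""
    with open(path, "r", encoding="utf-8") as rfile:
        pool = json.load(rfile)
    if not pool:
        raise ValueError("proxy pool is empty: %s" % path)
    return pool


# Assumed file layout: a JSON list of requests-style proxy mappings.
sample_pool = [
    {"http": "http://10.0.0.1:8080"},  # placeholder address
    {"http": "http://10.0.0.2:3128"},  # placeholder address
]

# Write the sample to a temporary file so the example is self-contained.
with tempfile.TemporaryDirectory() as tmp_dir:
    sample_path = os.path.join(tmp_dir, "ippool.json")
    with open(sample_path, "w", encoding="utf-8") as wfile:
        json.dump(sample_pool, wfile)
    proxy = random.choice(load_proxy_pool(sample_path))

print("Randomly selected proxy:", proxy)
```

Checking for an empty pool up front gives a clearer error than the one raised when picking from an empty list.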

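Scraping the data on the comic's index page

1. Target page: http://kanbook.net/328

2. Scrape the title, page count, and href suffix of each volume and save them to a JSON file.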

import json
import os

import requests
from lxml import etree

import proxytool
import useragenttool


class OnePieceSpider(object):
    def __init__(self):
        # Target URL and containers for the results
        self.url = "http://kanbook.net/328"
        self.html_data = None
        self.one_piece_data_list = []

    def get_url_html(self):
        """Fetch the page source of the index page."""
        headers = useragenttool.get_headers()
        # Add extra headers to disguise the client
        headers["Accept-Encoding"] = "deflate, sdch, br"
        headers["Content-Type"] = "text/html; charset=UTF-8"
        headers["Referer"] = "https://kanbook.net/328/3/1/1"  # referring page
        # Send the request through a random proxy
        response = requests.get(url=self.url, headers=headers, proxies=proxytool.get_proxies())
        self.html_data = response.content.decode("utf-8")

    def catch_html_data(self):
        """Extract the volume data from the page source."""
        # Build the etree object
        data_parse = etree.HTML(self.html_data)
        li_list = data_parse.xpath("//div[@aria-labelledby='3-tab']/ol/li")
        # Iterate over the list in reverse order
        for li_element in li_list[::-1]:
            # href suffix of the volume link
            h_name = li_element.xpath("./a/@href")[0]
            # Title
            title = li_element.xpath("./a/@title")[0]
            # Page count
            page = int(li_element.xpath("./a/span/text()")[0][1:4])
            # Collect the fields in a dict
            one_piece_item = {"title": title, "postfix": h_name, "page": page}
            self.one_piece_data_list.append(one_piece_item)
            print("Item added!")

    def save_data_file(self):
        """Save the collected data as JSON."""
        path = "./image_url"
        if not os.path.exists(path):
            os.mkdir(path)
        with open(path + "/one_piece_data.json", "w", encoding="utf-8") as file:
            json.dump(self.one_piece_data_list, file, ensure_ascii=False, indent=2)
        print("Data saved!")

    def run(self):
        # Run the three steps in order
        self.get_url_html()
        self.catch_html_data()
        self.save_data_file()


def main():
    spider = OnePieceSpider()
    spider.run()


if __name__ == '__main__':
    main()
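The spider reads the page count by slicing fixed character positions (`[1:4]`) out of the span text, which only works while the number sits at exactly those positions. A possible alternative, sketched here with a hypothetical helper `parse_page_count`, is to take the first run of digits instead. The sample strings are invented, since the site's actual span text is not shown in this post:

```python
import re


def parse_page_count(span_text):
    """Return the first run of digits in span_text as an int, or None."""
    match = re.search(r"\d+", span_text)
    return int(match.group()) if match else None


# Hypothetical span texts; the real site's format is not shown in this post.
print(parse_page_count("(205P)"))
print(parse_page_count("no digits"))
```

Returning `None` for text without digits lets the caller decide whether to skip the volume or stop with an error.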

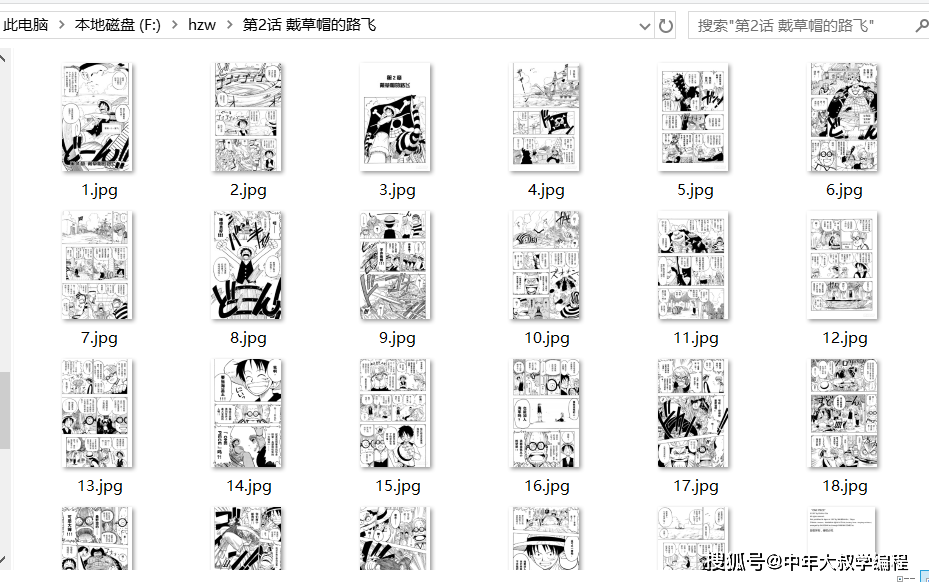

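Scraping all of the full-color One Piece comic images

- Note: the request headers must include a Referer, set to a page on the comic site itself.

The `while True` loops are there so that every volume's images are eventually downloaded: a failed attempt is retried, and the loop exits as soon as the download succeeds.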
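A `while True` retry never gives up, so a permanently broken URL would keep the program running forever. One possible variation is to cap the number of attempts. The sketch below uses a hypothetical `retry` helper and a simulated download (`flaky_download`) in place of a real network request:

```python
import time


def retry(action, max_attempts=5, delay_seconds=0.0):
    """Call action() until it succeeds or max_attempts is reached."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return action()
        except Exception as error:
            last_error = error
            print("Attempt %d failed: %s" % (attempt, error))
            time.sleep(delay_seconds)
    raise RuntimeError("gave up after %d attempts" % max_attempts) from last_error


# Hypothetical stand-in for a download that fails twice before succeeding.
calls = {"count": 0}


def flaky_download():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated network failure")
    return b"image-bytes"


content = retry(flaky_download)
```

In the spider, `action` could be a small function wrapping the request for one image, with `delay_seconds` set to a pause between attempts.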

import json
import os
import random
import re
import time

import requests
import urllib3

import proxytool
import useragenttool

urllib3.disable_warnings()


class OnePieceImageSpider(object):
    def __init__(self):
        # URL template, set later through set_url()
        self.url = ""

    def set_url(self, out_url):
        """Set the URL template."""
        self.url = out_url

    def get_url_list(self, num):
        """Build the URLs for pages 1 to num."""
        url_list = []
        # Fill the page number into the template
        for page in range(1, num + 1):
            new_url = self.url.format(page)
            url_list.append(new_url)
        return url_list

    def get_url_html(self, inner_url):
        """Fetch the raw content of the given URL."""
        headers = useragenttool.get_headers()
        headers["Accept-Encoding"] = "deflate, sdch, br"
        headers["Content-Type"] = "text/html; charset=UTF-8"
        headers["Referer"] = "https://kanbook.net/328/3/6"  # referring page
        response = requests.get(url=inner_url, headers=headers, proxies=proxytool.get_proxies(), timeout=30, verify=False)
        # Wait a random number of seconds between requests
        wait_time = random.randint(1, 6)
        time.sleep(wait_time)
        return response.content

    def __download_image(self, image_url, name, index):
        """
        Download one image.
        :param image_url: image address
        :param name: folder name (the volume title)
        :param index: image number
        :return:
        """
        while True:
            try:
                if len(image_url) == 0:
                    break
                content = self.get_url_html(image_url)
                path = "./onepieceimage/%s" % name
                # makedirs also creates ./onepieceimage if it is missing
                os.makedirs(path, exist_ok=True)
                with open(path + "/%d.jpg" % index, "wb") as wfile:
                    wfile.write(content)
                break
            except Exception as msg:
                print("An exception occurred:", msg)

    def run(self, url_list, title):
        # Visit every page URL and extract the image address from its HTML
        index = 2
        for url in url_list:
            while True:
                try:
                    data = self.get_url_html(url).decode("utf-8")
                    regex = r"""var img_list=(\[.+])"""
                    result = re.findall(regex, data)
                    # Convert the matched text into a list
                    lists = json.loads(result[0])
                    img_url = lists[0]
                    print(img_url)
                    break
                except Exception as msg:
                    print("Error:", msg)
            self.__download_image(img_url, title, index)
            print("Image %d downloaded" % index)
            index += 1
        print("All images downloaded")


def main():
    # Load the data saved by the index-page spider
    with open("./image_url/one_piece_data.json", "r", encoding="utf-8") as read_file:
        one_piece_data = json.load(read_file)
    # Process each volume
    for element in one_piece_data:
        # href suffix, page count, and title of the volume
        href_name = element["postfix"]
        number = element["page"]
        name = element["title"]
        # Build the URL template
        http_url = "http://kanbook.net" + href_name + "/{}"
        onepieceimgspider = OnePieceImageSpider()
        onepieceimgspider.set_url(http_url)
        print("%s: starting download!" % name)
        # Get the URL of every page in the volume
        url_list = onepieceimgspider.get_url_list(number)
        onepieceimgspider.run(url_list, name)


if __name__ == '__main__':
    main()

Saved format: