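Table of Contents

- 1. ZooKeeper cluster
- 2. Kafka cluster installation
  - 2.1 Configuration for a ZooKeeper-based cluster
  - 2.2 Configuration for a KRaft-mode cluster
  - 2.3 Starting the Kafka cluster
- 3. Installing the kafka_exporter monitoring component
  - 3.1 Installation
  - 3.2 Running as a system service
  - 3.3 Integrating with Prometheus
- 4. Integrating with Grafana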

1. ZooKeeper cluster

Download: https://zookeeper.apache.org/releases.html#download

tar -zxvf zookeeper-3.4.11.tar.gz -C /usr/local

cp /usr/local/zookeeper-3.4.11/conf/zoo_sample.cfg /usr/local/zookeeper-3.4.11/conf/zoo.cfg

vi /usr/local/zookeeper-3.4.11/conf/zoo.cfg

### zoo.cfg contents ###

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/usr/local/zookeeper-3.4.11/data/

clientPort=2181

server.0=192.168.28.133:2888:3888

server.1=192.168.28.136:2888:3888

server.2=192.168.28.132:2888:3888

### end of zoo.cfg ###

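Open the required ports in the firewall (2181 for clients, 2888 for follower-to-leader connections, 3888 for leader election):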

firewall-cmd --zone=public --add-port=2181/tcp --permanent

firewall-cmd --zone=public --add-port=2888/tcp --permanent

firewall-cmd --zone=public --add-port=3888/tcp --permanent

firewall-cmd --reload

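On each of the three nodes, create the data directory and the myid file. The myid values are 0, 1 and 2 respectively and must match server.0, server.1 and server.2 above. The echo command below is for the first node (192.168.28.133).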

mkdir -p /usr/local/zookeeper-3.4.11/data/

echo 0 > /usr/local/zookeeper-3.4.11/data/myid
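Each node's myid has to match its own server.N line, so you can look the value up in zoo.cfg instead of typing it by hand. The following is a minimal bash sketch. It assumes you pass the node's own IP as an argument. `myid_for_ip` is a hypothetical helper and is not part of ZooKeeper:

```bash
# Hypothetical helper (not part of ZooKeeper): print N from the
# "server.N=<host>:2888:3888" line whose host equals the given IP.
myid_for_ip() {
  local cfg="$1" ip="$2"
  awk -v ip="$ip" '
    /^server\.[0-9]+=/ {
      split($0, kv, "=")      # kv[1] = "server.N", kv[2] = "host:2888:3888"
      split(kv[2], hp, ":")   # hp[1] = host
      if (hp[1] == ip) {
        sub(/^server\./, "", kv[1])
        print kv[1]
        found = 1
        exit
      }
    }
    # Non-zero exit status when no server.N line matches the IP.
    END { exit (found ? 0 : 1) }
  ' "$cfg"
}
```

For example, on 192.168.28.136, running `myid_for_ip /usr/local/zookeeper-3.4.11/conf/zoo.cfg 192.168.28.136 > /usr/local/zookeeper-3.4.11/data/myid` writes 1.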

Start ZooKeeper on each of the three nodes:

# Start

/usr/local/zookeeper-3.4.11/bin/zkServer.sh start

# Check status

/usr/local/zookeeper-3.4.11/bin/zkServer.sh status

# Stop

/usr/local/zookeeper-3.4.11/bin/zkServer.sh stop

# Restart

/usr/local/zookeeper-3.4.11/bin/zkServer.sh restart
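In a healthy three-node ensemble, `zkServer.sh status` should report `Mode: leader` on exactly one node and `Mode: follower` on the other two. The minimal sketch below checks this against the combined status output of all nodes. `check_zk_ensemble` is a hypothetical helper. Collecting the output over ssh is an assumption about your environment:

```bash
# Hypothetical helper: read combined "zkServer.sh status" output on stdin and
# succeed only if there is exactly one leader and every node reported a mode.
check_zk_ensemble() {
  local expected="$1"   # number of nodes in the ensemble, e.g. 3
  awk -v n="$expected" '
    /^Mode: leader/   { leaders++ }
    /^Mode: follower/ { followers++ }
    END {
      printf "leaders=%d followers=%d\n", leaders, followers
      exit (leaders == 1 && leaders + followers == n + 0) ? 0 : 1
    }'
}
```

For example, assuming passwordless ssh between the nodes: `for h in 192.168.28.133 192.168.28.136 192.168.28.132; do ssh "$h" /usr/local/zookeeper-3.4.11/bin/zkServer.sh status 2>&1; done | check_zk_ensemble 3`.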

2. Kafka cluster installation

Download: https://kafka.apache.org/downloads

tar -zxvf kafka_2.13-3.2.3.tgz -C /usr/local

cd /usr/local/kafka_2.13-3.2.3

A Kafka cluster can be deployed either with ZooKeeper or in KRaft mode (without ZooKeeper). Choose one of the two.

2.1 Configuration for a ZooKeeper-based cluster

Edit server.properties:

vi /usr/local/kafka_2.13-3.2.3/config/server.properties

######## server.properties ########

# broker.id is 0, 1 and 2 on the three nodes respectively

broker.id=0

# Set listeners to each machine's own IP

listeners=PLAINTEXT://192.168.28.133:9092

zookeeper.connect=192.168.28.133:2181,192.168.28.136:2181,192.168.28.132:2181

log.dirs=/usr/local/kafka_2.13-3.2.3/logs/

######## end of server.properties ########
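Only broker.id and the IP in listeners differ between the three brokers, so you can script the per-node edit. The minimal sketch below uses GNU `sed -i`. It assumes the file already contains an uncommented `broker.id=` line and an uncommented `listeners=PLAINTEXT://` line, as shown above. `set_broker_identity` is a hypothetical helper name:

```bash
# Hypothetical helper: set this node's broker.id and listeners IP in place.
# Requires GNU sed (-i without a backup-suffix argument).
set_broker_identity() {
  local file="$1" id="$2" ip="$3"
  sed -i \
    -e "s/^broker\.id=.*/broker.id=${id}/" \
    -e "s#^listeners=PLAINTEXT://.*#listeners=PLAINTEXT://${ip}:9092#" \
    "$file"
}
```

For example, on the third node: `set_broker_identity /usr/local/kafka_2.13-3.2.3/config/server.properties 2 192.168.28.132`.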

2.2 Configuration for a KRaft-mode cluster

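Open the firewall ports: 9092 for client traffic (the ZooKeeper-based setup needs it too) and 9093 for the KRaft controller listener: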

firewall-cmd --zone=public --add-port=9092/tcp --permanent

firewall-cmd --zone=public --add-port=9093/tcp --permanent

firewall-cmd --reload

Edit config/kraft/server.properties:

vi /usr/local/kafka_2.13-3.2.3/config/kraft/server.properties

# server.properties ##################

process.roles=broker,controller

# node.id is 1, 2 and 3 on the three machines and must be unique

node.id=1

controller.quorum.voters=1@192.168.28.133:9093,2@192.168.28.136:9093,3@192.168.28.132:9093

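# Set the IP in listeners to each machine's own IP. Also set advertised.listeners=PLAINTEXT://<that IP>:9092, because this file ships with advertised.listeners=PLAINTEXT://localhost:9092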

listeners=PLAINTEXT://192.168.28.133:9092,CONTROLLER://192.168.28.133:9093

log.dirs=/usr/local/kafka_2.13-3.2.3/kraft-combined-logs

# end of server.properties ##################
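controller.quorum.voters must be identical on all three nodes. It lists every controller as `node.id@host:port`. The minimal sketch below builds the value from the IP list. It assumes node.id is assigned 1, 2, 3 in list order, as in the configuration above. `build_voters` is a hypothetical helper:

```bash
# Hypothetical helper: build "1@ip1:port,2@ip2:port,..." with node ids 1..N
# assigned in argument order.
build_voters() {
  local port="$1"; shift
  local id=1 out="" ip
  for ip in "$@"; do
    out+="${out:+,}${id}@${ip}:${port}"   # add a comma only after the first entry
    id=$((id + 1))
  done
  printf '%s\n' "$out"
}
```

`echo "controller.quorum.voters=$(build_voters 9093 192.168.28.133 192.168.28.136 192.168.28.132)"` prints the line used above.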

Generate the cluster UUID once, on any one node:

./bin/kafka-storage.sh random-uuid

# Example output: rrGzprV0RPyWTVek9gyTcg

Format the Kafka storage directory with that same UUID. Run this on every node:

./bin/kafka-storage.sh format -t rrGzprV0RPyWTVek9gyTcg -c ./config/kraft/server.properties
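After formatting, the log directory contains a meta.properties file that holds the `cluster.id` and `node.id` written by kafka-storage.sh. Every node should show the same cluster.id. The minimal sketch below reads a key from that file. It assumes the log.dirs path configured above. `read_meta` is a hypothetical helper:

```bash
# Hypothetical helper: print the value of one key from <dir>/meta.properties
# (a Java properties file with key=value lines).
read_meta() {
  local dir="$1" key="$2"
  awk -F'=' -v k="$key" '$1 == k { sub(/^[^=]*=/, ""); print; exit }' "$dir/meta.properties"
}
```

Run `read_meta /usr/local/kafka_2.13-3.2.3/kraft-combined-logs cluster.id` on each node and compare the results.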

2.3 Starting the Kafka cluster

Commands:

cd /usr/local/kafka_2.13-3.2.3

# 1. Start

# (1) ZooKeeper-based cluster

bin/kafka-server-start.sh -daemon config/server.properties

# (2) KRaft cluster

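bin/kafka-server-start.sh -daemon ./config/kraft/server.properties

# 2. Stop (kafka-server-stop.sh stops the Kafka server processes on this host; the config file argument is not needed)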

# (1) ZooKeeper-based cluster

bin/kafka-server-stop.sh config/server.properties

# (2) KRaft cluster

bin/kafka-server-stop.sh ./config/kraft/server.properties

# 3. Topics

# (1) Create a topic: --partitions is the number of partitions, --replication-factor the number of replicas of each partition

$ bin/kafka-topics.sh --create --topic topic-test --zookeeper 192.168.28.133:2181,192.168.28.136:2181,192.168.28.132:2181 --replication-factor 3 --partitions 3

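# Since Kafka 2.2, --bootstrap-server is recommended instead of --zookeeper. --zookeeper was removed from kafka-topics.sh in Kafka 3.0, so the form above only works on older versions. With 3.2.3, use: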

$ bin/kafka-topics.sh --create --topic topic-test --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --replication-factor 3 --partitions 3

# (2) List all topics

$ bin/kafka-topics.sh --list --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092

# (3) Describe a topic

$ bin/kafka-topics.sh --describe --topic topic-test --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092

# (4) Increase the number of partitions of a topic (the count can only be increased, never decreased)

$ bin/kafka-topics.sh --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --alter --topic topic-test --partitions 5

# (5) Show the largest or smallest offset of a partition: --time -1 returns the largest (latest) offset, -2 the smallest (earliest):

$ bin/kafka-run-class.sh kafka.tools.GetOffsetShell --topic topic-test --time -1 --broker-list 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --partitions 0

# 4. Produce messages (type one message per line; press Ctrl+C to exit)

$ bin/kafka-console-producer.sh --topic topic-test --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092

This is my first test

This is my second test

# 5. Consume messages

# (1) From the beginning

$ bin/kafka-console-consumer.sh --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --topic topic-test --from-beginning

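# (2) From the end (latest offset); --offset requires --partition

$ bin/kafka-console-consumer.sh --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --topic topic-test --offset latest --partition 0

# (3) Consume a fixed number of messages, then exit (--max-messages)

$ bin/kafka-console-consumer.sh --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --topic topic-test --offset latest --partition 0 --max-messages 1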

# (4) Consume as a named consumer group

$ bin/kafka-console-consumer.sh --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --topic topic-test --group test_group --from-beginning

# 6. Consumer groups

# (1) List consumer groups

$ bin/kafka-consumer-groups.sh --list --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092

# (2) Describe a group (the LAG column shows how far the group is behind, i.e. whether consumption is delayed)

$ bin/kafka-consumer-groups.sh --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --group test_group --describe

# (3) Delete a group (the group must have no active consumers)

$ bin/kafka-consumer-groups.sh --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --group test_group --delete

# 7. Balance leaders (preferred leader election)

$ bin/kafka-preferred-replica-election.sh --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092

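# kafka-preferred-replica-election.sh was removed in Kafka 3.0; on 3.x use kafka-leader-election.sh instead. --partition selects the partition whose leader is re-elected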

$ bin/kafka-leader-election.sh --bootstrap-server 192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092 --topic test --partition=2 --election-type preferred
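kafka-leader-election.sh can also read the partitions from a JSON file passed with `--path-to-json-file`, in the form `{"partitions":[{"topic":"...","partition":N}]}`. The minimal sketch below generates such a file for one topic. `leader_election_json` is a hypothetical helper. It does no escaping, because Kafka topic names may only contain ASCII letters, digits, `.`, `_` and `-`:

```bash
# Hypothetical helper: print the JSON document for kafka-leader-election.sh
# --path-to-json-file, covering the given partitions of one topic.
leader_election_json() {
  local topic="$1"; shift
  local p items=""
  for p in "$@"; do
    items+="${items:+,}{\"topic\":\"${topic}\",\"partition\":${p}}"
  done
  printf '{"partitions":[%s]}\n' "$items"
}
```

For example, run `leader_election_json test 0 2 > /tmp/election.json`, then `bin/kafka-leader-election.sh --bootstrap-server 192.168.28.133:9092 --election-type preferred --path-to-json-file /tmp/election.json`.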

# 8. Built-in performance test tool

$ bin/kafka-producer-perf-test.sh --topic test --num-records 100 --record-size 1 --throughput 100 --producer-props bootstrap.servers=192.168.28.133:9092,192.168.28.136:9092,192.168.28.132:9092
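The LAG column in the consumer-group `--describe` output above is the per-partition backlog (LOG-END-OFFSET minus CURRENT-OFFSET). The minimal sketch below sums it for one group. It assumes the default tabular output, whose header row contains LAG. Partitions without a committed offset show `-` and are skipped. `total_lag` is a hypothetical helper:

```bash
# Hypothetical helper: sum the LAG column of
# "kafka-consumer-groups.sh --describe" output read from stdin.
total_lag() {
  awk '
    # Until the header is seen, look for the column titled LAG.
    !col { for (i = 1; i <= NF; i++) if ($i == "LAG") col = i; next }
    # Add numeric LAG values; "-" (no committed offset) is skipped.
    $col ~ /^[0-9]+$/ { sum += $col }
    END { print sum + 0 }
  '
}
```

For example: `bin/kafka-consumer-groups.sh --bootstrap-server 192.168.28.133:9092 --group test_group --describe | total_lag`.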

3. Installing the kafka_exporter monitoring component

3.1 Installation

Download: https://github.com/danielqsj/kafka_exporter

# Extract

tar -zxvf kafka_exporter-1.6.0.linux-amd64.tar.gz -C /usr/local

# Start

/usr/local/kafka_exporter-1.6.0.linux-amd64/kafka_exporter --kafka.server=192.168.28.133:9092 --web.listen-address=:9308

# Check the exported metrics

curl http://127.0.0.1:9308/metrics
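kafka_exporter exposes a `kafka_brokers` gauge with the number of brokers it can see, which makes a quick sanity check after startup. The minimal sketch below extracts the value of an unlabeled metric from the Prometheus text output. `metric_value` is a hypothetical helper and does not handle labeled series:

```bash
# Hypothetical helper: print the value of an unlabeled metric ("name value")
# from Prometheus text-format input on stdin; fails if the metric is absent.
metric_value() {
  local name="$1"
  awk -v m="$name" '
    $1 == m { print $2; found = 1; exit }   # "# HELP"/"# TYPE" lines have $1 == "#"
    END { exit (found ? 0 : 1) }
  '
}
```

For the cluster in this article, `curl -s http://127.0.0.1:9308/metrics | metric_value kafka_brokers` should print 3.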

Available startup flags (pass each one as --flag=value, e.g. --kafka.server=192.168.28.133:9092):

| Flag | Default | Description |
|---|---|---|
| kafka.server | kafka:9092 | Address (host:port) of a Kafka server; repeat the flag for multiple brokers |
| kafka.version | 2.0.0 | Kafka broker version |
| sasl.enabled | false | Connect using SASL/PLAIN |
| sasl.handshake | true | Only set this to false if using a non-Kafka SASL proxy |
| sasl.username | | SASL user name |
| sasl.password | | SASL user password |
| sasl.mechanism | | SASL mechanism: plain, scram-sha512 or scram-sha256 |
| sasl.service-name | | Service name when using Kerberos auth |
| sasl.kerberos-config-path | | Kerberos config path |
| sasl.realm | | Kerberos realm |
| sasl.keytab-path | | Kerberos keytab file path |
| sasl.kerberos-auth-type | | Kerberos auth type: either 'keytabAuth' or 'userAuth' |
| tls.enabled | false | Connect to Kafka using TLS |
| tls.server-name | | Used to verify the hostname on the returned certificates unless tls.insecure-skip-tls-verify is given; set it to the Kafka server's name |
| tls.ca-file | | Optional certificate authority file for Kafka TLS client authentication |
| tls.cert-file | | Optional certificate file for Kafka client authentication |
| tls.key-file | | Optional key file for Kafka client authentication |
| tls.insecure-skip-tls-verify | false | If true, the server's certificate is not checked for validity |
| server.tls.enabled | false | Enable TLS for the web server |
| server.tls.mutual-auth-enabled | false | Enable TLS client mutual authentication |
| server.tls.ca-file | | Certificate authority file for the web server |
| server.tls.cert-file | | Certificate file for the web server |
| server.tls.key-file | | Key file for the web server |
| topic.filter | .* | Regex that determines which topics to collect |
| group.filter | .* | Regex that determines which consumer groups to collect |
| web.listen-address | :9308 | Address to listen on for the web interface and telemetry |
| web.telemetry-path | /metrics | Path under which to expose metrics |
| log.enable-sarama | false | Turn on Sarama logging |
| use.consumelag.zookeeper | false | Set if you need to use consumer groups stored in ZooKeeper |
| zookeeper.server | localhost:2181 | Address (hosts) of the ZooKeeper server |
| kafka.labels | | Kafka cluster name |
| refresh.metadata | 30s | Metadata refresh interval |
| offset.show-all | true | Whether to show the offset/lag for all consumer groups; otherwise only connected consumer groups are shown |
| concurrent.enable | false | If true, every scrape triggers Kafka operations; otherwise scrapes share results. WARN: this should be disabled on large clusters |
| topic.workers | 100 | Number of topic workers |
| verbosity | 0 | Verbosity log level |

3.2 Running as a system service

vi /usr/lib/systemd/system/kafka_exporter.service

[Unit]

Description=Prometheus Kafka Exporter

After=network.target

[Service]

Type=simple

User=root

Group=root

ExecStart=/usr/local/kafka_exporter-1.6.0.linux-amd64/kafka_exporter --kafka.server=192.168.28.133:9092 --kafka.server=192.168.28.136:9092 --kafka.server=192.168.28.132:9092 --web.listen-address=:9308

Restart=on-failure

[Install]

WantedBy=multi-user.target
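`--kafka.server` can be repeated, as in the ExecStart line above, so the exporter has several seed brokers to connect through. The minimal sketch below generates that line from a broker list. It assumes port 9092 and the :9308 listen address. `exporter_execstart` is a hypothetical helper:

```bash
# Hypothetical helper: print an ExecStart= line with one --kafka.server flag
# per broker IP (port 9092 assumed) and the exporter listening on :9308.
exporter_execstart() {
  local bin="$1"; shift
  local args="" b
  for b in "$@"; do
    args+=" --kafka.server=${b}:9092"
  done
  printf 'ExecStart=%s%s --web.listen-address=:9308\n' "$bin" "$args"
}
```

Calling it with the exporter path and the three broker IPs reproduces the ExecStart line of the unit file above.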

Manage the service:

systemctl daemon-reload # reload unit files

systemctl enable kafka_exporter # start on boot

systemctl disable kafka_exporter # do not start on boot

systemctl start kafka_exporter # start the service

systemctl stop kafka_exporter # stop the service

systemctl status kafka_exporter # show status

3.3 Integrating with Prometheus

vi /usr/local/prometheus-2.37.0.linux-amd64/prometheus.yml

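Add the following job under the existing scrape_configs: section (the target is the host running kafka_exporter):

  - job_name: 'kafka_export'

    static_configs:

      - targets: ['192.168.245.139:9308']

        labels:

          app: 'zxt_prod'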

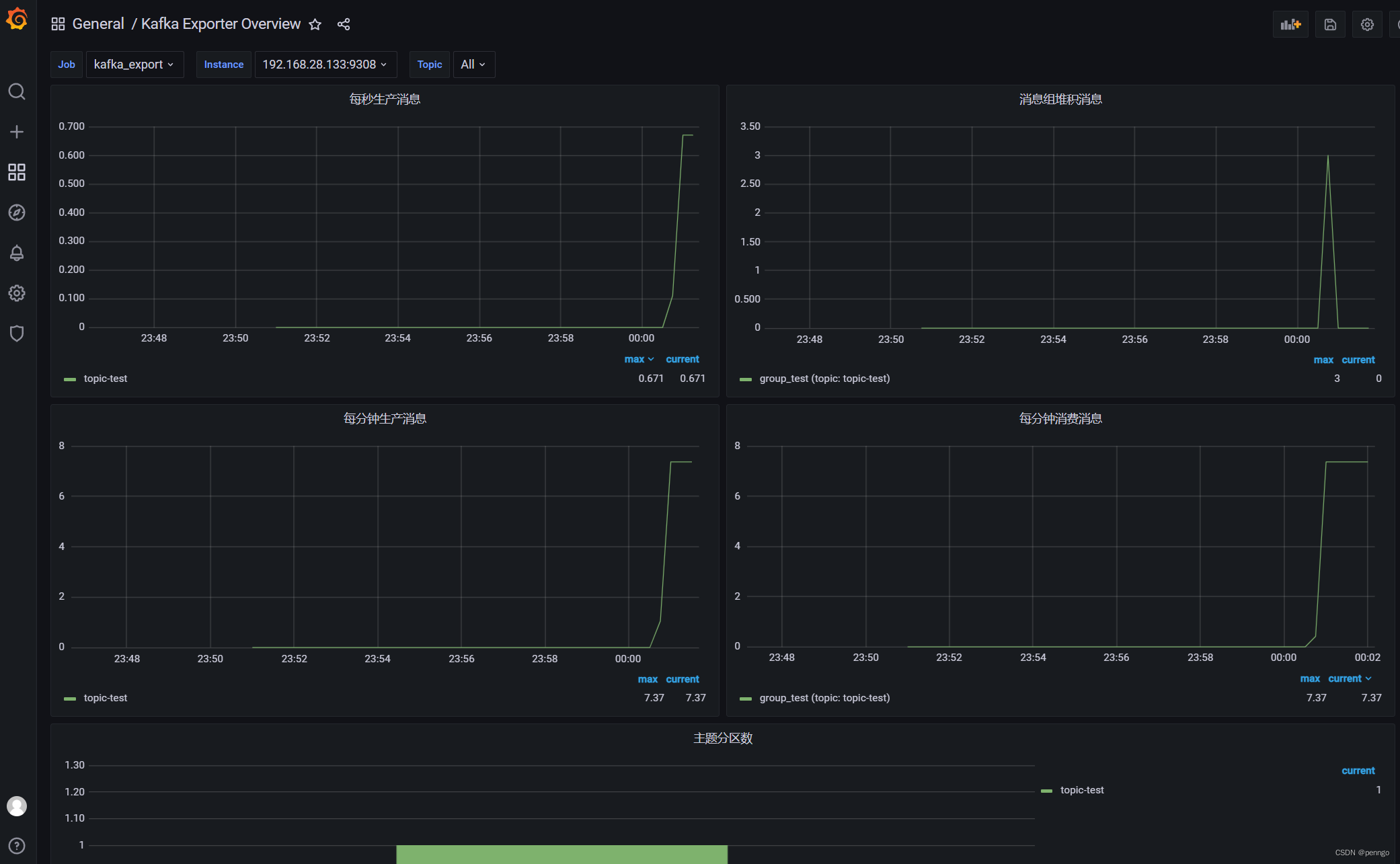

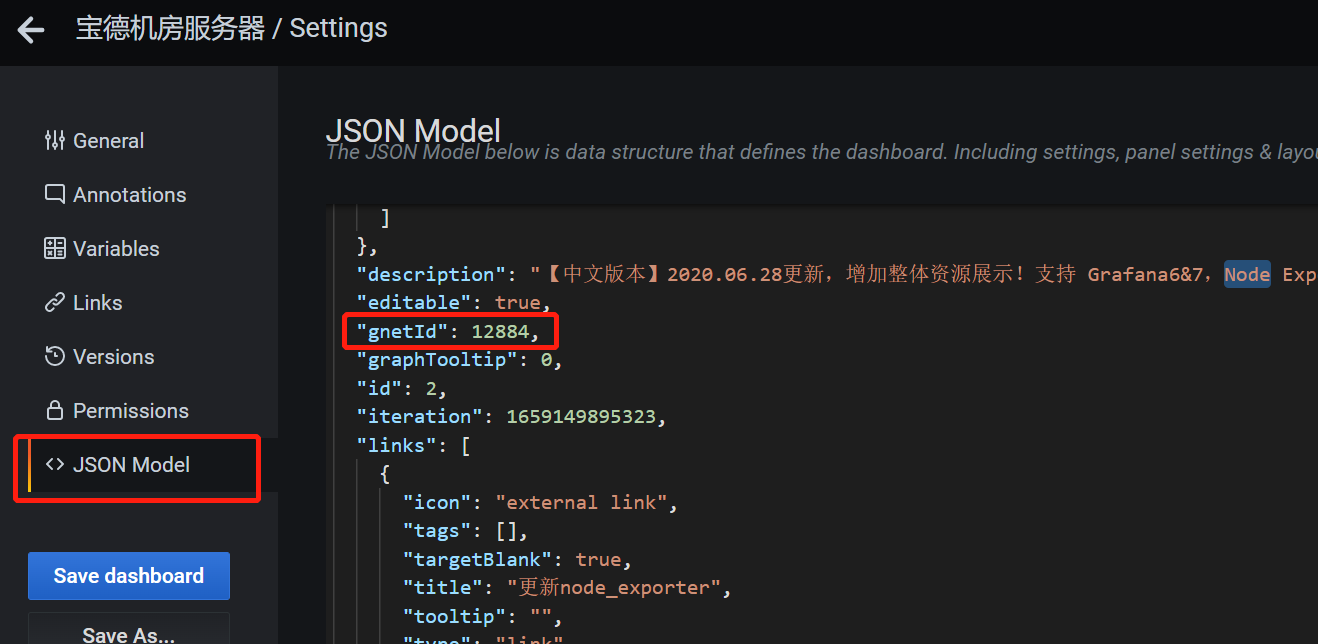

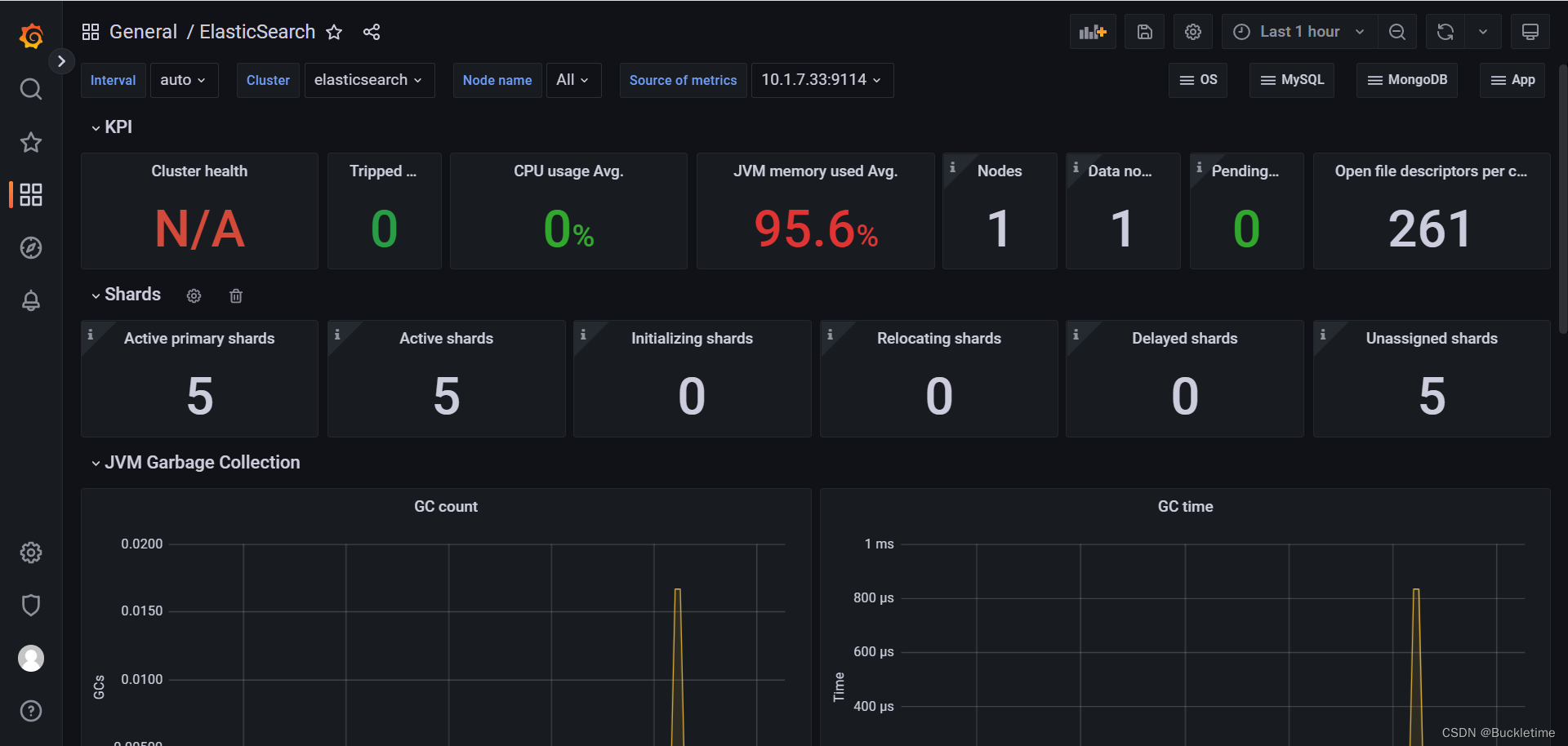

4. Integrating with Grafana

Import the dashboard template: https://grafana.com/grafana/dashboards/7589