DenseFusion: 6D Object Pose Estimation by Iterative Dense Fusion(CVPR2019)

原文:https://ieeexplore.ieee.org/document/8953386

代码和视频:https://sites.google.com/view/densefusion/

Github:https://github.com/j96w/DenseFusion/tree/Pytorch-1.0

Abstract: A key technical challenge in performing 6D object pose estimation from RGB-D image is to fully leverage the two complementary data sources. Prior works either extract information from the RGB image and depth separately or use costly post-processing steps, limiting their performances in highly cluttered scenes and real-time applications. In this

work, we present DenseFusion, a generic framework for estimating 6D pose of a set of known objects from RGB-D images. DenseFusion is a heterogeneous architecture that processes the two data sources individually and uses a novel dense fusion network to extract pixel-wise dense feature embedding, from which the pose is estimated. Furthermore, we integrate an end-to-end iterative pose refinement procedure that further improves the pose estimation while achieving near real-time inference. Our experiments show that our method outperforms state-of-the-art approaches in two datasets, YCB-Video and LineMOD. We also deploy our proposed method to a real robot to grasp and manipulate objects based on the estimated pose. Our code and video are available at https://sites.google.com/view/densefusion/.

1、文章提出了DenseFusion,从RGB-D图像中估计一组已知物体的6D姿态。

2、DenseFusion分别处理两个数据源,并使用一种新的dense fusion网络来提取像素级密集特征嵌入,从中估计姿态。

3、集成了一个端到端迭代姿态细化过程,该过程进一步改进了姿态估计,同时实现了接近实时的推断。

4、实验表明,该方法在YCB视频和LineMOD两个数据集上优于最先进的方法。

5、将提出的方法部署到一个真实的机器人上,以基于估计的姿势来抓取和操纵物体。

1. Introduction

6D object pose estimation is the crux to many important real-world applications, such as robotic grasping and manipulation [7, 35, 44], autonomous navigation [6, 11, 42], and augmented reality [19, 20]. Ideally, a solution should deal with objects of varying shape and texture, show robustness towards heavy occlusion, sensor noise, and changing lighting conditions, while achieving the speed requirement of real-time tasks. The advent of cheap RGB-D sensors has enabled methods that infer poses of low-textured objects even in poorly-lighted environments more accurately

than RGB-only methods. Nonetheless, it is difficult for existing methods to satisfy the requirements of accurate pose estimation and fast inference simultaneously.

6D物体姿态估计是许多重要现实世界应用的关键,如机器人抓取和操纵[7,35,44]、自主导航[6,11,42]和增强现实[19,20]。理想情况下,解决方案应处理不同形状和纹理的对象,对严重遮挡、传感器噪声和不断变化的照明条件表现出鲁棒性,同时实现实时任务的速度要求。廉价RGB-D传感器的出现使得即使在光线较差的环境中,也能比仅使用RGB的方法更准确地推断低纹理物体的姿态。然而,现有的方法很难同时满足精确姿态估计和快速推断的要求。

Classical approachesfirst extract features from RGB-D data and perform correspondence grouping and hypothesis verification [3, 12, 13, 15, 26, 33, 38]. However, the reliance on handcrafted features and fixed matching procedures have limited their empirical performances in presence of heavy occlusion and lighting variation. Recent success in visual recognition has inspired a family of datadriven methods that use deep networks for pose estimation from RGB-D inputs, such as PoseCNN [41] and MCN [16].

经典方法首先从RGB-D数据中提取特征,并执行对应分组和假设验证[3,12,13,15,26,33,38]。然而,在存在严重遮挡和光照变化的情况下,对手工特征和固定匹配程序的依赖限制了它们的经验性能。最近在视觉识别方面的成功启发了一系列数据驱动方法,这些方法使用深度网络从RGB-D输入进行姿态估计,例如PoseCNN[41]和MCN[16]。

However, these methods require elaborate post-hoc refinement steps to fully utilize the 3D information, such as a highly customized Iterative Closest Point (ICP) [2] procedure in PoseCNN and a multi-view hypothesis verification scheme in MCN. These refinement steps cannot be optimized jointly with the final objective and are prohibitively slow for real-time applications. In the context of autonomous driving, a third family of solutions has been proposed to better exploit the complementary nature of color and depth information from RGB-D data with end-to-end deep models, such as Frustrum PointNet [23] and PointFusion [42]. These models have achieved good performances in driving scenes and the capacity of real-time inference. However, as we demonstrate empirically, these methods fall short under heavy occlusion, which is common in manipulation domains.

然而,这些方法需要精细的事后细化步骤来充分利用3D信息,例如PoseCNN中高度定制的Iterative Closest Point(ICP) [2]程序和MCN中的多视图假设验证方案。这些细化步骤不能结合最终目标进行优化,而且对于实时应用程序来说速度非常慢。在自动驾驶的背景下,已经提出了第三系列的解决方案,以更好地利用RGB-D数据中的颜色和深度信息与端到端深度模型的互补性,如Frustrum PointNet[23]和PointFusion[42]。这些模型在驾驶场景和实时推理能力方面取得了良好的性能。然而,正如我们根据经验所证明的,这些方法在严重遮挡的情况下是不够的,该情况在操纵领域中是常见的。

In this work, we propose an end-to-end deep learning approach for estimating 6-DoF poses of known objects from RGB-D inputs. The core of our approach is to embed and fuse RGB values and point clouds at a perpixel level, as opposed to prior work which uses image crops to compute global features [42] or 2D bounding boxes [23]. This perpixel fusion scheme enables our model to explicitly reason about the local appearance and geometry information, which is essential to handle heavy occlusion. Furthermore, we propose an iterative method which performs pose refinement within the end-to-end learning framework. This greatly enhances model performance while keeping inference speed real-time.

在这项工作中,我们提出了一种端到端深度学习方法,用于从RGB-D输入估计已知对象的6-DoF姿态。我们方法的核心是在每个像素级别嵌入和融合RGB值和点云,而不是使用图像裁剪来计算全局特征[42]或2D边界框[23]的现有工作。这种逐像素融合方案使我们的模型能够明确地推理局部外观和几何信息,这对于处理严重遮挡至关重要。 此外,我们提出了一种迭代方法,该方法在端到端学习框架内执行姿势细化。 这大大提高了模型性能,同时保持了推理速度的实时性。

We evaluate our method in two popular benchmarks for 6D pose estimation, YCB-Video [41] and LineMOD [12]. We show that our method outperforms the state-of-the-art PoseCNN after ICP refinement [41] by 3.5% in pose accuracy while being 200x faster in inference time. In particular, we demonstrate its robustness in highly cluttered scenes thanks to our novel dense fusion method. Last, we also showcase its utility in a real robot task, where the robot estimates the poses of objects and grasp them to clear up a

table.

我们在两个流行的6D姿态估计基准中评估了我们的方法,YCB视频[41]和LineMOD[12]。我们表明,我们的方法在ICP细化后[41]的姿态精度比最先进的PoseCNN高3.5%,同时推理时间快200倍。特别是,由于我们新颖的密度融合方法,我们证明了它在高度杂乱场景中的鲁棒性。最后,我们还展示了它在真实机器人任务中的实用性,机器人可以估计物体的姿势,并抓住它们以清理桌子。

In summary, the contributions of this work are two-fold:First, we present a principled way to combine color and depth information from the RGB-D input. We augment the information of each 3D point with 2D information from an embedding space learned for the task and use this new color-depth space to estimate the 6D pose. Second, we integrate an iterative refinement procedure within the neural network architecture, removing the dependency of previous methods of a post-processing ICP step.

总之,这项工作的贡献有两个方面:首先,提出了一种结合RGB-D输入的颜色和深度信息的基础方法。使用嵌入空间(从任务中学习得到)的2D信息来增强每个3D点的信息,并使用这个新的颜色深度空间来估计6D姿态。其次,我们在神经网络架构中集成了迭代细化过程,消除了后处理ICP步骤之前方法的依赖性。

2. Related Work

Pose from RGB images.

Classical methods rely on detect-ing and matching keypoints with known object models [1, 7,9, 27, 44]. Newer methods address the challenge by learning to predict the 2D keypoints [3, 22, 32, 34, 35] and solve the poses by PnP [10]. Though prevail in speed-demanding tasks, these methods become unreliable given low-texture or low-resolution inputs. Other methods propose to directly estimate objects pose from images using CNN-based architectures [28, 36]. Many such methods focus on orientation estimation: Xiang et al. [39, 40] learns a viewpoint-aware pose estimator by clustering 3D features from object models. Mousavian et al. [21] predicts 3D object parameters and recovers poses by single-view geometry constraints. Sundermeyer et al. [31] implicitly encode orientation in a latent space and in test timefind the best match in a codebook as the orientation prediction. However, pose estimation in 3D remains a challenge for the lack of depth information. Our method leverages both image and 3D data to estimate object poses in 3D in an end-to-end architecture.

经典方法依赖于检测关键点并将其与已知对象模型匹配[1,7,9,27,44]。较新的方法通过学习预测2D关键点[3,22,32,34,35]并通过PnP[10]求解位姿来解决这一挑战。尽管这些方法在速度要求较高的任务中占优势,但在低纹理或低分辨率输入的情况下,这些方法变得不可靠。其他方法提出使用基于CNN的架构直接从图像中估计物体姿态[28,36]。许多这样的方法专注于方向估计:Xiang等人[39,40]通过从对象模型中聚类3D特征来学习视点感知姿态估计器。Mousavian等人[21]预测3D对象参数,并通过单视图几何约束恢复姿态。Sundermeyer等人[31]隐式编码潜在空间中的方向,并在测试时间中找到码本中的最佳匹配作为方向预测。然而,由于缺乏深度信息,3D中的姿态估计仍然是一个挑战。我们的方法利用图像和3D数据在端到端架构中以3D方式估计对象姿态。

Pose from depth / point cloud.

Recent studies have proposed to directly tackle the 3D object detection problem in discretized 3D voxel spaces. For example, Song et al. [29,

30] generate 3D bounding box proposals and estimate the poses by featuring the voxelized input with 3D ConvNets. Although the voxel representation effectively encodes geometric information, these methods are often prohibitively expensive: [30] takes nearly 20 seconds for each frame.

最近的研究提出直接解决离散化三维体素空间中的三维物体检测问题。例如,Song等人[29,30]通过使用3D ConvNets对体素化输入进行特征化,生成3D边界框建议并估计姿势。尽管体素表示法有效地编码几何信息,但这些方法往往代价高昂:[30]每帧花费近20秒。

More recent 3D deep learning architectures have enabled methods that directly performs 6D pose estimation on 3D point cloud data. As an example, both Frustrum PointNets [23] and VoxelNet [43] use a PointNet-like [24] structure and achieved state-of-the-art performances on the KITTI benchmark [11]. Our method also makes use of similar architecture. However, unlike urban driving applications for which point cloud alone provides enough information, generic object pose estimation tasks such as the YCB-Video dataset [41] demands reasoning over both geometric and appearance information. We address such a challenge by proposing a novel 2D-3D sensor fusion architecture.

更新的3D深度学习架构已经启用了直接对3D点云数据执行6D姿态估计的方法。例如,Frustrum PointNets[23]和VoxelNet[43]都使用类似PointNet[24]的结构,并在KITTI基准测试[11]上实现了最先进的性能。我们的方法也使用了类似的架构。然而,与城市驾驶应用程序(仅点云就为其提供了足够的信息)不同,YCB-Video数据集等通用对象姿态估计任务[41]需要对几何和外观信息进行推理。我们通过提出一种新颖的2D-3D传感器融合架构来解决这一挑战。

Pose from RGB-D data.

Classical approaches extract 3D features from the input RGB-D data and perform correspondence grouping and hypothesis verification [3, 12, 13,

15, 26, 33, 38]. However, these features are either hardcoded [12, 13, 26] or learned by optimizing surrogate objectives [3, 33, 38] such as reconstruction [15] instead of the true objective of 6D pose estimation. Newer methods such as PoseCNN [41] directly estimates 6D poses from image data. Li et al. [16] further fuses the depth input as an additional channel to a CNN-based architecture. However, these approaches rely on expensive post-processing steps to make full use of 3D input. In comparison, our method fuses3D data to 2D appearance feature while retaining the geometric structure of the input space, and we show that it out-

performs [41] on the YCB-Video dataset [41] without the post-processing step.

经典方法从输入RGB-D数据中提取3D特征,并执行对应分组和假设验证[3,12,13,15,26,33,38]。然而,这些特征要么是硬编码的[12,13,26],要么是通过优化替代目标[3,33,38](如重建[15])来学习的,而不是6D姿态估计的真实目标。PoseCNN[41]等较新的方法直接从图像数据中估计6D姿态。Li等人[16]进一步将深度输入融合为基于CNN的架构的附加通道。然而,这些方法依赖于代价高昂的后续处理步骤来充分利用3D输入。相比之下,我们的方法将3D数据融合到2D外观特征,同时保留输入空间的几何结构,并且我们表明,在没有后处理步骤的情况下,它在YCB视频数据集[41]上的表现优于[41]。

Our method is most related to PointFusion [42], in which geometric and appearance information are fused in a heterogeneous architecture. We show that our novel local feature fusion scheme significantly outperforms PointFusion’s naive fusion-by-concatenation method. In addition, we use

a novel iterative refinement method to further improve the pose estimation.

我们的方法与PointFusion最相关[42],其中几何和外观信息在异构架构中融合。我们表明,我们的新局部特征融合方案显著优于PointFusion的级联方法的原始融合。此外,我们使用一种新的迭代细化方法来进一步改进姿态估计。

3. Model

Our goal is to estimate the 6D pose of a set of known objects present in an RGB-D image of a cluttered scene. Without loss of generality, we represent 6D poses as a homogeneous transformation matrix, p ∈ S E ( 3 ) p∈ SE(3) p∈SE(3). In other words, a 6D pose is composed by a rotation R ∈ S O ( 3 ) R∈ SO(3) R∈SO(3) and a translation t ∈ R 3 t∈ R^3 t∈R3, p = [ R ∣ t ] p = [R|t] p=[R∣t]. Since we estimate the 6D pose of the objects from camera images, the poses are defined with respect to the camera coordinate frame.

我们的目标是估计杂乱场景的RGB-D图像中存在的一组已知对象的6D姿态。在不丧失一般性的情况下,将6D姿态表示为齐次变换矩阵, p ∈ S E ( 3 ) p∈ SE(3) p∈SE(3)。换句话说,6D姿态由旋转 R ∈ S O ( 3 ) R∈ SO(3) R∈SO(3)和位移 t ∈ R 3 t∈ R^3 t∈R3表示, p = [ R ∣ t ] p = [R|t] p=[R∣t]。因为我们从相机图像中估计物体6D姿态,因此姿态相对于相机坐标系定义。

Estimating the pose of a known object in adversarial conditions (e.g. heavy occlusion, poor lighting, . . . ) is only possible by combining the information contained in the color and depth image channels. However, the two data

sources reside in different spaces. Extracting features from heterogeneous data sources and fusing them appropriately is the key technical challenge in this domain.

只有通过组合颜色和深度图像通道中包含的信息,才能估计已知物体在不利条件下的姿态(例如,严重遮挡、较差照明等)。但是,这两个数据源位于不同的空间,从异构数据源中提取特征并适当地融合它们是该领域的关键技术挑战。

We address this challenge with (1) a heterogeneous architecture that processes color and depth information differently, retaining the native structure of each data source (Sec. 3.3), and (2) a dense pixel-wise fusion network that performs color-depth fusion by exploiting the intrinsic mapping between the data sources (Sec. 3.4). Finally, the pose estimation is further refined with a differentiable iterative refinement module (Sec. 3.6). In contrast to the expensive post-hoc refinement steps used in [16, 41], our refinement module can be trained jointly with the main architecture and only takes a small fraction of the total inference time.

我们通过**(1)异构架构来解决这一挑战,该架构分别地处理颜色和深度信息,保留每个数据源的本地结构(第3.3节),以及(2)密集像素融合网络**,通过利用数据源之间的固有映射来执行颜色深度融合(第3.4节)。最后,使用可微迭代细化模块进一步细化姿态估计(第3.6节)。与[16,41]中使用的代价高昂的事后细化步骤相比,我们的细化模块可以与主架构联合训练,只需要总推理时间的一小部分。

图2. 6D姿态估计模型概述。 我们的模型从RGB图像生成对象分割遮罩和边界框。来自深度图的RGB颜色和点云被编码为嵌入,并在每个对应的像素处融合。姿势预测器为每个像素生成姿势估计,并且对预测进行投票以生成对象的最终6D姿势预测。(为了简单起见,这里没有描述我们方法的迭代过程)

3.1. Architecture Overview

Fig. 2 illustrates the overall proposed architecture. The architecture contains two main stages. Thefirst stage takes color image as input and performs semantic segmentation for each known object category. Then, for each segmented object, we feed the masked depth pixels (converted to 3D point cloud) as well as an image patch cropped by the bounding box of the mask to the second stage.

图2说明了所提出的总体架构。该体系结构包含两个主要阶段。第一阶段以彩色图像作为输入,并对每个已知对象类别执行语义分割。然后,对于每个分割的对象,我们将遮罩的深度像素(转换为3D点云)以及由遮罩的边界框裁剪的图像块输入到第二阶段。

The second stage processes the results of the segmentation and estimates the object’s 6D pose. It comprises four components: a) a fully convolutional network that processes the color information and maps each pixel in the image crop to a color feature embedding, b) a PointNet-based [24] network that processes each point in the masked 3D point cloud to a geometric feature embedding, c) a pixel-wise fusion network that combines both embeddings and outputs the estimation of the 6D pose of the object based on an unsupervised confidence scoring, and d) an iterative self-refinement

methodology to train the network in a curriculum learning manner and refine the estimation result iteratively. Fig. 2 depicts a), b) and c) and Fig. 3 illustrates d). The details our architecture are described below.

第二阶段处理分割的结果并估计对象的6D姿态。它包括四个部分:a)一个全卷积神经网络,处理颜色信息并将图像裁剪中的每个像素映射到颜色特征嵌入,b)基于PointNet的[24]网络,其将遮罩的3D点云中的每个点处理为几何特征嵌入,c)逐像素融合网络,其组合两种嵌入并基于无监督置信度评分输出物体的6D姿态估计,以及d)迭代自细化方法,以课程学习方式训练网络并迭代地细化估计结果。图2描述了a)、b)和c),图3描述了d)。我们的架构细节如下所述。

图3.迭代姿态优化。我们引入了一个网络模块,该模块在迭代过程中改进姿态估计。

3.2. Semantic Segmentation

Thefirst step is to segment the objects of interest in the image. Our semantic segmentation network is an encoder-decoder architecture that takes an image as input and generates an N +1-channelled semantic segmentation map. Each channel is a binary mask where active pixels depict objects of each of the N possible known classes. The focus of this

work is to develop a pose estimation algorithm. Thus we use an existing segmentation architecture proposed by [41].

第一步是分割图像中感兴趣的对象。本文的语义分割网络是一个编码器-解码器架构,它将图像作为输入并生成N+1个通道的语义分割图。每个通道是一个二元掩膜,其中活跃的像素描述N个可能的已知类中的每一个的对象。本工作的重点是开发一种姿态估计算法。因此,我们使用[41]提出的现有分割架构。

3.3. Dense Feature Extraction

The key technical challenge in this domain is the correct extraction of information from the color and depth channels and their synergistic fusion. Even though color and depth present a similar format in the RGB-D frame, their information resides in different spaces. Therefore, we process them separately to generate color and geometric features from embedding spaces that retain the intrinsic structure of the data sources.

该部分的关键技术挑战是从颜色和深度通道中正确提取信息及其协同融合。尽管颜色和深度在RGB-D帧中呈现出相似的格式,但它们的信息存在于不同的空间中。因此,我们分别对它们进行处理,以从保留数据源固有结构的embedding space中生成颜色和几何特征。

Dense 3D point cloud feature embedding: Previous approaches have used CNN to process the depth image as an additional image channel [16]. However, such method neglects the intrinsic 3D structure of the depth channel. Instead, wefirst convert the segmented depth pixels into a 3D point cloud using the known camera intrinsics, and then use a PointNet-like architecture to extract geometric features.

密集的3D点云特征嵌入: 以前的方法使用CNN处理深度图像作为附加图像通道[16]。然而,这种方法忽略了深度通道的固有3D结构。相反,我们首先使用已知的相机内部特性将分割的深度像素转换为3D点云,然后使用类似PointNet的架构来提取几何特征。

PointNet by Qi et al. [24] pioneered the use of a symmetric function (max-pooling) to achieve permutation invariance in processing unordered point sets. The original architecture takes as input a raw point cloud and learns to encode the information about the vicinity of each point and of the point cloud as a whole. The features are shown to be effective in shape classification and segmentation [24] and pose estimation [23, 42]. We propose a geometric embedding network that generates a dense per-point feature by mapping each of the P segmented points to a dgeo-dimensional

feature space. We implement a variant of PointNet architecture that uses average-pooling as opposed to the commonly used max-pooling as the symmetric reduction function.

Qi等人的PointNet[24]开创了使用对称函数(最大池)在处理无序点集时实现置换不变性的先河。原始体系结构将原始点云作为输入,并学习编码关于每个点的附近和整个点云的信息。这些特征被证明在形状分类和分割[24]和姿态估计[23,42]中是有效的。我们提出了一种几何嵌入网络,该网络通过将每个分割点P映射到二维特征空间来生成密集的逐点特征。我们实现了PointNet架构的一个变体,它使用平均池,而不是通常使用的最大池作为对称还原函数。

Dense color image feature embedding: The goal of the color embedding network is to extract per-pixel features such that we can form dense correspondences between 3D point features and image features. The reason for forming these dense correspondences will be clear in the next

section. The image embedding network is a CNN-based encoder-decoder architecture that maps an image of size H × W × 3 H × W × 3 H×W×3 into a H × W × d r g b H × W × d_{rgb} H×W×drgb embedding space. Each pixel of the embedding is a d r g b d_{rgb} drgb-dimensional vector representing the appearance information of the input image at the corresponding location.

密集的彩色图像特征嵌入: 彩色嵌入网络的目标是提取每个像素的特征,以便我们可以在3D点特征和图像特征之间形成密集的对应关系。形成这些密集对应关系的原因将在下一节中明确。图像嵌入网络是基于CNN的编码器-解码器架构,其将大小为 H × W × 3 H × W × 3 H×W×3的图像转换为 H × W × d r g b H × W × d_{rgb} H×W×drgb的embedding space。embedding层的每个像素都是 d r g b d_{rgb} drgb维向量,表示输入图像在相应位置处的外观信息。

3.4. Pixel-wise Dense Fusion

So far we have obtained dense features from both the image and the 3D point cloud inputs; now we need to fuse the information. A naive approach would be to generate a global feature from the dense color and depth features from the segmented area. However, due to heavy occlusion and segmentation errors, the set of features from the previous step may contain features of points/pixels on other objects or parts of the background. Therefore, blindly fusing color and geometric features globally would degrade theperformance of the estimation. In the following we describe a novel pixel-wise1 dense fusion network that effectively combines the extracted features, especially for pose estimation under heavy occlusion and imperfect segmentation.

到目前为止,我们已经从图像和3D点云输入中获得了密集特征;现在我们需要融合信息。一种简单的方法是从分割区域的密集颜色和深度特征生成全局特征。然而,由于严重的遮挡和分割错误,前一步骤中的一组特征可能包含其他对象或背景部分上的点/像素的特征。因此,盲目地全局融合颜色和几何特征会降低估计的性能。在下文中,我们描述了一种新的像素密集融合网络,该网络有效地结合了提取的特征,尤其是在严重遮挡和不完全分割的情况下进行姿态估计。

Pixel-wise dense fusion: The key idea of our dense fusion network is to perform local per-pixel fusion instead of global fusion so that we can make predictions based on each fused feature. In this way, we can potentially select the predictions based on the visible part of the object and minimize the effects of occlusion and segmentation noise.

Pixel-wise密集融合: 密集融合网络的关键思想是 执行逐像素局部融合,而不是全局融合,以便我们可以基于每个融合特征进行预测。通过这种方式,我们可以潜在地基于对象的可见部分选择预测,并最小化遮挡和分割噪声的影响。

Concretely, our dense fusion procedure first associates the geometric feature of each point to its corresponding image feature pixel based on a projection onto the image plane using the known camera intrinsic parameters. The obtained pairs of features are then concatenated and fed to another network to generate a fixed-size global feature vector using a symmetric reduction function. While we refrained from using a single global feature for the estimation, here we enrich each dense pixel-feature with the global densely-fused feature to provide a global context.

具体而言,我们的密集融合过程首先基于使用已知相机固有参数在图像平面上的投影,将每个点的几何特征与其对应的图像特征像素相关联。然后,将所获得的特征对串联并导入到另一个网络,以使用对称缩减函数生成固定大小的全局特征向量。虽然我们避免使用单个全局特征进行估计,但这里我们使用全局密集融合特征来丰富每个密集像素特征,以提供全局上下文。

We feed each of the resulting per-pixel features into a final network that predicts the object’s 6D pose. In other words, we will train this network to predict one pose from each densely-fused feature. The result is a set of P predicted poses, one per feature. This defines ourfirst learning objective, as we will see in Sec. 3.5. We will now explain our approach to learning to choose the best prediction in a self-supervised manner, inspired by Xu et al. [42]

我们将得到的每个像素特征输入到预测对象6D姿态的最终网络中。换句话说,我们将训练这个网络,从每个密集融合的特征中预测一个姿势。结果是一组P个预测的姿势,每个特征一个。这定义了我们的第一个学习目标,我们将在第3.5节中看到。我们现在将解释我们的学习方法,以自我监督的方式选择最佳预测,灵感来自Xu等人[42] 。

Per-pixel self-supervised confidence: We would like to train our pose estimation network to decide which pose estimation is likely to be the best hypothesis based on the specific context. To do so, we modify the network to output a confidence score c i c_i ci for each prediction in addition to the pose estimation predictions. We will have to reflect this second learning objective in the overall learning objective, as we will see at the end of the next section.

**Per-pixel自监督置信度:**我们希望训练我们的姿势估计网络,以根据特定的背景来决定哪个姿势估计可能是最佳假设。为此,除了姿势估计预测之外,我们修改网络以输出每个预测的置信分数 c i c_i ci。我们必须在总体学习目标中反映第二个学习目标,我们将在下一节末尾看到。

3.5. 6D Object Pose Estimation

Having defined the overall network structure, we now take a closer look at the learning objective. We define the pose estimation loss as the distance between the points sampled on the objects model in ground truth pose and corresponding points on the same model transformed by the predicted pose. Specifically, the loss to minimize for the prediction per dense-pixel is defined as

定义了整个网络结构,现在我们更仔细地研究学习目标。我们将姿态估计损失定义为ground truth中物体模型上采样的点与由预测姿态变换的同一模型上的对应点之间的距离。 具体而言,每个密集像素的预测损失最小化定义为

L i p = 1 M ∑ j ∣ ∣ ( R x j + t ) − ( R ^ i x j + t ^ i ) ∣ ∣ ( 1 ) L_i^p=\frac{1}{M}\sum_j||(Rx_j+t)-(\hat R_ix_j+\hat t_i)|| \quad (1) Lip=M1j∑∣∣(Rxj+t)−(R^ixj+t^i)∣∣(1)

where x j x_j xj denotes the j t h j^{th} jth point of the M M M randomly selected 3D points from the object’s 3D model, p = [ R ∣ t ] p = [R|t] p=[R∣t] is the ground truth pose, and p ^ i = [ R ^ i ∣ t ^ i ] \hat p_i = [\hat R_i|\hat t_i] p^i=[R^i∣t^i] is the predicted pose generated from the fused embedding of the i t h i^{th} ith dense-pixel.

其中, x j x_j xj表示从对象的3D模型中随机选择的 M M M个3D点中的第 j j j个点, p = [ R ∣ t ] p=[R|t] p=[R∣t]是地面真实姿态,而 p ^ i = [ R ^ i ∣ t ^ i ] \hat p_i = [\hat R_i|\hat t_i] p^i=[R^i∣t^i]是从第 i i i个密集像素的融合嵌入生成的预测姿态。

The above loss function is only well-defined for asymmetric objects, where the object shape and/or texture determines a unique canonical frame. Symmetric objects have more than one and possibly an infinite number of canonical frames, which leads to ambiguous learning objectives.Therefore, for symmetric objects, we instead minimize the distance between each point on the estimated model orientation and the closest point on the ground truth model. The loss function becomes:

上述损失函数仅适用于不对称对象,其中对象形状和/或纹理决定了唯一的规范框架。对称对象具有不止一个并且可能有无限多个规范框架,这导致了模糊的学习目标。因此,对于对称对象,我们反而将估计模型方向上的每个点与地面真实模型上的最近点之间的距离最小化。损失函数变为:

L i p = 1 M ∑ j m i n 0 < k < M ∣ ∣ ( R x j + t ) − ( R ^ i x k + t ^ i ) ∣ ∣ ( 2 ) L_i^p=\frac{1}{M}\sum_j \underset {0<k<M}{min}||(Rx_j+t)-(\hat R_ix_k+\hat t_i)||\quad (2) Lip=M1j∑0<k<Mmin∣∣(Rxj+t)−(R^ixk+t^i)∣∣(2)

Optimizing over all predicted per dense-pixel poses would be to minimize the mean of the per dense-pixel losses: L = 1 N ∑ i L i p L = \frac{1}{N}\sum_i L^p_i L=N1∑iLip. However, as explained before, we would like our network to learn to balance the confidence among the per dense-pixel predictions. To do that we weight the per dense-pixel loss with the dense-pixel confidence, and add a second confidence regularization term:

优化所有预测的每密集像素姿态将使每密集像素损失的平均值最小化: L = 1 N ∑ i L i p L = \frac{1}{N}\sum_i L^p_i L=N1∑iLip。然而,如前所述,我们希望我们的网络学会平衡每密集像素预测之间的置信度。为此,我们使用密集像素置信度对每密集像素损失进行加权,并添加第二个置信度正则化项:

L = 1 N ∑ i ( L i p c i − ω l o g ( c i ) ) ( 3 ) L = \frac{1}{N}\sum_i (L_i^pc_i-\omega log(c_i))\quad (3) L=N1i∑(Lipci−ωlog(ci))(3)

where N N N is the number of randomly sampled dense-pixel features from the P P P elements of the segment and w is a balancing hyperparameter.Intuitively, low confidence will result in low pose estimation loss but would incur high penalty from the second term, and vice versa. We use the pose estimation that has the highest confidence as the final output.

其中 N N N是来自分割的 P P P个元素的随机采样的密集像素特征的数量, ω \omega ω是平衡超参数。直观地说,低置信度将导致低姿态估计损失,但从第二项开始将招致高惩罚,反之亦然。我们使用具有最高置信度的姿态估计作为最终输出。

3.6. Iterative Refinement

The iterative closest point algorithm (ICP) [2] is a powerful refinement approach used by many 6D pose estimation methods [14, 31, 41]. However, the best-performing ICP implementations are often not efficient enough for realtime applications. Other refinement methods including[17,18] assume the access to a real-time rendering engine and object models with highquality textures. Here we propose a neural network-based iterative refinement module based on our densely-fused embedding that can improve thefinal pose estimation result in a fast and robust manner without

additional rendering techniques.

迭代最近点算法(ICP)[2]是许多6D姿态估计方法使用的一种强大的细化方法[14,31,41]。然而,性能最好的ICP实现通常对于实时应用程序来说效率不够。包括[17,18]在内的其他细化方法假定可以访问实时渲染引擎和具有高质量纹理的对象模型。在这里,我们提出了基于神经网络的迭代细化模块,该模块基于密集融合嵌入,可以在无需额外渲染技术的情况下以快速和鲁棒的方式改进最终姿态估计结果。

The goal is to enable the network to correct its own pose estimation error in an iterative manner. The challenge here is training the network to refine the previous prediction as opposed to making new predictions. To do so, we must include the prediction made in the previous iteration as part of the input to the next iteration. Our key idea is to consider the previously predicted pose as an estimate of canonical frame of the target object and transform the input point cloud into this estimated canonical frame. This way, the transformed point cloud implicitly encodes the estimated pose. We then feed the transformed point cloud back into the network and predict a residual pose based on the previously estimated pose. This procedure can be applied iteratively and generate potentiallyfiner pose estimation each iteration.

目标是使网络能够以迭代方式校正其自身的姿态估计误差。这里的挑战是训练网络来改进先前的预测,而不是做出新的预测。为此,我们必须将上一次迭代中所做的预测作为下一次迭代输入的一部分。我们的关键思想是将先前预测的姿态作为目标对象的规范框架的估计,并将输入点云转换为该估计的规范框架。 这样,变换后的点云隐式编码估计的姿势。然后,我们将变换后的点云反馈到网络中,并基于先前估计的姿态预测残差姿态。该过程可以迭代应用,并在每次迭代时生成潜在的内部姿态估计。

The procedure is illustrated in Fig. 3. Concretely, we train a dedicated pose residual estimator network to perform the refinement given the initial pose estimation from the main network. At each iteration, we reuse the image feature embedding from the main network and perform dense fusion with the geometric features computed for the new transformed point cloud. The pose residual estimator uses as input a global feature from the set of fused pixel features. After K iterations, we obtain thefinal pose estimation as the concatenation of the per-iteration estimations:

该过程如图3所示。具体而言,我们训练了一个专用的姿态残差估计器网络,以在给定来自主网络的初始姿态估计的情况下执行细化。在每次迭代中,我们重用来自主网络的图像特征嵌入,并与为新变换的点云计算的几何特征进行密集融合。姿态残差估计器使用来自融合像素特征集合的全局特征作为输入。在K次迭代之后,我们获得最终姿态估计,作为每次迭代估计的串联:

p ^ = [ R K ∣ t K ] ⋅ [ R K − 1 ∣ t K − 1 ] … [ R 0 ∣ t 0 ] \hat p=[R_K|t_K] \cdot [R_{K-1}|t_{K-1}] \dots [R_0|t_0] p^=[RK∣tK]⋅[RK−1∣tK−1]…[R0∣t0]

In our experiments, only after K = 2 iterations, the refined pose is already able to surpass the ICP-refined results of prior works. The pose residual estimator can be trained jointly with the main network. However, the pose estimation at the beginning of the training is too noisy for it to learn anything meaningful. Thus in practice, the joint training starts after the main network has converged.

在我们的实验中,只有在K=2次迭代之后,改进的姿态才能够超过先前工作的ICP改进结果。姿态残差估计器可以与主网络联合训练。然而,训练开始时的姿势估计过于嘈杂,以至于它无法学习任何有意义的东西。因此,在实践中,联合训练在主网络汇聚之后开始。

4. Experiments

In the experimental section, we would like to answer the following questions: (1) How does the dense fusion network compare to naive global fusion-by-concatenation? (2)Is the dense fusion and prediction scheme robust to heavy occlusion and segmentation errors? (3) Does the iterative

refinement module improve thefinal pose estimation? (4)Is our method robust and efficient enough for downstream tasks such as robotic grasping?

在实验部分,我们想回答以下问题:(1)密集融合网络与通过级联实现的原始全局融合相比如何?(2) 密集融合和预测方案对严重的遮挡和分割错误是否鲁棒?(3) 迭代细化模块是否改进了最终姿态估计?(4) 我们的方法对于机器人抓取等下游任务是否足够稳健和有效?

To answer thefirst three questions, we evaluate our method on two challenging 6D object pose estimation datasets: YCB-Video Dataset [41] and LineMOD [12]. The YCB-Video Dataset features objects of varying shapes and texture levels under different occlusion conditions. Hence it’s an ideal testbed for our occlusion-resilient multi-modal fusion method. The LineMOD dataset is a widely-used dataset that allows us to compare with a broader range of existing methods. We compare our method with state-of-the-art methods [14, 31, 42] as well as model variants. To answer the last question, we deploy our model to a real robot platform and evaluate the performance of a robot grasping task that uses the predictions from our model.

为了回答前三个问题,我们在两个具有挑战性的6D物体姿态估计数据集上评估了我们的方法:YCB视频数据集[41]和LineMOD[12]。YCB视频数据集以不同遮挡条件下不同形状和纹理级别的对象为特征。因此,它是我们咬合弹性多模态融合方法的理想试验台。LineMOD数据集是一个广泛使用的数据集,允许我们与更广泛的现有方法进行比较。我们将我们的方法与最先进的方法[14,31,42]以及模型变体进行了比较。为了回答最后一个问题,我们将我们的模型部署到一个真实的机器人平台上,并使用我们模型中的预测来评估机器人抓取任务的性能。

4.1. Datasets

YCB-Video Dataset. The YCB-Video Dataset Xiang et al. [41] features 21 YCB objects Calli et al. [5] of varying shape and texture. The dataset contains 92 RGB-D videos, where each video shows a subset of the 21 objects in different indoor scenes. The videos are annotated with 6D poses and segmentation masks. We follow prior work [41] and split the dataset into 80 videos for training and 2,949 key frames chosen from the rest 12 videos for testing and include the same 80,000 synthetic images released by [41] in our training set. In our experiments, we compare with the result of [41] after depth refinement(ICP) and learning-based depth method [42].

YCB视频数据集。YCB视频数据集Xiang等人的[41]具有21个YCB对象,Calli等人的[5]具有不同的形状和纹理。数据集包含92个RGB-D视频,其中每个视频显示不同室内场景中21个对象的子集。视频用6D姿势和分割遮罩进行注释。我们遵循先前的工作[41],将数据集分成80个视频用于训练,从其余12个视频中选择2949个关键帧进行测试,并将[41]发布的80000张合成图像包含在我们的训练集中。在我们的实验中,我们将深度细化(ICP)和基于学习的深度方法[42]后的结果与[41]进行了比较。

LineMOD Dataset. The LineMOD dataset Hinterstoisser et al. [12] consists of 13 low-textured objects in 13 videos. It is widely adopted by both classical methods [4, 8, 37] and recent learning-based approaches [17, 31, 34]. We use the same training and testing set as prior learning-based works [17, 25, 34] without additional synthetic data and compare with the best ICP-refined results of the state-of-the-art algorithms.

LineMOD数据集。LineMOD数据集Hinterstoisser等人[12]由13个视频中的13个低纹理对象组成。它被经典方法[4,8,37]和最近基于学习的方法[17,31,34]广泛采用。我们使用与先前基于学习的工作相同的训练和测试集[17,25,34],无需额外的合成数据,并与现有算法的最佳ICP精炼结果进行比较。

4.2. Metrics

We use two metrics to report on the YCB-Video Dataset. The average closest point distance (ADD-S) [41] is an ambiguity-invariant pose error metric which takes care of both symmetric and non-symmetric objects into an over-all evaluation. Given the estimated pose [ R ^ ∣ t ^ ] [\hat R|\hat t] [R^∣t^] and ground truth pose [ R ∣ t ] [R|t] [R∣t], ADD-S calculates the mean distance from each 3D model point transformed by [ R ^ ∣ t ^ ] [\hat R|\hat t] [R^∣t^] to its closest neighbour on the target model transformed by [ R ∣ t ] [R|t] [R∣t]. We report the area under the ADD-S curve (AUC) following PoseCNN [41]. We follow prior work and set the maximum threshold of AUC to be 0.1m. We also report the percent-age of ADD-S smaller than 2cm (<2cm), which measures the predictions under the minimum tolerance for robot ma-nipulation (2cm for most of the robot grippers).

我们使用两个指标来报告YCB视频数据集。平均最近点距离(ADD-S)[41]是一个模糊不变的姿态误差度量,它在整体评估中同时考虑对称和非对称对象。给定估计的姿态 [ R ^ ∣ t ^ ] [\hat R|\hat t] [R^∣t^] 和地面真实姿态 [ R ∣ t ] [R|t] [R∣t] ,ADD-S计算从通过 [ R ^ ∣ t ^ ] [\hat R|\hat t] [R^∣t^] 变换的每个3D模型点到通过 [ R ∣ t ] [R|t] [R∣t] 变换的目标模型上其最近邻的平均距离。我们报告了PoseCNN后ADD-S曲线下的面积(AUC)[41]。我们遵循先前的工作,将AUC的最大阈值设置为0.1m。我们还报告了小于2cm(<2cm)的ADD-S的百分比,该百分比测量了机器人在最小公差下的操作(大多数机器人夹具为2cm)。

For the LineMOD dataset, we use the Average Distance of Model Points (ADD) [13] for non-symmetric objects and ADD-S for the two symmetric objects (eggbox and glue) following prior works [13, 31, 34].

对于LineMOD数据集,我们根据先前的工作[13,31,34],对非对称对象使用模型点平均距离(ADD)[13],对两个对称对象(鸡蛋盒和胶水)使用ADD-S。

4.3. Implementation Details

The image embedding network consists of a Resnet-18 encoder followed by 4 up-sampling layers as the decoder. The PointNet architecture is an MLP followed by an average-pooling reduction function. Both color and geometric dense feature embedding are of dimension 128. We choose ω = 0.01 \omega = 0.01 ω=0.01 for Eq. 3 by empirical evaluation. The iterative pose refinement module consists of a 4 fully connected layers that directly output the pose residual from the global dense feature. We use the 2 refinement iterations for all experiments.

图像嵌入网络由Resnet-18编码器和作为解码器的4个上采样层组成。PointNet架构是一个MLP,后跟一个平均池减少功能。颜色和几何密集特征嵌入的维数都是128。我们通过经验评估为等式3选择 ω = 0.01 \omega = 0.01 ω=0.01。迭代姿态细化模块由4个完全连接的层组成,这些层直接输出来自全局密集特征的姿态残差。我们对所有实验使用2次优化迭代。

4.4. Architectures

We compare four model variants that showcase the effectiveness of our design choices.

• PointFusion [42] uses a CNN to extract afixed-size feature vector and fuse by directly concatenating the image feature with the geometry feature. The rest of the network is similar to our architecture. The comparison to this baseline demonstrates the effectiveness of our dense fusion network.

• Ours (single) uses our dense fusion network, but instead of performing perpoint prediction, it only outputs a single prediction using the global feature vector.

• Ours (per-pixel) performs per-pixel prediction based on each densely fused feature.

• Ours (iterative) is our complete model that uses the iterative refinement (Sec. 3.6) on top of Ours (per-pixel).

我们比较了四种模型变体,它们展示了我们设计选择的有效性。

• PointFusion[42]使用CNN提取固定大小的特征向量,并通过将图像特征与几何特征直接连接来融合。网络的其余部分与我们的架构类似。与该基线的比较表明了我们密集融合网络的有效性。

• Ours (single) 使用我们的密集融合网络,但它只使用全局特征向量输出单个预测,而不是执行逐点预测。

• Ours (per-pixel) 基于每个密集融合特征执行逐像素预测。

• Ours (iterative) 是我们的完整模型,它在Ours (per-pixel) 之上使用迭代细化(第3.6节)。

4.5. Evaluation on YCB-Video Dataset

Table 1 shows the evaluation results for all the 21 objects in the YCB-Video Dataset. We report the ADD-S AUC(<0.1m) and the ADD-S<2cm metrics on

PoseCNN [41] and our four model variants. To ensure a fair comparison, all methods use the same segmentation masks as in PoseCNN [41]. Among our model variants, Ours (Iterative) achieves the best performance. Our method is able to outperform PoseCNN + ICP[41] even without iterative refinement. In particular, Ours (Iterative) outperforms PoseCNN + ICP by 3.5% on the ADD-S<2cm metric.

表1显示了YCB视频数据集中所有21个对象的评估结果。我们在PoseCNN[41]和我们的四个模型变体上报告了ADD-S AUC(<0.1m)和ADD-S<2cm度量。为了确保公平比较,所有方法都使用与PoseCNN中相同的分割掩码[41]。在我们的模型变体中,Ours (iterative) 实现了最佳性能。即使没有迭代细化,我们的方法也能够超过PoseCNN+ICP[41]。特别是,在ADD-S<2cm度量上,Ours(Iterative)的表现优于PoseCNN+ICP 3.5%。

Effect of dense fusion Both of our dense fusion baselines (Ours (single) and Ours (per-pixel)) outperform PointFusion by a large margin, which shows that dense fusion has a clear advantage over the global fusion-by-concatenation method used in PointFusion.

密度融合的效果 我们的密度融合baseline (Ours (single)和Ours (per-pixel)) 都大大优于PointFusion,这表明密度融合比PointFusion中使用的串联方法进行的全局融合具有明显优势。

Effect of iterative refinement Table 1 shows that our iterative refinement improves the overall pose estimation performance. In particular, it significantly improves the performances for texture-less symmetric object, e.g., bowl (29%), banana (6%), and extra large clamp (6%) which suffer from orientation ambiguity.

迭代细化的效果 表1表明,我们的迭代细化提高了整体姿态估计性能。特别是,它显著提高了纹理不对称对象的性能,例如碗(29%)、香蕉(6%)和特大夹具(6%),它们受到方向模糊的影响。

Robustness towards occlusion The main advantage of our dense fusion method is its robustness towards occlusions. To quantify the effect of occlusion on thefinal performance, we calculate the visible surface ratio of each object instance. Then we calculate how the accuracy (ADD-S<2cm percentage) changes with the extent of occlusion. As shown in Fig. 5, the performances of PointFusion and PoseCNN+ICP degrade significantly as the occlusion increases. In contrast, none of our methods experiences notable performance drop. In particular, the performance of both Ours (per-pixel) and Ours (iterative) only decrease by 2% overall.

对遮挡的鲁棒性 我们的密集融合方法的主要优点是对遮挡的鲁棒性。为了量化遮挡对最终性能的影响,我们计算每个对象实例的可见表面比率。然后我们计算准确度(ADD-S<2cm百分比)如何随遮挡程度而变化。如图5所示,随着遮挡的增加,PointFusion和PoseCNN+ICP的性能显著降低。相比之下,我们的方法都没有显著的性能下降。特别是,Ours (per-pixel) 和 Ours (iterative) 的性能总体仅下降2%

Time efficiency We compare the time efficiency of our model with PoseCNN+ICP in Table 2. We can see that our method is two order of magnitude faster than PoseCNN+ICP. In particular, PoseCNN+ICP spends most of the time on the post processing ICP. In contrast, all of our computation component, namely segmentation (Seg), pose estimation (PE), and iterative refinement (Refine), are equally efficient, and the overall runtime is fast enough for real-time application (16 FPS, about 5 objects in each frame).

时间效率 我们将我们的模型的时间效率与表2中的PoseCNN+ICP进行了比较。我们可以看到,我们的方法比PoseCNN+ICP快两个数量级。特别是,PoseCNN+ICP将大部分时间用于后处理ICP。相比之下,我们的所有计算组件,即分割(Seg)、姿态估计(PE)和迭代细化(Refine),都同样有效,而且对于实时应用(16 FPS,每帧约5个对象)的整体运行时间足够快。

Qualitative evaluation Fig. 4 visualizes some sample predictions made by PoseCNN+ICP, PointFusion, and our iterative refinement model. As we can see, PoseCNN+ICP and PointFusion fail to estimate the correct pose of the bowl in the leftmost column and the cracker box in the middle column due to heavy occlusion, whereas our method remains robust. Another challenging case is the clamp in the middle row due to poor segmentation (not shown in thefigure). Our approach localizes the clamp from only the visible part of the object and effectively reduces the dependency on accurate segmentation result.

定性评估 图4可视化了PoseCNN+ICP、PointFusion和我们的迭代细化模型所做的一些样本预测。如我们所见,由于严重遮挡,PoseCN+ICP和PointFusion无法估计最左侧列中碗和中间列中饼干盒的正确姿势,而我们的方法仍然鲁棒。另一个具有挑战性的情况是,由于分割不良(图中未显示),中间一行出现夹钳。我们的方法仅从对象的可见部分定位夹具,并有效地减少了对精确分割结果的依赖。

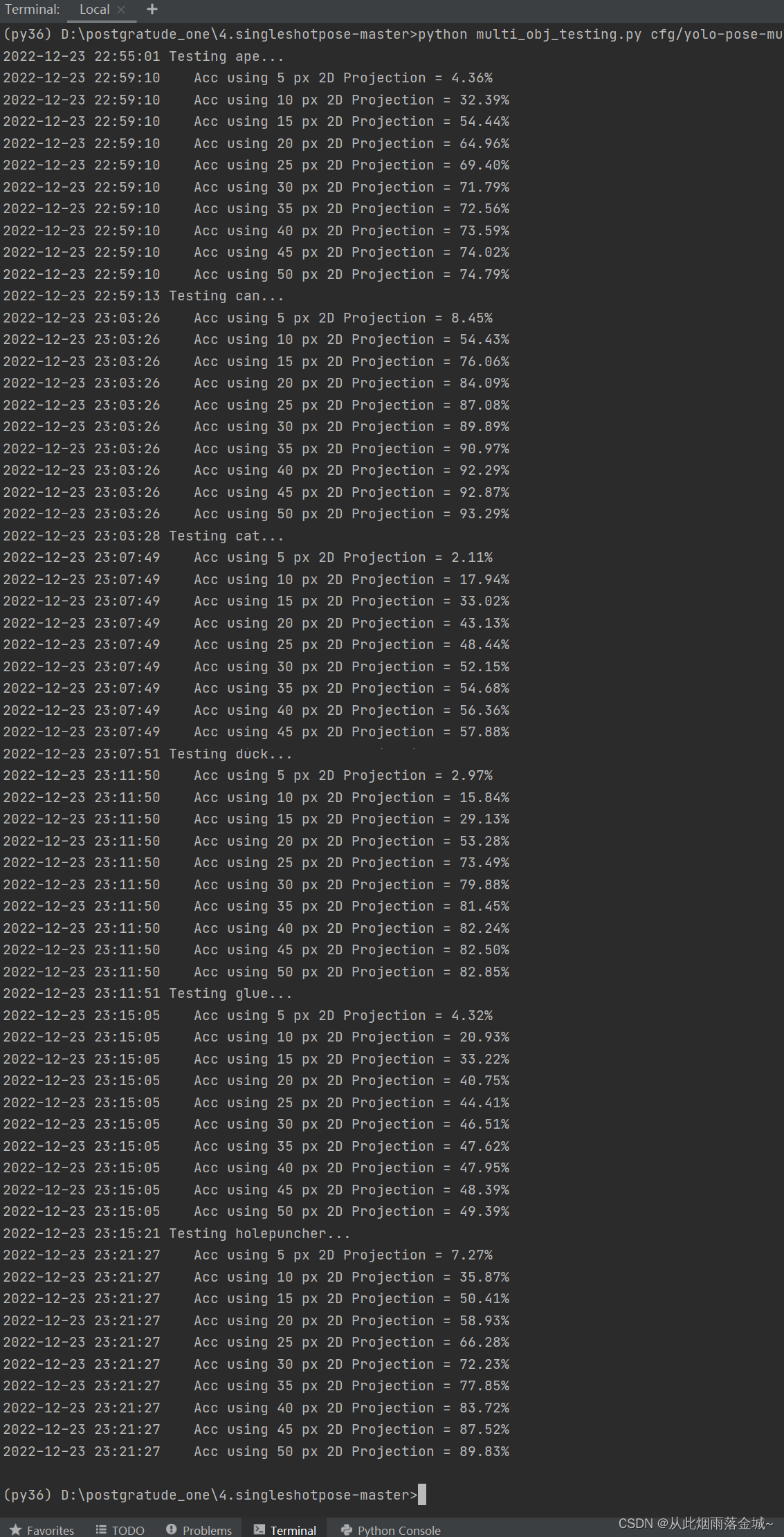

4.6. Evaluation on LineMOD Dataset

Table 3 compares our method with previous RGB methods with depth refinement(ICP) (results from [31, 34]) on the ADD metric [13]. Even without the iterative refinement step, our method can outperform 7% over the state-of-the-art depth refinement method. After processing the iterative refinement approach, thefinal result has another 8% improvement, which proves that our learning-based depth method is superior to the sophisticated application of ICP in both accuracy and efficiency. We visualize the estimated 6D pose after each refinement iteration in Fig. 6, where our pose estimation improves by an average of 0.8 cm (ADD) after 2 refinement iterations. The results of some other color-only methods are also listed in Table 3 for reference.

表3将我们的方法与先前的基于ADD度量的深度细化(ICP)RGB方法(来自[31,34]的结果)进行了比较[13]。即使没有迭代细化步骤,我们的方法也可以比最先进的深度细化方法表现出7%。在处理迭代细化方法后,最终结果又有8%的改进,这证明我们基于学习的深度方法在准确性和效率方面都优于ICP的复杂应用。我们在图6中可视化了每个细化迭代后估计的6D姿态,其中我们的姿态估计在2次细化迭代后平均提高了0.8 cm(ADD)。表3中还列出了一些其他纯彩色方法的结果,以供参考。

4.7. Robotic Grasping Experiment

In our last experiment, we evaluate whether the poses estimated by our approach are accurate enough to enable robot grasping and manipulation. As shown in Fig. 1, we place 5 YCB objects on a table and command the robot to grasp them using the estimated pose. We follow a similar procedure to Tremblay et al. [35]: we place thefive objects in four different random locations on the table, at three random orientations, including configurations with partial occlusions. Since the order of picking the objects is not optimized, we do not allow configurations where objects lay on top of each other. The robot attempts 12 grasps on each object, 60 attempts in total. The robot uses the estimated object orientation to compute an

alignment of the gripper’s fingers to the object narrower dimension.

在我们的上一个实验中,我们评估了我们的方法所估计的姿态是否足够精确,以使机器人抓取和操纵。如图1所示,我们将5个YCB对象放在桌子上,并命令机器人使用估计的姿势抓住它们。我们遵循与Tremblay等人相似的程序。[35]:我们将五个物体放置在桌子上的四个不同的随机位置,以三个随机方向,包括具有部分闭塞的配置。由于拾取对象的顺序没有优化,因此我们不允许对象重叠的配置。机器人尝试12次抓住每个物体,总共60次。机器人使用估计的物体方向来计算抓取器手指与物体较窄尺寸的对齐。

The robot succeeds on 73% of the grasps using our proposed approach to estimate the pose of the objects. The most difficult object to grasp is the banana (7 out of 12 successful attempts). One possible reason is that our banana model is not exactly the same as in the dataset– ours is plain yellow. This characteristic hinders the estimation, especially of the orientation, and leads to some failed grasp attempts along the longer axis of the object. In spite of this less accurate case, our results indicate that our approach is robust enough to be deployed in real-world robotic tasks

without explicit domain adaptation, even with a different RGB-D sensor and in a different background than the ones in the training data.

机器人使用我们提出的方法来估计物体的姿态,成功完成了73%的抓取。最难抓住的物体是香蕉(12次成功尝试中有7次)。一个可能的原因是,我们的香蕉模型与数据集中的模型不完全相同——我们的模型是纯黄色的。这一特性阻碍了估计,尤其是方向的估计,并导致沿物体长轴的一些抓取尝试失败。尽管这种情况不太准确,但我们的结果表明,即使使用不同的RGB-D传感器,在与训练数据中的背景不同的背景下,我们的方法也足够稳健,可以部署在现实世界的机器人任务中,而无需显式的域自适应。

5. Conclusion

We presented a novel approach to estimating 6D poses of known objects from RGB-D images. Our approach fuses a dense representation of features that include color and depth information based on the confidence of their predictions. With this dense fusion approach, our method outperforms previous approaches in several datasets, and is significantly more robust against occlusions. Additionally, we demonstrated that a robot can use our proposed approach to grasp and manipulate objects.

我们提出了一种从RGB-D图像估计已知物体6D姿态的新方法。我们的方法融合了密集的特征表示,这些特征包括基于其预测的置信度的颜色和深度信息。使用这种密集融合方法,我们的方法在多个数据集中优于先前的方法,并且对遮挡具有显著更强的鲁棒性。此外,我们证明了机器人可以使用我们提出的方法来抓取和操纵物体。