The target page is Top Wallpapers - wallhaven.cc (https://wallhaven.cc/toplist).

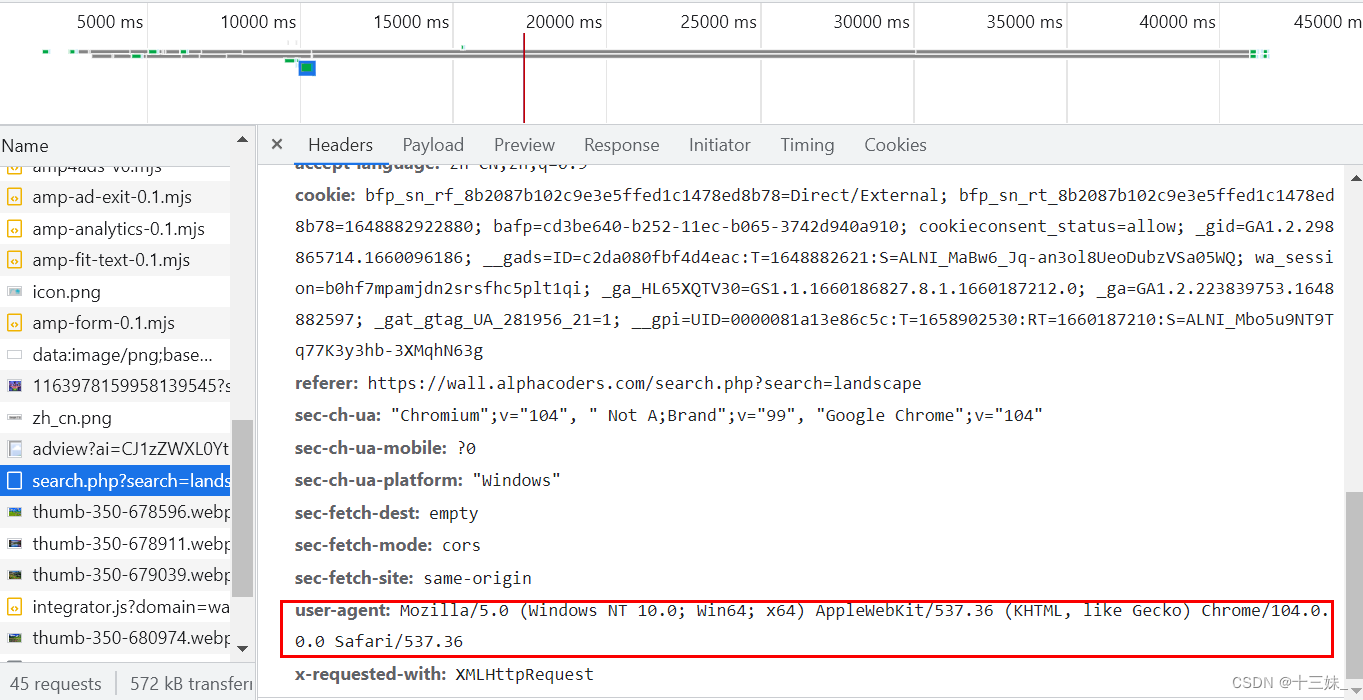

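Press F12 to open the browser's developer tools, switch to the Network tab, and refresh the page. Then select the first entry in the Name column that starts with toplist?page=, open its Headers tab, and find the user-agent request header.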
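To illustrate what this header changes, here is a minimal sketch that prepares a request with a browser-like user-agent and compares it with the default one that requests would send. Nothing goes over the network; the request is only built. The names `BROWSER_UA` and `build_request` are hypothetical helpers introduced for this illustration.

```python
import requests

# Browser-like user-agent copied from the developer tools
BROWSER_UA = (
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36'
)


def build_request(page, user_agent=BROWSER_UA):
    """Build (but do not send) a GET request for one toplist page."""
    url = f'https://wallhaven.cc/toplist?page={page}'
    req = requests.Request('GET', url, headers={'user-agent': user_agent})
    return req.prepare()


if __name__ == '__main__':
    prepared = build_request(1)
    # The default identifies the client as python-requests/<version>
    print('default:', requests.utils.default_user_agent())
    # The prepared request carries the browser-like value instead
    print('custom: ', prepared.headers['user-agent'])
    print('url:    ', prepared.url)
```

Header lookup on the prepared request is case-insensitive, so `user-agent` and `User-Agent` refer to the same entry.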

The headers are as follows:

    headers = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36'
    }

Now run the crawler to save the wallpapers (they are saved to a Wallhaven folder inside the current directory).
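As a rough sketch of how a save path for each wallpaper could be built, the helper below combines the resolution text with the file name taken from the image URL. It is an alternative to slicing a fixed number of characters off the end of the URL, not the article's own method. The function name `build_save_path`, the `'1920 x 1080'` resolution format, and the example URL are assumptions made for illustration.

```python
import os
from urllib.parse import urlparse


def build_save_path(pic_size, final_url, folder='Wallhaven'):
    """Return a path like Wallhaven/1920x1080_<file name from URL>."""
    # Assumed input format: '1920 x 1080' becomes '1920x1080'
    size_part = pic_size.replace(' ', '')
    # Use the last path segment of the URL; any query string is ignored
    file_part = os.path.basename(urlparse(final_url).path)
    return os.path.join(folder, f'{size_part}_{file_part}')


if __name__ == '__main__':
    # Placeholder URL, not a real wallpaper address
    print(build_save_path('1920 x 1080',
                          'https://example.com/full/ab/wallhaven-abc123.jpg'))
```

Using `os.path.join` keeps the path separator correct on Windows, macOS, and Linux alike.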

The scraped results are as follows:

The complete code is as follows:

    # Import the required libraries
    import os

    import requests
    from lxml import etree

    headers = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36'
    }


    def get_html_info(page):
        # Fetch one page of the toplist and parse it into an lxml element tree
        url = f'https://wallhaven.cc/toplist?page={page}'
        resp = requests.get(url, headers=headers)
        resp_html = etree.HTML(resp.text)
        return resp_html


    def get_pic(resp_html):
        # Collect the detail-page link of every thumbnail on the list page
        pic_url_list = []
        lis = resp_html.xpath('//*[@id="thumbs"]/section[1]/ul/li')
        for li in lis:
            pic_url = li.xpath('./figure/a/@href')[0]
            pic_url_list.append(pic_url)

        # Create the output folder if it does not exist yet
        if not os.path.exists('Wallhaven'):
            os.mkdir('Wallhaven')

        for pic_url in pic_url_list:
            # Open the detail page to read the resolution and the full-size image URL
            resp2 = requests.get(pic_url, headers=headers)
            r_html2 = etree.HTML(resp2.text)
            pic_size = r_html2.xpath('//*[@id="showcase-sidebar"]/div/div[1]/h3/text()')[0]
            final_url = r_html2.xpath('//*[@id="wallpaper"]/@src')[0]
            pic = requests.get(url=final_url, headers=headers).content

            # File name: resolution text plus the last 10 characters of the image URL
            file_name = pic_size + final_url[-10:]
            with open(os.path.join('Wallhaven', file_name), mode='wb') as f:
                f.write(pic)  # write the image bytes to disk
            print(file_name + ', download finished, {} wallpapers downloaded so far'.format(len(os.listdir('Wallhaven'))))


    def main():
        page_range = range(1, 10)  # scrape pages 1-9 (adjust the range to change how many pages are scraped)
        for i in page_range:
            r = get_html_info(i)
            get_pic(r)
            print(f'===============Page {i} downloaded=============')


    if __name__ == '__main__':
        main()
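To make the XPath step easier to follow, here is a small sketch that runs the same list-page expressions against an inline HTML fragment instead of the live site. The fragment `SAMPLE_LIST_PAGE` is invented to mirror the structure the XPath implies, the example.com links are placeholders, and `extract_detail_links` is a hypothetical helper name. Unlike the code above, it skips list items that have no link rather than raising an IndexError.

```python
from lxml import etree

# Invented fragment that mimics the assumed layout of the thumbnail list
SAMPLE_LIST_PAGE = '''
<html><body>
<div id="thumbs"><section><ul>
  <li><figure><a href="https://example.com/w/aaa111"></a></figure></li>
  <li><figure></figure></li>
  <li><figure><a href="https://example.com/w/bbb222"></a></figure></li>
</ul></section></div>
</body></html>
'''


def extract_detail_links(html_text):
    """Return the detail-page link of every thumbnail that has one."""
    tree = etree.HTML(html_text)
    links = []
    for li in tree.xpath('//*[@id="thumbs"]/section[1]/ul/li'):
        hrefs = li.xpath('./figure/a/@href')
        if hrefs:  # skip items without a link
            links.append(hrefs[0])
    return links


if __name__ == '__main__':
    for link in extract_detail_links(SAMPLE_LIST_PAGE):
        print(link)
```

Testing the expressions on a saved or hand-written fragment like this makes it quicker to notice when the site's markup changes and the XPath stops matching.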