基于第一部分在单帧中将点云投影到图像的基础上,现将上述代码改写为ros实时处理的版本。以后还可以在此基础上进行一步一步的扩展。将原先的离线代码改写为ros在线版本,需要将原本的代码写成package的形式,并配置好相应的依赖包、环境等,处理好数据接口。

1.程序包结构

本部分对数据处理较为简单,整个包仅包括两个c++文件,project.cpp和project.h文件,另外还有一个配置文件initial_params.txt,存放在cfg文件夹下。配置文件的内容如下,可根据需求修改:

/kitti/camera_gray_left/image_raw //节点接收的图片话题

/kitti/velo/pointcloud //点云话题

9.999239e-01 9.837760e-03 -7.445048e-03 0.0

-9.869795e-03 9.999421e-01 -4.278459e-03 0.0

7.402527e-03 4.351614e-03 9.999631e-01 0.0

0 0 0 1 //相机与相机外参矩阵

7.215377e+02 0.000000e+00 6.095593e+02 0.000000e+00

0.000000e+00 7.215377e+02 1.728540e+02 0.000000e+00

0.000000e+00 0.000000e+00 1.000000e+00 0.000000e+00 //相机内参矩阵

7.533745e-03 -9.999714e-01 -6.166020e-04 -4.069766e-03

1.480249e-02 7.280733e-04 -9.998902e-01 -7.631618e-02

9.998621e-01 7.523790e-03 1.480755e-02 -2.717806e-01

0 0 0 1 //相机与激光雷达之间的外参矩阵

此处应用的标定参数是kitti数据集的标定参数,最后是将点云投影到左侧灰度摄像头图像平面上。关于标定参数的介绍参照之前的博客。

2.数据接口

接收数据

利用 message_filters类定义了图像和点云数据的接受者,并利用时间同步的函数 message_filters::Synchronizer 对两者进行时间同步过滤,只有当点云和图像的时间戳相同才会被接收进行处理。

message_filters::Subscriber<sensor_msgs::Image> image_sub(nh, i_params.camera_topic, 5);message_filters::Subscriber<sensor_msgs::PointCloud2> pcl_sub(nh,i_params.lidar_topic, 5);typedef message_filters::sync_policies::ApproximateTime<sensor_msgs::Image, sensor_msgs::PointCloud2> MySyncPolicy;message_filters::Synchronizer<MySyncPolicy> sync(MySyncPolicy(5), image_sub, pcl_sub);

处理数据

接收到数据后调用回调函数projector::projection_callback进行投影处理

处理流程和离线是一样的,只是将数据输入与输出和ros连接起来了。最后投影的结果转换为图片数据利用ros节点发送出去。

sync.registerCallback(boost::bind(&projector::projection_callback, this, _1, _2));

projector::projection_callback

void projector::projection_callback(const sensor_msgs::Image::ConstPtr &img, const sensor_msgs::PointCloud2::ConstPtr &pc){cv_bridge::CvImagePtr cv_ptr;try {cv_ptr = cv_bridge::toCvCopy(img, "bgr8");}catch (cv_bridge::Exception &e) {return;}cv::Mat raw_img = cv_ptr->image;pcl::PointCloud<pcl::PointXYZI>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZI>);pcl::fromROSMsg(*pc, *cloud);cv::Mat visImg = raw_img.clone();cv::Mat overlay = visImg.clone();std::cout<<"get pc and image data"<<std::endl;cv::Mat X(4,1,cv::DataType<double>::type);cv::Mat Y(3,1,cv::DataType<double>::type);for(pcl::PointCloud<pcl::PointXYZI>::const_iterator it = cloud->points.begin(); it != cloud->points.end(); it++){if(it->x > maxX || it->x < 0.0 || abs(it->y) > maxY || it->z < minZ ) {continue;}X.at<double>(0,0) = it->x;X.at<double>(1,0) = it->y;X.at<double>(2,0) = it->z;X.at<double>(3,0) = 1;Y = i_params.cameraIn * i_params.camtocam_mat * i_params.RT * X; //tranform the point to the camera coordinatecv::Point pt;pt.x = Y.at<double>(0,0) / Y.at<double>(0,2);pt.y = Y.at<double>(1,0) / Y.at<double>(0,2);float val = it->x;float maxVal = 20.0;int red = std::min(255, (int)(255 * abs((val - maxVal) / maxVal)));int green = std::min(255, (int)(255 * (1 - abs((val - maxVal) / maxVal))));cv::circle(overlay, pt, 1, cv::Scalar(0, green, red), -1);}// Publish the image projectionros::Time time = ros::Time::now();cv_ptr->encoding = "bgr8";cv_ptr->header.stamp = time;cv_ptr->header.frame_id = "/camera_main";cv_ptr->image = overlay;image_publisher.publish(cv_ptr->toImageMsg());std::cout<<"project picture is published!"<<std::endl;}发送数据

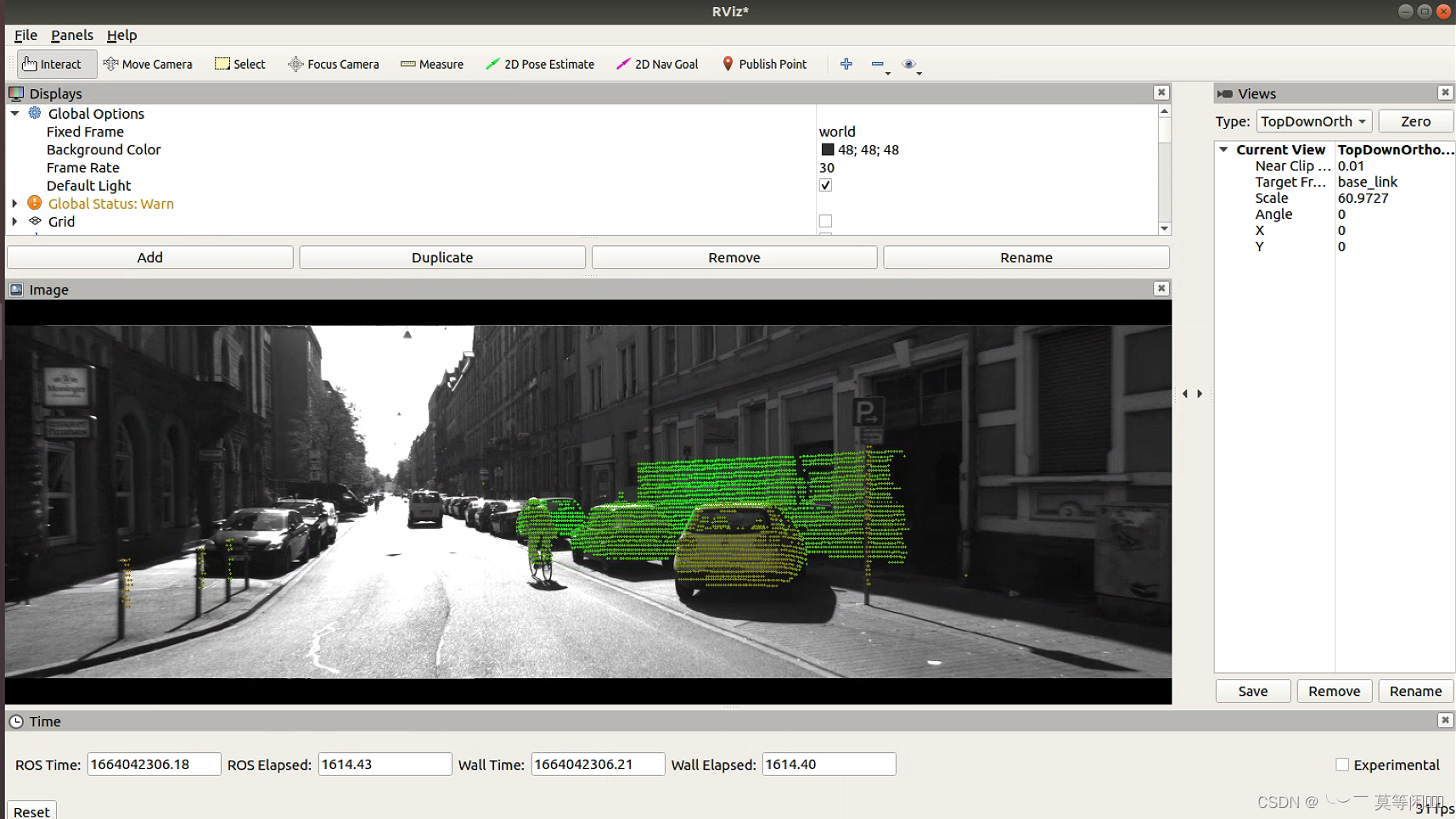

最后会将投影后的图像以话题名为“/project_pc_image”的话题发出,可以通过rviz观察到。

image_transport::ImageTransport imageTransport(nh);image_publisher = imageTransport.advertise("/project_pc_image", 20);

...........image_publisher.publish(cv_ptr->toImageMsg());3.参数初始化函数

除了数据处理,还有config的参数需要读取,利用了projector::initParams()对配置文件中的配置参数进行读取

projector::initParams()

void projector::initParams(){std::string pkg_loc = ros::package::getPath("ros_detection_tracking");std::ifstream infile(pkg_loc + "/cfg/initial_params.txt");infile >> i_params.camera_topic;infile >> i_params.lidar_topic;//std::cout<<i_params.camera_topic<<std::endl;double_t camtocam[12];double_t cameraIn[16];double_t RT[16];for (int i = 0; i < 16; i++){infile >> camtocam[i];}cv::Mat(4, 4, 6, &camtocam).copyTo(i_params.camtocam_mat);//cameratocamera paramsfor (int i = 0; i < 12; i++){infile >> cameraIn[i];}cv::Mat(4, 4, 6, &cameraIn).copyTo(i_params.cameraIn);//cameraIn paramsfor (int i = 0; i < 16; i++){infile >> RT[i];}cv::Mat(4, 4, 6, &RT).copyTo(i_params.RT);//lidar to camera params//std::cout<<i_params.RT<<std::endl;}

运行效果

运行步骤:

1.建立工作空间

2.cd 到src目录下

3.git clone代码包(我传的代码包可能克隆下来会有一级多余的目录ros_project_pc_to_image,需要把package从里面拿出来放到src目录下)

4.在工作空间目录 catkin_make

5.source devel/setup.bash

7.roscore

8.rosrun ros_detection_tracking projector

代码下载地址:https://github.com/jhzhang19/ros_project_pc_to_image

使用kitti的任意检测类的标准.bag包都可以运行。此处提供一个验证数据包,下载地址链接: 链接:https://pan.baidu.com/s/1CYtAS4kcy3N2KEzbg0mPhQ

提取码:jvo3