Preface

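The previous post showed how k8s uses traefik to proxy in-cluster services, exposing layer-7 services externally by flexibly injecting proxy configuration (see: k8s (2): Exposing Services Externally). This post uses the kube-router CNI to achieve direct layer-3 connectivity between the networks inside and outside the cluster.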

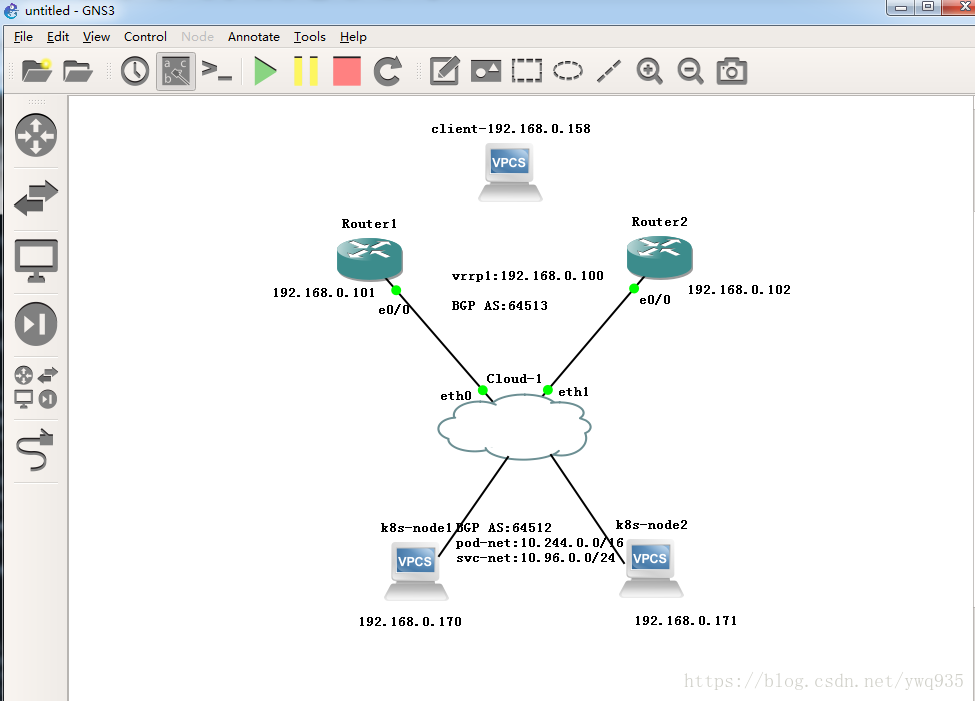

Environment

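The test environment uses the GNS3-IOU Cisco emulator plus VMware virtual machines to build a BGP network. The host (the client) reaches the VMs and routers through VMware bridged networking. The routers are then configured to form BGP neighbor relationships with the kube-router CNI on the k8s nodes, and kube-router advertises the cluster's pod CIDR. Once the BGP advertisement is complete, an external client can reach pods in the cluster directly over layer 3, so the networks inside and outside the cluster are connected via BGP.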

# Client
client: 192.168.0.158/24 # physical machine

# Transit (router)
AS number: 64513
router1: 192.168.0.101/24 # GNS3 + VMware VM environment
router2: 192.168.0.102/24 # GNS3 + VMware VM environment
router vip: 192.168.0.100 # VRRP virtual IP shared by the two routers

# k8s cluster
AS number: 64512
node1: 192.168.0.170/24 # VMware VM environment
node2: 192.168.0.171/24 # VMware VM environment
pod CIDR: 10.244.0.0/16
svc CIDR: 10.96.0.0/12

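Target result: a client outside the cluster accesses pods and svcs inside the cluster, with data-plane traffic flowing as follows: client --> router --> k8s node --> pod IP / svc IP, giving direct layer-3 connectivity.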
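To make the address plan concrete, here is a minimal sketch using Python's standard `ipaddress` module. The `classify` helper is hypothetical and only illustrates which of the three ranges an address falls into. The LAN network 192.168.0.0/24 is inferred from the /24 interface addresses listed above, and 10.244.1.35 is the pod IP that shows up later in this post.

```python
import ipaddress

# Ranges from the environment list; LAN is inferred from the /24 host addresses.
LAN = ipaddress.ip_network("192.168.0.0/24")
POD_CIDR = ipaddress.ip_network("10.244.0.0/16")
SVC_CIDR = ipaddress.ip_network("10.96.0.0/12")


def classify(addr: str) -> str:
    """Return which part of the lab an address belongs to (hypothetical helper)."""
    ip = ipaddress.ip_address(addr)
    if ip in POD_CIDR:
        return "pod"
    if ip in SVC_CIDR:
        return "svc"
    if ip in LAN:
        return "lan"
    return "other"


if __name__ == "__main__":
    for addr in ("192.168.0.158", "192.168.0.100", "192.168.0.170", "10.244.1.35"):
        print(addr, "->", classify(addr))
```

The client, the router VIP and the nodes all sit on the same LAN, while pod and svc addresses live in ranges that the client can only reach once the routers have learned them via BGP.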

Topology diagram:

Router configuration

#### router1:

IOU1#show running-config
interface Ethernet0/0
 ip address 192.168.0.101 255.255.255.0
 vrrp 1 ip 192.168.0.100
router bgp 64513
 bgp log-neighbor-changes
 neighbor 192.168.0.102 remote-as 64513
 neighbor 192.168.0.170 remote-as 64512
 neighbor 192.168.0.171 remote-as 64512
 maximum-paths 2
 no auto-summary

#### router2:

IOU2#show running-config
interface Ethernet0/0
 ip address 192.168.0.102 255.255.255.0
 vrrp 1 ip 192.168.0.100
 vrrp 1 priority 100
router bgp 64513
 bgp log-neighbor-changes
 neighbor 192.168.0.101 remote-as 64513
 neighbor 192.168.0.170 remote-as 64512
 neighbor 192.168.0.171 remote-as 64512
 maximum-paths 2
 no auto-summary
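Both routers end up with VRRP priority 100 (router1 by default, router2 explicitly), so which one owns the VIP is decided by a tie-break. The sketch below models only the rule that applies when both routers contend at the same time: higher priority wins, and on equal priority the higher interface IP wins. It is a simplification that ignores preemption settings and timers, and the `VrrpRouter` and `elect_master` names are hypothetical.

```python
import ipaddress
from dataclasses import dataclass

DEFAULT_PRIORITY = 100  # VRRP priority used when none is configured


@dataclass
class VrrpRouter:
    name: str
    ip: str
    priority: int = DEFAULT_PRIORITY


def elect_master(routers):
    """Pick the contender with the highest (priority, interface IP) pair."""
    return max(routers, key=lambda r: (r.priority, int(ipaddress.ip_address(r.ip))))


if __name__ == "__main__":
    lab = [
        VrrpRouter("router1", "192.168.0.101"),
        VrrpRouter("router2", "192.168.0.102", priority=100),
    ]
    print("master:", elect_master(lab).name)
```

If a deterministic master is wanted, giving one router a clearly higher priority avoids depending on the IP tie-break.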

kube-router configuration:

For the steps to build the k8s cluster, see k8s (1): Notes on a Local Offline Deployment of a 1.9.0 High-Availability Cluster.

In the kube-router yaml file configured earlier, add the following parameters, which specify the local BGP AS, the peer AS, the peer IP, and so on.

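- --advertise-cluster-ip=true # advertise cluster IPs
- --advertise-external-ip=true # advertise svc external IPs; only takes effect if the svc specifies an external-ip
- --cluster-asn=64512 # BGP AS number of the local cluster
- --peer-router-ips=192.168.0.100 # peer IPs; several can be listed, separated by ','. The routers in this lab run VRRP, so specifying the VIP is enough
- --peer-router-asns=64513 # AS number of the peer

The complete kube-router.yaml file is as follows: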

apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-router-cfg
  namespace: kube-system
  labels:
    tier: node
    k8s-app: kube-router
data:
  cni-conf.json: |
    {
      "name":"kubernetes",
      "type":"bridge",
      "bridge":"kube-bridge",
      "isDefaultGateway":true,
      "ipam": {
        "type":"host-local"
      }
    }
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  labels:
    k8s-app: kube-router
    tier: node
  name: kube-router
  namespace: kube-system
spec:
  template:
    metadata:
      labels:
        k8s-app: kube-router
        tier: node
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccountName: kube-router
      serviceAccount: kube-router
      containers:
      - name: kube-router
        image: cloudnativelabs/kube-router
        imagePullPolicy: Always
        args:
        - --run-router=true
        - --run-firewall=true
        - --run-service-proxy=true
        - --advertise-cluster-ip=true
        - --advertise-external-ip=true
        - --cluster-asn=64512
        - --peer-router-ips=192.168.0.100
        - --peer-router-asns=64513
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        livenessProbe:
          httpGet:
            path: /healthz
            port: 20244
          initialDelaySeconds: 10
          periodSeconds: 3
        resources:
          requests:
            cpu: 250m
            memory: 250Mi
        securityContext:
          privileged: true
        volumeMounts:
        - name: lib-modules
          mountPath: /lib/modules
          readOnly: true
        - name: cni-conf-dir
          mountPath: /etc/cni/net.d
        - name: kubeconfig
          mountPath: /var/lib/kube-router/kubeconfig
          readOnly: true
      initContainers:
      - name: install-cni
        image: busybox
        imagePullPolicy: Always
        command:
        - /bin/sh
        - -c
        - set -e -x;
          if [ ! -f /etc/cni/net.d/10-kuberouter.conf ]; then
            TMP=/etc/cni/net.d/.tmp-kuberouter-cfg;
            cp /etc/kube-router/cni-conf.json ${TMP};
            mv ${TMP} /etc/cni/net.d/10-kuberouter.conf;
          fi
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni-conf-dir
        - mountPath: /etc/kube-router
          name: kube-router-cfg
      hostNetwork: true
      tolerations:
      - key: CriticalAddonsOnly
        operator: Exists
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
        operator: Exists
      volumes:
      - name: lib-modules
        hostPath:
          path: /lib/modules
      - name: cni-conf-dir
        hostPath:
          path: /etc/cni/net.d
      - name: kube-router-cfg
        configMap:
          name: kube-router-cfg
      - name: kubeconfig
        hostPath:
          path: /var/lib/kube-router/kubeconfig
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-router
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: kube-router
  namespace: kube-system
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  - pods
  - services
  - nodes
  - endpoints
  verbs:
  - list
  - get
  - watch
- apiGroups:
  - "networking.k8s.io"
  resources:
  - networkpolicies
  verbs:
  - list
  - get
  - watch
- apiGroups:
  - extensions
  resources:
  - networkpolicies
  verbs:
  - get
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: kube-router
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-router
subjects:
- kind: ServiceAccount
  name: kube-router
  namespace: kube-system
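Before applying the manifest, it can help to sanity-check the three peering values. The following is a minimal sketch, not part of kube-router: `check_peering` is a hypothetical helper, and it assumes that the comma-separated `--peer-router-ips` and `--peer-router-asns` lists are paired by position. It also labels each peer as iBGP or eBGP by comparing its AS number with the cluster's, and flags whether the AS number is in the 16-bit private range (64512 to 65534).

```python
import ipaddress

PRIVATE_ASN_16BIT = range(64512, 65535)  # 64512..65534 inclusive


def check_peering(cluster_asn: int, peer_ips: str, peer_asns: str) -> list:
    """Pair peer IPs with peer ASNs and describe each session (hypothetical helper)."""
    ips = [ipaddress.ip_address(p.strip()) for p in peer_ips.split(",")]
    asns = [int(a.strip()) for a in peer_asns.split(",")]
    if len(ips) != len(asns):
        # Assumption: every peer IP needs a matching peer ASN.
        raise ValueError("number of peer IPs and peer ASNs differ")
    peers = []
    for ip, asn in zip(ips, asns):
        kind = "iBGP" if asn == cluster_asn else "eBGP"
        peers.append((str(ip), asn, kind, asn in PRIVATE_ASN_16BIT))
    return peers


if __name__ == "__main__":
    # Values from the args section of the DaemonSet.
    for peer in check_peering(64512, "192.168.0.100", "64513"):
        print(peer)
```

With the values from this lab the single peer is an eBGP session, since the cluster uses AS 64512 and the routers use AS 64513.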

Delete the previous kube-router deployment, then re-create the resources in this yaml file:

kubectl delete -f kubeadm-kuberouter.yaml # run before updating the parameters

kubectl create -f kubeadm-kuberouter.yaml # run after updating the parameters

Next, deploy an nginx pod instance for testing. The yaml file is as follows:

[root@171 nginx]# cat nginx-deploy.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
  creationTimestamp: 2018-04-09T04:02:02Z
  generation: 4
  labels:
    app: nginx
  name: nginx-deploy
  namespace: default
  resourceVersion: "111504"
  selfLink: /apis/extensions/v1beta1/namespaces/default/deployments/nginx-deploy
  uid: c28090c0-3baa-11e8-b75a-000c29858eab
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:1.9.1
        name: nginx
        ports:
        - containerPort: 80
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30

Check the pod IP and test it locally with curl:

[root@171 nginx]# kubectl get pods -o wide

NAME                            READY   STATUS    RESTARTS   AGE   IP            NODE
nginx-deploy-5964dfd755-lv2kb   1/1     Running   0          29m   10.244.1.35   171

[root@171 nginx]# curl 10.244.1.35

<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
[root@171 nginx]#

3. Verifying the results

Check the BGP neighbors on the router:

IOU1#show ip bgp summary

BGP router identifier 192.168.0.101, local AS number 64513

BGP table version is 19, main routing table version 19

4 network entries using 560 bytes of memory

6 path entries using 480 bytes of memory

2 multipath network entries and 4 multipath paths

1/1 BGP path/bestpath attribute entries using 144 bytes of memory

1 BGP AS-PATH entries using 24 bytes of memory

0 BGP route-map cache entries using 0 bytes of memory

0 BGP filter-list cache entries using 0 bytes of memory

BGP using 1208 total bytes of memory

BGP activity 5/1 prefixes, 12/6 paths, scan interval 60 secs

Neighbor        V     AS  MsgRcvd  MsgSent  TblVer  InQ  OutQ  Up/Down   State/PfxRcd
192.168.0.102   4  64513      100      106      19    0     0  01:27:20  0
192.168.0.170   4  64512      107       86      19    0     0  00:37:19  3
192.168.0.171   4  64512       98       85      19    0     0  00:37:21  3
IOU1#

Check the BGP route entries on the router:

IOU1#show ip route bgp

Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP
       D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area
       N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2
       E1 - OSPF external type 1, E2 - OSPF external type 2
       i - IS-IS, su - IS-IS summary, L1 - IS-IS level-1, L2 - IS-IS level-2
       ia - IS-IS inter area, * - candidate default, U - per-user static route
       o - ODR, P - periodic downloaded static route, H - NHRP, l - LISP
       a - application route
       + - replicated route, % - next hop override

Gateway of last resort is 192.168.0.1 to network 0.0.0.0

      10.0.0.0/8 is variably subnetted, 4 subnets, 2 masks
B        10.96.0.1/32 [20/0] via 192.168.0.171, 00:38:36
                      [20/0] via 192.168.0.170, 00:38:36
B        10.96.0.10/32 [20/0] via 192.168.0.171, 00:38:36
                       [20/0] via 192.168.0.170, 00:38:36
B        10.244.0.0/24 [20/0] via 192.168.0.170, 00:38:36
B        10.244.1.0/24 [20/0] via 192.168.0.171, 00:38:39

As shown, the BGP neighbors were established and the routes inside the k8s cluster were learned successfully.
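To see how the router uses these entries, here is a minimal longest-prefix-match sketch over the four BGP routes printed above. The `ROUTES` table is copied from that output, and `next_hops` is a hypothetical helper rather than anything the router exposes. It shows that each pod /24 maps to exactly one node, while the svc /32 entries have two equal-cost next hops (the effect of `maximum-paths 2`).

```python
import ipaddress

# BGP routes from "show ip route bgp" on IOU1: prefix -> next hops.
ROUTES = {
    "10.96.0.1/32": ["192.168.0.171", "192.168.0.170"],
    "10.96.0.10/32": ["192.168.0.171", "192.168.0.170"],
    "10.244.0.0/24": ["192.168.0.170"],
    "10.244.1.0/24": ["192.168.0.171"],
}


def next_hops(dst: str) -> list:
    """Return the next hops of the most specific matching BGP route."""
    ip = ipaddress.ip_address(dst)
    matches = [ipaddress.ip_network(p) for p in ROUTES if ip in ipaddress.ip_network(p)]
    if not matches:
        return []  # on the real router this would fall through to the default route
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[str(best)]


if __name__ == "__main__":
    print(next_hops("10.244.1.35"))  # the nginx pod
    print(next_hops("10.96.0.10"))   # a svc cluster IP
```

Traffic to the nginx pod at 10.244.1.35 therefore goes straight to the node at 192.168.0.171, which matches the NODE column of `kubectl get pods -o wide`.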

Start a test machine to verify:

Point the test machine's default gateway at the router, simulating an ordinary PC on the external network:

[root@python ~]# ip route

default via 192.168.0.100 dev eth3 proto static

[root@python ~]# curl 10.244.1.35

<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
[root@python ~]#

The test succeeded. The BGP network inside the cluster is now connected to the external BGP network!

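Friendly reminder: don't forget to save the router configuration to NVRAM (copy running-config startup-config), otherwise it is lost on reboot. I hadn't touched network equipment in a long time, forgot about this, and got burned once.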

Pitfall encountered on 9-22

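Today I tried adding a new node (192.168.20.x/24) to the k8s cluster that is on a different subnet from the original nodes (192.168.9.x/24). After it was created, something very strange happened: the peering between kube-router on the new node and the core network device outside the cluster kept flapping between established and idle and was very unstable. While the session was established, packet loss between the new node and the original nodes even exceeded 70%. While it was idle, pings between the nodes were normal.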

Screenshot of the problem:

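After entering the kube-router container and running gobgp neighbor, I found that both the new node's eBGP neighbor relationship with the external network device and its iBGP neighbor relationships with the original nodes kept changing state and never stabilized. I was baffled until I finally found the only similar kube-router issue on GitHub, where a project member explains the following: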

GitHub issue link

My understanding is:

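kube-router only supports iBGP peering between nodes on the same subnet, so nodes on different subnets cannot become iBGP peers. In addition, eBGP has a max-hop (multihop) attribute whose default value is 1. Router devices generally allow this value to be changed manually, but older versions of kube-router only supported the default of 1 and offered no way to configure it, so an eBGP neighbor also had to be on the same subnet as kube-router. Later versions have fixed this, and after upgrading, nodes can establish eBGP neighbor relationships across subnets without problems.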
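The hop-count limitation can be pictured with a small sketch. It assumes the usual IP behaviour that every router on the path decrements the TTL and drops the packet when it reaches 0, so a packet sent with TTL 1 only reaches a peer on the same subnet. Both helpers are hypothetical, and the host parts of the two addresses (`.10`) are made up for illustration, since the post only gives the subnets 192.168.9.x/24 and 192.168.20.x/24.

```python
import ipaddress


def same_subnet(a: str, b: str) -> bool:
    """True if two interface addresses (in CIDR form) share the same network."""
    return ipaddress.ip_interface(a).network == ipaddress.ip_interface(b).network


def packet_arrives(ttl: int, routers_on_path: int) -> bool:
    """Each router on the path decrements TTL; the packet is dropped at 0."""
    return ttl > routers_on_path


if __name__ == "__main__":
    old_node = "192.168.9.10/24"   # hypothetical host on the original subnet
    new_node = "192.168.20.10/24"  # hypothetical host on the new subnet
    print("same subnet:", same_subnet(old_node, new_node))
    print("TTL 1, direct peer:", packet_arrives(ttl=1, routers_on_path=0))
    print("TTL 1, one router between:", packet_arrives(ttl=1, routers_on_path=1))
    print("TTL 2, one router between:", packet_arrives(ttl=2, routers_on_path=1))
```

This only models the TTL part of the explanation. It does not try to reproduce the flapping itself.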