Multimodal (Visual and Language) understanding with LLaVA-NeXT — ROCm Blogs (amd.com)

2024年4月26日,由 Phillip Dang撰写。

LLaVa(Large Language And Vision Assistant)在2023年被推出,并成为多模态模型的一个里程碑。它结合了预训练的 视觉编码器 和预训练的 大型语言模型(LLM),用于通用视觉和语言理解。在2024年1月,LLaVa-NeXT发布了,它具备了显著的增强,包括更高输入视觉分辨率以及改进的逻辑推理和世界知识。

LLaVa模型的核心是使用一个简单的线性层将图像特征连接到词嵌入空间,从而使其在实验运行时更加高效。而其他多模态模型(例如Flamingo和BLIP-2)则使用更复杂的方法,如门控交叉注意力和Q-former来连接图像和语言表示。下图展示了LLaVa的高层架构 (来源).

用于微调LLaVa模型的数据是由仅有语言能力的GPT-4生成的,总共有数十万条语言-图像指令遵循样本,包括对话、详细描述和复杂的推理。目标是创建一个强大的模型,能够理解并执行文本和视觉格式的指令。使用GPT生成的数据的原因是,对于多模态指令遵循数据,“考虑到人为众包,创建此类数据的过程既耗时又定义不足” (来源)。更多的数据集示例请参见 这个HuggingFace数据集。

在评估中,给LLaVa模型提供问题和图像,以生成响应。然后将此响应与真实文本描述进行比较,并基于其帮助性、相关性、准确性和详细程度由GPT-4进行评分,评分标准为1到10分,总体评分越高越好。

想进一步了解LLaVa的内部工作原理和评估,建议用户查看Haotian Liu等人的 《视觉指令调优》和他们的 网站。有关LLaVa-NeXT的更多细节,建议用户查看HuggingFace的文档 和此公告。

在本博客中,我们使用最近发布的LLaVa-NeXT进行一些推理演示,并展示其在AMD GPU和ROCm上的开箱即用效果。

先决条件

-

软件:

-

ROCm

-

PyTorch

-

Linux 操作系统

-

关于支持的 GPU 和操作系统列表,请参考 此页。为了方便和稳定,我们建议您直接在 Linux 系统中拉取并运行 rocm/pytorch Docker,用如下代码:

docker run -it --ipc=host --network=host --device=/dev/kfd --device=/dev/dri \--group-add video --cap-add=SYS_PTRACE --security-opt seccomp=unconfined \--name=olmo rocm/pytorch:rocm6.0_ubuntu20.04_py3.9_pytorch_2.1.1 /bin/bash

-

硬件:

确保系统识别您的 GPU:

! rocm-smi --showproductname

================= ROCm System Management Interface ================ ========================= Product Info ============================ GPU[0] : Card series: Instinct MI210 GPU[0] : Card model: 0x0c34 GPU[0] : Card vendor: Advanced Micro Devices, Inc. [AMD/ATI] GPU[0] : Card SKU: D67301 =================================================================== ===================== End of ROCm SMI Log =========================

让我们检查是否安装了正确版本的 ROCm。

!apt show rocm-libs -a

Package: rocm-libs Version: 5.7.0.50700-63~22.04 Priority: optional Section: devel Maintainer: ROCm Libs Support <rocm-libs.support@amd.com> Installed-Size: 13.3 kBA Depends: hipblas (= 1.1.0.50700-63~22.04), hipblaslt (= 0.3.0.50700-63~22.04), hipfft (= 1.0.12.50700-63~22.04), hipsolver (= 1.8.1.50700-63~22.04), hipsparse (= 2.3.8.50700-63~22.04), miopen-hip (= 2.20.0.50700-63~22.04), rccl (= 2.17.1.50700-63~22.04), rocalution (= 2.1.11.50700-63~22.04), rocblas (= 3.1.0.50700-63~22.04), rocfft (= 1.0.23.50700-63~22.04), rocrand (= 2.10.17.50700-63~22.04), rocsolver (= 3.23.0.50700-63~22.04), rocsparse (= 2.5.4.50700-63~22.04), rocm-core (= 5.7.0.50700-63~22.04), hipblas-dev (= 1.1.0.50700-63~22.04), hipblaslt-dev (= 0.3.0.50700-63~22.04), hipcub-dev (= 2.13.1.50700-63~22.04), hipfft-dev (= 1.0.12.50700-63~22.04), hipsolver-dev (= 1.8.1.50700-63~22.04), hipsparse-dev (= 2.3.8.50700-63~22.04), miopen-hip-dev (= 2.20.0.50700-63~22.04), rccl-dev (= 2.17.1.50700-63~22.04), rocalution-dev (= 2.1.11.50700-63~22.04), rocblas-dev (= 3.1.0.50700-63~22.04), rocfft-dev (= 1.0.23.50700-63~22.04), rocprim-dev (= 2.13.1.50700-63~22.04), rocrand-dev (= 2.10.17.50700-63~22.04), rocsolver-dev (= 3.23.0.50700-63~22.04), rocsparse-dev (= 2.5.4.50700-63~22.04), rocthrust-dev (= 2.18.0.50700-63~22.04), rocwmma-dev (= 1.2.0.50700-63~22.04) Homepage: https://github.com/RadeonOpenCompute/ROCm Download-Size: 1012 B APT-Manual-Installed: yes APT-Sources: http://repo.radeon.com/rocm/apt/5.7 jammy/main amd64 Packages Description: Radeon Open Compute (ROCm) Runtime software stack

确保 PyTorch 也能识别 GPU:

import torch

print(f"number of GPUs: {torch.cuda.device_count()}")

print([torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())])

number of GPUs: 1 ['AMD Radeon Graphics']

让我们开始测试 LLaVa-NeXT。

库

请先安装HuggingFace的transformers库。

! pip install -q transformers

接下来导入你将在这篇博客中使用的模块:

from transformers import LlavaNextProcessor, LlavaNextForConditionalGeneration import torch from PIL import Image import requests import time

加载模型

我们将加载模型。我们会使用Mistral-7B作为大型语言模型(LLM)和CLIP作为视觉编码器。

device = "cuda:0"

model = LlavaNextForConditionalGeneration.from_pretrained("llava-hf/llava-v1.6-mistral-7b-hf", torch_dtype=torch.float16, low_cpu_mem_usage=True)

model.to(device)

processor = LlavaNextProcessor.from_pretrained("llava-hf/llava-v1.6-mistral-7b-hf")

print(f"{model.num_parameters() / 1e9:.2f}B parameters")

print(model)

Loading checkpoint shards: 100%|██████████| 4/4 [00:00<00:00, 13.97it/s] Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained. 7.57B parameters LlavaNextForConditionalGeneration((vision_tower): CLIPVisionModel((vision_model): CLIPVisionTransformer((embeddings): CLIPVisionEmbeddings((patch_embedding): Conv2d(3, 1024, kernel_size=(14, 14), stride=(14, 14), bias=False)(position_embedding): Embedding(577, 1024))(pre_layrnorm): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)(encoder): CLIPEncoder((layers): ModuleList((0-23): 24 x CLIPEncoderLayer((self_attn): CLIPAttention((k_proj): Linear(in_features=1024, out_features=1024, bias=True)(v_proj): Linear(in_features=1024, out_features=1024, bias=True)(q_proj): Linear(in_features=1024, out_features=1024, bias=True)(out_proj): Linear(in_features=1024, out_features=1024, bias=True))(layer_norm1): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)(mlp): CLIPMLP((activation_fn): QuickGELUActivation()(fc1): Linear(in_features=1024, out_features=4096, bias=True)(fc2): Linear(in_features=4096, out_features=1024, bias=True))(layer_norm2): LayerNorm((1024,), eps=1e-05, elementwise_affine=True))))(post_layernorm): LayerNorm((1024,), eps=1e-05, elementwise_affine=True)))(multi_modal_projector): LlavaNextMultiModalProjector((linear_1): Linear(in_features=1024, out_features=4096, bias=True)(act): GELUActivation()(linear_2): Linear(in_features=4096, out_features=4096, bias=True))(language_model): MistralForCausalLM((model): MistralModel((embed_tokens): Embedding(32064, 4096)(layers): ModuleList((0-31): 32 x MistralDecoderLayer((self_attn): MistralAttention((q_proj): Linear(in_features=4096, out_features=4096, bias=False)(k_proj): Linear(in_features=4096, out_features=1024, bias=False)(v_proj): Linear(in_features=4096, out_features=1024, bias=False)(o_proj): Linear(in_features=4096, out_features=4096, bias=False)(rotary_emb): MistralRotaryEmbedding())(mlp): MistralMLP((gate_proj): Linear(in_features=4096, out_features=14336, bias=False)(up_proj): Linear(in_features=4096, out_features=14336, bias=False)(down_proj): Linear(in_features=14336, out_features=4096, bias=False)(act_fn): SiLU())(input_layernorm): MistralRMSNorm()(post_attention_layernorm): MistralRMSNorm()))(norm): MistralRMSNorm())(lm_head): Linear(in_features=4096, out_features=32064, bias=False)) )

多模态推理

让我们首先创建一个函数,该函数接受一个提示和一个本地图像路径或图像网址作为参数,然后使用模型生成一个文本响应。我们将HuggingFace的 文档中的代码进行适应。

def run_inference(text='', image_source='', is_url=False): # 从URL或本地文件加载图像if is_url == True:image = Image.open(requests.get(image_source, stream=True).raw)else:image = Image.open(image_source)image = image.convert("RGB")# 展示图像 image.show() # 创建提示并处理输入 start = time.time()prompt = f"<image>\nUSER: {text}\nASSISTANT:"inputs = processor(text=prompt, images=image, return_tensors="pt").to(device)# 生成响应generate_ids = model.generate(**inputs, max_length=500)response = processor.batch_decode(generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0]print(f"Generated in {time.time() - start: .2f} secs")print(response) return response

现在,我们准备测试模型。以下是我们与模型互动的几个示例,使用输入图像。

示例 1:

text = "What's the content of the image?" response = run_inference(text, "https://www.ilankelman.org/stopsigns/australia.jpg", is_url=True)

Generated in 3.66 secsUSER: What's the content of the image? ASSISTANT: The image shows a stop sign at an intersection, with a black car driving through it. The stop sign is located on a street corner, and there are Chinese characters on the building behind the car. The architecture of the buildings suggests an Asian influence, possibly in a Chinatown area.

示例 2:

text = "Describe the image and what's funny about it" response = run_inference(text, "images/example2.jpg", is_url=False)

Generated in 4.20 secsUSER: Describe the image and what's funny about it ASSISTANT: The image features a cat sitting on the edge of a boat, wearing a pair of sunglasses. The cat is looking directly at the camera, giving the impression that it is posing for a photo. The sunglasses add a humorous and anthropomorphic touch to the image, as it's not common for cats to wear accessories like sunglasses. The cat's serious expression and the sunglasses create a comical contrast, making the image amusing and entertaining.

示例 3:

text = "Explain the humor in the image" response = run_inference(text, "images/example3.jpg", is_url=False)

Generated in 4.84 secsUSER: Explain the humor in the image ASSISTANT: The image is a humorous collage of various animals, including dogs and a cat, with their faces digitally altered to look like they are eating muffins. The humor arises from the unexpected and absurd nature of the images. It's amusing to see animals, which are not capable of eating muffins, depicted as if they are enjoying a human-like snack. The juxtaposition of the animals' natural behavior with the human activity of eating muffins creates a whimsical and comical effect.

示例 4:

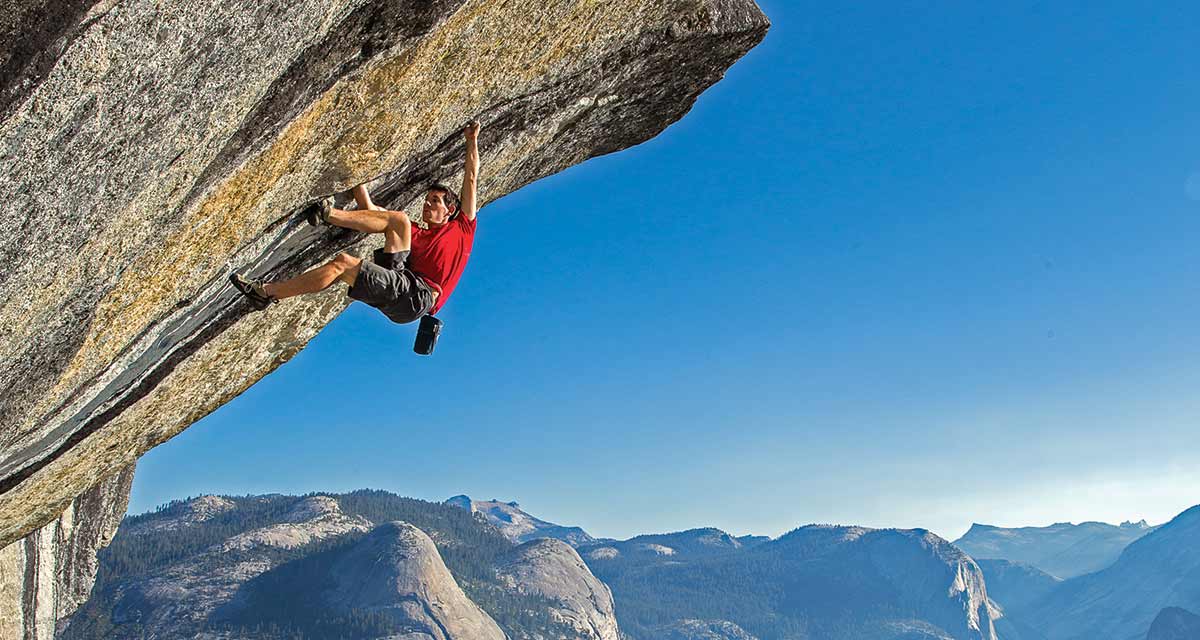

text = "Tell me about the content of this image and what's unusual about it?" response = run_inference(text, "images/example4.jpg", is_url=False)

Generated in 4.44 secsUSER: Tell me about the content of this image and what's unusual about it? ASSISTANT: The image shows a person climbing a rock face, likely a part of a mountain. The climber is wearing a red shirt and appears to be in the midst of a challenging ascent, with their hands and feet gripping the rock. The unusual aspect of this image is the perspective; it's taken from a low angle, which makes the climber appear to be hanging from the rock face, almost as if they are defying gravity. This perspective creates a dramatic and somewhat surreal effect, emphasizing the height and difficulty of the climb.

示例 5:

text = "What are the names of the people in the image?" response = run_inference(text, "images/example5.jpg", is_url=False)

Generated in 1.87 secsUSER: What are the names of the people in the image? ASSISTANT: The image contains a collage of various historical figures and celebrities. However, without specific names, I can only describe the individuals as "historical figures" and "celebrities."

示例 6:

text = "Identify the landmark in the image and tell me one fun fact about it" response = run_inference(text, "images/example6.jpg", is_url=False)

Generated in 3.32 secsUSER: Identify the landmark in the image and tell me one fun fact about it ASSISTANT: The landmark in the image is the Colosseum, located in Rome, Italy. One fun fact about the Colosseum is that it was originally built for gladiatorial contests and other public spectacles, such as mock sea battles, animal hunts, and executions. It is one of the most iconic symbols of Imperial Rome and has been a major tourist attraction for centuries. .

我们可以看到,该模型在识别图像中的物体、场景和背景方面表现出色。除了示例3中模型无法理解幽默之外,其他示例的解释都相当不错。

文本推理

值得指出的是,我们也可以无需输入图片,仅通过文本提示进行推理。我们只需稍微调整一下上面的函数:

def run_text_only(text=''): # 创建提示并处理输入start = time.time()prompt = f"USER: {text}\nASSISTANT:"inputs = processor(text=prompt,return_tensors="pt").to(device)# 生成回复generate_ids = model.generate(**inputs, max_length=500)response = processor.batch_decode(generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0]print(f"Generated in {time.time() - start: .2f} secs")print(response) return response

这里有两个例子:

text = "Explain three laws of thermodynamics" response = run_text_only(text)

Generated in 6.94 secs USER: Explain three laws of thermodynamics ASSISTANT: The three laws of thermodynamics are fundamental principles that describe the behavior of energy in a system. Here's a brief explanation of each:1. **First Law of Thermodynamics**: Also known as the Law of Conservation of Energy, this law states that energy cannot be created or destroyed, only converted from one form to another. This means that the total amount of energy in a closed system remains constant.2. **Second Law of Thermodynamics**: This law states that the total entropy (a measure of the disorder or randomness of a system) of an isolated system can only increase over time. In other words, natural processes tend to move towards a state of disorder.3. **Third Law of Thermodynamics**: This law states that as the temperature of a system approaches absolute zero (0 Kelvin), the entropy of the system approaches a minimum value. This means that it is impossible to reach absolute zero, as the entropy would have to be zero.These laws are fundamental to the study of thermodynamics and have wide-ranging implications in physics, chemistry, and engineering.

text = "Why is the sky blue?" response = run_text_only(text)

Generated in 3.00 secs USER: Why is the sky blue? ASSISTANT: The sky appears blue because of the scattering of light by the Earth's atmosphere. The Earth's atmosphere is made up of many gases, including nitrogen and oxygen. When sunlight enters the Earth's atmosphere, it is scattered in all directions by these gases. Blue light is scattered more than other colors because it travels in smaller, shorter waves. This means that when you look up at the sky, you are seeing the blue light that has been scattered by the Earth's atmosphere.