Switching to the Inner CIoU Loss Function (Based on MMYOLO)

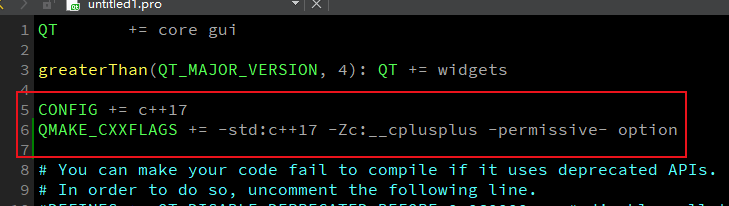

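MMYOLO does not implement the Inner CIoU loss, so you need to add the Inner CIoU computation and a matching iou_mode to mmyolo/models/losses/iou_loss.py. The new mode name also has to be accepted wherever that file validates iou_mode (for example in its assert statements), otherwise the config will be rejected. After making the change, run the following in a terminal:

    python setup.py install

Then update the config file. An example of the code change: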

    elif iou_mode == "innerciou":
        ratio = 1.0
        # Half widths/heights and center points of both boxes
        w1_, h1_, w2_, h2_ = w1 / 2, h1 / 2, w2 / 2, h2 / 2
        x1 = bbox1_x1 + w1_
        y1 = bbox1_y1 + h1_
        x2 = bbox2_x1 + w2_
        y2 = bbox2_y1 + h2_
        # Auxiliary inner boxes: same centers, sides scaled by ratio
        inner_b1_x1, inner_b1_x2, inner_b1_y1, inner_b1_y2 = \
            x1 - w1_ * ratio, x1 + w1_ * ratio, \
            y1 - h1_ * ratio, y1 + h1_ * ratio
        inner_b2_x1, inner_b2_x2, inner_b2_y1, inner_b2_y2 = \
            x2 - w2_ * ratio, x2 + w2_ * ratio, \
            y2 - h2_ * ratio, y2 + h2_ * ratio
        inner_inter = (torch.min(inner_b1_x2, inner_b2_x2) -
                       torch.max(inner_b1_x1, inner_b2_x1)).clamp(0) * \
                      (torch.min(inner_b1_y2, inner_b2_y2) -
                       torch.max(inner_b1_y1, inner_b2_y1)).clamp(0)
        inner_union = w1 * ratio * h1 * ratio + \
            w2 * ratio * h2 * ratio - inner_inter + eps
        inner_iou = inner_inter / inner_union

        # CIoU = IoU - ( (ρ^2(b_pred,b_gt) / c^2) + (alpha x v) )

        # calculate enclose area (c^2)
        enclose_area = enclose_w**2 + enclose_h**2 + eps

        # calculate ρ^2(b_pred,b_gt):
        # euclidean distance between b_pred(bbox2) and b_gt(bbox1)
        # center point, because bbox format is xyxy -> left-top xy and
        # right-bottom xy, so need to / 4 to get center point.
        rho2_left_item = ((bbox2_x1 + bbox2_x2) -
                          (bbox1_x1 + bbox1_x2))**2 / 4
        rho2_right_item = ((bbox2_y1 + bbox2_y2) -
                           (bbox1_y1 + bbox1_y2))**2 / 4
        rho2 = rho2_left_item + rho2_right_item  # rho^2 (ρ^2)

        # Width and height ratio (v)
        wh_ratio = (4 / (math.pi**2)) * torch.pow(
            torch.atan(w2 / h2) - torch.atan(w1 / h1), 2)

        with torch.no_grad():
            alpha = wh_ratio / (wh_ratio - ious + (1 + eps))

        # innerCIoU
        ious = inner_iou - ((rho2 / enclose_area) + (alpha * wh_ratio))

The modified config file (using configs/yolov5/yolov5_s-v61_syncbn_8xb16-300e_coco.py as an example):

    _base_ = ['../_base_/default_runtime.py', '../_base_/det_p5_tta.py']

    # ========================Frequently modified parameters======================
    # -----data related-----
    data_root = 'data/coco/'  # Root path of data
    # Path of train annotation file
    train_ann_file = 'annotations/instances_train2017.json'
    train_data_prefix = 'train2017/'  # Prefix of train image path
    # Path of val annotation file
    val_ann_file = 'annotations/instances_val2017.json'
    val_data_prefix = 'val2017/'  # Prefix of val image path

    num_classes = 80  # Number of classes for classification
    # Batch size of a single GPU during training
    train_batch_size_per_gpu = 16
    # Worker to pre-fetch data for each single GPU during training
    train_num_workers = 8
    # persistent_workers must be False if num_workers is 0
    persistent_workers = True

    # -----model related-----
    # Basic size of multi-scale prior box
    anchors = [
        [(10, 13), (16, 30), (33, 23)],  # P3/8
        [(30, 61), (62, 45), (59, 119)],  # P4/16
        [(116, 90), (156, 198), (373, 326)]  # P5/32
    ]

    # -----train val related-----
    # Base learning rate for optim_wrapper. Corresponding to 8xb16=128 bs
    base_lr = 0.01
    max_epochs = 300  # Maximum training epochs

    model_test_cfg = dict(
        # The config of multi-label for multi-class prediction.
        multi_label=True,
        # The number of boxes before NMS
        nms_pre=30000,
        score_thr=0.001,  # Threshold to filter out boxes.
        nms=dict(type='nms', iou_threshold=0.65),  # NMS type and threshold
        max_per_img=300)  # Max number of detections of each image

    # ========================Possible modified parameters========================
    # -----data related-----
    img_scale = (640, 640)  # width, height
    # Dataset type, this will be used to define the dataset
    dataset_type = 'YOLOv5CocoDataset'
    # Batch size of a single GPU during validation
    val_batch_size_per_gpu = 1
    # Worker to pre-fetch data for each single GPU during validation
    val_num_workers = 2

    # Config of batch shapes. Only on val.
    # It means not used if batch_shapes_cfg is None.
    batch_shapes_cfg = dict(
        type='BatchShapePolicy',
        batch_size=val_batch_size_per_gpu,
        img_size=img_scale[0],
        # The image scale of padding should be divided by pad_size_divisor
        size_divisor=32,
        # Additional paddings for pixel scale
        extra_pad_ratio=0.5)

    # -----model related-----
    # The scaling factor that controls the depth of the network structure
    deepen_factor = 0.33
    # The scaling factor that controls the width of the network structure
    widen_factor = 0.5
    # Strides of multi-scale prior box
    strides = [8, 16, 32]
    num_det_layers = 3  # The number of model output scales
    norm_cfg = dict(type='BN', momentum=0.03, eps=0.001)  # Normalization config

    # -----train val related-----
    affine_scale = 0.5  # YOLOv5RandomAffine scaling ratio
    loss_cls_weight = 0.5
    loss_bbox_weight = 0.05
    loss_obj_weight = 1.0
    prior_match_thr = 4.  # Priori box matching threshold
    # The obj loss weights of the three output layers
    obj_level_weights = [4., 1., 0.4]
    lr_factor = 0.01  # Learning rate scaling factor
    weight_decay = 0.0005
    # Save model checkpoint and validation intervals
    save_checkpoint_intervals = 10
    # The maximum checkpoints to keep.
    max_keep_ckpts = 3
    # Single-scale training is recommended to
    # be turned on, which can speed up training.
    env_cfg = dict(cudnn_benchmark=True)

    # ===============================Unmodified in most cases====================
    model = dict(
        type='YOLODetector',
        data_preprocessor=dict(
            type='mmdet.DetDataPreprocessor',
            mean=[0., 0., 0.],
            std=[255., 255., 255.],
            bgr_to_rgb=True),
        backbone=dict(
            # Use the YOLOv8 backbone
            type='YOLOv8CSPDarknet',
            deepen_factor=deepen_factor,
            widen_factor=widen_factor,
            norm_cfg=norm_cfg,
            act_cfg=dict(type='SiLU', inplace=True)),
        neck=dict(
            type='YOLOv5PAFPN',
            deepen_factor=deepen_factor,
            widen_factor=widen_factor,
            in_channels=[256, 512, 1024],
            out_channels=[256, 512, 1024],
            num_csp_blocks=3,
            norm_cfg=norm_cfg,
            act_cfg=dict(type='SiLU', inplace=True)),
        bbox_head=dict(
            type='YOLOv5Head',
            head_module=dict(
                type='YOLOv5HeadModule',
                num_classes=num_classes,
                in_channels=[256, 512, 1024],
                widen_factor=widen_factor,
                featmap_strides=strides,
                num_base_priors=3),
            prior_generator=dict(
                type='mmdet.YOLOAnchorGenerator',
                base_sizes=anchors,
                strides=strides),
            # scaled based on number of detection layers
            loss_cls=dict(
                type='mmdet.CrossEntropyLoss',
                use_sigmoid=True,
                reduction='mean',
                loss_weight=loss_cls_weight *
                (num_classes / 80 * 3 / num_det_layers)),
            # Modify this entry to switch the IoU loss function
            loss_bbox=dict(
                type='IoULoss',
                iou_mode='innerciou',
                bbox_format='xywh',
                eps=1e-7,
                reduction='mean',
                loss_weight=loss_bbox_weight * (3 / num_det_layers),
                return_iou=True),
            loss_obj=dict(
                type='mmdet.CrossEntropyLoss',
                use_sigmoid=True,
                reduction='mean',
                loss_weight=loss_obj_weight *
                ((img_scale[0] / 640)**2 * 3 / num_det_layers)),
            prior_match_thr=prior_match_thr,
            obj_level_weights=obj_level_weights),
        test_cfg=model_test_cfg)

    albu_train_transforms = [
        dict(type='Blur', p=0.01),
        dict(type='MedianBlur', p=0.01),
        dict(type='ToGray', p=0.01),
        dict(type='CLAHE', p=0.01)
    ]

    pre_transform = [
        dict(type='LoadImageFromFile', file_client_args=_base_.file_client_args),
        dict(type='LoadAnnotations', with_bbox=True)
    ]

    train_pipeline = [
        *pre_transform,
        dict(
            type='Mosaic',
            img_scale=img_scale,
            pad_val=114.0,
            pre_transform=pre_transform),
        dict(
            type='YOLOv5RandomAffine',
            max_rotate_degree=0.0,
            max_shear_degree=0.0,
            scaling_ratio_range=(1 - affine_scale, 1 + affine_scale),
            # img_scale is (width, height)
            border=(-img_scale[0] // 2, -img_scale[1] // 2),
            border_val=(114, 114, 114)),
        dict(
            type='mmdet.Albu',
            transforms=albu_train_transforms,
            bbox_params=dict(
                type='BboxParams',
                format='pascal_voc',
                label_fields=['gt_bboxes_labels', 'gt_ignore_flags']),
            keymap={
                'img': 'image',
                'gt_bboxes': 'bboxes'
            }),
        dict(type='YOLOv5HSVRandomAug'),
        dict(type='mmdet.RandomFlip', prob=0.5),
        dict(
            type='mmdet.PackDetInputs',
            meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape', 'flip',
                       'flip_direction'))
    ]

    train_dataloader = dict(
        batch_size=train_batch_size_per_gpu,
        num_workers=train_num_workers,
        persistent_workers=persistent_workers,
        pin_memory=True,
        sampler=dict(type='DefaultSampler', shuffle=True),
        dataset=dict(
            type=dataset_type,
            data_root=data_root,
            ann_file=train_ann_file,
            data_prefix=dict(img=train_data_prefix),
            filter_cfg=dict(filter_empty_gt=False, min_size=32),
            pipeline=train_pipeline))

    test_pipeline = [
        dict(type='LoadImageFromFile', file_client_args=_base_.file_client_args),
        dict(type='YOLOv5KeepRatioResize', scale=img_scale),
        dict(
            type='LetterResize',
            scale=img_scale,
            allow_scale_up=False,
            pad_val=dict(img=114)),
        dict(type='LoadAnnotations', with_bbox=True, _scope_='mmdet'),
        dict(
            type='mmdet.PackDetInputs',
            meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',
                       'scale_factor', 'pad_param'))
    ]

    val_dataloader = dict(
        batch_size=val_batch_size_per_gpu,
        num_workers=val_num_workers,
        persistent_workers=persistent_workers,
        pin_memory=True,
        drop_last=False,
        sampler=dict(type='DefaultSampler', shuffle=False),
        dataset=dict(
            type=dataset_type,
            data_root=data_root,
            test_mode=True,
            data_prefix=dict(img=val_data_prefix),
            ann_file=val_ann_file,
            pipeline=test_pipeline,
            batch_shapes_cfg=batch_shapes_cfg))

    test_dataloader = val_dataloader

    param_scheduler = None
    optim_wrapper = dict(
        type='OptimWrapper',
        optimizer=dict(
            type='SGD',
            lr=base_lr,
            momentum=0.937,
            weight_decay=weight_decay,
            nesterov=True,
            batch_size_per_gpu=train_batch_size_per_gpu),
        constructor='YOLOv5OptimizerConstructor')

    default_hooks = dict(
        param_scheduler=dict(
            type='YOLOv5ParamSchedulerHook',
            scheduler_type='linear',
            lr_factor=lr_factor,
            max_epochs=max_epochs),
        checkpoint=dict(
            type='CheckpointHook',
            interval=save_checkpoint_intervals,
            save_best='auto',
            max_keep_ckpts=max_keep_ckpts))

    custom_hooks = [
        dict(
            type='EMAHook',
            ema_type='ExpMomentumEMA',
            momentum=0.0001,
            update_buffers=True,
            strict_load=False,
            priority=49)
    ]

    val_evaluator = dict(
        type='mmdet.CocoMetric',
        proposal_nums=(100, 1, 10),
        ann_file=data_root + val_ann_file,
        metric='bbox')
    test_evaluator = val_evaluator

    train_cfg = dict(
        type='EpochBasedTrainLoop',
        max_epochs=max_epochs,
        val_interval=save_checkpoint_intervals)
    val_cfg = dict(type='ValLoop')
    test_cfg = dict(type='TestLoop')
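
To sanity-check the innerciou branch before rebuilding MMYOLO, it can help to reproduce the arithmetic on a single pair of boxes. The sketch below is a scalar, pure-Python rewrite of that branch, not MMYOLO code: the function name `inner_ciou` and its signature are made up for illustration, and it assumes boxes in (x1, y1, x2, y2) format with eps added to the heights, as the surrounding variables in the branch suggest.

```python
import math


def inner_ciou(box1, box2, ratio=1.0, eps=1e-7):
    """Scalar Inner CIoU for two (x1, y1, x2, y2) boxes (illustrative helper)."""
    b1_x1, b1_y1, b1_x2, b1_y2 = box1
    b2_x1, b2_y1, b2_x2, b2_y2 = box2
    w1, h1 = b1_x2 - b1_x1, b1_y2 - b1_y1 + eps
    w2, h2 = b2_x2 - b2_x1, b2_y2 - b2_y1 + eps

    # Plain IoU of the original boxes, still used by the CIoU alpha term.
    inter = (max(0.0, min(b1_x2, b2_x2) - max(b1_x1, b2_x1)) *
             max(0.0, min(b1_y2, b2_y2) - max(b1_y1, b2_y1)))
    iou = inter / (w1 * h1 + w2 * h2 - inter + eps)

    # Auxiliary inner boxes: same centers, half sides scaled by `ratio`.
    cx1, cy1 = b1_x1 + w1 / 2, b1_y1 + h1 / 2
    cx2, cy2 = b2_x1 + w2 / 2, b2_y1 + h2 / 2
    hw1, hh1 = w1 / 2 * ratio, h1 / 2 * ratio
    hw2, hh2 = w2 / 2 * ratio, h2 / 2 * ratio
    inner_inter = (
        max(0.0, min(cx1 + hw1, cx2 + hw2) - max(cx1 - hw1, cx2 - hw2)) *
        max(0.0, min(cy1 + hh1, cy2 + hh2) - max(cy1 - hh1, cy2 - hh2)))
    inner_union = 4 * hw1 * hh1 + 4 * hw2 * hh2 - inner_inter + eps
    inner_iou = inner_inter / inner_union

    # CIoU penalties are computed on the original (unscaled) boxes.
    enclose_w = max(b1_x2, b2_x2) - min(b1_x1, b2_x1)
    enclose_h = max(b1_y2, b2_y2) - min(b1_y1, b2_y1)
    c2 = enclose_w**2 + enclose_h**2 + eps
    rho2 = (((b2_x1 + b2_x2) - (b1_x1 + b1_x2))**2 +
            ((b2_y1 + b2_y2) - (b1_y1 + b1_y2))**2) / 4
    v = (4 / math.pi**2) * (math.atan(w2 / h2) - math.atan(w1 / h1))**2
    alpha = v / (v - iou + (1 + eps))
    return inner_iou - (rho2 / c2 + alpha * v)


if __name__ == '__main__':
    a, b = (0, 0, 10, 10), (5, 0, 15, 10)
    for r in (0.5, 1.0, 1.5):
        print(f'ratio={r}: {inner_ciou(a, b, ratio=r):.4f}')
```

Note that with ratio = 1.0, as hard-coded in the branch, the inner boxes coincide with the original boxes, so the result is essentially the same as plain CIoU. The inner boxes only change the loss once ratio is set to a value other than 1.0.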