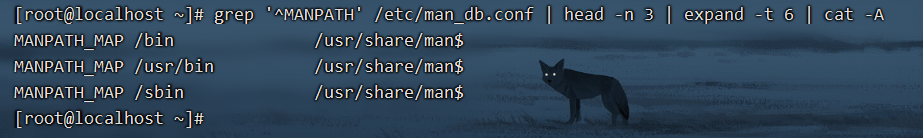

1. Linear (dense) network: input shape (B, W)

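```python
import torch
import torch.nn as nn

# Hyperparameters are not given in the original; placeholder values for illustration
num_media_type = 10   # number of distinct media_type ids (placeholder)
num_mid_score = 100   # number of distinct mid_score ids (placeholder)
embed_dim = 16        # embedding size (placeholder)
lr = 1e-3             # learning rate (placeholder)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        # One embedding table per categorical feature
        self.media_type_embed = nn.Embedding(num_media_type, embed_dim)
        self.mid_score_embed = nn.Embedding(num_mid_score, embed_dim)
        self.model = nn.Sequential(
            nn.Linear(embed_dim * 2, 256),
            nn.ReLU(),
            nn.Linear(256, 2),
            # No Sigmoid here: CrossEntropyLoss expects raw logits
        )

    def forward(self, x):
        # x: LongTensor of shape (B, 2); column 0 = media_type, column 1 = mid_score
        media_type, mid_score = x[:, 0], x[:, 1]
        x_media = self.media_type_embed(media_type)  # (B, embed_dim)
        x_mid = self.mid_score_embed(mid_score)      # (B, embed_dim)
        x = torch.cat((x_media, x_mid), dim=-1)      # (B, embed_dim * 2)
        return self.model(x)                         # (B, 2) logits


model = Model()
model.to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=lr)
criterion = nn.CrossEntropyLoss()
```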
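To see why the first `nn.Linear` takes `embed_dim * 2` inputs and why no `nn.Sigmoid` is needed before `nn.CrossEntropyLoss`, the shape flow of the dense model can be traced on a random batch. This is a minimal standalone sketch; the batch size, vocabulary sizes, and `embed_dim` are placeholder values, and it assumes the input is a (B, 2) integer tensor whose two columns are `media_type` and `mid_score`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Placeholder sizes for illustration only
B, num_media_type, num_mid_score, embed_dim = 4, 10, 100, 16

media_embed = nn.Embedding(num_media_type, embed_dim)
score_embed = nn.Embedding(num_mid_score, embed_dim)
head = nn.Sequential(nn.Linear(embed_dim * 2, 256), nn.ReLU(), nn.Linear(256, 2))

# Input of shape (B, W) with W = 2 integer columns: [media_type, mid_score]
x = torch.stack([
    torch.randint(0, num_media_type, (B,)),
    torch.randint(0, num_mid_score, (B,)),
], dim=1)
print(x.shape)  # torch.Size([4, 2])

# Each column is looked up in its own embedding table -> (B, embed_dim)
e_media = media_embed(x[:, 0])
e_score = score_embed(x[:, 1])
feat = torch.cat((e_media, e_score), dim=-1)  # (B, embed_dim * 2)
print(feat.shape)  # torch.Size([4, 32])

logits = head(feat)  # raw, unnormalized scores of shape (B, 2)
print(logits.shape)  # torch.Size([4, 2])

# CrossEntropyLoss applies log-softmax internally, so it takes raw logits
# together with integer class labels of shape (B,)
labels = torch.randint(0, 2, (B,))
loss = nn.CrossEntropyLoss()(logits, labels)
print(loss.item())
```

Adding a `Sigmoid` or `Softmax` in front of `CrossEntropyLoss` would squash the logits twice, which is why it stays commented out in the model.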

2. Conv1d convolutional network: input shape (B, C, W)

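```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


# Define a one-dimensional convolutional neural network model
class CNN1D(nn.Module):
    def __init__(self, input_dim, output_dim):
        super().__init__()
        self.conv1 = nn.Conv1d(in_channels=1, out_channels=16, kernel_size=3)
        self.conv2 = nn.Conv1d(in_channels=16, out_channels=32, kernel_size=3)
        self.pool = nn.MaxPool1d(kernel_size=2)
        # Sequence length after conv1 -> pool -> conv2 -> pool
        # (100 -> 98 -> 49 -> 47 -> 23 for input_dim=100, so fc1 needs 32 * 23 inputs)
        seq_len = ((input_dim - 2) // 2 - 2) // 2
        self.fc1 = nn.Linear(32 * seq_len, 64)
        self.fc2 = nn.Linear(64, output_dim)

    def forward(self, x):
        # x: (B, C=1, W=input_dim)
        x = self.pool(nn.functional.relu(self.conv1(x)))
        x = self.pool(nn.functional.relu(self.conv2(x)))
        x = x.flatten(1)  # (B, 32 * seq_len); keeps the batch dimension intact
        x = nn.functional.relu(self.fc1(x))
        return self.fc2(x)  # (B, output_dim) logits


# Instantiate the model and define the loss function and optimizer
model = CNN1D(input_dim=100, output_dim=10)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# Define the dataset and train the model.
# data_loader is not defined in the original; random placeholder data is used here.
inputs_all = torch.randn(256, 1, 100)
labels_all = torch.randint(0, 10, (256,))
data_loader = DataLoader(TensorDataset(inputs_all, labels_all), batch_size=32, shuffle=True)

for epoch in range(100):
    for i, (inputs, labels) in enumerate(data_loader):
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
```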
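The input size of `fc1` depends on the sequence length left after both conv/pool stages, and it is easy to get wrong. The PyTorch docs give the output length of `nn.Conv1d` and `nn.MaxPool1d` as `L_out = floor((L_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride) + 1`, where `stride` defaults to 1 for `Conv1d` and to `kernel_size` for `MaxPool1d`. The sketch below applies that formula (the helper name `out_len` is our own) and cross-checks it against the real layers: for an input length of 100 the chain is 100 → 98 → 49 → 47 → 23, so the flattened size is 32 × 23 = 736, while 47 is only the length before the second pooling.

```python
import torch
import torch.nn as nn


def out_len(l_in: int, kernel_size: int, stride: int = 1,
            padding: int = 0, dilation: int = 1) -> int:
    """Output length of Conv1d / MaxPool1d (floor mode), per the PyTorch docs formula."""
    return (l_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


L = 100
L = out_len(L, kernel_size=3)            # conv1 -> 98
L = out_len(L, kernel_size=2, stride=2)  # pool  -> 49
L = out_len(L, kernel_size=3)            # conv2 -> 47
L = out_len(L, kernel_size=2, stride=2)  # pool  -> 23
print(L, 32 * L)  # 23 736

# Cross-check against the actual layers with a dummy (B, C, W) = (1, 1, 100) input
features = nn.Sequential(
    nn.Conv1d(1, 16, kernel_size=3), nn.ReLU(), nn.MaxPool1d(2),
    nn.Conv1d(16, 32, kernel_size=3), nn.ReLU(), nn.MaxPool1d(2),
)
with torch.no_grad():
    y = features(torch.zeros(1, 1, 100))
print(y.shape)  # torch.Size([1, 32, 23])
```

Alternatively, `nn.LazyLinear(64)` infers the input size of the first fully connected layer on the first forward pass, which avoids hard-coding it at all.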