Method 1:

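Meta has open-sourced Llama 2. Anyone can obtain the model by applying on the Meta AI website, accepting the license, and providing an email address. Meta then sends a download link by email.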

Download Llama 2

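- Get the download.sh file and save it on your Mac.

- Open the Mac terminal and run chmod +x ./download.sh to make the script executable.

- Run ./download.sh to start the download.

- Copy the download link from the email and paste it into the terminal.

- Download only 13B-chat.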
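
After the download finishes, it can be worth confirming that the files arrived intact. The sketch below assumes the model folder contains a checklist.chk file in md5sum format (one `<md5>  <filename>` pair per line). That file name, its layout, and the llama-2-13b-chat folder name are assumptions about what download.sh leaves behind, so adjust them to match what is actually on disk.

```python
import hashlib
from pathlib import Path


def md5_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so multi-gigabyte weights never sit fully in RAM."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checklist(model_dir, checklist_name="checklist.chk"):
    """Return {filename: True/False} for every entry in the checklist.

    Assumes md5sum-style lines: "<hex digest>  <file name>".
    A missing file counts as a failure.
    """
    model_dir = Path(model_dir)
    results = {}
    for line in (model_dir / checklist_name).read_text().splitlines():
        if not line.strip():
            continue
        expected, name = line.split(maxsplit=1)
        name = name.strip().lstrip("*")  # md5sum marks binary mode with '*'
        target = model_dir / name
        results[name] = target.is_file() and md5_of(target) == expected.lower()
    return results


if __name__ == "__main__":
    # Hypothetical location; point this at your own download folder.
    folder = Path("llama-2-13b-chat")
    if (folder / "checklist.chk").is_file():
        for name, ok in verify_checklist(folder).items():
            print(f"{'OK  ' if ok else 'FAIL'} {name}")
    else:
        print(f"No checklist.chk found in {folder}")
```

Any entry reported as FAIL is a candidate for re-downloading before you spend time on conversion.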

Install system dependencies

The Xcode command line tools are required to compile C++ projects. If you don't have them, run:

xcode-select --install

Next, install the dependencies needed to build C++ projects.

brew install pkgconfig cmake
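
Before moving on, a quick check that the build tools are reachable can save a failed compile later. This is a minimal sketch using only the standard library. The tool list is taken from the steps in this guide, and it assumes Homebrew's pkgconfig formula exposes the executable as pkg-config.

```python
import shutil

# Tool names taken from the steps in this guide; "pkg-config" as the
# executable name for the pkgconfig formula is an assumption.
REQUIRED_TOOLS = ("xcode-select", "brew", "cmake", "pkg-config", "make", "git")


def find_tools(names=REQUIRED_TOOLS):
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    for name, path in find_tools().items():
        print(f"{name:14s} {path or 'NOT FOUND'}")
```

Anything printed as NOT FOUND should be installed (or added to PATH) before compiling.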

Finally, install Torch.

If you don't have python3 installed, install it with:

brew install python@3.11

Create a virtual environment like this:

/opt/homebrew/bin/python3.11 -m venv venv

Activate the venv:

source venv/bin/activate

Install PyTorch:

pip install --pre torch torchvision --extra-index-url https://download.pytorch.org/whl/nightly/cpu

Compile llama.cpp

Clone llama.cpp

git clone https://github.com/ggerganov/llama.cpp.git

Install the Python dependencies (run this from inside the llama.cpp directory):

pip3 install -r requirements.txt

Compile

LLAMA_METAL=1 make

If your machine supports two architectures (x86_64 and arm64), you can force an arm64 build with:

arch -arm64 make
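
If you are unsure which case applies, the sketch below checks whether the current process is being translated by Rosetta 2 (an x86_64 shell on Apple Silicon), which is the situation where forcing arm64 matters. It relies on the macOS sysctl key sysctl.proc_translated. The helper names are hypothetical, and on non-macOS systems the check simply reports False.

```python
import platform
import subprocess


def running_under_rosetta():
    """Return True if this process is translated by Rosetta 2, else False.

    Reads the macOS sysctl key sysctl.proc_translated; on other systems,
    or if the key is missing, this reports False.
    """
    try:
        out = subprocess.run(
            ["sysctl", "-n", "sysctl.proc_translated"],
            capture_output=True, text=True, check=False,
        )
    except OSError:
        return False
    return out.returncode == 0 and out.stdout.strip() == "1"


def suggested_make_command():
    """Pick the build command that matches the current shell."""
    if platform.system() == "Darwin" and running_under_rosetta():
        # An x86_64 shell on Apple Silicon: force a native arm64 build.
        return ["arch", "-arm64", "make"]
    return ["make"]


if __name__ == "__main__":
    print("machine:", platform.machine())
    print("suggested:", " ".join(suggested_make_command()))
```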

Move the downloaded 13B model into the models folder of the llama.cpp project.

Convert the model to ggml format

The conversion differs between 13B and 70B. convert-pth-to-ggml.py is deprecated; use convert.py instead.

13B-chat

python3 convert.py --outfile ./models/llama-2-13b-chat/ggml-model-f16.bin --outtype f16 ./models/llama-2-13b-chat

Quantize the model:

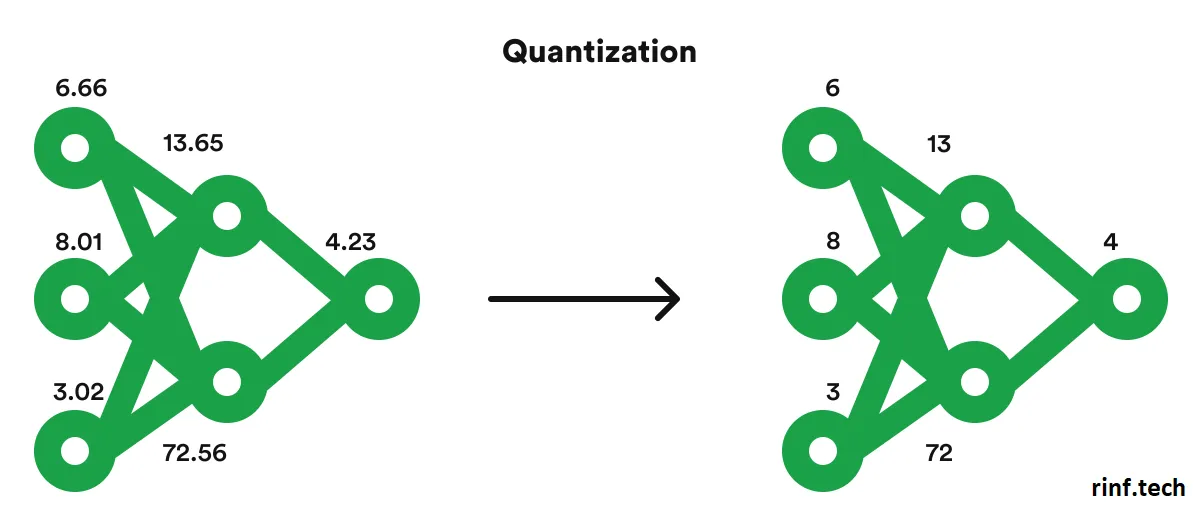

To run these large LLMs on a small laptop, we need to quantize the converted model with the following commands. Quantization converts the model's weights from float16 to int4, which needs far less memory at run time and costs only a small loss in quality.
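
To make the float16-to-int4 step less abstract, here is a minimal sketch of block-wise 4-bit quantization. It is a simplified illustration, not llama.cpp's actual q4_0 code: the block layout (32 weights sharing one 16-bit scale) follows q4_0, but the rounding scheme here is a plain symmetric one chosen for readability. The size estimate uses the 13.02 B parameter count that the loader log later in this guide reports.

```python
BLOCK_SIZE = 32  # weights per block, as in q4_0


def quantize_block(values):
    """Map floats to 4-bit signed ints in [-8, 7] with one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quants = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the stored scale and 4-bit ints."""
    return [scale * q for q in quants]


def bits_per_weight(block_size=BLOCK_SIZE, weight_bits=4, scale_bits=16):
    """Average storage cost per weight, including the per-block scale."""
    return (block_size * weight_bits + scale_bits) / block_size


def model_size_gib(n_params, bpw):
    """Approximate file size in GiB for n_params weights at bpw bits each."""
    return n_params * bpw / 8 / 2**30


if __name__ == "__main__":
    # Made-up weights, only to show the round trip.
    block = [((i * 37) % 64 - 32) / 100 for i in range(BLOCK_SIZE)]
    scale, quants = quantize_block(block)
    restored = dequantize_block(scale, quants)
    max_err = max(abs(a - b) for a, b in zip(block, restored))
    print(f"max abs error: {max_err:.4f} (scale {scale:.4f})")
    print(f"bits per weight: {bits_per_weight()}")
    print(f"13.02B params at f16:  {model_size_gib(13.02e9, 16):.2f} GiB")
    print(f"13.02B params at q4_0: {model_size_gib(13.02e9, 4.5):.2f} GiB")
```

The per-block scale is why the effective cost is 4.5 bits per weight rather than 4, which lines up with the 4.50 BPW and 6.82 GiB figures in the log.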

13B-chat:

./quantize ./models/llama-2-13b-chat/ggml-model-f16.bin ./models/llama-2-13b-chat/ggml-model-q4_0.bin q4_0

Run the model

./main -m ./models/llama-2-13b-chat/ggml-model-q4_0.bin -t 4 -c 2048 -n 2048 --color -i -r '### Question:' -p '### Question:'

You can enable GPU inference with the -ngl 1 command-line argument. Any value greater than 0 offloads computation to the GPU. For example:

./main -m ./models/llama-2-13b-chat/ggml-model-q4_0.bin -t 4 -c 2048 -n 2048 --color -i -ngl 1 -r '### Question:' -p '### Question:'

In testing on my Mac, this was about 25% faster than CPU only.
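
The -c 2048 flag sets the context size, and the key/value cache grows linearly with it, on top of the memory needed for the weights. As a rough sketch, assuming a 16-bit cache and no grouped-query attention (which matches n_gqa = 1 in the 13B log under Method 2), the cache size can be estimated as below. With the 13B hyperparameters from that log (n_layer = 40, n_embd = 5120) it reproduces the reported kv self size = 1600.00 MB.

```python
def kv_cache_mib(n_layer, n_ctx, n_embd, bytes_per_value=2):
    """Size of the key/value cache in MiB.

    Each layer stores one key and one value vector of n_embd numbers
    per context position; bytes_per_value=2 assumes 16-bit floats.
    """
    return 2 * n_layer * n_ctx * n_embd * bytes_per_value / 2**20


if __name__ == "__main__":
    # n_layer=40 and n_embd=5120 are the 13B values printed in the log.
    for n_ctx in (512, 2048, 4096):
        print(f"-c {n_ctx}: {kv_cache_mib(40, n_ctx, 5120):.0f} MiB")
```

If memory is tight, lowering -c is therefore one of the cheaper knobs to turn.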

Method 2:

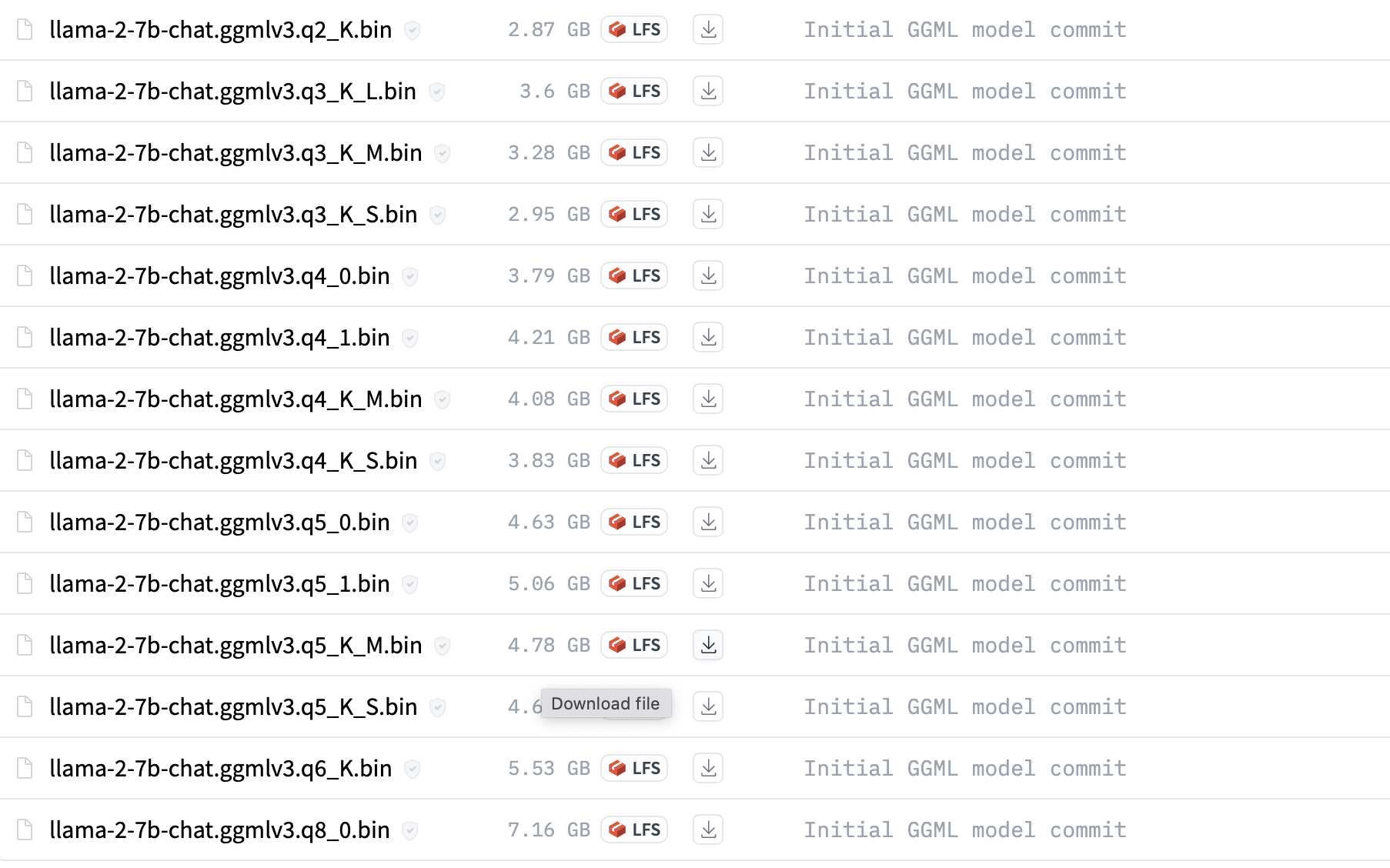

Download a quantized model in GGUF format directly from Hugging Face

https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGUF/tree/main

Install the system dependencies the same way as in Method 1.

Compile llama.cpp the same way as in Method 1.

If you downloaded a model in ggml format, run the following command to convert it:

python convert-llama-ggml-to-gguf.py --eps 1e-5 -i ./models/llama-2-13b-chat.ggmlv3.q4_0.bin -o ./models/llama-2-13b-chat.ggmlv3.q4_0.gguf.bin

(llama) C:\Users\Harry\PycharmProjects\llama.cpp>python convert-llama-ggml-to-gguf.py --eps 1e-5 -i ./models/llama-2-13b-chat.ggmlv3.q4_0.bin -o ./models/llama-2-13b-chat.ggmlv3.q4_0.gguf.bin

* Using config: Namespace(input=WindowsPath('models/llama-2-13b-chat.ggmlv3.q4_0.bin'), output=WindowsPath('models/llama-2-13b-chat.ggmlv3.q4_0.gguf.bin'), name=None, desc=None, gqa=1, eps='1e-5', context_length=2048, model_metadata_dir=None, vocab_dir=None, vocabtype='spm')

=== WARNING === Be aware that this conversion script is best-effort. Use a native GGUF model if possible. === WARNING ===

- Note: If converting LLaMA2, specifying "--eps 1e-5" is required. 70B models also need "--gqa 8".

* Scanning GGML input file

* File format: GGJTv3 with ftype MOSTLY_Q4_0

* GGML model hyperparameters: <Hyperparameters: n_vocab=32000, n_embd=5120, n_mult=256, n_head=40, n_layer=40, n_rot=128, n_ff=13824, ftype=MOSTLY_Q4_0>

=== WARNING === Special tokens may not be converted correctly. Use --model-metadata-dir if possible === WARNING ===

* Preparing to save GGUF file

This gguf file is for Little Endian only

* Adding model parameters and KV items

* Adding 32000 vocab item(s)

* Adding 363 tensor(s)

gguf: write header

gguf: write metadata

gguf: write tensors

* Successful completion. Output saved to: models\llama-2-13b-chat.ggmlv3.q4_0.gguf.bin

The Quantize step is not needed here, because the downloaded model is already quantized.
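
Because the converted file above still ends in .bin, the name alone does not tell you whether it is really GGUF. A minimal sketch for checking is to read the first four bytes: GGUF files start with the ASCII magic GGUF. The older magics listed here are an assumption based on the GGML-family formats storing a little-endian 32-bit magic (so 'ggjt' appears on disk as tjgg). The function name is hypothetical.

```python
def detect_model_format(path):
    """Guess the container format from the first four bytes of the file."""
    with open(path, "rb") as handle:
        magic = handle.read(4)
    if magic == b"GGUF":
        return "gguf"
    # Assumed legacy magics: 'ggjt', 'ggml', 'ggmf' written as little-endian
    # 32-bit integers, which reverses the byte order on disk.
    if magic in (b"tjgg", b"lmgg", b"fmgg"):
        return "ggml"
    return "unknown"


if __name__ == "__main__":
    import sys

    # Usage: python check_format.py <model file> [<model file> ...]
    for model_path in sys.argv[1:]:
        print(f"{model_path}: {detect_model_format(model_path)}")
```

A result of "ggml" means the file still needs the conversion step above before a current llama.cpp build can load it.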

Run the model

./main -m ./models/llama-2-13b-chat.ggmlv3.q4_0.gguf.bin --color --ctx_size 2048 -n -1 -ins -b 256 --top_k 10000 --temp 0.2 --repeat_penalty 1.1 -t 8

~/PycharmProjects/llama.cpp $ ./main -m ./models/llama-2-13b-chat.ggmlv3.q4_0.gguf.bin --color --ctx_size 2048 -n -1 -ins -b 256 --top_k 10000 --temp 0.2 --repeat_penalty 1.1 -t 8

Log start

main: build = 0 (unknown)

main: built with cc (GCC) 13.2.0 for x86_64-w64-mingw32

main: seed = 1699106015

llama_model_loader: loaded meta data with 19 key-value pairs and 363 tensors from ./models/llama-2-13b-chat.ggmlv3.q4_0.gguf.bin (version GGUF V3 (latest))

llama_model_loader: - tensor 0: token_embd.weight q4_0 [ 5120, 32000, 1, 1 ]

llama_model_loader: - tensor 1: output_norm.weight f32 [ 5120, 1, 1, 1 ]

llama_model_loader: - tensor 2: output.weight q4_0 [ 5120, 32000, 1, 1 ]

llama_model_loader: - tensor 3: blk.0.attn_q.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 4: blk.0.attn_k.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 5: blk.0.attn_v.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 6: blk.0.attn_output.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 7: blk.0.attn_norm.weight f32 [ 5120, 1, 1, 1 ]

llama_model_loader: - tensor 8: blk.0.ffn_gate.weight q4_0 [ 5120, 13824, 1, 1 ]

llama_model_loader: - tensor 9: blk.0.ffn_down.weight q4_0 [ 13824, 5120, 1, 1 ]

llama_model_loader: - tensor 10: blk.0.ffn_up.weight q4_0 [ 5120, 13824, 1, 1 ]

llama_model_loader: - tensor 11: blk.0.ffn_norm.weight f32 [ 5120, 1, 1, 1 ]

llama_model_loader: - tensor 12: blk.1.attn_q.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 13: blk.1.attn_k.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 14: blk.1.attn_v.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 15: blk.1.attn_output.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 16: blk.1.attn_norm.weight f32 [ 5120, 1, 1, 1 ]

llama_model_loader: - tensor 17: blk.1.ffn_gate.weight q4_0 [ 5120, 13824, 1, 1 ]

llama_model_loader: - tensor 18: blk.1.ffn_down.weight q4_0 [ 13824, 5120, 1, 1 ]

llama_model_loader: - tensor 19: blk.1.ffn_up.weight q4_0 [ 5120, 13824, 1, 1 ]

llama_model_loader: - tensor 20: blk.1.ffn_norm.weight f32 [ 5120, 1, 1, 1 ]

llama_model_loader: - tensor 21: blk.2.attn_q.weight q4_0 [ 5120, 5120, 1, 1 ]

....

llama_model_loader: - tensor 351: blk.38.ffn_down.weight q4_0 [ 13824, 5120, 1, 1 ]

llama_model_loader: - tensor 352: blk.38.ffn_up.weight q4_0 [ 5120, 13824, 1, 1 ]

llama_model_loader: - tensor 353: blk.38.ffn_norm.weight f32 [ 5120, 1, 1, 1 ]

llama_model_loader: - tensor 354: blk.39.attn_q.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 355: blk.39.attn_k.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 356: blk.39.attn_v.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 357: blk.39.attn_output.weight q4_0 [ 5120, 5120, 1, 1 ]

llama_model_loader: - tensor 358: blk.39.attn_norm.weight f32 [ 5120, 1, 1, 1 ]

llama_model_loader: - tensor 359: blk.39.ffn_gate.weight q4_0 [ 5120, 13824, 1, 1 ]

llama_model_loader: - tensor 360: blk.39.ffn_down.weight q4_0 [ 13824, 5120, 1, 1 ]

llama_model_loader: - tensor 361: blk.39.ffn_up.weight q4_0 [ 5120, 13824, 1, 1 ]

llama_model_loader: - tensor 362: blk.39.ffn_norm.weight f32 [ 5120, 1, 1, 1 ]

llama_model_loader: - kv 0: general.architecture str

llama_model_loader: - kv 1: general.name str

llama_model_loader: - kv 2: general.description str

llama_model_loader: - kv 3: general.file_type u32

llama_model_loader: - kv 4: llama.context_length u32

llama_model_loader: - kv 5: llama.embedding_length u32

llama_model_loader: - kv 6: llama.block_count u32

llama_model_loader: - kv 7: llama.feed_forward_length u32

llama_model_loader: - kv 8: llama.rope.dimension_count u32

llama_model_loader: - kv 9: llama.attention.head_count u32

llama_model_loader: - kv 10: llama.attention.head_count_kv u32

llama_model_loader: - kv 11: llama.attention.layer_norm_rms_epsilon f32

llama_model_loader: - kv 12: tokenizer.ggml.model str

llama_model_loader: - kv 13: tokenizer.ggml.tokens arr

llama_model_loader: - kv 14: tokenizer.ggml.scores arr

llama_model_loader: - kv 15: tokenizer.ggml.token_type arr

llama_model_loader: - kv 16: tokenizer.ggml.unknown_token_id u32

llama_model_loader: - kv 17: tokenizer.ggml.bos_token_id u32

llama_model_loader: - kv 18: tokenizer.ggml.eos_token_id u32

llama_model_loader: - type f32: 81 tensors

llama_model_loader: - type q4_0: 282 tensors

llm_load_vocab: special tokens definition check successful ( 259/32000 ).

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = llama

llm_load_print_meta: vocab type = SPM

llm_load_print_meta: n_vocab = 32000

llm_load_print_meta: n_merges = 0

llm_load_print_meta: n_ctx_train = 2048

llm_load_print_meta: n_embd = 5120

llm_load_print_meta: n_head = 40

llm_load_print_meta: n_head_kv = 40

llm_load_print_meta: n_layer = 40

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-05

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 13824

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 2048

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = 13B

llm_load_print_meta: model ftype = mostly Q4_0

llm_load_print_meta: model params = 13.02 B

llm_load_print_meta: model size = 6.82 GiB (4.50 BPW)

llm_load_print_meta: general.name = llama-2-13b-chat.ggmlv3.q4_0.bin

llm_load_print_meta: BOS token = 1 '<s>'

llm_load_print_meta: EOS token = 2 '</s>'

llm_load_print_meta: UNK token = 0 '<unk>'

llm_load_print_meta: LF token = 13 '<0x0A>'

llm_load_tensors: ggml ctx size = 0.13 MB

llm_load_tensors: mem required = 6983.75 MB

....................................................................................................

llama_new_context_with_model: n_ctx = 2048

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_new_context_with_model: kv self size = 1600.00 MB

llama_build_graph: non-view tensors processed: 924/924

llama_new_context_with_model: compute buffer total size = 103.63 MB

system_info: n_threads = 8 / 16 | AVX = 1 | AVX2 = 1 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 |

main: interactive mode on.

Reverse prompt: '### Instruction:'

sampling:

repeat_last_n = 64, repeat_penalty = 1.100, frequency_penalty = 0.000, presence_penalty = 0.000

top_k = 10000, tfs_z = 1.000, top_p = 0.950, min_p = 0.050, typical_p = 1.000, temp = 0.200

mirostat = 0, mirostat_lr = 0.100, mirostat_ent = 5.000

generate: n_ctx = 2048, n_batch = 256, n_predict = -1, n_keep = 1

== Running in interactive mode. ==

- Press Ctrl+C to interject at any time.

- Press Return to return control to LLaMa.

- To return control without starting a new line, end your input with '/'.

- If you want to submit another line, end your input with '\'.

References

A comprehensive guide to running Llama 2 locally – Replicate