Linux kernel version: 5.10

1 Queue initialization: alloc_netdev_mqs

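The kernel's network device structure, struct net_device, uses two members to describe the number of queues: num_tx_queues is the maximum number of TX queues (the number allocated), while real_num_tx_queues is the number actually in use. In Intel's ixgbe driver, for example, the queue count passed when the network device is allocated is MAX_TX_QUEUES.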

    static int ixgbe_probe(struct pci_dev *pdev, const struct pci_device_id *ent)
    {
        unsigned int indices = MAX_TX_QUEUES;

        netdev = alloc_etherdev_mq(sizeof(struct ixgbe_adapter), indices);
        if (!netdev) {
            err = -ENOMEM;
            goto err_alloc_etherdev;
        }
        ...
        /* setup the private structure */
        err = ixgbe_sw_init(adapter, ii);
        if (err)
            goto err_sw_init;
        ...
        err = ixgbe_init_interrupt_scheme(adapter); /* --> ixgbe_set_num_queues() */
        if (err)
            goto err_sw_init;
        ...
    }

alloc_etherdev_mq() passes indices down to alloc_netdev_mqs() as both the TX and the RX queue count, and alloc_netdev_mqs() sets both num_tx_queues and real_num_tx_queues to that value, i.e. MAX_TX_QUEUES:

    struct net_device *alloc_netdev_mqs(int sizeof_priv, const char *name,
            unsigned char name_assign_type,
            void (*setup)(struct net_device *),
            unsigned int txqs, unsigned int rxqs)
    {
        ...
        dev->num_tx_queues = txqs;
        dev->real_num_tx_queues = txqs;
        if (netif_alloc_netdev_queues(dev))
            goto free_all;
        ...
    }

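The macro MAX_TX_QUEUES is defined as 64, so at most 64 TX queues are allocated. The number of queues actually usable, however, is real_num_tx_queues, and its value is updated later.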

    #define IXGBE_MAX_FDIR_INDICES 63 /* based on q_vector limit */
    #define MAX_TX_QUEUES (IXGBE_MAX_FDIR_INDICES + 1)

2 Computing the actual TX queue count: ixgbe_set_num_queues

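ixgbe_set_num_queues sets the number of queues according to the enabled features. Note the order below: it first tries the configuration with the most features enabled and ends with the one with the fewest. This way, queues are allocated for the richest feature combination that is actually enabled.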
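The fallback order can be modeled in a few lines of C. This is a simplified sketch, not driver code: struct sketch_adapter, enum queue_mode and the try_* helpers are hypothetical, each helper only checks the condition that gates the corresponding ixgbe function, and no queue counts are computed. In the real driver the two DCB steps are compiled only when CONFIG_IXGBE_DCB is set.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical labels for the strategy that claimed the configuration. */
enum queue_mode { MODE_BASE, MODE_DCB_SRIOV, MODE_DCB, MODE_SRIOV, MODE_RSS };

/* Hypothetical, trimmed-down adapter: only the gating inputs. */
struct sketch_adapter {
	int hw_tcs;          /* number of traffic classes, like adapter->hw_tcs */
	bool sriov_enabled;  /* stands in for IXGBE_FLAG_SRIOV_ENABLED */
	enum queue_mode mode;
};

typedef bool (*queue_strategy)(struct sketch_adapter *a);

/* Gate of ixgbe_set_dcb_sriov_queues(): needs DCB (tcs > 1) and SR-IOV. */
static bool try_dcb_sriov(struct sketch_adapter *a)
{
	if (a->hw_tcs <= 1 || !a->sriov_enabled)
		return false;
	a->mode = MODE_DCB_SRIOV;
	return true;
}

/* Gate of ixgbe_set_dcb_queues(): needs DCB only. */
static bool try_dcb(struct sketch_adapter *a)
{
	if (a->hw_tcs <= 1)
		return false;
	a->mode = MODE_DCB;
	return true;
}

/* Gate of ixgbe_set_sriov_queues(): needs SR-IOV only. */
static bool try_sriov(struct sketch_adapter *a)
{
	if (!a->sriov_enabled)
		return false;
	a->mode = MODE_SRIOV;
	return true;
}

/* ixgbe_set_rss_queues() always applies, so it is the final fallback. */
static bool try_rss(struct sketch_adapter *a)
{
	a->mode = MODE_RSS;
	return true;
}

void sketch_set_num_queues(struct sketch_adapter *a)
{
	/* Richest feature combination first, plain RSS last. */
	static const queue_strategy order[] = {
		try_dcb_sriov, try_dcb, try_sriov, try_rss,
	};

	assert(a != NULL);
	a->mode = MODE_BASE; /* base case before any strategy runs */
	for (size_t i = 0; i < sizeof(order) / sizeof(order[0]); i++)
		if (order[i](a))
			return;
}
```

Because the loop stops at the first strategy that claims the configuration, a device with both DCB and SR-IOV enabled never reaches the SR-IOV-only or RSS branches.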

    static void ixgbe_set_num_queues(struct ixgbe_adapter *adapter)
    {
        /* Start with base case */
        adapter->num_rx_queues = 1;
        adapter->num_tx_queues = 1;
        adapter->num_xdp_queues = 0;
        adapter->num_rx_pools = 1;
        adapter->num_rx_queues_per_pool = 1;

    #ifdef CONFIG_IXGBE_DCB
        if (ixgbe_set_dcb_sriov_queues(adapter))
            return;

        if (ixgbe_set_dcb_queues(adapter))
            return;

    #endif
        if (ixgbe_set_sriov_queues(adapter))
            return;

        ixgbe_set_rss_queues(adapter);
    }

Taking the 82599 NIC as an example, it uses the following TX queue configurations:

2.1 SR-IOV and DCB queues: ixgbe_set_dcb_sriov_queues

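First, in the initialization function, the maximum number of RSS queues is the smaller of 16 (IXGBE_MAX_RSS_INDICES, the 82599 limit) and the number of online CPUs. The VMDq limit is initialized to 1.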

    enum ixgbe_ring_f_enum {
        RING_F_NONE = 0,
        RING_F_VMDQ,  /* SR-IOV uses the same ring feature */
        RING_F_RSS,
        RING_F_FDIR,
    #ifdef IXGBE_FCOE
        RING_F_FCOE,
    #endif /* IXGBE_FCOE */
        RING_F_ARRAY_SIZE  /* must be last in enum set */
    };

    #define IXGBE_MAX_RSS_INDICES       16
    #define IXGBE_MAX_RSS_INDICES_X550  63
    #define IXGBE_MAX_VMDQ_INDICES      64
    #define IXGBE_MAX_FDIR_INDICES      63  /* based on q_vector limit */
    #define IXGBE_MAX_FCOE_INDICES      8
    #define MAX_RX_QUEUES               (IXGBE_MAX_FDIR_INDICES + 1)
    #define MAX_TX_QUEUES               (IXGBE_MAX_FDIR_INDICES + 1)
    #define MAX_XDP_QUEUES              (IXGBE_MAX_FDIR_INDICES + 1)
    #define IXGBE_MAX_L2A_QUEUES        4
    #define IXGBE_BAD_L2A_QUEUE         3
    #define IXGBE_MAX_MACVLANS          63
    ...

    static int ixgbe_sw_init(struct ixgbe_adapter *adapter,
                             const struct ixgbe_info *ii)
    {
        ...
        /* Set common capability flags and settings */
        rss = min_t(int, ixgbe_max_rss_indices(adapter), num_online_cpus());
        adapter->ring_feature[RING_F_RSS].limit = rss;
        adapter->ring_feature[RING_F_VMDQ].limit = 1;
        ...
    }

When a macvlan device is opened (macvlan_open) on a lower device with L2 forwarding offload enabled, ixgbe_fwd_add is called to reserve a pool for it, and it finishes by rebuilding the traffic class (TC) and ring setup through ixgbe_setup_tc. If every existing pool is already in use, the driver tries to increase the number of pools. If the pool count has already reached its maximum, however, it returns -EBUSY. This happens when a) DCB is enabled and the pool count is greater than or equal to MAX_TX_QUEUES / tcs, the number of queues available to each traffic class, or b) the pool count is greater than the MACVLAN limit IXGBE_MAX_MACVLANS (63).

    static int macvlan_open(struct net_device *dev)
    {
        ...
        if (lowerdev->features & NETIF_F_HW_L2FW_DOFFLOAD)
            vlan->accel_priv =
                lowerdev->netdev_ops->ndo_dfwd_add_station(lowerdev, dev);
        ...
    }

    static const struct net_device_ops ixgbe_netdev_ops = {
        .ndo_open             = ixgbe_open,
        .ndo_stop             = ixgbe_close,
        .ndo_start_xmit       = ixgbe_xmit_frame,
        ...
        .ndo_dfwd_add_station = ixgbe_fwd_add,
        ...
    };

    static void *ixgbe_fwd_add(struct net_device *pdev, struct net_device *vdev)
    {
        ...
        pool = find_first_zero_bit(adapter->fwd_bitmask, adapter->num_rx_pools);
        if (pool == adapter->num_rx_pools) {
            u16 used_pools = adapter->num_vfs + adapter->num_rx_pools;
            u16 reserved_pools;

            if (((adapter->flags & IXGBE_FLAG_DCB_ENABLED) &&
                 adapter->num_rx_pools >= (MAX_TX_QUEUES / tcs)) ||
                adapter->num_rx_pools > IXGBE_MAX_MACVLANS)
                return ERR_PTR(-EBUSY);

The PF and every VF each occupy one pool, so the number of pools in use, used_pools, must stay below IXGBE_MAX_VF_FUNCTIONS (64), the maximum number of VFs.

            /* Hardware has a limited number of available pools. Each VF,
             * and the PF require a pool. Check to ensure we don't
             * attempt to use more then the available number of pools.
             */
            if (used_pools >= IXGBE_MAX_VF_FUNCTIONS)
                return ERR_PTR(-EBUSY);

The fewer pools are enabled, the more queues each pool gets. The code below therefore reserves pools in three tiers of 16, 32 and 64 pools (4, 2 and 1 queues per pool respectively) and adds the number of reserved pools to the VMDq limit.

            /* Enable VMDq flag so device will be set in VM mode */
            adapter->flags |= IXGBE_FLAG_VMDQ_ENABLED | IXGBE_FLAG_SRIOV_ENABLED;

            /* Try to reserve as many queues per pool as possible,
             * we start with the configurations that support 4 queues
             * per pools, followed by 2, and then by just 1 per pool.
             */
            if (used_pools < 32 && adapter->num_rx_pools < 16)
                reserved_pools = min_t(u16,
                                       32 - used_pools,
                                       16 - adapter->num_rx_pools);
            else if (adapter->num_rx_pools < 32)
                reserved_pools = min_t(u16,
                                       64 - used_pools,
                                       32 - adapter->num_rx_pools);
            else
                reserved_pools = 64 - used_pools;

            adapter->ring_feature[RING_F_VMDQ].limit += reserved_pools;

            /* Force reinit of ring allocation with VMDQ enabled */
            err = ixgbe_setup_tc(pdev, adapter->hw_tcs);
            ...
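The -EBUSY checks and the three-tier reservation in ixgbe_fwd_add reduce to a small pure function. This is a sketch under stated assumptions: sketch_reserve_pools() is a hypothetical helper whose parameters stand in for adapter->num_vfs, adapter->num_rx_pools, the DCB flag and tcs; it returns -1 where ixgbe_fwd_add() would return ERR_PTR(-EBUSY), and otherwise the number of pools that would be added to the VMDq limit.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_TX_QUEUES          64 /* IXGBE_MAX_FDIR_INDICES + 1 */
#define IXGBE_MAX_MACVLANS     63
#define IXGBE_MAX_VF_FUNCTIONS 64

static int min_int(int a, int b)
{
	return a < b ? a : b;
}

/* Hypothetical helper: -1 means "would return -EBUSY". */
int sketch_reserve_pools(int num_vfs, int num_rx_pools, bool dcb_enabled, int tcs)
{
	int used_pools = num_vfs + num_rx_pools; /* the PF and every VF use a pool */

	assert(!dcb_enabled || tcs > 0); /* avoid dividing by zero below */

	/* a) with DCB, pools are capped at the queues available per TC */
	if ((dcb_enabled && num_rx_pools >= MAX_TX_QUEUES / tcs) ||
	    /* b) no more pools than IXGBE_MAX_MACVLANS */
	    num_rx_pools > IXGBE_MAX_MACVLANS)
		return -1;

	/* hardware pool limit */
	if (used_pools >= IXGBE_MAX_VF_FUNCTIONS)
		return -1;

	/* tier 1, 4 queues per pool: at most 16 PF pools, 32 pools in total */
	if (used_pools < 32 && num_rx_pools < 16)
		return min_int(32 - used_pools, 16 - num_rx_pools);
	/* tier 2, 2 queues per pool: at most 32 PF pools, 64 pools in total */
	if (num_rx_pools < 32)
		return min_int(64 - used_pools, 32 - num_rx_pools);
	/* tier 3, 1 queue per pool: whatever is left of the 64 pools */
	return 64 - used_pools;
}
```

With no VFs and only the PF's own pool (num_rx_pools = 1), for example, the first tier applies and 15 pools are reserved, raising the VMDq limit from 1 to 16.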

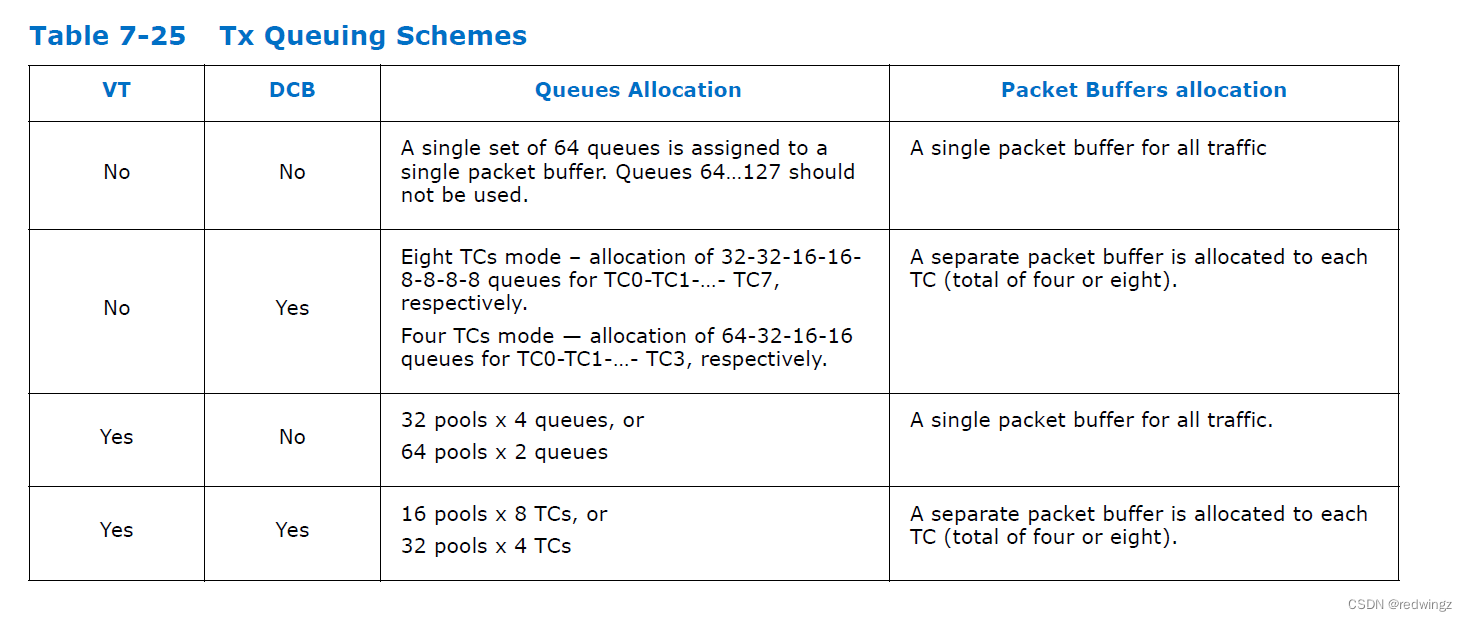

With both DCB and SR-IOV enabled, the number of TCs on the 82599 is either 8 or 4.
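The pool arithmetic of ixgbe_set_dcb_sriov_queues() can be reproduced in isolation. This sketch is hypothetical: sketch_dcb_sriov() takes the VMDq limit, the VMDq offset and tcs as plain integers, leaves out the masks, and asserts that the offset is smaller than the pool cap (16 or 32). That assertion is an assumption of the sketch, added so the unsigned subtraction cannot wrap; it is not a check shown in the driver excerpt.

```c
#include <assert.h>

#define MAX_TX_QUEUES 64 /* IXGBE_MAX_FDIR_INDICES + 1 */

struct dcb_sriov_result {
	unsigned int pools;     /* adapter->num_rx_pools */
	unsigned int tx_queues; /* adapter->num_tx_queues */
};

static unsigned int min_uint(unsigned int a, unsigned int b)
{
	return a < b ? a : b;
}

struct dcb_sriov_result sketch_dcb_sriov(unsigned int vmdq_limit,
					 unsigned int offset, unsigned int tcs)
{
	struct dcb_sriov_result r;
	/* 8 TCs: 16 pools x 8 queues; 4 TCs: 32 pools x 4 queues */
	unsigned int cap = (tcs > 4) ? 16 : 32;
	unsigned int vmdq_i;

	assert(tcs > 1);      /* the driver returns false for tcs <= 1 */
	assert(offset < cap); /* sketch assumption, see lead-in */

	/* each pool holds one queue per TC, so pools <= MAX_TX_QUEUES / tcs */
	vmdq_i = min_uint(vmdq_limit, MAX_TX_QUEUES / tcs);
	/* add the offset, apply the hardware cap, then remove the offset */
	vmdq_i = min_uint(vmdq_i + offset, cap) - offset;

	r.pools = vmdq_i;
	r.tx_queues = vmdq_i * tcs;
	return r;
}
```

With 8 TCs, no offset and a VMDq limit of 16, for instance, the pool count is cut to 8, which fills all 64 queues.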

    static bool ixgbe_set_dcb_sriov_queues(struct ixgbe_adapter *adapter)
    {
        u16 vmdq_i = adapter->ring_feature[RING_F_VMDQ].limit;
        u16 vmdq_m = 0;
        u8 tcs = adapter->hw_tcs;

        /* verify we have DCB queueing enabled before proceeding */
        if (tcs <= 1)
            return false;

        /* verify we have VMDq enabled before proceeding */
        if (!(adapter->flags & IXGBE_FLAG_SRIOV_ENABLED))
            return false;

Next the pool count is computed. Each pool holds one queue for every TC, so the pool count vmdq_i must not exceed MAX_TX_QUEUES / tcs. vmdq_i is then turned into a total pool count by adding the starting offset; with 8 TCs the total must be at most 16 pools, and with 4 TCs at most 32.

        /* limit VMDq instances on the PF by number of Tx queues */
        vmdq_i = min_t(u16, vmdq_i, MAX_TX_QUEUES / tcs);

        /* Add starting offset to total pool count */
        vmdq_i += adapter->ring_feature[RING_F_VMDQ].offset;

        /* 16 pools w/ 8 TC per pool */
        if (tcs > 4) {
            vmdq_i = min_t(u16, vmdq_i, 16);
            vmdq_m = IXGBE_82599_VMDQ_8Q_MASK;
        /* 32 pools w/ 4 TC per pool */
        } else {
            vmdq_i = min_t(u16, vmdq_i, 32);
            vmdq_m = IXGBE_82599_VMDQ_4Q_MASK;
        }

ixgbe does not support DCB, VMDq and RSS at the same time, so the code below disables RSS, the lowest-priority feature, and also ATR (the hash-based Flow Director mode). The actual TX queue count, adapter->num_tx_queues, equals the pool count multiplied by the number of queues per pool (each pool holds one queue for every TC).

        /* remove the starting offset from the pool count */
        vmdq_i -= adapter->ring_feature[RING_F_VMDQ].offset;

        /* save features for later use */
        adapter->ring_feature[RING_F_VMDQ].indices = vmdq_i;
        adapter->ring_feature[RING_F_VMDQ].mask = vmdq_m;

        /* We do not support DCB, VMDq, and RSS all simultaneously
         * so we will disable RSS since it is the lowest priority
         */
        adapter->ring_feature[RING_F_RSS].indices = 1;
        adapter->ring_feature[RING_F_RSS].mask = IXGBE_RSS_DISABLED_MASK;

        /* disable ATR as it is not supported when VMDq is enabled */
        adapter->flags &= ~IXGBE_FLAG_FDIR_HASH_CAPABLE;

        adapter->num_rx_pools = vmdq_i;
        adapter->num_rx_queues_per_pool = tcs;

        adapter->num_tx_queues = vmdq_i * tcs;
        adapter->num_xdp_queues = 0;
        adapter->num_rx_queues = vmdq_i * tcs;

        /* configure TC to queue mapping */
        for (i = 0; i < tcs; i++)
            netdev_set_tc_queue(adapter->netdev, i, 1, i);
        ...

2.2 DCB queues: ixgbe_set_dcb_queues

As before, at initialization the number of RSS queues is bounded by the number of online CPUs, with a maximum of 16 (IXGBE_MAX_RSS_INDICES).

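    static int ixgbe_sw_init(struct ixgbe_adapter *adapter,
                             const struct ixgbe_info *ii)
    {
        ...
        /* Set common capability flags and settings */
        rss = min_t(int, ixgbe_max_rss_indices(adapter), num_online_cpus());
        adapter->ring_feature[RING_F_RSS].limit = rss;
        ...
    }

The DCB queue setup function below first divides the TX queues evenly among the traffic classes (dev->num_tx_queues / tcs). The 82598EB supports only 8-TC mode, so the per-TC count is capped at 4. Other NICs such as the 82599 support both 8-TC and 4-TC modes: in 8-TC mode each TC gets at most 8 queues, and in 4-TC mode each TC can use up to 16 queues.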

    static bool ixgbe_set_dcb_queues(struct ixgbe_adapter *adapter)
    {
        struct net_device *dev = adapter->netdev;
        struct ixgbe_ring_feature *f;

        /* Map queue offset and counts onto allocated tx queues */
        tcs = adapter->hw_tcs;

        /* verify we have DCB queueing enabled before proceeding */
        if (tcs <= 1)
            return false;

        /* determine the upper limit for our current DCB mode */
        rss_i = dev->num_tx_queues / tcs;
        if (adapter->hw.mac.type == ixgbe_mac_82598EB) {
            /* 8 TC w/ 4 queues per TC */
            rss_i = min_t(u16, rss_i, 4);
            rss_m = IXGBE_RSS_4Q_MASK;
        } else if (tcs > 4) {
            /* 8 TC w/ 8 queues per TC */
            rss_i = min_t(u16, rss_i, 8);
            rss_m = IXGBE_RSS_8Q_MASK;
        } else {
            /* 4 TC w/ 16 queues per TC */
            rss_i = min_t(u16, rss_i, 16);
            rss_m = IXGBE_RSS_16Q_MASK;
        }

The result is then capped so that it does not exceed the RSS limit set at initialization. Finally, the per-TC queue count multiplied by the number of TCs gives the actual total TX queue count, and TC i is mapped to the rss_i queues starting at queue rss_i * i.

        /* set RSS mask and indices */
        f = &adapter->ring_feature[RING_F_RSS];
        rss_i = min_t(int, rss_i, f->limit);
        f->indices = rss_i;
        f->mask = rss_m;

        /* disable ATR as it is not supported when multiple TCs are enabled */
        adapter->flags &= ~IXGBE_FLAG_FDIR_HASH_CAPABLE;

        for (i = 0; i < tcs; i++)
            netdev_set_tc_queue(dev, i, rss_i, rss_i * i);

        adapter->num_tx_queues = rss_i * tcs;
        ...
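The per-TC computation can be checked with a short sketch. sketch_dcb_queues() is hypothetical: dev_num_tx_queues stands for dev->num_tx_queues (MAX_TX_QUEUES after allocation), rss_limit for ring_feature[RING_F_RSS].limit, and the RSS masks are left out.

```c
#include <assert.h>
#include <stdbool.h>

static int min_int(int a, int b)
{
	return a < b ? a : b;
}

/* Returns the total TX queue count and stores the per-TC count in *per_tc. */
int sketch_dcb_queues(int dev_num_tx_queues, int tcs, bool is_82598,
		      int rss_limit, int *per_tc)
{
	int rss_i;

	assert(tcs > 1); /* the driver returns false for tcs <= 1 */

	rss_i = dev_num_tx_queues / tcs;    /* even split across TCs */
	if (is_82598)
		rss_i = min_int(rss_i, 4);  /* 8 TC x 4 queues */
	else if (tcs > 4)
		rss_i = min_int(rss_i, 8);  /* 8 TC x 8 queues */
	else
		rss_i = min_int(rss_i, 16); /* 4 TC x 16 queues */

	rss_i = min_int(rss_i, rss_limit);  /* RSS limit: min(HW max, CPUs) */
	*per_tc = rss_i;

	/* TC i owns queues [rss_i * i, rss_i * (i + 1)), matching
	 * netdev_set_tc_queue(dev, i, rss_i, rss_i * i) */
	return rss_i * tcs;
}
```

On an 82599 with 8 TCs and 16 online CPUs, for example, each TC gets 8 queues and all 64 TX queues are used; with only 4 online CPUs each TC is cut to 4 queues, 32 in total.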

2.3 SR-IOV queues: ixgbe_set_sriov_queues

Again, in the initialization function the RSS and FDIR queue counts are bounded by the number of online CPUs, and the VMDq limit is initialized to 1.

    static int ixgbe_sw_init(struct ixgbe_adapter *adapter,
                             const struct ixgbe_info *ii)
    {
        ...
        /* Set common capability flags and settings */
        rss = min_t(int, ixgbe_max_rss_indices(adapter), num_online_cpus());
        adapter->ring_feature[RING_F_RSS].limit = rss;
        adapter->flags2 |= IXGBE_FLAG2_RSC_CAPABLE;
        adapter->max_q_vectors = MAX_Q_VECTORS_82599;
        adapter->atr_sample_rate = 20;
        fdir = min_t(int, IXGBE_MAX_FDIR_INDICES, num_online_cpus());
        adapter->ring_feature[RING_F_FDIR].limit = fdir;
        adapter->fdir_pballoc = IXGBE_FDIR_PBALLOC_64K;
        adapter->ring_feature[RING_F_VMDQ].limit = 1;
        ...
    }

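First, rss_i is capped at MAX_TX_QUEUES / vmdq_i, so that vmdq_i pools of rss_i queues never need more than MAX_TX_QUEUES queues. Next, the offset is added to the pool count vmdq_i to obtain the total pool count, which is capped at 64 (IXGBE_MAX_VMDQ_INDICES). In VT mode the 82599 supports only 32-pool and 64-pool configurations: in 64-pool mode each pool is limited to 2 queues, and in 32-pool mode each pool can have 4, 2 or 1 queues.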
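These rules can be expressed as a stand-alone function. It is a sketch: sketch_sriov_queues() and struct sriov_result are hypothetical, the inputs stand for the VMDq limit, the VMDq offset and the RSS limit, FCoE handling and the hardware masks are omitted, and the asserts encode assumptions made only by the sketch (at least one PF pool, an offset below 64).

```c
#include <assert.h>

#define MAX_TX_QUEUES          64
#define IXGBE_MAX_VMDQ_INDICES 64

struct sriov_result {
	unsigned int pools;           /* adapter->num_rx_pools */
	unsigned int queues_per_pool; /* adapter->num_rx_queues_per_pool */
	unsigned int tx_queues;       /* adapter->num_tx_queues */
};

struct sriov_result sketch_sriov_queues(unsigned int vmdq_limit,
					unsigned int offset,
					unsigned int rss_limit)
{
	struct sriov_result r;
	unsigned int vmdq_i = vmdq_limit;
	unsigned int rss_i = rss_limit;

	assert(vmdq_i >= 1);                     /* sketch assumption */
	assert(offset < IXGBE_MAX_VMDQ_INDICES); /* sketch assumption */

	/* vmdq_i pools x rss_i queues must fit into MAX_TX_QUEUES */
	if (rss_i > MAX_TX_QUEUES / vmdq_i)
		rss_i = MAX_TX_QUEUES / vmdq_i;

	/* total pool count (offset included), capped at 64 */
	vmdq_i += offset;
	if (vmdq_i > IXGBE_MAX_VMDQ_INDICES)
		vmdq_i = IXGBE_MAX_VMDQ_INDICES;

	if (vmdq_i > 32) {
		/* 64-pool mode: at most 2 queues per pool */
		if (rss_i > 2)
			rss_i = 2;
	} else {
		/* 32-pool mode: round down to 4, 2 or 1 queues per pool */
		rss_i = (rss_i > 3) ? 4 : (rss_i > 1) ? 2 : 1;
	}

	vmdq_i -= offset; /* back to the PF's own pool count */

	r.pools = vmdq_i;
	r.queues_per_pool = rss_i;
	r.tx_queues = vmdq_i * rss_i;
	return r;
}
```

With a single PF pool and an RSS limit of 8, for example, the PF gets 4 TX queues when the offset is 0, but only 2 when an offset of 40 pushes the total past 32 pools into 64-pool mode.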

    static bool ixgbe_set_sriov_queues(struct ixgbe_adapter *adapter)
    {
        u16 vmdq_i = adapter->ring_feature[RING_F_VMDQ].limit;
        u16 rss_i = adapter->ring_feature[RING_F_RSS].limit;
        u16 rss_m = IXGBE_RSS_DISABLED_MASK;

        /* only proceed if SR-IOV is enabled */
        if (!(adapter->flags & IXGBE_FLAG_SRIOV_ENABLED))
            return false;

        /* limit l2fwd RSS based on total Tx queue limit */
        rss_i = min_t(u16, rss_i, MAX_TX_QUEUES / vmdq_i);

        /* Add starting offset to total pool count */
        vmdq_i += adapter->ring_feature[RING_F_VMDQ].offset;

        /* double check we are limited to maximum pools */
        vmdq_i = min_t(u16, IXGBE_MAX_VMDQ_INDICES, vmdq_i);

        /* 64 pool mode with 2 queues per pool */
        if (vmdq_i > 32) {
            vmdq_m = IXGBE_82599_VMDQ_2Q_MASK;
            rss_m = IXGBE_RSS_2Q_MASK;
            rss_i = min_t(u16, rss_i, 2);
        /* 32 pool mode with up to 4 queues per pool */
        } else {
            vmdq_m = IXGBE_82599_VMDQ_4Q_MASK;
            rss_m = IXGBE_RSS_4Q_MASK;
            /* We can support 4, 2, or 1 queues */
            rss_i = (rss_i > 3) ? 4 : (rss_i > 1) ? 2 : 1;
        }

At this point the TX queue count is the pool count (vmdq_i after the offset has been subtracted again, not the total) multiplied by rss_i, the number of queues per pool.

        /* remove the starting offset from the pool count */
        vmdq_i -= adapter->ring_feature[RING_F_VMDQ].offset;

        /* save features for later use */
        adapter->ring_feature[RING_F_VMDQ].indices = vmdq_i;
        adapter->ring_feature[RING_F_VMDQ].mask = vmdq_m;

        /* limit RSS based on user input and save for later use */
        adapter->ring_feature[RING_F_RSS].indices = rss_i;
        adapter->ring_feature[RING_F_RSS].mask = rss_m;

        adapter->num_rx_pools = vmdq_i;
        adapter->num_rx_queues_per_pool = rss_i;

        adapter->num_rx_queues = vmdq_i * rss_i;
        adapter->num_tx_queues = vmdq_i * rss_i;
        adapter->num_xdp_queues = 0;

        /* disable ATR as it is not supported when VMDq is enabled */
        adapter->flags &= ~IXGBE_FLAG_FDIR_HASH_CAPABLE;

        /* To support macvlan offload we have to use num_tc to
         * restrict the queues that can be used by the device.
         * By doing this we can avoid reporting a false number of queues.
         */
        if (vmdq_i > 1)
            netdev_set_num_tc(adapter->netdev, 1);

        /* populate TC0 for use by pool 0 */
        netdev_set_tc_queue(adapter->netdev, 0,
                            adapter->num_rx_queues_per_pool, 0);
        ...

2.4 RSS queues: ixgbe_set_rss_queues

For the 82598, 82599 and X540, the maximum number of RSS queues is 16 (IXGBE_MAX_RSS_INDICES). For the X550 family it is 63 (IXGBE_MAX_RSS_INDICES_X550).

    static inline u8 ixgbe_max_rss_indices(struct ixgbe_adapter *adapter)
    {
        switch (adapter->hw.mac.type) {
        case ixgbe_mac_82598EB:
        case ixgbe_mac_82599EB:
        case ixgbe_mac_X540:
            return IXGBE_MAX_RSS_INDICES;
        case ixgbe_mac_X550:
        case ixgbe_mac_X550EM_x:
        case ixgbe_mac_x550em_a:
            return IXGBE_MAX_RSS_INDICES_X550;
        ...

In addition, the number of RSS queues never exceeds the number of online CPUs. The number of FDIR queues is the smaller of the online CPU count and 63 (IXGBE_MAX_FDIR_INDICES).

    static int ixgbe_sw_init(struct ixgbe_adapter *adapter,
                             const struct ixgbe_info *ii)
    {
        /* Set common capability flags and settings */
        rss = min_t(int, ixgbe_max_rss_indices(adapter), num_online_cpus());
        adapter->ring_feature[RING_F_RSS].limit = rss;
        adapter->atr_sample_rate = 20;
        fdir = min_t(int, IXGBE_MAX_FDIR_INDICES, num_online_cpus());
        adapter->ring_feature[RING_F_FDIR].limit = fdir;
        ...

When neither DCB nor VT mode is enabled, ixgbe_set_rss_queues tries to allocate one TX/RX queue pair per CPU core.

    static bool ixgbe_set_rss_queues(struct ixgbe_adapter *adapter)
    {
        struct ixgbe_hw *hw = &adapter->hw;
        struct ixgbe_ring_feature *f;
        u16 rss_i;

        /* set mask for 16 queue limit of RSS */
        f = &adapter->ring_feature[RING_F_RSS];
        rss_i = f->limit;

        f->indices = rss_i;

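If there is more than one RSS queue and ATR sampling is enabled (atr_sample_rate is non-zero), the FDIR queue limit is used instead. The actual TX queue count equals the resulting rss_i.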

        /* disable ATR by default, it will be configured below */
        adapter->flags &= ~IXGBE_FLAG_FDIR_HASH_CAPABLE;

        /*
         * Use Flow Director in addition to RSS to ensure the best
         * distribution of flows across cores, even when an FDIR flow
         * isn't matched.
         */
        if (rss_i > 1 && adapter->atr_sample_rate) {
            f = &adapter->ring_feature[RING_F_FDIR];

            rss_i = f->indices = f->limit;

            if (!(adapter->flags & IXGBE_FLAG_FDIR_PERFECT_CAPABLE))
                adapter->flags |= IXGBE_FLAG_FDIR_HASH_CAPABLE;
        }

        adapter->num_rx_queues = rss_i;
        adapter->num_tx_queues = rss_i;
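Putting the RSS and FDIR limits together, the queue count on the plain RSS path depends only on the MAC type, the number of online CPUs and whether ATR sampling is enabled. The sketch below is hypothetical: enum sketch_mac mirrors the MAC types listed in ixgbe_max_rss_indices(), and FCoE handling and the RSS masks are ignored.

```c
#include <assert.h>

#define IXGBE_MAX_RSS_INDICES      16
#define IXGBE_MAX_RSS_INDICES_X550 63
#define IXGBE_MAX_FDIR_INDICES     63

/* Hypothetical mirror of the MAC types handled by ixgbe_max_rss_indices(). */
enum sketch_mac {
	MAC_82598EB, MAC_82599EB, MAC_X540,
	MAC_X550, MAC_X550EM_X, MAC_X550EM_A,
};

static int min_int(int a, int b)
{
	return a < b ? a : b;
}

static int sketch_max_rss_indices(enum sketch_mac mac)
{
	switch (mac) {
	case MAC_82598EB:
	case MAC_82599EB:
	case MAC_X540:
		return IXGBE_MAX_RSS_INDICES;
	case MAC_X550:
	case MAC_X550EM_X:
	case MAC_X550EM_A:
		return IXGBE_MAX_RSS_INDICES_X550;
	}
	return 1; /* not reached for the enum values above */
}

/* TX queue count chosen by the RSS-only path (num_tx_queues == num_rx_queues). */
int sketch_rss_tx_queues(enum sketch_mac mac, int online_cpus, int atr_sample_rate)
{
	int rss, fdir, rss_i;

	assert(online_cpus >= 1);

	/* limits computed in ixgbe_sw_init() */
	rss = min_int(sketch_max_rss_indices(mac), online_cpus);
	fdir = min_int(IXGBE_MAX_FDIR_INDICES, online_cpus);
	rss_i = rss;

	/* more than one RSS queue and ATR sampling on: use the FDIR limit */
	if (rss_i > 1 && atr_sample_rate)
		rss_i = fdir;

	return rss_i;
}
```

On an 82599 with 32 online CPUs and the atr_sample_rate of 20 set in ixgbe_sw_init(), the RSS limit is 16, yet the FDIR limit of 32 is what ends up in num_tx_queues; with ATR sampling disabled (rate 0) the result stays at 16.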

3 Setting the real queue count: netif_set_real_num_tx_queues

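Finally, ixgbe_open() reports the TX queue count computed above (adapter->num_tx_queues) to the networking stack through netif_set_real_num_tx_queues(), which updates dev->real_num_tx_queues.

    int ixgbe_open(struct net_device *netdev)
    {
        struct ixgbe_adapter *adapter = netdev_priv(netdev);
        struct ixgbe_hw *hw = &adapter->hw;
        ...
        /* Notify the stack of the actual queue counts. */
        queues = adapter->num_tx_queues;
        err = netif_set_real_num_tx_queues(netdev, queues);
        ...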
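The relationship between the two net_device fields can be illustrated with a minimal user-space model. struct sketch_netdev and both functions are hypothetical. sketch_set_real_num_tx_queues() reproduces only the range check of netif_set_real_num_tx_queues(), which rejects a count below 1 or above num_tx_queues with -EINVAL; it omits the extra work the kernel does for a device that is already registered.

```c
#include <assert.h>
#include <errno.h>

/* Hypothetical stand-in for the two struct net_device fields. */
struct sketch_netdev {
	unsigned int num_tx_queues;      /* allocated, e.g. MAX_TX_QUEUES */
	unsigned int real_num_tx_queues; /* actually in use */
};

/* Like alloc_netdev_mqs(): both fields start at txqs. */
void sketch_alloc(struct sketch_netdev *dev, unsigned int txqs)
{
	assert(txqs >= 1);
	dev->num_tx_queues = txqs;
	dev->real_num_tx_queues = txqs;
}

/* Range check only; the count can never exceed what was allocated. */
int sketch_set_real_num_tx_queues(struct sketch_netdev *dev, unsigned int txq)
{
	if (txq < 1 || txq > dev->num_tx_queues)
		return -EINVAL;
	dev->real_num_tx_queues = txq;
	return 0;
}
```

num_tx_queues (64 for ixgbe) thus stays fixed after allocation, while real_num_tx_queues can be lowered to the count chosen by ixgbe_set_num_queues(), for example 16.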