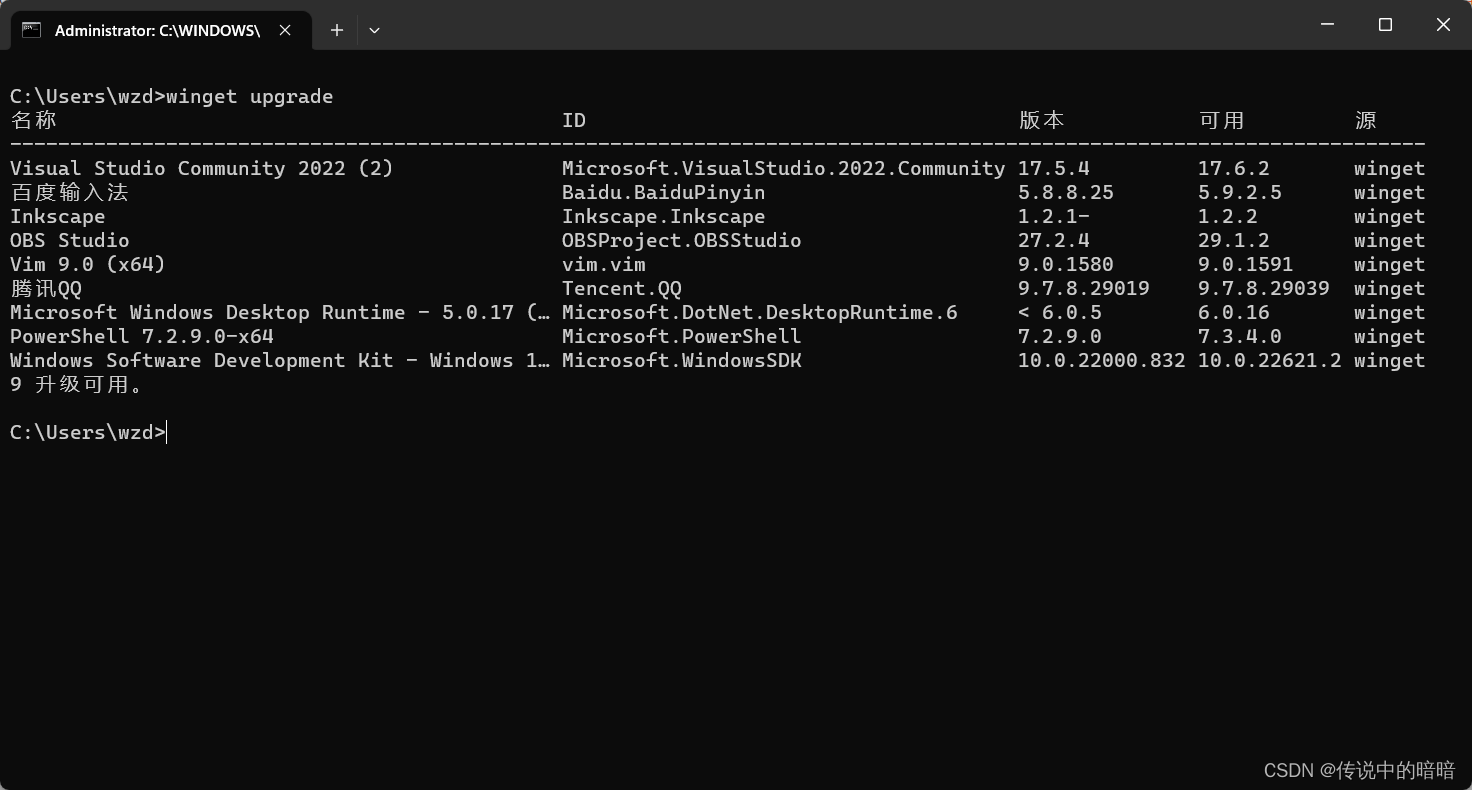

I. Loading a deep neural network model

Net cv::dnn::readNet(const String & model, const String & config = "", const String & framework = "")

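model: name (path) of the model file.

config: name (path) of the configuration file; optional.

framework: name of the framework the model was trained with; optional.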
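To make the role of the `framework` argument more concrete, here is a small sketch that guesses a framework tag from the model file's extension. The extension pairings follow those listed in the OpenCV documentation for `readNet`. The helper `guessFramework` is hypothetical and not part of OpenCV, and the exact set of supported formats may differ between OpenCV versions.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <string>

// Hypothetical helper (NOT an OpenCV function): guess the framework tag
// from the extension of the model file. Returns "" when the extension is unknown.
std::string guessFramework(const std::string& modelPath)
{
    const std::size_t dot = modelPath.find_last_of('.');
    if (dot == std::string::npos)
        return "";

    // Lower-case the extension so that "MODEL.PB" is handled like "model.pb".
    std::string ext = modelPath.substr(dot + 1);
    std::transform(ext.begin(), ext.end(), ext.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });

    if (ext == "caffemodel")          return "caffe";
    if (ext == "pb")                  return "tensorflow";
    if (ext == "t7" || ext == "net")  return "torch";
    if (ext == "weights")             return "darknet";
    if (ext == "onnx")                return "onnx";
    return "";
}
```

For example, `guessFramework("caffe_model.caffemodel")` yields `"caffe"`, a value that could then be passed as the third argument of `readNet`.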

Functions of the Net class and what they do:

Setting the input data of the network:

void cv::dnn::Net::setInput(InputArray blob, const String & name = "", double scalefactor = 1.0, const Scalar & mean = Scalar())

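blob: the new input data, of type CV_32F or CV_8U.

name: name of the input layer.

scalefactor: optional normalization factor by which the input values are multiplied.

mean: optional values subtracted from the input (a shift).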

Loading a deep learning model with OpenCV and listing its layers:

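    #include <opencv2/opencv.hpp>
    #include <iostream>
    #include <string>
    #include <vector>

    using namespace cv;
    using namespace cv::dnn;
    using namespace std;

    int main()
    {
        string model = "caffe_model.caffemodel";
        string config = "caffe_model.prototxt";

        // Load the model
        Net net = readNet(model, config);
        if (net.empty())
        {
            cout << "The network is empty; please check the model files" << endl;
            return -1;
        }

        // Query the information of every layer
        vector<String> layerNames = net.getLayerNames();
        for (size_t i = 0; i < layerNames.size(); i++)
        {
            // ID of the layer
            int ID = net.getLayerId(layerNames[i]);
            // Layer object holding the layer's information
            Ptr<Layer> layer = net.getLayer(ID);
            // Print the layer information
            cout << "Layer ID: " << ID << ", layer name: " << layerNames[i] << endl
                 << "Layer type: " << layer->type << endl;
        }
        return 0;
    }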

II. Using deep learning models with OpenCV

Function that converts input images to the size and format the network expects:

Mat cv::dnn::blobFromImages(InputArrayOfArrays images, double scalefactor = 1.0, Size size = Size(), const Scalar & mean = Scalar(), bool swapRB = false, bool crop = false, int ddepth = CV_32F)

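images: the input images; each image may have one, three or four channels.

scalefactor: factor by which the pixel values are multiplied.

size: size of the output images.

mean: values subtracted from the pixel values (mean subtraction).

swapRB: flag indicating whether to swap the first and last channels of a three-channel image.

crop: flag indicating whether the image is cropped after resizing.

ddepth: data type of the output, either CV_32F or CV_8U.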
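To illustrate what `scalefactor`, `mean` and `swapRB` do to the pixel data, here is a simplified sketch that turns one interleaved 3-channel 8-bit image (height × width × channel) into the planar channel × height × width layout used inside a blob. It leaves out resizing and cropping, uses plain vectors instead of `Mat`, and assumes that `mean` is indexed by output channel. The function `imageToPlanar` is hypothetical and is not OpenCV's implementation.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Illustrative only: interleaved HWC (8-bit) -> planar CHW (float).
// Assumption: mean[c] refers to the OUTPUT channel c (after the optional swap).
std::vector<float> imageToPlanar(const std::vector<std::uint8_t>& hwc,
                                 int rows, int cols,
                                 double scalefactor,
                                 const std::array<double, 3>& mean,
                                 bool swapRB)
{
    const int channels = 3;
    std::vector<float> chw(static_cast<std::size_t>(channels) * rows * cols);
    for (int c = 0; c < channels; ++c)
    {
        // With swapRB, output channel 0 reads source channel 2 and vice versa.
        const int srcC = swapRB ? (channels - 1 - c) : c;
        for (int r = 0; r < rows; ++r)
        {
            for (int x = 0; x < cols; ++x)
            {
                const std::size_t srcIdx =
                    (static_cast<std::size_t>(r) * cols + x) * channels + srcC;
                const std::size_t dstIdx =
                    (static_cast<std::size_t>(c) * rows + r) * cols + x;
                chw[dstIdx] = static_cast<float>(scalefactor * (hwc[srcIdx] - mean[c]));
            }
        }
    }
    return chw;
}
```

The planar layout explains why the output of a network is later read back channel by channel rather than pixel by pixel.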

Classifying an image with a neural network:

    #include <opencv2/opencv.hpp>
    #include <cstdio>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <vector>

    using namespace cv;
    using namespace cv::dnn;
    using namespace std;

    int main()
    {
        // Read the image
        Mat src = imread("1.png");
        if (src.empty())
        {
            printf("Could not read the image\n");
            return -1;
        }

        // Read the class names
        String typeListFile = "image_recognition/imagenet_comp_graph_label_strings.txt";
        vector<String> typeList;
        ifstream file(typeListFile);
        if (!file.is_open())
        {
            printf("Could not open the class-name file; please check its path\n");
            return -1;
        }
        std::string type;
        // Using getline as the loop condition avoids reading past the end of the file
        while (getline(file, type))
        {
            if (type.length())
            {
                typeList.push_back(type);
            }
        }
        file.close();

        // Load the network
        String tf_pb_file = "image_recognition/tensorflow_inception_graph.pb";
        Net net = readNet(tf_pb_file);
        if (net.empty())
        {
            printf("The network is empty; please check the model file\n");
            return -1;
        }

        // Preprocess the input image
        Mat blob = blobFromImage(src, 1.0f, Size(224, 224), Scalar(), true, false);

        // Predict the image class
        Mat prob;
        net.setInput(blob, "input");
        prob = net.forward("softmax2");

        // Find the most likely class
        Mat probMat = prob.reshape(1, 1);
        Point classNumber;
        double classProb; // highest probability
        minMaxLoc(probMat, NULL, &classProb, NULL, &classNumber);
        string typeName = typeList.at(classNumber.x);
        cout << "The object in the image is probably: " << typeName
             << ", probability: " << classProb << endl;

        // Draw the result on the image
        string str = typeName + " possibility: " + to_string(classProb);
        putText(src, str, Point(50, 50), FONT_HERSHEY_SIMPLEX, 1.0, Scalar(0, 0, 255), 2, 8);
        imshow("Classification result", src);
        waitKey(0);
        return 0;
    }
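`minMaxLoc` returns only the single most likely class. When several candidates are of interest, the class indices can be ranked by probability. The sketch below does this on a plain `std::vector<float>`. It assumes the probabilities have already been copied out of the 1×N `probMat`, and the helper name `topK` is made up for illustration.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <vector>

// Illustrative only: indices of the k largest probabilities, highest first.
std::vector<int> topK(const std::vector<float>& probs, std::size_t k)
{
    std::vector<int> idx(probs.size());
    std::iota(idx.begin(), idx.end(), 0);   // 0, 1, 2, ...
    k = std::min(k, idx.size());
    // Only the first k positions need to be sorted.
    std::partial_sort(idx.begin(),
                      idx.begin() + static_cast<std::ptrdiff_t>(k),
                      idx.end(),
                      [&probs](int a, int b) { return probs[a] > probs[b]; });
    idx.resize(k);
    return idx;
}
```

Each returned index can be used to look up a name in `typeList` in the same way `classNumber.x` is used in the listing.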

Image style transfer with OpenCV:

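    #include <opencv2/opencv.hpp>
    #include <cstdio>

    using namespace cv;
    using namespace cv::dnn;
    using namespace std;

    int main()
    {
        // Read the image
        Mat src = imread("1.png");
        if (src.empty())
        {
            printf("Could not read the image\n");
            return -1;
        }

        // The five model files
        String models[5] = { "the_wave.t7", "mosaic.t7", "feathers.t7", "candy.t7", "udnie.t7" };
        imshow("Original image", src);
        for (int i = 0; i < 5; i++)
        {
            Net net = readNet("fast_style/" + models[i]);

            // Mean value of each image channel
            Scalar imageMean = mean(src);
            // Adjust the image size and format
            Mat blobImage = blobFromImage(src, 1.0, Size(256, 256), imageMean, false, false);

            // Run the network on the image
            net.setInput(blobImage);
            Mat output = net.forward();

            // Number of channels and size of the output
            int outputChannels = output.size[1];
            int outputRows = output.size[2];
            int outputCols = output.size[3];

            // Copy the planar output into an interleaved image
            Mat result = Mat::zeros(Size(outputCols, outputRows), CV_32FC3);
            float* data = output.ptr<float>();
            for (int channel = 0; channel < outputChannels; channel++)
            {
                for (int row = 0; row < outputRows; row++)
                {
                    for (int col = 0; col < outputCols; col++)
                    {
                        result.at<Vec3f>(row, col)[channel] = *data++;
                    }
                }
            }

            // Post-process the transfer result
            // Add back the mean that was subtracted
            result = result + imageMean;
            // Normalize to [0, 1] so the float image can be displayed
            result = result / 255.0;
            // Resize to the size of the original image
            resize(result, result, src.size());
            // Show each result in its own window
            imshow("Result: " + models[i], result);
        }
        // Without waitKey the windows would never be drawn
        waitKey(0);
        return 0;
    }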

Processing an image with two different networks in sequence: first detect the faces, then detect the gender of each face:

    #include <opencv2/opencv.hpp>
    #include <cstdio>
    #include <iostream>

    using namespace cv;
    using namespace cv::dnn;
    using namespace std;

    int main()
    {
        // Read the image
        Mat src = imread("1.png");
        if (src.empty())
        {
            printf("Could not read the image\n");
            return -1;
        }

        // Load the face detection model
        String model_bin = "face_age/opencv_face_detector_uint8.pb";
        String config_text = "face_age/opencv_face_detector.pbtxt";
        Net faceNet = readNet(model_bin, config_text);

        // Load the gender detection model
        String genderProto = "face_age/gender_deploy.prototxt";
        String genderModel = "face_age/gender_net.caffemodel";
        String genderList[] = { "Male", "Female" };
        Net genderNet = readNet(genderModel, genderProto);
        // Both networks are required, so fail if either one is empty
        if (faceNet.empty() || genderNet.empty())
        {
            cout << "Please check that the correct model files were supplied" << endl;
            return -1;
        }

        // Detect faces in the whole image
        Mat blobImage = blobFromImage(src, 1.0, Size(300, 300), Scalar(), false, false);
        faceNet.setInput(blobImage, "data");
        Mat detect = faceNet.forward("detection_out");
        // Each row holds the face probability and the position of the face rectangle
        Mat detectionMat(detect.size[2], detect.size[3], CV_32F, detect.ptr<float>());

        // Detect the gender of every face region
        // Number of pixels by which each face region is expanded in every direction
        int exBoundary = 25;
        // Probability threshold for accepting a face; a higher value gives fewer false detections
        float confidenceThreshold = 0.5f;
        const Rect imageRect(0, 0, src.cols, src.rows);
        for (int i = 0; i < detectionMat.rows; i++)
        {
            // Probability that the region is a face
            float confidence = detectionMat.at<float>(i, 2);
            // Only process regions whose probability exceeds the threshold
            if (confidence > confidenceThreshold)
            {
                // Face region reported by the network (relative coordinates scaled to pixels)
                int topLx = static_cast<int>(detectionMat.at<float>(i, 3) * src.cols);
                int topLy = static_cast<int>(detectionMat.at<float>(i, 4) * src.rows);
                int bottomRx = static_cast<int>(detectionMat.at<float>(i, 5) * src.cols);
                int bottomRy = static_cast<int>(detectionMat.at<float>(i, 6) * src.rows);
                Rect faceRect(topLx, topLy, bottomRx - topLx, bottomRy - topLy);

                // Expand the region, then clip it so that it stays inside the image
                Rect faceTextRect(faceRect.x - exBoundary, faceRect.y - exBoundary,
                                  faceRect.width + 2 * exBoundary, faceRect.height + 2 * exBoundary);
                faceTextRect &= imageRect;
                if (faceTextRect.width <= 0 || faceTextRect.height <= 0)
                {
                    continue;
                }

                // Expanded face image
                Mat face = src(faceTextRect);
                // Adjust the size of the face image
                Mat faceblob = blobFromImage(face, 1.0, Size(227, 227), Scalar(), false, false);
                // Feed the face image to the gender network
                genderNet.setInput(faceblob);
                // Probabilities of the two genders
                Mat genderPreds = genderNet.forward();

                // Gender detection result
                float male = genderPreds.at<float>(0, 0);
                float female = genderPreds.at<float>(0, 1);
                int classID = male > female ? 0 : 1;
                String gender = genderList[classID];

                // Draw the face outline and the gender on the original image
                rectangle(src, faceRect, Scalar(0, 0, 255), 2, 8, 0);
                putText(src, gender.c_str(), faceRect.tl(), FONT_HERSHEY_SIMPLEX, 0.8, Scalar(0, 0, 255), 2, 8);
            }
        }
        imshow("Result", src);
        waitKey(0);
        return 0;
    }
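The face rectangle is enlarged by a margin before it is cropped, and the enlarged rectangle has to stay inside the image for `src(rect)` to be valid. A minimal sketch of that arithmetic, using a plain struct instead of `cv::Rect`, is shown below. The names `Box` and `expandAndClip` are hypothetical.

```cpp
#include <algorithm>

// Illustrative stand-in for cv::Rect.
struct Box
{
    int x, y, width, height;
};

// Expand `face` by `margin` pixels on every side, then clip to the image area.
Box expandAndClip(const Box& face, int margin, int imageCols, int imageRows)
{
    const int left   = std::max(0, face.x - margin);
    const int top    = std::max(0, face.y - margin);
    const int right  = std::min(imageCols, face.x + face.width + margin);
    const int bottom = std::min(imageRows, face.y + face.height + margin);
    // A rectangle entirely outside the image ends up with zero width or height.
    return Box{ left, top, std::max(0, right - left), std::max(0, bottom - top) };
}
```

Clamping the right and bottom edges rather than the width and height guarantees that `x + width` never exceeds the image width, including when the face lies close to the border.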