Compiled Web Articles

PART 1

http://disinfo.com/2016/03/face2face-real-time-face-capture-and-reenactment-of-rgb-videos/

Face2Face: Real-time Face Capture and Reenactment

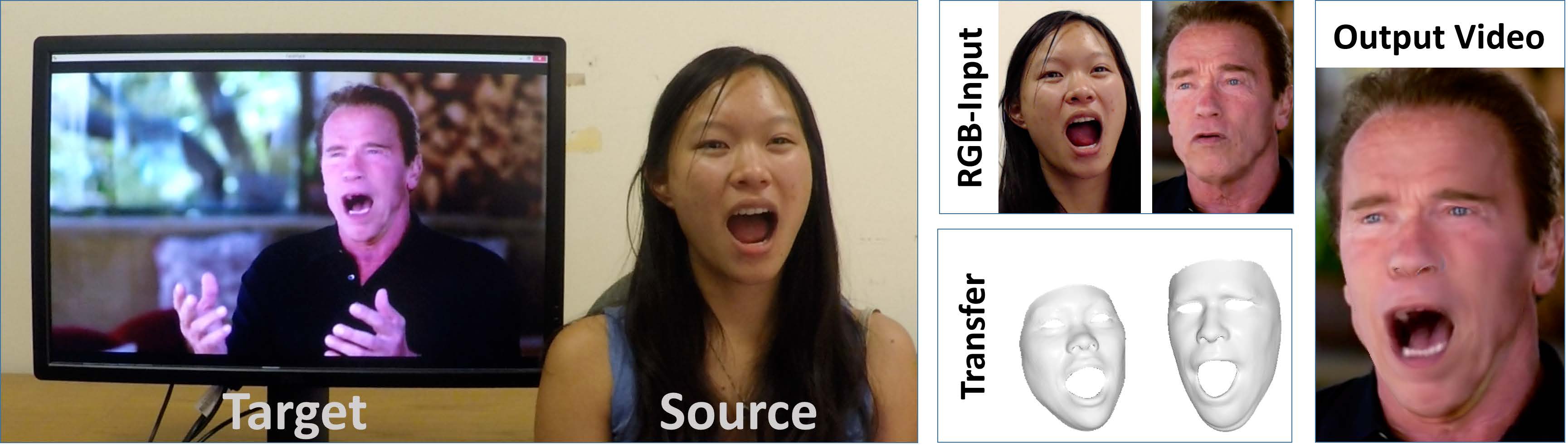

Today, we can kiss reality goodbye. Researchers from Stanford University, the University of Erlangen-Nuremberg and the Max Planck Institute for Informatics have unveiled their latest project, and it is very scary: a technology that can manipulate YouTube videos in real time using nothing more than a basic webcam. The implications of this technology are very serious.

Abstract:

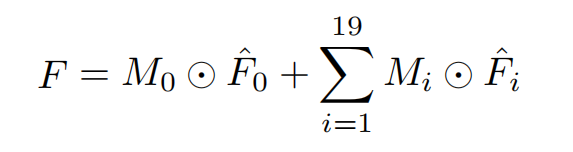

We present a novel approach for real-time facial reenactment of a monocular target video sequence (e.g., Youtube video). The source sequence is also a monocular video stream, captured live with a commodity webcam. Our goal is to animate the facial expressions of the target video by a source actor and re-render the manipulated output video in a photo-realistic fashion. To this end, we first address the under-constrained problem of facial identity recovery from monocular video by non-rigid model-based bundling. At run time, we track facial expressions of both source and target video using a dense photometric consistency measure. Reenactment is then achieved by fast and efficient deformation transfer between source and target. The mouth interior that best matches the re-targeted expression is retrieved from the target sequence and warped to produce an accurate fit. Finally, we convincingly re-render the synthesized target face on top of the corresponding video stream such that it seamlessly blends with the real-world illumination. We demonstrate our method in a live setup, where Youtube videos are reenacted in real time.
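The abstract says both faces are tracked with a "dense photometric consistency measure". As a rough, hypothetical illustration (not the authors' implementation), such a measure compares the colors of the synthesized face with the observed video frame pixel by pixel, counting only the pixels the face model covers. A tracker would then search for the pose, expression and lighting parameters that make this value as small as possible. The function name `photometric_energy`, the flat lists of RGB pixels and the choice of the mean per-pixel Euclidean color distance are all assumptions. The paper's exact norm, weighting and additional terms may differ.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def photometric_energy(
    rendered: Sequence[RGB],
    observed: Sequence[RGB],
    visible: Sequence[bool],
) -> float:
    """Hypothetical dense photometric term: mean Euclidean RGB distance between
    the synthesized face and the observed frame over visible face pixels."""
    if not (len(rendered) == len(observed) == len(visible)):
        raise ValueError("rendered, observed and visible must have equal length")
    total = 0.0
    count = 0
    for synth, real, is_face in zip(rendered, observed, visible):
        if not is_face:
            continue  # background pixels are not covered by the face model
        total += math.dist(synth, real)  # L2 norm of the per-pixel color residual
        count += 1
    return total / count if count else 0.0


# Toy 3-pixel "images": one perfect match, one mismatch, one background pixel.
rendered = [(0.5, 0.5, 0.5), (1.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
observed = [(0.5, 0.5, 0.5), (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
visible = [True, True, False]
print(photometric_energy(rendered, observed, visible))  # 0.5
```

The background pixel is ignored, so only the single mismatched face pixel contributes. Averaging over visible pixels keeps the value comparable when the face covers more or less of the frame.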

Now that scene from Bruce Almighty is actually possible…

Harry Henderson

PART 2

Face2Face: Real-time Face Capture and Reenactment of RGB Videos

Proc. Computer Vision and Pattern Recognition (CVPR), IEEE, June 2016

Abstract

We present a novel approach for real-time facial reenactment of a monocular target video sequence (e.g., Youtube video). The source sequence is also a monocular video stream, captured live with a commodity webcam. Our goal is to animate the facial expressions of the target video by a source actor and re-render the manipulated output video in a photo-realistic fashion. To this end, we first address the under-constrained problem of facial identity recovery from monocular video by non-rigid model-based bundling. At run time, we track facial expressions of both source and target video using a dense photometric consistency measure. Reenactment is then achieved by fast and efficient deformation transfer between source and target. The mouth interior that best matches the re-targeted expression is retrieved from the target sequence and warped to produce an accurate fit. Finally, we convincingly re-render the synthesized target face on top of the corresponding video stream such that it seamlessly blends with the real-world illumination. We demonstrate our method in a live setup, where Youtube videos are reenacted in real time.
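Reenactment drives the target face with the source actor's expression while preserving the target's identity. The sketch below assumes a simple linear face model: a mean shape plus weighted identity and expression basis vectors. It performs naive expression-coefficient copying. This is a simplification, because the abstract describes deformation transfer between source and target instead. Every name here (`synthesize`, `reenact`, the toy two-vertex basis) is hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def synthesize(
    mean: Sequence[float],
    id_basis: Sequence[Sequence[float]],
    alpha: Sequence[float],
    expr_basis: Sequence[Sequence[float]],
    delta: Sequence[float],
) -> Vector:
    """Flattened vertex positions of a hypothetical linear face model:
    mean + sum_i alpha[i] * id_basis[i] + sum_j delta[j] * expr_basis[j]."""
    shape = list(mean)
    for coeffs, bases in ((alpha, id_basis), (delta, expr_basis)):
        for c, basis in zip(coeffs, bases):
            for k, b in enumerate(basis):
                shape[k] += c * b
    return shape


def reenact(
    mean: Sequence[float],
    id_basis: Sequence[Sequence[float]],
    expr_basis: Sequence[Sequence[float]],
    target_alpha: Sequence[float],
    source_delta: Sequence[float],
) -> Vector:
    """Naive transfer (hypothetical): keep the target's identity coefficients
    and drive the face with the source actor's expression coefficients."""
    return synthesize(mean, id_basis, target_alpha, expr_basis, source_delta)


# Toy model: two vertices (x, y, z each), one identity and one expression basis vector.
mean = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
id_basis = [[0.0, 1.0, 0.0, 0.0, 1.0, 0.0]]     # e.g. a taller face
expr_basis = [[0.0, 0.0, 0.0, 0.0, -1.0, 0.0]]  # e.g. lower the second vertex ("open jaw")
target_alpha = [0.5]   # identity estimated for the target video
source_delta = [0.25]  # expression tracked on the source actor
print(reenact(mean, id_basis, expr_basis, target_alpha, source_delta))
# [0.0, 0.5, 0.0, 1.0, 0.25, 0.0]
```

Copying coefficients keeps the sketch short. However, it ignores how the same expression deforms faces of different shapes differently, which is the kind of mismatch a deformation-transfer approach like the one named in the abstract is meant to handle.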
