文章目录

- 前言

- 整体网络结构

- 分解的yolov7.yaml

- 各组件结构

- ELAN1 (backbone)

- ELAN2 (head)

- MPConv

- SPPCSPC

- RepConv(重参数卷积)

- 原理理解层面

- 代码实现层面

- ImpConv(隐性知识学习)

- 训练时

- 推理时

- References

前言

论文地址

YOLOv7源码

下面对v0.1版本的整体网络结构及各个组件,结合源码和train文件夹中的yolov7.yaml配置文件进行解析。

整体网络结构

分解的yolov7.yaml

# parameters

nc: 80 # number of classes

depth_multiple: 1.0 # model depth multiple

width_multiple: 1.0 # layer channel multiple# anchors

anchors:- [12,16, 19,36, 40,28] # P3/8- [36,75, 76,55, 72,146] # P4/16- [142,110, 192,243, 459,401] # P5/32# yolov7 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [32, 3, 1]], # 0[-1, 1, Conv, [64, 3, 2]], # 1-P1/2 [-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [128, 3, 2]], # 3-P2/4# ELAN1[-1, 1, Conv, [64, 1, 1]],[-2, 1, Conv, [64, 1, 1]],[-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [64, 3, 1]],[[-1, -3, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [256, 1, 1]], # 11# MPConv[-1, 1, MP, []],[-1, 1, Conv, [128, 1, 1]],[-3, 1, Conv, [128, 1, 1]],[-1, 1, Conv, [128, 3, 2]],[[-1, -3], 1, Concat, [1]], # 16-P3/8# ELAN1[-1, 1, Conv, [128, 1, 1]],[-2, 1, Conv, [128, 1, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[[-1, -3, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [512, 1, 1]], # 24# MPConv[-1, 1, MP, []],[-1, 1, Conv, [256, 1, 1]],[-3, 1, Conv, [256, 1, 1]],[-1, 1, Conv, [256, 3, 2]],[[-1, -3], 1, Concat, [1]], # 29-P4/16# ELAN1[-1, 1, Conv, [256, 1, 1]],[-2, 1, Conv, [256, 1, 1]],[-1, 1, Conv, [256, 3, 1]],[-1, 1, Conv, [256, 3, 1]],[-1, 1, Conv, [256, 3, 1]],[-1, 1, Conv, [256, 3, 1]],[[-1, -3, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [1024, 1, 1]], # 37# MPConv[-1, 1, MP, []],[-1, 1, Conv, [512, 1, 1]],[-3, 1, Conv, [512, 1, 1]],[-1, 1, Conv, [512, 3, 2]],[[-1, -3], 1, Concat, [1]], # 42-P5/32# ELAN1[-1, 1, Conv, [256, 1, 1]],[-2, 1, Conv, [256, 1, 1]],[-1, 1, Conv, [256, 3, 1]],[-1, 1, Conv, [256, 3, 1]],[-1, 1, Conv, [256, 3, 1]],[-1, 1, Conv, [256, 3, 1]],[[-1, -3, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [1024, 1, 1]], # 50]# yolov7 head

head:[[-1, 1, SPPCSPC, [512]], # 51[-1, 1, Conv, [256, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[37, 1, Conv, [256, 1, 1]], # route backbone P4[[-1, -2], 1, Concat, [1]],# ELAN2[-1, 1, Conv, [256, 1, 1]],[-2, 1, Conv, [256, 1, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [256, 1, 1]], # 63[-1, 1, Conv, [128, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[24, 1, Conv, [128, 1, 1]], # route backbone P3[[-1, -2], 1, Concat, [1]],# ELAN2[-1, 1, Conv, [128, 1, 1]],[-2, 1, Conv, [128, 1, 1]],[-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [64, 3, 1]],[[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [128, 1, 1]], # 75# MPConv Channel × 2[-1, 1, MP, []],[-1, 1, Conv, [128, 1, 1]],[-3, 1, Conv, [128, 1, 1]],[-1, 1, Conv, [128, 3, 2]],[[-1, -3, 63], 1, Concat, [1]],# ELAN2[-1, 1, Conv, [256, 1, 1]],[-2, 1, Conv, [256, 1, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [256, 1, 1]], # 88# MPConv Channel × 2[-1, 1, MP, []],[-1, 1, Conv, [256, 1, 1]],[-3, 1, Conv, [256, 1, 1]],[-1, 1, Conv, [256, 3, 2]],[[-1, -3, 51], 1, Concat, [1]],# ELAN2[-1, 1, Conv, [512, 1, 1]],[-2, 1, Conv, [512, 1, 1]],[-1, 1, Conv, [256, 3, 1]],[-1, 1, Conv, [256, 3, 1]],[-1, 1, Conv, [256, 3, 1]],[-1, 1, Conv, [256, 3, 1]],[[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [512, 1, 1]], # 101[75, 1, RepConv, [256, 3, 1]],[88, 1, RepConv, [512, 3, 1]],[101, 1, RepConv, [1024, 3, 1]],[[102,103,104], 1, IDetect, [nc, anchors]], # Detect(P3, P4, P5)]

各组件结构

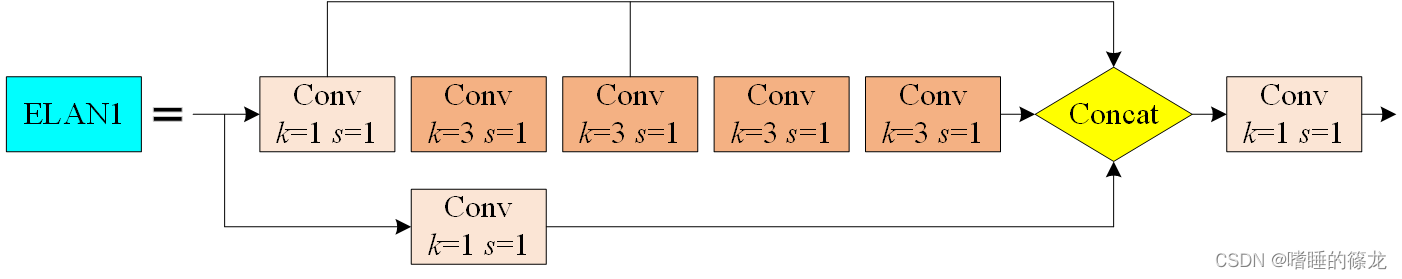

ELAN1 (backbone)

yolov7.yaml中对应部分:

# ELAN1[-1, 1, Conv, [64, 1, 1]],[-2, 1, Conv, [64, 1, 1]],[-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [64, 3, 1]],[-1, 1, Conv, [64, 3, 1]],[[-1, -3, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [256, 1, 1]], # 11

ELAN2 (head)

yolov7.yaml中对应部分:

# ELAN2[-1, 1, Conv, [256, 1, 1]],[-2, 1, Conv, [256, 1, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[-1, 1, Conv, [128, 3, 1]],[[-1, -2, -3, -4, -5, -6], 1, Concat, [1]],[-1, 1, Conv, [256, 1, 1]], # 63

MPConv

- 在

backnone中的对应部分 - 要注意相比于

MP函数之前,通道数减少一半

[-1, 1, Conv, [256, 1, 1]], # 11# MPConv[-1, 1, MP, []],[-1, 1, Conv, [128, 1, 1]],[-3, 1, Conv, [128, 1, 1]],[-1, 1, Conv, [128, 3, 2]],[[-1, -3], 1, Concat, [1]], # 16-P3/8

- 在

head中的对应部分 - 要注意相比于

MP函数之前,通道数不变

[-1, 1, Conv, [128, 1, 1]], # 75# MPConv Channel × 2[-1, 1, MP, []],[-1, 1, Conv, [128, 1, 1]],[-3, 1, Conv, [128, 1, 1]],[-1, 1, Conv, [128, 3, 2]],[[-1, -3, 63], 1, Concat, [1]],

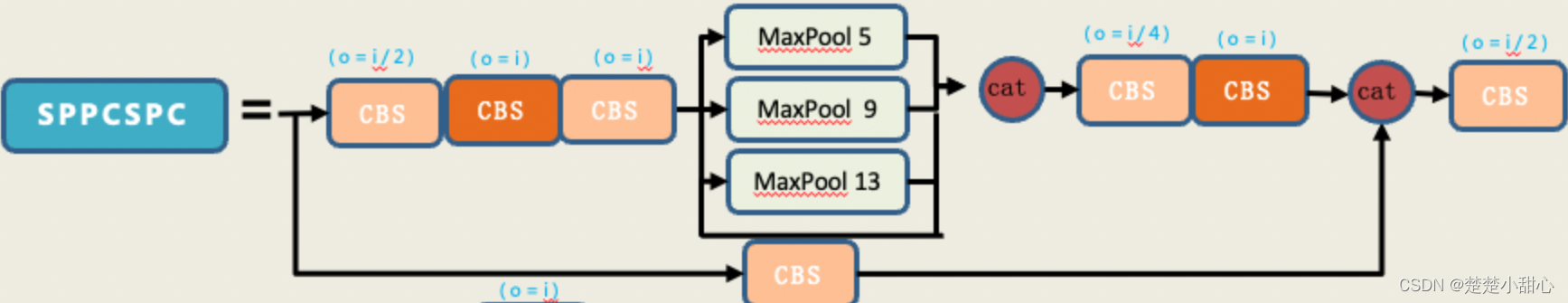

SPPCSPC

类似于yolov5中的SPPF,不同的是,使用了5×5、9×9、13×13最大池化。

common.py中对应部分:

class SPPCSPC(nn.Module):# CSP https://github.com/WongKinYiu/CrossStagePartialNetworksdef __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5, k=(5, 9, 13)):super(SPPCSPC, self).__init__()c_ = int(2 * c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c1, c_, 1, 1)self.cv3 = Conv(c_, c_, 3, 1)self.cv4 = Conv(c_, c_, 1, 1)self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])self.cv5 = Conv(4 * c_, c_, 1, 1)self.cv6 = Conv(c_, c_, 3, 1)self.cv7 = Conv(2 * c_, c2, 1, 1)def forward(self, x):x1 = self.cv4(self.cv3(self.cv1(x)))y1 = self.cv6(self.cv5(torch.cat([x1] + [m(x1) for m in self.m], 1)))y2 = self.cv2(x)return self.cv7(torch.cat((y1, y2), dim=1))

RepConv(重参数卷积)

原理理解层面

- 训练时:一个3*3卷积、一个1*1卷积和一个BN层(当输入输出通道相同时)相加得到输出

- 推理时:将以上三部分重参数化,合并为一个3*3的卷积输出

代码实现层面

- 训练时:不执行

Model类的fuse函数 - 推理时:在

attempt_load函数加载训练好的模型时,会执行Model类的fuse函数,进而调用fuse_repvgg_block函数,实现将三个卷积重参数化,合并为一个卷积输出 common.py中对应部分:

# Represented convolution https://arxiv.org/abs/2101.03697

class RepConv(nn.Module):'''重参数卷积训练时:deploy = Falserbr_dense(3*3卷积) + rbr_1x1(1*1卷积) + rbr_identity(c2 == c1时) 三者相加rbr_reparam = None推理时:deploy = Truerbr_reparam = Conv2drbr_dense = Nonerbr_1x1 = Nonerbr_identity = None'''def __init__(self, c1, c2, k=3, s=1, p=None, g=1, act=True, deploy=False):super(RepConv, self).__init__()self.deploy = deployself.groups = gself.in_channels = c1self.out_channels = c2assert k == 3assert autopad(k, p) == 1padding_11 = autopad(k, p) - k // 2self.act = nn.SiLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity())# 推理阶段,仅有一个3×3的卷积来替换if deploy:self.rbr_reparam = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=True)else:# 训练阶段,当输入和输出的通道数相同时,会在加一个BN层self.rbr_identity = (nn.BatchNorm2d(num_features=c1) if c2 == c1 and s == 1 else None)# 3×3的卷积(padding=1)self.rbr_dense = nn.Sequential(nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False),nn.BatchNorm2d(num_features=c2),)# 1×1的卷积self.rbr_1x1 = nn.Sequential(nn.Conv2d(c1, c2, 1, s, padding_11, groups=g, bias=False),nn.BatchNorm2d(num_features=c2),)def forward(self, inputs):if hasattr(self, "rbr_reparam"):return self.act(self.rbr_reparam(inputs))if self.rbr_identity is None:id_out = 0else:id_out = self.rbr_identity(inputs)return self.act(self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out)# Conv2D + BN -> Conv2Ddef fuse_conv_bn(self, conv, bn):std = (bn.running_var + bn.eps).sqrt()bias = bn.bias - bn.running_mean * bn.weight / stdt = (bn.weight / std).reshape(-1, 1, 1, 1)weights = conv.weight * tbn = nn.Identity()conv = nn.Conv2d(in_channels=conv.in_channels,out_channels=conv.out_channels,kernel_size=conv.kernel_size,stride=conv.stride,padding=conv.padding,dilation=conv.dilation,groups=conv.groups,bias=True,padding_mode=conv.padding_mode)conv.weight = torch.nn.Parameter(weights)conv.bias = torch.nn.Parameter(bias)return conv# 在推理阶段才执行重参数操作def fuse_repvgg_block(self):if self.deploy:returnprint(f"RepConv.fuse_repvgg_block")self.rbr_dense = self.fuse_conv_bn(self.rbr_dense[0], self.rbr_dense[1])self.rbr_1x1 = self.fuse_conv_bn(self.rbr_1x1[0], self.rbr_1x1[1])rbr_1x1_bias = self.rbr_1x1.bias# self.rbr_1x1.weight [256, 128, 1, 1]# weight_1x1_expanded [256, 128, 3, 3]weight_1x1_expanded = torch.nn.functional.pad(self.rbr_1x1.weight, [1, 1, 1, 1])# Fuse self.rbr_identityif (isinstance(self.rbr_identity, nn.BatchNorm2d) or isinstance(self.rbr_identity,nn.modules.batchnorm.SyncBatchNorm)):# print(f"fuse: rbr_identity == BatchNorm2d or SyncBatchNorm")identity_conv_1x1 = nn.Conv2d(in_channels=self.in_channels,out_channels=self.out_channels,kernel_size=1,stride=1,padding=0,groups=self.groups,bias=False)identity_conv_1x1.weight.data = identity_conv_1x1.weight.data.to(self.rbr_1x1.weight.data.device)identity_conv_1x1.weight.data = identity_conv_1x1.weight.data.squeeze().squeeze()# print(f" identity_conv_1x1.weight = {identity_conv_1x1.weight.shape}")identity_conv_1x1.weight.data.fill_(0.0)identity_conv_1x1.weight.data.fill_diagonal_(1.0)identity_conv_1x1.weight.data = identity_conv_1x1.weight.data.unsqueeze(2).unsqueeze(3)# print(f" identity_conv_1x1.weight = {identity_conv_1x1.weight.shape}")identity_conv_1x1 = self.fuse_conv_bn(identity_conv_1x1, self.rbr_identity)bias_identity_expanded = identity_conv_1x1.biasweight_identity_expanded = torch.nn.functional.pad(identity_conv_1x1.weight, [1, 1, 1, 1])else:# print(f"fuse: rbr_identity != BatchNorm2d, rbr_identity = {self.rbr_identity}")bias_identity_expanded = torch.nn.Parameter(torch.zeros_like(rbr_1x1_bias))weight_identity_expanded = torch.nn.Parameter(torch.zeros_like(weight_1x1_expanded))# print(f"self.rbr_1x1.weight = {self.rbr_1x1.weight.shape}, ")# print(f"weight_1x1_expanded = {weight_1x1_expanded.shape}, ")# print(f"self.rbr_dense.weight = {self.rbr_dense.weight.shape}, ")self.rbr_dense.weight = torch.nn.Parameter(self.rbr_dense.weight + weight_1x1_expanded + weight_identity_expanded)self.rbr_dense.bias = torch.nn.Parameter(self.rbr_dense.bias + rbr_1x1_bias + bias_identity_expanded)self.rbr_reparam = self.rbr_dense# 前向推理时,使用重参数化后的 rbr_reparam 函数self.deploy = Trueif self.rbr_identity is not None:del self.rbr_identityself.rbr_identity = Noneif self.rbr_1x1 is not None:del self.rbr_1x1self.rbr_1x1 = Noneif self.rbr_dense is not None:del self.rbr_denseself.rbr_dense = None

ImpConv(隐性知识学习)

这一部分直接继承自YOLOR中的显隐性知识学习。一般情况下,将神经网络的浅层特征称为显性知识,深层特征称为隐性知识。而YOLOR的作者(同时也是YOLOv7的作者)则直接把神经网络最终观察到的知识称为显性知识,那些观察不到、与观察无关的知识称为隐性知识。

在model/common.py文件中,定义了两类隐性知识:ImplicitA和ImplicitM,分别对输入 相加 和 相乘:

# Add

class ImplicitA(nn.Module):def __init__(self, channel, mean=0., std=.02):super(ImplicitA, self).__init__()self.channel = channelself.mean = meanself.std = std# 全0矩阵self.implicit = nn.Parameter(torch.zeros(1, channel, 1, 1))nn.init.normal_(self.implicit, mean=self.mean, std=self.std)def forward(self, x):# 全0矩阵 与 输入 相加return self.implicit + x# Multiply

class ImplicitM(nn.Module):def __init__(self, channel, mean=0., std=.02):super(ImplicitM, self).__init__()self.channel = channelself.mean = meanself.std = std# 全1矩阵self.implicit = nn.Parameter(torch.ones(1, channel, 1, 1))nn.init.normal_(self.implicit, mean=self.mean, std=self.std)def forward(self, x):# 全1矩阵 与 输入相乘return self.implicit * x

训练时

在模型训练阶段,先对输入进行ImplicitA操作, 在进行1*1卷积,最后进行ImplicitM操作:

class IDetect(nn.Module):stride = None # strides computed during buildexport = False # onnx exportend2end = Falseinclude_nms = Falsedef __init__(self, nc=80, anchors=(), ch=()): # detection layersuper(IDetect, self).__init__()self.nc = nc # number of classesself.no = nc + 5 # number of outputs per anchorself.nl = len(anchors) # number of detection layersself.na = len(anchors[0]) // 2 # number of anchorsself.grid = [torch.zeros(1)] * self.nl # init grida = torch.tensor(anchors).float().view(self.nl, -1, 2)self.register_buffer('anchors', a) # shape(nl,na,2)self.register_buffer('anchor_grid', a.clone().view(self.nl, 1, -1, 1, 1, 2)) # shape(nl,1,na,1,1,2)self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch) # output conv# 初始化隐性知识self.ia = nn.ModuleList(ImplicitA(x) for x in ch)self.im = nn.ModuleList(ImplicitM(self.no * self.na) for _ in ch)def forward(self, x):# x = x.copy() # for profilingz = [] # inference outputself.training |= self.exportfor i in range(self.nl):# 加入隐性知识x[i] = self.m[i](self.ia[i](x[i])) # convx[i] = self.im[i](x[i])bs, _, ny, nx = x[i].shape # x(bs,255,20,20) to x(bs,3,20,20,85)x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()if not self.training: # inferenceif self.grid[i].shape[2:4] != x[i].shape[2:4]:self.grid[i] = self._make_grid(nx, ny).to(x[i].device)y = x[i].sigmoid()y[..., 0:2] = (y[..., 0:2] * 2. - 0.5 + self.grid[i]) * self.stride[i] # xyy[..., 2:4] = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i] # whz.append(y.view(bs, -1, self.no))return x if self.training else (torch.cat(z, 1), x)

推理时

在模型推理阶段,将ImplicitA-Conv-ImplicitM融合为一个1*1的Conv操作:

# 将隐性知识与Detect层的1*1卷积进行融合

def fuse(self):print("IDetect.fuse")# fuse ImplicitA and Convolutionfor i in range(len(self.m)):c1, c2, _, _ = self.m[i].weight.shapec1_, c2_, _, _ = self.ia[i].implicit.shapeself.m[i].bias += torch.matmul(self.m[i].weight.reshape(c1, c2),self.ia[i].implicit.reshape(c2_, c1_)).squeeze(1)# fuse ImplicitM and Convolutionfor i in range(len(self.m)):c1, c2, _, _ = self.im[i].implicit.shapeself.m[i].bias *= self.im[i].implicit.reshape(c2)self.m[i].weight *= self.im[i].implicit.transpose(0, 1)

References

[1] 深入浅出 Yolo 系列之 Yolov7 基础网络结构详解

[2] 【yolov7系列】网络框架细节拆解

[3] yolov7-GradCAM