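Author: Zhang Hua (张华), published 2016-12-06

Copyright notice: this article may be freely reproduced. When reproducing it, please credit the original source, the author and this copyright notice in the form of a hyperlink.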

( http://blog.csdn.net/quqi99 )

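Hardware preparation

My test hardware is listed below. Both the CPU and the motherboard must support VT-x and VT-d, and the NIC must support SR-IOV:

- Intel Core i5-4590 boxed CPU, 22 nm Haswell (LGA1150 / 3.3 GHz / 6 MB L3 cache)

- ASUS B85-PRO GAMER motherboard (Intel B85 / LGA 1150)

- Winyao WY576T PCI-E x4 dual-port gigabit server NIC (Intel 82576)

I also updated the ASUS motherboard BIOS to the latest release, B85-PRO-GAMER-ASUS-2203.zip, so that it works with the i5: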

http://www.asus.com/au/supportonly/B85-PRO%20GAMER/HelpDesk_Download/

http://www.asus.com/au/supportonly/B85-PRO%20GAMER/HelpDesk_CPU/

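Driver preparation

After reloading the igb module with the max_vfs parameter (sudo modprobe -r igb && sudo modprobe igb max_vfs=2), lspci shows that the physical NIC has been registered as multiple SR-IOV network devices. To make this persistent:

1, vi /etc/modprobe.d/igb.conf and add: options igb max_vfs=2

2, Rebuild the initramfs for all kernels so the option is applied at boot: update-initramfs -k all -t -u

3, The igbvf driver is the VF driver meant to run inside the guests, so the host should not load it. Blacklist it in /etc/modprobe.d/blacklist-igbvf.conf: blacklist igbvf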
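Besides lspci, the VF count of a physical function can be read from the standard SR-IOV sysfs attributes sriov_numvfs and sriov_totalvfs under /sys/class/net/<pf>/device/; on drivers that support it, writing a number to sriov_numvfs as root is the generic alternative to the max_vfs module parameter. A minimal Python sketch, assuming those attributes exist for the PF; the helper read_vf_counts is hypothetical:

```python
import os


def read_vf_counts(devname, sysfs_net="/sys/class/net"):
    """Return (sriov_numvfs, sriov_totalvfs) for a PF netdev by reading sysfs."""
    device_dir = os.path.join(sysfs_net, devname, "device")
    counts = []
    for attr in ("sriov_numvfs", "sriov_totalvfs"):
        with open(os.path.join(device_dir, attr)) as f:
            counts.append(int(f.read().strip()))
    return tuple(counts)


if __name__ == "__main__":
    # Assumes the first igb PF is named enp6s0f0, as in the output shown in this post.
    try:
        numvfs, totalvfs = read_vf_counts("enp6s0f0")
        print("enp6s0f0: %d of %d VFs enabled" % (numvfs, totalvfs))
    except (OSError, ValueError) as exc:
        print("SR-IOV sysfs attributes not readable:", exc)
```

The sysfs root is a parameter so the function can be pointed at a fake directory tree when testing on a machine without the NIC.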

SRIOV NIC info

$ lspci -nn |grep 82576

06:00.0 Ethernet controller [0200]: Intel Corporation 82576 Gigabit Network Connection [8086:10c9] (rev 01)

06:00.1 Ethernet controller [0200]: Intel Corporation 82576 Gigabit Network Connection [8086:10c9] (rev 01)

07:10.0 Ethernet controller [0200]: Intel Corporation 82576 Virtual Function [8086:10ca] (rev 01)

07:10.1 Ethernet controller [0200]: Intel Corporation 82576 Virtual Function [8086:10ca] (rev 01)

07:10.2 Ethernet controller [0200]: Intel Corporation 82576 Virtual Function [8086:10ca] (rev 01)

07:10.3 Ethernet controller [0200]: Intel Corporation 82576 Virtual Function [8086:10ca] (rev 01)

$ sudo virsh nodedev-list --tree

...
+- pci_0000_00_1c_4
|   |
|   +- pci_0000_06_00_0
|   |   |
|   |   +- net_enp6s0f0_2c_53_4a_02_20_3c
|   |
|   +- pci_0000_06_00_1
|   |   |
|   |   +- net_enp6s0f1_2c_53_4a_02_20_3d
|   |
|   +- pci_0000_07_10_0
|   +- pci_0000_07_10_1
|   +- pci_0000_07_10_2
|   +- pci_0000_07_10_3

$ ip link show enp6s0f0

3: enp6s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

    link/ether 2c:53:4a:02:20:3c brd ff:ff:ff:ff:ff:ff

    vf 0 MAC 00:00:00:00:00:00, spoof checking on, link-state auto

    vf 1 MAC 00:00:00:00:00:00, spoof checking on, link-state auto

$ ip link show enp6s0f1

4: enp6s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

    link/ether 2c:53:4a:02:20:3d brd ff:ff:ff:ff:ff:ff

    vf 0 MAC 00:00:00:00:00:00, spoof checking on, link-state auto

    vf 1 MAC 00:00:00:00:00:00, spoof checking on, link-state auto
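The lspci and ip link output above is what the later configuration keys on: VFs are identified by vendor:product 8086:10ca (used again in supported_pci_vendor_devs and pci_alias), and their PCI addresses reappear as pci_slot in the Neutron binding profile. A small Python sketch that extracts both from captured command output; the function names are hypothetical, and the PCI domain 0000 is an assumption because lspci -nn omits it unless -D is given:

```python
import re

# Matches lines such as:
# 07:10.0 Ethernet controller [0200]: Intel Corporation 82576 Virtual Function [8086:10ca] (rev 01)
LSPCI_RE = re.compile(
    r"^(?P<addr>[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s.*\[(?P<ids>[0-9a-f]{4}:[0-9a-f]{4})\]")

# Matches the per-VF lines that `ip link show <pf>` prints under the PF.
VF_RE = re.compile(r"\bvf (?P<idx>\d+) MAC (?P<mac>[0-9a-f:]{17})")


def find_vfs(lspci_text, vendor_product="8086:10ca", domain="0000"):
    """Return full PCI addresses (domain:bus:slot.func) of devices matching vendor:product."""
    addrs = []
    for line in lspci_text.splitlines():
        m = LSPCI_RE.match(line.strip())
        if m and m.group("ids") == vendor_product:
            addrs.append("%s:%s" % (domain, m.group("addr")))
    return addrs


def parse_vf_macs(ip_link_text):
    """Map VF index -> MAC address from `ip link show <pf>` output."""
    return {int(m.group("idx")): m.group("mac") for m in VF_RE.finditer(ip_link_text)}


if __name__ == "__main__":
    sample = (
        "06:00.0 Ethernet controller [0200]: Intel Corporation 82576 Gigabit Network Connection [8086:10c9] (rev 01)\n"
        "07:10.0 Ethernet controller [0200]: Intel Corporation 82576 Virtual Function [8086:10ca] (rev 01)"
    )
    print(find_vfs(sample))  # ['0000:07:10.0']
```

Passing "8086:10c9" instead returns the two physical functions, which is a quick way to tell PFs and VFs apart.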

Step 1

- Enable IOMMU support

In /etc/default/grub:

GRUB_CMDLINE_LINUX_DEFAULT="iommu=pt intel_iommu=on pci=assign-busses"

Then run sudo update-grub and reboot.
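After the reboot, /proc/cmdline shows whether the options actually reached the kernel. A minimal Python sketch that splits a kernel command line into key/value pairs and checks for intel_iommu=on; parse_cmdline and iommu_enabled are hypothetical helpers, and repeated keys are simply collected rather than resolved:

```python
import shlex


def parse_cmdline(cmdline):
    """Split a kernel command line into {key: [values, ...]}; bare flags map to [None]."""
    params = {}
    for token in shlex.split(cmdline):
        key, sep, value = token.partition("=")
        params.setdefault(key, []).append(value if sep else None)
    return params


def iommu_enabled(cmdline):
    """True if some intel_iommu= option list on the command line contains 'on'."""
    for value in parse_cmdline(cmdline).get("intel_iommu", []):
        if value and "on" in value.split(","):
            return True
    return False


if __name__ == "__main__":
    try:
        with open("/proc/cmdline") as f:
            current = f.read()
        print("intel_iommu=on:", iommu_enabled(current))
    except OSError as exc:  # e.g. not running on Linux
        print("cannot read /proc/cmdline:", exc)
```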

- Set up a common OpenStack env

# Network info

neutron net-create private --provider:network_type flat --provider:physical_network physnet1

neutron subnet-create --allocation-pool start=10.0.1.22,end=10.0.1.122 --gateway 10.0.1.1 private 10.0.1.0/24 --enable_dhcp=True --name private

neutron net-create public -- --router:external=True --provider:network_type flat --provider:physical_network public

neutron subnet-create --allocation-pool start=10.230.56.100,end=10.230.56.104 --gateway 10.230.56.1 public 10.230.56.100/21 --enable_dhcp=False --name public-subnet

neutron router-create router1

EXT_NET_ID=$(neutron net-list |grep ' public ' |awk '{print $2}')

ROUTER_ID=$(neutron router-list |grep ' router1 ' |awk '{print $2}')

SUBNET_ID=$(neutron subnet-list |grep '10.0.1.0/24' |awk '{print $2}')

neutron router-interface-add $ROUTER_ID $SUBNET_ID

neutron router-gateway-set $ROUTER_ID $EXT_NET_ID
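Before running subnet-create it can help to check that each allocation pool and gateway really fall inside the CIDR. A sketch using Python's standard ipaddress module; check_subnet is a hypothetical helper. Note that the public subnet above is written as 10.230.56.100/21, which has host bits set; the sketch flags this instead of assuming how Neutron normalizes or rejects such a CIDR:

```python
import ipaddress


def check_subnet(cidr, pool_start, pool_end, gateway=None):
    """Return (network, problems) for a subnet definition like those passed to subnet-create."""
    net = ipaddress.ip_network(cidr, strict=False)  # strict=False masks off any host bits
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)
    problems = []
    if str(net) != cidr:
        problems.append("%s has host bits set; the network is %s" % (cidr, net))
    if start not in net or end not in net:
        problems.append("allocation pool is outside the CIDR")
    elif start > end:
        problems.append("allocation pool start is after its end")
    if gateway is not None:
        gw = ipaddress.ip_address(gateway)
        if gw not in net:
            problems.append("gateway is outside the CIDR")
        elif start <= gw <= end:
            problems.append("gateway falls inside the allocation pool")
    return net, problems


if __name__ == "__main__":
    # The private and public subnets created above.
    for args in (("10.0.1.0/24", "10.0.1.22", "10.0.1.122", "10.0.1.1"),
                 ("10.230.56.100/21", "10.230.56.100", "10.230.56.104", "10.230.56.1")):
        net, problems = check_subnet(*args)
        print(net, problems or "ok")
```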

Step 2, Enable SRIOV support

- /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2_type_flat]

flat_networks = physnet1,physnet2,sriov1,sriov2

[ml2_sriov]

supported_pci_vendor_devs = 8086:10ca

[securitygroup]

firewall_driver = neutron.agent.firewall.NoopFirewallDriver

[sriov_nic]

physical_device_mappings = sriov1:enp6s0f0,sriov2:enp6s0f1

exclude_devices =
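Every physnet used in physical_device_mappings has to be a flat network known to ML2, otherwise the sriov1/sriov2 networks created later cannot be bound. A Python sketch using the standard configparser module that parses the mapping and cross-checks it against flat_networks; the helper names are hypothetical and this is not Neutron's own parsing code:

```python
import configparser

ML2_SRIOV = """
[ml2_type_flat]
flat_networks = physnet1,physnet2,sriov1,sriov2

[ml2_sriov]
supported_pci_vendor_devs = 8086:10ca

[securitygroup]
firewall_driver = neutron.agent.firewall.NoopFirewallDriver

[sriov_nic]
physical_device_mappings = sriov1:enp6s0f0,sriov2:enp6s0f1
exclude_devices =
"""


def device_mappings(conf):
    """Parse physical_device_mappings into {physnet: [pf_devname, ...]}."""
    mappings = {}
    raw = conf.get("sriov_nic", "physical_device_mappings", fallback="")
    for item in filter(None, (s.strip() for s in raw.split(","))):
        physnet, _, dev = item.partition(":")
        mappings.setdefault(physnet, []).append(dev)
    return mappings


def undeclared_physnets(conf):
    """Physnets mapped to a PF but missing from [ml2_type_flat] flat_networks."""
    raw = conf.get("ml2_type_flat", "flat_networks", fallback="")
    flat = {s.strip() for s in raw.split(",") if s.strip()}
    return sorted(set(device_mappings(conf)) - flat)


if __name__ == "__main__":
    conf = configparser.ConfigParser()
    conf.read_string(ML2_SRIOV)
    print(device_mappings(conf))      # {'sriov1': ['enp6s0f0'], 'sriov2': ['enp6s0f1']}
    print(undeclared_physnets(conf))  # []
```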

- Start neutron-sriov-nic-agent process

neutron-sriov-nic-agent --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini

- Modify /etc/nova/nova.conf, then restart the nova-scheduler process

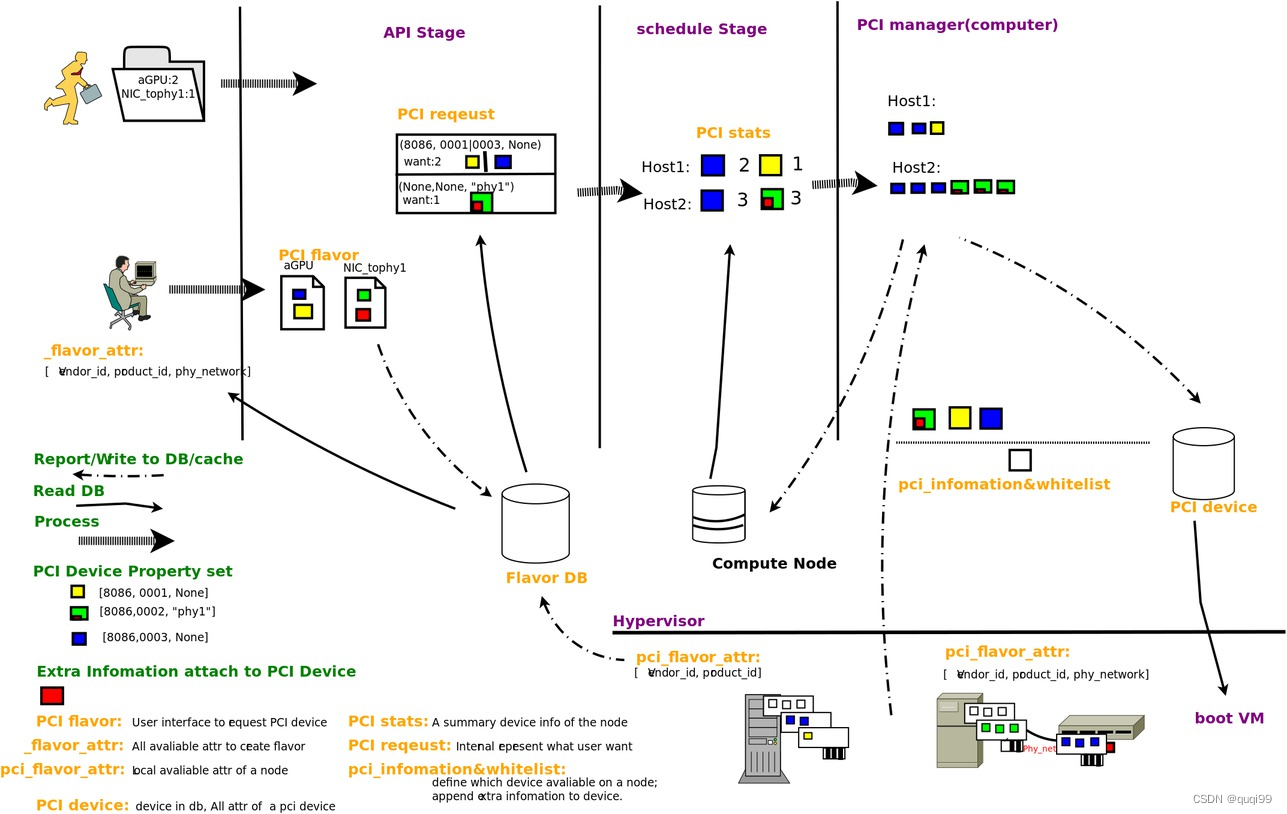

Note: passthrough_whitelist is used by nova-compute, scheduler_available_filters by nova-scheduler, and pci_alias by nova-api; see https://wiki.openstack.org/wiki/PCI_passthrough_SRIOV_support

[pci]

passthrough_whitelist = {"devname": "enp6s0f0", "physical_network": "sriov1"}

passthrough_whitelist = {"devname": "enp6s0f1", "physical_network": "sriov2"}

[DEFAULT]

pci_alias={"vendor_id":"8086", "product_id":"10ca", "name":"a1"}

scheduler_available_filters = nova.scheduler.filters.all_filters

scheduler_default_filters = RetryFilter, AvailabilityZoneFilter, RamFilter, ComputeFilter, ComputeCapabilitiesFilter, ImagePropertiesFilter, PciPassthroughFilter
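Each passthrough_whitelist value and the pci_alias value is a JSON dict, and passthrough_whitelist is repeated (it is a multi-valued oslo.config option). Python's configparser would reject the repeated key in its default strict mode (DuplicateOptionError), so this sanity-check sketch works on the raw JSON strings instead. physnet_for_pf and alias_matches are hypothetical helpers, not Nova's devspec matching logic:

```python
import json

# The two [pci] passthrough_whitelist values and the pci_alias value from nova.conf above.
WHITELIST = [
    '{"devname": "enp6s0f0", "physical_network": "sriov1"}',
    '{"devname": "enp6s0f1", "physical_network": "sriov2"}',
]
ALIAS = '{"vendor_id":"8086", "product_id":"10ca", "name":"a1"}'


def physnet_for_pf(devname, whitelist=WHITELIST):
    """Return the physical_network tag of the whitelist entry for a PF devname, or None."""
    for raw in whitelist:
        spec = json.loads(raw)
        if spec.get("devname") == devname:
            return spec.get("physical_network")
    return None


def alias_matches(vendor_id, product_id, alias=ALIAS):
    """True if a device's vendor/product IDs match the alias (hex compared case-insensitively)."""
    spec = json.loads(alias)
    wanted = (spec["vendor_id"].lower(), spec["product_id"].lower())
    return wanted == (vendor_id.lower(), product_id.lower())


if __name__ == "__main__":
    print(physnet_for_pf("enp6s0f1"))     # sriov2
    print(alias_matches("8086", "10CA"))  # True
```

A JSON typo in either option (for example a missing quote) makes json.loads raise immediately, which is usually easier to spot than a service that silently ignores the device.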

Step 3, Create sriov network

neutron net-create sriov_net --provider:network_type flat --provider:physical_network sriov1

neutron subnet-create --allocation-pool start=192.168.9.122,end=192.168.9.129 sriov_net 192.168.9.0/24 --enable_dhcp=False --name=sriov_subnet

Step 4, Boot test VM

nova keypair-add --pub-key ~/.ssh/id_rsa.pub mykey

nova secgroup-add-rule default tcp 22 22 0.0.0.0/0

nova secgroup-add-rule default icmp -1 -1 0.0.0.0/0

nova flavor-key m1.medium set "pci_passthrough:alias"="a1:1"

neutron port-create sriov_net --name sriov_port --binding:vnic-type direct

nova boot --key-name mykey --image trusty-server-cloudimg-amd64-disk1 --flavor m1.medium --nic port-id=$(neutron port-list |grep ' sriov_port ' |awk '{print $2}') i1

FLOATING_IP=$(nova floating-ip-create |grep 'public' |awk '{print $4}')

nova add-floating-ip i1 $FLOATING_IP

sudo ip addr add 192.168.101.1/24 dev br-ex

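ssh ubuntu@192.168.101.101 -v

Alternatively, create the SR-IOV port with an explicit binding:profile and attach it to the existing instance; this is equivalent to the port-create and boot steps above: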

neutron port-create sriov_net --name sriov_port --binding:host_id desktop --binding:vnic-type direct --binding:profile type=dict pci_vendor_info=8086:10ca,pci_slot=0000:07:10.0,physical_network=sriov1

nova interface-attach --port-id $(neutron port-show sriov_port |grep ' id ' |awk -F '|' '{print $3}') i1
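The grep | awk pipelines in this step pull an ID out of the ASCII tables printed by the neutron client. The same extraction in Python, as a sketch that assumes the usual "| Field | Value |" (show) and "| id | name | ... |" (list) table layouts; the helper names are hypothetical. One design difference: grep ' sriov_port ' matches the name anywhere on the line, while row_id_by_name compares only the name column:

```python
def _cells(line):
    """Split one '| a | b |' table row into stripped cells; border rows give a single cell."""
    return [c.strip() for c in line.strip().strip("|").split("|")]


def table_value(table_text, field):
    """Value column for `field` in a show table (like grep ' id ' | awk -F '|' '{print $3}')."""
    for line in table_text.splitlines():
        cells = _cells(line)
        if len(cells) >= 2 and cells[0] == field:
            return cells[1]
    return None


def row_id_by_name(table_text, name):
    """id of the row whose name column equals `name` in a list table."""
    header = None
    for line in table_text.splitlines():
        if not line.strip().startswith("|"):
            continue  # skip +----+ border rows
        cells = _cells(line)
        if header is None:
            header = cells  # first | row holds the column names
            continue
        row = dict(zip(header, cells))
        if row.get("name") == name:
            return row.get("id")
    return None
```

For example, table_value(port_show_output, "id") returns the port ID that nova interface-attach expects, where port_show_output is the captured text of neutron port-show sriov_port.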

Step 5, Problem Fix

1, VFIO_MAP_DMA cannot allocate memory. Workaround: disable the AppArmor profiles for libvirtd and virt-aa-helper:

ln -s /etc/apparmor.d/usr.sbin.libvirtd /etc/apparmor.d/disable/

ln -s /etc/apparmor.d/usr.lib.libvirt.virt-aa-helper /etc/apparmor.d/disable/

apparmor_parser -R /etc/apparmor.d/usr.sbin.libvirtd

apparmor_parser -R /etc/apparmor.d/usr.lib.libvirt.virt-aa-helper

Step 6, Code analysis

1, Running 'nova boot' invokes _validate_and_build_base_options.

pci_request.get_pci_requests_from_flavor builds an InstancePCIRequests object (fields instance_uuid and requests, a list of InstancePCIRequest(count, spec, alias_name, is_new, request_id)) from the flavor's pci_alias extra spec.

self.network_api.create_pci_requests_for_sriov_ports then adds one InstancePCIRequest per SR-IOV port and fills in request_net.pci_request_id.

def _validate_and_build_base_options(...):
    ...
    # PCI requests come from two sources: instance flavor and
    # requested_networks. The first call in below returns an
    # InstancePCIRequests object which is a list of InstancePCIRequest
    # objects. The second call in below creates an InstancePCIRequest
    # object for each SR-IOV port, and append it to the list in the
    # InstancePCIRequests object
    pci_request_info = pci_request.get_pci_requests_from_flavor(
        instance_type)
    self.network_api.create_pci_requests_for_sriov_ports(context,
        pci_request_info, requested_networks)

def get_pci_requests_from_flavor(flavor):
    pci_requests = []
    if ('extra_specs' in flavor and
            'pci_passthrough:alias' in flavor['extra_specs']):
        pci_requests = _translate_alias_to_requests(
            flavor['extra_specs']['pci_passthrough:alias'])
    return objects.InstancePCIRequests(requests=pci_requests)

The fields of InstancePCIRequests are:

fields = {
    'instance_uuid': fields.UUIDField(),
    'requests': fields.ListOfObjectsField('InstancePCIRequest'),
}

2, build_and_run_instance invokes instance_claim, which calls _test_pci to claim PCI devices for the instance; the InstancePCIRequests are looked up by instance_uuid.

def _test_pci(self):
    pci_requests = objects.InstancePCIRequests.get_by_instance_uuid(
        self.context, self.instance.uuid)
    if pci_requests.requests:
        devs = self.tracker.pci_tracker.claim_instance(self.context,
                                                      self.instance)
        if not devs:
            return _('Claim pci failed.')

def _claim_instance(self, context, instance, prefix=''):
    pci_requests = objects.InstancePCIRequests.get_by_instance(context, instance)
    ...
    devs = self.stats.consume_requests(pci_requests.requests, instance_cells)
    ...
    return devs

3, Finally, the pci_request_id is passed to _populate_neutron_binding_profile, which uses pci_manager.get_instance_pci_devs to find the PCI device allocated to the instance and then sets binding:profile on the Neutron port.

def _populate_neutron_binding_profile(instance, pci_request_id, port_req_body):
    if pci_request_id:
        pci_dev = pci_manager.get_instance_pci_devs(
            instance, pci_request_id).pop()
        devspec = pci_whitelist.get_pci_device_devspec(pci_dev)
        profile = {'pci_vendor_info': "%s:%s" % (pci_dev.vendor_id,
                                                 pci_dev.product_id),
                   'pci_slot': pci_dev.address,
                   'physical_network':
                       devspec.get_tags().get('physical_network')
                   }
        port_req_body['port']['binding:profile'] = profile

NOTE: for the os-attach-interfaces extension API, allocate_port_for_instance (called by attach_interface) simply passes pci_request_id=None before calling self.allocate_for_instance / _populate_neutron_binding_profile, so no binding:profile is filled in. It therefore seems that 'nova interface-attach' cannot give an existing VM an SR-IOV port this way; the VM apparently has to be re-deployed after pci_alias is defined for its flavor.
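To make the data flow above concrete, here is a heavily simplified, self-contained Python model of it: translate a flavor alias value such as "a1:1" into requests, claim matching free VFs from a pool, then build the binding:profile dict with the same three keys _populate_neutron_binding_profile sets. All classes and functions here (PciDevice, PciRequest, translate_alias, claim, binding_profile) are hypothetical stand-ins, not Nova's objects; NUMA affinity, pool statistics and persistence are ignored, and the physnet tags in the usage are illustrative:

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PciDevice:
    """Hypothetical stand-in for a Nova PCI device record."""
    address: str                      # e.g. "0000:07:10.0"
    vendor_id: str
    product_id: str
    physical_network: str             # tag from the matching passthrough_whitelist entry
    request_id: Optional[str] = None  # set when the device is claimed


@dataclass
class PciRequest:
    """Hypothetical stand-in for InstancePCIRequest."""
    count: int
    spec: Dict[str, str]
    alias_name: Optional[str] = None
    request_id: str = field(default_factory=lambda: str(uuid.uuid4()))


def translate_alias(extra_spec: str, aliases: Dict[str, Dict[str, str]]) -> List[PciRequest]:
    """Turn a value such as 'a1:1' into requests (count defaults to 1 in this sketch)."""
    requests = []
    for item in extra_spec.split(","):
        name, _, count = item.strip().partition(":")
        requests.append(PciRequest(int(count or 1), aliases[name], alias_name=name))
    return requests


def claim(pool: List[PciDevice], requests: List[PciRequest]) -> Optional[List[PciDevice]]:
    """Claim free devices matching each request's vendor/product spec, all or nothing."""
    picked = []  # (device, request_id) pairs, committed only if every request fits
    taken = set()
    for req in requests:
        free = [d for d in pool
                if d.request_id is None and d.address not in taken
                and d.vendor_id == req.spec["vendor_id"]
                and d.product_id == req.spec["product_id"]]
        if len(free) < req.count:
            return None  # the analogue of 'Claim pci failed.'
        for dev in free[:req.count]:
            taken.add(dev.address)
            picked.append((dev, req.request_id))
    for dev, request_id in picked:
        dev.request_id = request_id
    return [dev for dev, _ in picked]


def binding_profile(pool: List[PciDevice], request_id: Optional[str]) -> Optional[dict]:
    """Build the binding:profile keys for the device claimed under request_id."""
    if not request_id:  # like the interface-attach path, which passes pci_request_id=None
        return None
    dev = next(d for d in pool if d.request_id == request_id)
    return {"pci_vendor_info": "%s:%s" % (dev.vendor_id, dev.product_id),
            "pci_slot": dev.address,
            "physical_network": dev.physical_network}


if __name__ == "__main__":
    aliases = {"a1": {"vendor_id": "8086", "product_id": "10ca"}}  # from pci_alias "a1"
    pool = [PciDevice("0000:07:10.0", "8086", "10ca", "sriov1"),
            PciDevice("0000:07:10.1", "8086", "10ca", "sriov2")]
    reqs = translate_alias("a1:1", aliases)
    claim(pool, reqs)
    print(binding_profile(pool, reqs[0].request_id))
    # {'pci_vendor_info': '8086:10ca', 'pci_slot': '0000:07:10.0', 'physical_network': 'sriov1'}
```

The claim is all-or-nothing so that a partially satisfied request does not leave devices marked as used, and a None request ID yields no profile, mirroring the NOTE about interface-attach.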
