一:工具:

查看模型工具netron

1.https://github.com/lutzroeder/netron/releases

可以查看训练好的模型的输入结构

下载安装好netron ,然后注册右键菜单,弄一个xx.reg ,运行一下

Windows Registry Editor Version 5.00[HKEY_CLASSES_ROOT\Directory\Background\shell\OpenNetron]

@="Open with Netron"

"Icon"="C:\\Users\\Administrator\\AppData\\Local\\Programs\\netron\\Netron.exe"[HKEY_CLASSES_ROOT\Directory\Background\shell\OpenNetron\command]

@="\"C:\\Users\\Administrator\\AppData\\Local\\Programs\\netron\\Netron.exe\""

pip install ultralytics 安装 ultralytics库

1.先写 py2onnx.py代码

from ultralytics import YOLOmodel = YOLO("best.pt")

success = model.export(format="onnx", opset=11, simplify=True) # export the model to onnx format

assert success

二:onnxruntime x86库(我是易语言,所以用x86,首先得用vs编一个32位的dll)

https://github.com/microsoft/onnxruntime/releases?page=2

直接用大哥的代码:一个脚本搞定yolov5和yolov8在c++环境中加载onnx模型格式仅调用opencv部署_c++ 下 图片测试 opencv 部署yolo5 onnx 模型-CSDN博客

然后再稍微改一下类别,我的模型只有一个输入和一个输出

下面是代码

#include <fstream>

#include <vector>

#include <string>

#include <random>// OpenCV / DNN / Inference

#include <opencv2/imgproc.hpp>

#include <opencv2/opencv.hpp>

#include <opencv2/dnn.hpp>using namespace cv;

using namespace std;struct Detection

{int class_id{ 0 };std::string className{};float confidence{ 0.0 };cv::Scalar color{};cv::Rect box{};

};class Inference

{

public:Inference(const std::string& onnxModelPath, const cv::Size& modelInputShape, const std::string& classesTxtFile, const bool& runWithCuda){modelPath = onnxModelPath;modelShape = modelInputShape;classesPath = classesTxtFile;cudaEnabled = runWithCuda;loadOnnxNetwork();}

private:std::string modelPath;std::string classesPath;bool cudaEnabled;cv::Size2f modelShape;cv::dnn::Net net;bool letterBoxForSquare = true;std::vector<std::string> classes{ "goal"};float modelConfidenceThreshold{ 0.25 };float modelScoreThreshold{ 0.45 };float modelNMSThreshold{ 0.50 };private:void loadOnnxNetwork(){net = cv::dnn::readNetFromONNX(modelPath);if (cudaEnabled){std::cout << "\nRunning on CUDA" << std::endl;net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);}else{std::cout << "\nRunning on CPU" << std::endl;net.setPreferableBackend(cv::dnn::DNN_BACKEND_OPENCV);net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);}}cv::Mat formatToSquare(const cv::Mat& source){int col = source.cols;int row = source.rows;int _max = MAX(col, row);cv::Mat result = cv::Mat::zeros(_max, _max, CV_8UC3);source.copyTo(result(cv::Rect(0, 0, col, row)));return result;}

public:std::vector<Detection> runInference(const cv::Mat& input){cv::Mat modelInput = input;if (letterBoxForSquare && modelShape.width == modelShape.height)modelInput = formatToSquare(modelInput);cv::Mat blob;cv::dnn::blobFromImage(modelInput, blob, 1.0 / 255.0, modelShape, cv::Scalar(), true, false);net.setInput(blob);std::vector<cv::Mat> outputs;net.forward(outputs, net.getUnconnectedOutLayersNames());int rows = outputs[0].size[1];int dimensions = outputs[0].size[2];bool yolov8 = false;// yolov5 has an output of shape (batchSize, 25200, 85) (Num classes + box[x,y,w,h] + confidence[c])// yolov8 has an output of shape (batchSize, 84, 8400) (Num classes + box[x,y,w,h])if (dimensions > rows) // Check if the shape[2] is more than shape[1] (yolov8){yolov8 = true;rows = outputs[0].size[2];dimensions = outputs[0].size[1];outputs[0] = outputs[0].reshape(1, dimensions);cv::transpose(outputs[0], outputs[0]);}float* data = (float*)outputs[0].data;float x_factor = modelInput.cols / modelShape.width;float y_factor = modelInput.rows / modelShape.height;std::vector<int> class_ids;std::vector<float> confidences;std::vector<cv::Rect> boxes;for (int i = 0; i < rows; ++i){if (yolov8){float* classes_scores = data + 4;cv::Mat scores(1, classes.size(), CV_32FC1, classes_scores);cv::Point class_id;double maxClassScore;minMaxLoc(scores, 0, &maxClassScore, 0, &class_id);if (maxClassScore > modelScoreThreshold){confidences.push_back(maxClassScore);class_ids.push_back(class_id.x);float x = data[0];float y = data[1];float w = data[2];float h = data[3];int left = int((x - 0.5 * w) * x_factor);int top = int((y - 0.5 * h) * y_factor);int width = int(w * x_factor);int height = int(h * y_factor);boxes.push_back(cv::Rect(left, top, width, height));}}else // yolov5{float confidence = data[4];if (confidence >= modelConfidenceThreshold){float* classes_scores = data + 5;cv::Mat scores(1, classes.size(), CV_32FC1, classes_scores);cv::Point class_id;double max_class_score;minMaxLoc(scores, 0, &max_class_score, 0, &class_id);if (max_class_score > modelScoreThreshold){confidences.push_back(confidence);class_ids.push_back(class_id.x);float x = data[0];float y = data[1];float w = data[2];float h = data[3];int left = int((x - 0.5 * w) * x_factor);int top = int((y - 0.5 * h) * y_factor);int width = int(w * x_factor);int height = int(h * y_factor);boxes.push_back(cv::Rect(left, top, width, height));}}}data += dimensions;}std::vector<int> nms_result;cv::dnn::NMSBoxes(boxes, confidences, modelScoreThreshold, modelNMSThreshold, nms_result);std::vector<Detection> detections{};for (unsigned long i = 0; i < nms_result.size(); ++i){int idx = nms_result[i];Detection result;result.class_id = class_ids[idx];result.confidence = confidences[idx];std::random_device rd;std::mt19937 gen(rd());std::uniform_int_distribution<int> dis(100, 255);result.color = cv::Scalar(dis(gen),dis(gen),dis(gen));result.className = classes[result.class_id];result.box = boxes[idx];detections.push_back(result);}return detections;}void loadClassesFromFile(){std::ifstream inputFile(classesPath);if (inputFile.is_open()){std::string classLine;while (std::getline(inputFile, classLine))classes.push_back(classLine);inputFile.close();}}

};int main(int argc, char** argv)

{std::string projectBasePath = "D:\\C_++\\yolov8_onnx";bool runOnGPU = false;Inference inf(projectBasePath + "/best.onnx", cv::Size(640, 640), "classes.txt", runOnGPU);std::vector<std::string> imageNames;imageNames.push_back(projectBasePath + "/image.png");for (int i = 0; i < imageNames.size(); ++i){cv::Mat frame = cv::imread(imageNames[i]);// Inference starts here...std::vector<Detection> output = inf.runInference(frame);int detections = output.size();std::cout << "Number of detections:" << detections << std::endl;for (int i = 0; i < detections; ++i){Detection detection = output[i];cv::Rect box = detection.box;cv::Scalar color = detection.color;// Detection boxcv::rectangle(frame, box, color, 2);// Detection box textstd::string classString = detection.className + ' ' + std::to_string(detection.confidence).substr(0, 4);cv::Size textSize = cv::getTextSize(classString, cv::FONT_HERSHEY_DUPLEX, 1, 2, 0);cv::Rect textBox(box.x, box.y - 40, textSize.width + 10, textSize.height + 20);cv::rectangle(frame, textBox, color, cv::FILLED);cv::putText(frame, classString, cv::Point(box.x + 5, box.y - 10), cv::FONT_HERSHEY_DUPLEX, 1, cv::Scalar(0, 0, 0), 2, 0);}// Inference ends here...// This is only for preview purposesfloat scale = 0.8;cv::resize(frame, frame, cv::Size(frame.cols * scale, frame.rows * scale));cv::imshow("Inference", frame);cv::waitKey(-1);}std::cout << "-----------------------------" << std::endl;std::cout << "dd" << std::endl;return 0;

}

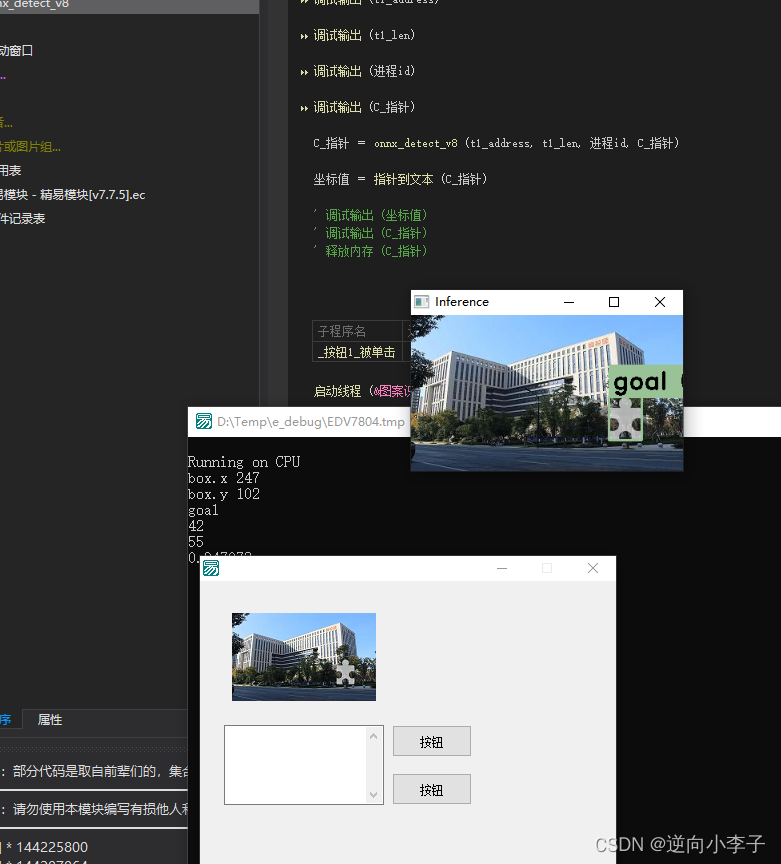

最后移植到易上调用