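Learning oneAPI: Implementing Matrix Multiplication with oneAPI and Analyzing Performance Bottlenecks

- I. Related Links

- II. Introduction to oneAPI

- III. A Brief Introduction to Matrix Multiplication

- IV. Environment Setup

- V. Listing Available Devices

- VI. Basic Implementation

  - Code Explanation

- VII. Local Memory Implementation

  - Code Explanation

- VIII. Performance Analysis

  - 1. Running a Performance Analysis

  - 2. Common Analysis Types

  - 3. Interpreting the Results

  - 4. Optimization Suggestions

  - 5. Cleaning Up Analysis Data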


I. Related Links

- Get the Intel® oneAPI Base Toolkit

- oneapi_docs

- installation-guide-linux

- oneAPI-samples

- Intel® oneAPI Programming Guide

- Intel® VTune™ Profiler User Guide

- SYCL Specification

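II. Introduction to oneAPI

Intel® oneAPI is a cross-platform programming model that provides a unified development experience across different compute architectures (such as CPUs, GPUs, and FPGAs). With Data Parallel C++ (DPC++), developers can write high-performance parallel code that makes full use of the hardware's compute capability.

This article implements matrix multiplication with oneAPI and then uses Intel® VTune™ Profiler to analyze the program's performance and locate likely bottlenecks.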

三.矩阵乘法简介

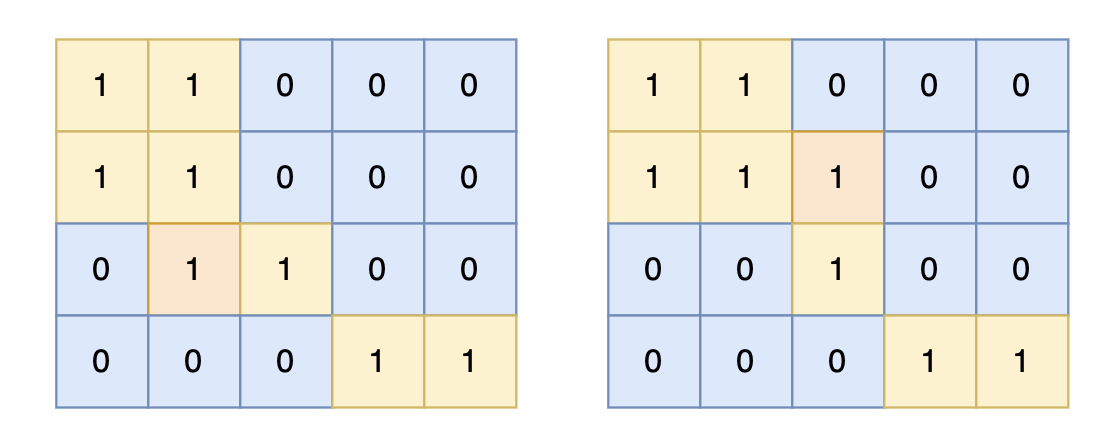

矩阵乘法是线性代数中的基本操作,其计算如下:

给定矩阵 A(尺寸为 M×K)和矩阵 B(尺寸为 K×N),它们的乘积矩阵 C(尺寸为 M×N)的每个元素计算为:

C[i][j] = Σ(k=0 到 K-1) A[i][k] * B[k][j]
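The formula above can be sketched as a plain triple loop on the host. This is a minimal single-threaded reference, not part of the original article; the function name `matmul_reference` is hypothetical, and the matrices are assumed to be stored row-major in flat vectors, the same layout the SYCL programs later in this article use.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical reference implementation (illustration only).
// Computes C = A * B with row-major flat storage:
// A is M x K, B is K x N, and the returned C is M x N.
std::vector<float> matmul_reference(const std::vector<float>& A,
                                    const std::vector<float>& B,
                                    std::size_t M, std::size_t N, std::size_t K) {
    assert(A.size() == M * K && B.size() == K * N);
    std::vector<float> C(M * N, 0.0f);
    for (std::size_t i = 0; i < M; ++i) {
        for (std::size_t j = 0; j < N; ++j) {
            float sum = 0.0f;
            // Inner product of row i of A with column j of B.
            for (std::size_t k = 0; k < K; ++k) {
                sum += A[i * K + k] * B[k * N + j];
            }
            C[i * N + j] = sum;
        }
    }
    return C;
}
```

For example, multiplying the 2×3 matrix {1, 2, 3, 4, 5, 6} by the 3×2 matrix {7, 8, 9, 10, 11, 12} yields the 2×2 matrix {58, 64, 139, 154}.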

IV. Environment Setup

wget https://registrationcenter-download.intel.com/akdlm/IRC_NAS/e6ff8e9c-ee28-47fb-abd7-5c524c983e1c/l_BaseKit_p_2024.2.1.100_offline.sh

sudo sh ./l_BaseKit_p_2024.2.1.100_offline.sh -a --silent --cli --eula accept

source /opt/intel/oneapi/setvars.sh

V. Listing Available Devices

tee sycl_devices.cpp<<-'EOF'
#include <sycl/sycl.hpp>
#include <iostream>
#include <vector>

int main() {
    // Enumerate every device visible to the SYCL runtime
    std::vector<sycl::device> devices = sycl::device::get_devices();
    for (const auto& dev : devices) {
        std::cout << "Device name: " << dev.get_info<sycl::info::device::name>() << std::endl;
        std::cout << "Vendor: " << dev.get_info<sycl::info::device::vendor>() << std::endl;
        std::cout << "Driver version: " << dev.get_info<sycl::info::device::driver_version>() << std::endl;
        std::cout << "Device type: ";
        switch (dev.get_info<sycl::info::device::device_type>()) {
            case sycl::info::device_type::cpu:
                std::cout << "CPU" << std::endl;
                break;
            case sycl::info::device_type::gpu:
                std::cout << "GPU" << std::endl;
                break;
            case sycl::info::device_type::accelerator:
                std::cout << "Accelerator" << std::endl;
                break;
            default:
                std::cout << "Other" << std::endl;
        }
        std::cout << "--------------------------" << std::endl;
    }
    return 0;
}
EOF

icpx -fsycl -O3 -o sycl_devices sycl_devices.cpp

./sycl_devices

- Output

Device name: Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz
Vendor: Intel(R) Corporation
Driver version: 2024.18.7.0.11_160000
Device type: CPU

VI. Basic Implementation

tee matrix_multiplication.cpp<<-'EOF'
#include <sycl/sycl.hpp>
#include <chrono>
#include <exception>
#include <iostream>
#include <vector>

using namespace sycl;

// Matrix dimensions
constexpr size_t M = 1024; // rows of A and C
constexpr size_t N = 1024; // columns of B and C
constexpr size_t K = 1024; // inner dimension

int main() {
    // Create a queue on the default device (CPU or GPU) with an asynchronous exception handler
    queue q(default_selector_v, [](exception_list e_list) {
        for (std::exception_ptr const& e : e_list) {
            try {
                std::rethrow_exception(e);
            } catch (std::exception const& ex) {
                std::cout << "Caught asynchronous SYCL exception:\n" << ex.what() << std::endl;
            }
        }
    });
    std::cout << "Device: " << q.get_device().get_info<info::device::name>() << std::endl;

    // Allocate and initialize host memory
    std::vector<float> A(M * K);
    std::vector<float> B(K * N);
    std::vector<float> C(M * N, 0.0f);
    for (size_t i = 0; i < M * K; ++i) {
        A[i] = static_cast<float>(i % 100);
    }
    for (size_t i = 0; i < K * N; ++i) {
        B[i] = static_cast<float>(i % 100);
    }

    // Create buffers over the host data
    buffer<float, 1> buffer_A(A.data(), range<1>(M * K));
    buffer<float, 1> buffer_B(B.data(), range<1>(K * N));
    buffer<float, 1> buffer_C(C.data(), range<1>(M * N));

    // Record the start time
    auto start = std::chrono::high_resolution_clock::now();

    // Submit a command group to the queue
    q.submit([&](handler& h) {
        // Read-only accessors for the inputs
        accessor a_A(buffer_A, h, read_only);
        accessor a_B(buffer_B, h, read_only);
        // Write-only accessor for the result; no_init skips copying the old contents
        accessor a_C(buffer_C, h, write_only, no_init);

        // Run the computation in parallel: one work-item per element of C
        h.parallel_for(range<2>(M, N), [=](id<2> index) {
            size_t row = index[0];
            size_t col = index[1];
            float sum = 0.0f;
            for (size_t k = 0; k < K; ++k) {
                sum += a_A[row * K + k] * a_B[k * N + col];
            }
            a_C[row * N + col] = sum;
        });
    }).wait(); // Wait for the computation to finish

    // Record the end time
    auto end = std::chrono::high_resolution_clock::now();
    std::chrono::duration<float, std::milli> duration = end - start;
    std::cout << "Matrix multiplication finished, elapsed time: " << duration.count() << " ms" << std::endl;
    return 0;
}
EOF

icpx -fsycl -O3 -o matrix_multiplication matrix_multiplication.cpp

./matrix_multiplication

- Output

Device: Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz
Matrix multiplication finished, elapsed time: 213.378 ms

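Code Explanation

- Selecting the device queue: the default selector lets the runtime pick an available device automatically (a GPU or a CPU, for example). To target a specific device type, substitute the GPU or CPU selector (`gpu_selector_v` or `cpu_selector_v` in SYCL 2020).

- Initializing the matrices: matrices A and B are allocated and initialized on the host.

- Creating buffers: each buffer wraps the host data, and the SYCL runtime makes that data available to the device when a kernel accesses it.

- Parallel computation: `parallel_for` runs the computation on the device. The two-dimensional range `range<2>(M, N)` launches M×N work-items, and each work-item computes one element of the result matrix C.

- Waiting for completion: `.wait()` blocks the host until the device computation has finished, and only then does the host code continue.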
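The program above times the kernel but never checks the contents of C. As a hedged sketch, a host-side comparison against a trusted reference result could look like the helper below. The name `all_close` and the default tolerance are hypothetical choices, not something the original article uses. Note that in SYCL the host vector `C` is only guaranteed to hold the result once `buffer_C` has been destroyed or the data is read through a `host_accessor`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (illustration only): returns true when every element
// of `result` matches `expected` within a relative tolerance. The scale is
// clamped to at least 1 so values near zero are compared absolutely.
bool all_close(const std::vector<float>& result,
               const std::vector<float>& expected,
               float rel_tol = 1e-4f) {
    assert(result.size() == expected.size());
    for (std::size_t i = 0; i < result.size(); ++i) {
        float scale = std::max(1.0f, std::fabs(expected[i]));
        if (std::fabs(result[i] - expected[i]) > rel_tol * scale) {
            return false;
        }
    }
    return true;
}
```

A tolerance is used rather than exact equality because a parallel or tiled kernel may add the partial products in a different order than the reference, and floating-point addition is not associative.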

VII. Local Memory Implementation

tee matrix_multiplication_opt.cpp<<-'EOF'
#include <sycl/sycl.hpp>
#include <chrono>
#include <exception>
#include <iostream>
#include <vector>

using namespace sycl;

// Matrix dimensions
constexpr size_t M = 1024; // rows of A and C
constexpr size_t N = 1024; // columns of B and C
constexpr size_t K = 1024; // inner dimension
constexpr size_t tile_size = 16; // tile edge length, also the work-group size per dimension

int main() {
    // Create a queue on the default device (CPU or GPU) with an asynchronous exception handler
    queue q(default_selector_v, [](exception_list e_list) {
        for (std::exception_ptr const& e : e_list) {
            try {
                std::rethrow_exception(e);
            } catch (std::exception const& ex) {
                std::cout << "Caught asynchronous SYCL exception:\n" << ex.what() << std::endl;
            }
        }
    });
    std::cout << "Device: " << q.get_device().get_info<info::device::name>() << std::endl;

    // Allocate and initialize host memory
    std::vector<float> A(M * K);
    std::vector<float> B(K * N);
    std::vector<float> C(M * N, 0.0f);
    for (size_t i = 0; i < M * K; ++i) {
        A[i] = static_cast<float>(i % 100);
    }
    for (size_t i = 0; i < K * N; ++i) {
        B[i] = static_cast<float>(i % 100);
    }

    // Create buffers over the host data
    buffer<float, 1> buffer_A(A.data(), range<1>(M * K));
    buffer<float, 1> buffer_B(B.data(), range<1>(K * N));
    buffer<float, 1> buffer_C(C.data(), range<1>(M * N));

    // Record the start time
    auto start = std::chrono::high_resolution_clock::now();

    q.submit([&](handler& h) {
        accessor a_A(buffer_A, h, read_only);
        accessor a_B(buffer_B, h, read_only);
        accessor a_C(buffer_C, h, write_only, no_init);

        // Local memory: one tile of A and one tile of B per work-group
        local_accessor<float, 1> local_A(tile_size * tile_size, h);
        local_accessor<float, 1> local_B(tile_size * tile_size, h);

        // Launch the kernel
        h.parallel_for(nd_range<2>({M, N}, {tile_size, tile_size}), [=](nd_item<2> item) {
            size_t global_row = item.get_global_id(0);
            size_t global_col = item.get_global_id(1);
            size_t local_row = item.get_local_id(0);
            size_t local_col = item.get_local_id(1);
            float sum = 0.0f;
            for (size_t k = 0; k < K; k += tile_size) {
                // Load one element of each tile into local memory
                local_A[local_row * tile_size + local_col] = a_A[global_row * K + k + local_col];
                local_B[local_row * tile_size + local_col] = a_B[(k + local_row) * N + global_col];
                item.barrier(access::fence_space::local_space);

                // Accumulate the partial sum for this tile
                for (size_t t = 0; t < tile_size; ++t) {
                    sum += local_A[local_row * tile_size + t] * local_B[t * tile_size + local_col];
                }
                item.barrier(access::fence_space::local_space);
            }
            a_C[global_row * N + global_col] = sum;
        });
    });
    q.wait();

    // Record the end time
    auto end = std::chrono::high_resolution_clock::now();
    std::chrono::duration<float, std::milli> duration = end - start;
    std::cout << "Matrix multiplication finished, elapsed time: " << duration.count() << " ms" << std::endl;
    return 0;
}
EOF

icpx -fsycl -O3 -o matrix_multiplication_opt matrix_multiplication_opt.cpp

./matrix_multiplication_opt

- Output

Device: Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz
Matrix multiplication finished, elapsed time: 184.403 ms
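To put the two reported timings (213.378 and 184.403 milliseconds) on a common scale, they can be converted into throughput. The sketch below counts one multiply and one add per inner-loop iteration, so 2·M·N·K floating-point operations in total; the function names `gflops` and `speedup` are hypothetical. Since the timed region wraps the whole `submit` and `wait`, the measured time probably also includes runtime overhead such as kernel compilation and data movement, so these figures are best read as lower bounds on kernel throughput.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (illustration only): GFLOP/s for an M x N x K matrix
// product, counting 2 * M * N * K floating-point operations.
double gflops(std::size_t M, std::size_t N, std::size_t K, double elapsed_ms) {
    assert(elapsed_ms > 0.0);
    double flops = 2.0 * static_cast<double>(M) * static_cast<double>(N) *
                   static_cast<double>(K);
    return flops / (elapsed_ms * 1e-3) / 1e9;
}

// Hypothetical helper: ratio of two elapsed times. Values above 1 mean the
// optimized run is faster than the baseline.
double speedup(double baseline_ms, double optimized_ms) {
    assert(baseline_ms > 0.0 && optimized_ms > 0.0);
    return baseline_ms / optimized_ms;
}
```

With M = N = K = 1024, `gflops` gives roughly 10.06 GFLOP/s for the basic version and roughly 11.65 GFLOP/s for the local memory version, and `speedup(213.378, 184.403)` is about 1.16.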

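Code Explanation

- Defining local memory with `local_accessor`: it must be declared inside `submit` and is constructed with a size and the command group handler.

- Using `nd_range`: in `parallel_for`, an `nd_range` specifies both the global range and the local (work-group) range, which is what makes local memory usable.

- Synchronization barriers: `item.barrier()` synchronizes the work-items of a work-group. The first barrier ensures the whole tile has been written to local memory before any work-item reads it, and the second ensures every work-item has finished reading before the next iteration overwrites the tile.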
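The tiling logic can be easier to follow when emulated on the host. The sketch below is an illustration in standard C++, not the author's kernel: each `(tr, tc)` pair plays the role of one work-group, the two small vectors stand in for local memory, and comments mark where the two barriers sit on the device. The function name `matmul_tiled_host` is hypothetical, and the sketch assumes every dimension is a multiple of the tile size, which holds for 1024 and 16.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical host-side emulation of the tiled kernel (illustration only).
// A is M x K, B is K x N, both row-major; returns the M x N product.
std::vector<float> matmul_tiled_host(const std::vector<float>& A,
                                     const std::vector<float>& B,
                                     std::size_t M, std::size_t N, std::size_t K,
                                     std::size_t tile) {
    assert(tile > 0 && M % tile == 0 && N % tile == 0 && K % tile == 0);
    assert(A.size() == M * K && B.size() == K * N);
    std::vector<float> C(M * N, 0.0f);
    std::vector<float> local_A(tile * tile), local_B(tile * tile);
    for (std::size_t tr = 0; tr < M; tr += tile) {        // work-group row
        for (std::size_t tc = 0; tc < N; tc += tile) {    // work-group column
            for (std::size_t k = 0; k < K; k += tile) {
                // Load phase: each (r, c) work-item copies one element per tile.
                for (std::size_t r = 0; r < tile; ++r) {
                    for (std::size_t c = 0; c < tile; ++c) {
                        local_A[r * tile + c] = A[(tr + r) * K + k + c];
                        local_B[r * tile + c] = B[(k + r) * N + tc + c];
                    }
                }
                // Device: first barrier here, so all loads finish before reads.
                for (std::size_t r = 0; r < tile; ++r) {
                    for (std::size_t c = 0; c < tile; ++c) {
                        float sum = 0.0f;
                        for (std::size_t t = 0; t < tile; ++t) {
                            sum += local_A[r * tile + t] * local_B[t * tile + c];
                        }
                        C[(tr + r) * N + tc + c] += sum;
                    }
                }
                // Device: second barrier here, so all reads finish before the
                // next iteration overwrites the tiles.
            }
        }
    }
    return C;
}
```

Each element of A and B is read from the large arrays once per tile and then reused `tile` times from the small arrays, which is the data reuse the local memory version relies on.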

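VIII. Performance Analysis

1. Running a Performance Analysis

- Start a performance analysis of the application:

  Use the `vtune` command-line tool to collect performance data. For example, to run a Hotspots analysis:

  vtune -collect hotspots -result-dir vtune_results ./matrix_multiplication

  This runs the `matrix_multiplication` program, collects hotspot data, and stores it in the `vtune_results` directory.

- View the analysis report:

  After the data has been collected, generate a report:

  vtune -report summary -result-dir vtune_results

  This command produces a summary report showing CPU utilization, thread load, and similar information.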

2. Common Analysis Types

- Hotspots: identifies the code regions that consume the most CPU time.

vtune -collect hotspots -result-dir vtune_hotspots ./matrix_multiplication

vtune: Executing actions 75 % Generating a report
Elapsed Time: 5.643s
    CPU Time: 5.170s
        Effective Time: 5.028s
        Spin Time: 0.094s
            Imbalance or Serial Spinning: 0.010s
            Lock Contention: 0s
            Other: 0.084s
        Overhead Time: 0.048s
            Creation: 0.040s
            Scheduling: 0.008s
            Reduction: 0s
            Atomics: 0s
            Other: 0.000s
    Total Thread Count: 56
    Paused Time: 0s

Top Hotspots
Function                                           Module            CPU Time  % of CPU Time(%)
-------------------------------------------------  ----------------  --------  ----------------
main::{lambda(sycl::_V1::handler&)#1}::operator()  4702a06a83a24278    1.488s             28.8%
sycl::_V1::device_selector::select_device          libsycl.so.7        1.289s             24.9%
memset                                             libc-dynamic.so     1.246s             24.1%
memcmp                                             libc-dynamic.so     0.312s              6.0%
sycl::_V1::queue::submit_impl                      libsycl.so.7        0.208s              4.0%
[Others]                                           N/A                 0.628s             12.1%

Top Tasks
Task Type         Task Time  Task Count  Average Task Time
----------------  ---------  ----------  -----------------
tbb_parallel_for     2.950s         166             0.018s
tbb_custom           0.092s           7             0.013s

Effective Physical Core Utilization: 4.6% (1.300 out of 28)
 | The metric value is low, which may signal a poor physical CPU cores
 | utilization caused by:
 |     - load imbalance
 |     - threading runtime overhead
 |     - contended synchronization
 |     - thread/process underutilization
 |     - incorrect affinity that utilizes logical cores instead of physical
 |       cores
 | Explore sub-metrics to estimate the efficiency of MPI and OpenMP parallelism
 | or run the Locks and Waits analysis to identify parallel bottlenecks for
 | other parallel runtimes.
 |
    Effective Logical Core Utilization: 2.4% (1.368 out of 56)
     | The metric value is low, which may signal a poor logical CPU cores
     | utilization. Consider improving physical core utilization as the first
     | step and then look at opportunities to utilize logical cores, which in
     | some cases can improve processor throughput and overall performance of
     | multi-threaded applications.
     |
Collection and Platform Info
    Application Command Line: ./matrix_multiplication
    Operating System: 5.15.0-119-generic DISTRIB_ID=Ubuntu DISTRIB_RELEASE=20.04 DISTRIB_CODENAME=focal"
    Computer Name: -X99
    Result Size: 8.7 MB
    Collection start time: 05:45:29 13/10/2024 UTC
    Collection stop time: 05:45:36 13/10/2024 UTC
    Collector Type: Event-based counting driver,User-mode sampling and tracing
    CPU
        Name: Intel(R) Xeon(R) Processor code named Broadwell
        Frequency: 2.600 GHz
        Logical CPU Count: 56
        LLC size: 36.7 MB
        Cache Allocation Technology
            Level 2 capability: not detected
            Level 3 capability: available

If you want to skip descriptions of detected performance issues in the report,
enter: vtune -report summary -report-knob show-issues=false -r <my_result_dir>.
Alternatively, you may view the report in the csv format: vtune -report
<report_name> -format=csv.
vtune: Executing actions 100 % done

- Memory Consumption: tracks how much memory the application allocates and consumes over time. Memory bandwidth and cache behavior are covered by a separate VTune analysis type, Memory Access (`-collect memory-access`).

vtune -collect memory-consumption -result-dir vtune_memory ./matrix_multiplication

- Microarchitecture Exploration: analyzes in detail how well the code uses the CPU microarchitecture.

vtune -collect uarch-exploration -result-dir vtune_uarch ./matrix_multiplication

- GPU Offload: examines how work is offloaded to a GPU device. Replace `./your_application` with the program to profile.

vtune -collect gpu-offload -result-dir vtune_gpu ./your_application

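3. Interpreting the Results

- CPU utilization: high CPU utilization may indicate a compute-bound bottleneck, in which case the computation itself needs optimizing. In the Hotspots summary above, effective physical core utilization is only 4.6% (1.300 of 28 cores), and the kernel lambda accounts for 28.8% of CPU time while `select_device`, `memset`, and `memcmp` together account for about 55%. This suggests that work outside the kernel, rather than the multiplication itself, dominates this short run.

- Memory bandwidth: if memory bandwidth is close to its limit, optimize the memory access pattern.

- Thread synchronization: check whether threads are waiting or contending on synchronization.

- GPU usage: check the GPU load and the data-transfer overhead.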
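One way to reason about how much a kernel optimization can help is Amdahl's law. The sketch below is an illustration, not part of the original analysis: it assumes the kernel's share of CPU time in the Hotspots report (28.8%) can stand in for the fraction of runtime that the optimization affects, which is only a rough proxy because CPU time summed over threads is not the same as elapsed time. The function name `amdahl_speedup` is hypothetical.

```cpp
#include <cassert>

// Hypothetical helper (illustration only). Amdahl's law: overall speedup
// when a fraction `p` of the runtime is accelerated by a factor `s` and the
// remaining (1 - p) is unchanged.
double amdahl_speedup(double p, double s) {
    assert(p >= 0.0 && p <= 1.0 && s > 0.0);
    return 1.0 / ((1.0 - p) + p / s);
}
```

Under that assumption, doubling the kernel speed (`amdahl_speedup(0.288, 2.0)`) gives an overall speedup of about 1.17, and even an arbitrarily fast kernel cannot exceed roughly 1.40. This is why reducing the time spent outside the kernel is worth examining alongside kernel tuning.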

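4. Optimization Suggestions

- Use the analysis results to guide code optimization, for example by using local memory, increasing parallelism, and improving vectorization and loop unrolling.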
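As one illustration of loop unrolling, the inner product at the heart of matrix multiplication can be unrolled by hand with several independent accumulators. This is a sketch in standard C++ rather than a change to the SYCL kernels above, and the function name `dot_unrolled4` is hypothetical. Compilers at `-O3` often unroll and vectorize such loops on their own, so any gain should be measured rather than assumed.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (illustration only): dot product with the loop
// manually unrolled by 4. The four accumulators are independent, so
// consecutive additions do not have to wait on one another.
float dot_unrolled4(const std::vector<float>& x, const std::vector<float>& y) {
    assert(x.size() == y.size());
    const std::size_t n = x.size();
    float s0 = 0.0f, s1 = 0.0f, s2 = 0.0f, s3 = 0.0f;
    std::size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        s0 += x[i] * y[i];
        s1 += x[i + 1] * y[i + 1];
        s2 += x[i + 2] * y[i + 2];
        s3 += x[i + 3] * y[i + 3];
    }
    float sum = (s0 + s1) + (s2 + s3);
    // Handle the remaining 0 to 3 elements.
    for (; i < n; ++i) {
        sum += x[i] * y[i];
    }
    return sum;
}
```

Because the partial sums are combined in a different order than in a simple loop, the result can differ from the sequential sum in the last bits for general floating-point inputs.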

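5. Cleaning Up Analysis Data

To clean up the collected data after an analysis run, use:

rm -rf vtune_results

The other result directories created above (`vtune_hotspots`, `vtune_memory`, `vtune_uarch`, `vtune_gpu`) can be removed the same way.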