I. The Pipeline concept

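scikit-learn's sklearn.pipeline.Pipeline class packs several "data-processing nodes" together in order, so that the output of one node is passed on as the input of the next. Every node except the last must implement both the fit() and transform() methods; the last node only needs to implement fit(). When training samples are fed into a Pipeline, it calls fit() and transform() on each intermediate node in turn, then calls fit() on the last node to fit the data.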
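The calling order described above can be sketched by writing the same sequence out by hand and comparing it with the Pipeline. This is a minimal illustration, not a description of scikit-learn's internals; the synthetic data from make_classification and the choice of StandardScaler, PCA, and LogisticRegression as steps are assumptions made for the example.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data, used only for illustration
X, y = make_classification(n_samples=200, n_features=10, random_state=0)

# Version 1: let the Pipeline drive the steps
pipe = Pipeline([
    ('sc', StandardScaler()),
    ('pca', PCA(n_components=3)),
    ('clf', LogisticRegression()),
])
pipe.fit(X, y)

# Version 2: the same sequence written out by hand
sc = StandardScaler()
pca = PCA(n_components=3)
clf = LogisticRegression()
X_sc = sc.fit_transform(X)        # intermediate node: fit, then transform
X_pca = pca.fit_transform(X_sc)   # intermediate node: fit, then transform
clf.fit(X_pca, y)                 # last node: fit only

# At prediction time the intermediate nodes only transform
manual_pred = clf.predict(pca.transform(sc.transform(X)))
same_predictions = np.array_equal(pipe.predict(X), manual_pred)
print(same_predictions)
```

Both versions perform the same computations in the same order, so they should agree; the Pipeline simply saves the bookkeeping.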

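A Pipeline can be used to chain multiple estimators into one. This is useful because data processing usually follows a fixed sequence of steps, such as feature selection, normalization, and classification. A Pipeline serves several purposes here:

- Convenience and encapsulation: a single call to fit and a single call to predict on the data are enough to fit and use the whole sequence of estimators.

- Joint parameter selection: the parameters of all estimators in the Pipeline can be grid searched at once.

- Safety: by ensuring that the same samples are used to train the transformers and the predictor, a Pipeline helps avoid leaking statistics from the test data into the trained model during cross-validation.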
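The safety point can be illustrated with a short cross-validation sketch. It assumes synthetic data from make_classification and a two-step pipeline chosen only for the example. Because the scaler lives inside the pipeline, each fold refits it on that fold's training part only.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data, used only for illustration
X, y = make_classification(n_samples=300, n_features=20, random_state=0)

pipe = Pipeline([('sc', StandardScaler()), ('clf', LogisticRegression())])

# For each of the 5 folds, the pipeline is cloned and fitted on the
# training part only, so the scaler's mean and variance are never
# computed from the held-out samples.
scores = cross_val_score(pipe, X, y, cv=5)
print(scores.mean())
```

Scaling the full dataset before calling cross_val_score would, by contrast, let the held-out samples influence the scaler's statistics.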

II. Using a Pipeline

A Pipeline is built from a list of (key, value) pairs, where key is a string giving the name of the step and value is an estimator object:

from sklearn.pipeline import Pipeline

from sklearn.svm import SVC

from sklearn.decomposition import PCA

estimators = [('reduce_dim', PCA()), ('clf', SVC())]

pipe = Pipeline(estimators)

pipe

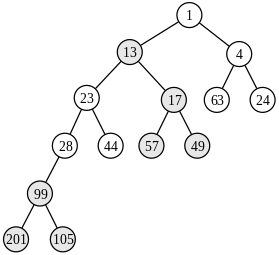

输出结果:
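The step names given in the (key, value) pairs are also how individual steps and their parameters are reached later. A minimal sketch, reusing the same two steps as above; the parameter values n_components=2 and C=10 are arbitrary choices for illustration.

```python
from sklearn.decomposition import PCA
from sklearn.pipeline import Pipeline
from sklearn.svm import SVC

pipe = Pipeline([('reduce_dim', PCA()), ('clf', SVC())])

# Look up a step by the name it was given
pca_step = pipe.named_steps['reduce_dim']
clf_step = pipe['clf']  # indexing by name also works

# Nested parameters are addressed as <step name>__<parameter name>
pipe.set_params(reduce_dim__n_components=2, clf__C=10)

print(pipe.named_steps['reduce_dim'].n_components)
print(pipe.named_steps['clf'].C)
```

The same double-underscore naming is what a grid search over a Pipeline uses for its parameter keys.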

III. Pipeline use cases

3.1. Modular feature transforms

With very little code, a new feature can be added to the training set.

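''' Classify the Breast Cancer Wisconsin dataset, which contains 569 samples.

Column 1 is the ID, column 2 is the class (M = malignant, B = benign), and columns 3-32 are real-valued features. '''

import pandas as pd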

# from sklearn.cross_validation import train_test_split

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import LabelEncoder

from sklearn.preprocessing import StandardScaler

from sklearn.decomposition import PCA

from sklearn.linear_model import LogisticRegression

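from sklearn.pipeline import Pipeline

# Breast Cancer Wisconsin dataset (the file has no header row)

df = pd.read_csv('https://archive.ics.uci.edu/ml/machine-learning-databases/'
                 'breast-cancer-wisconsin/wdbc.data', header=None)

# Columns from index 2 onward are the features; column index 1 is the class label

X, y = df.iloc[:, 2:].values, df.iloc[:, 1].values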

encoder = LabelEncoder()

y = encoder.fit_transform(y)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=.2, random_state=0)

# Chain standardization, PCA, and logistic regression into a single estimator

pipe_lr = Pipeline([('sc', StandardScaler()),
                    ('pca', PCA(n_components=2)),
                    ('clf', LogisticRegression(random_state=1))])

pipe_lr.fit(X_train, y_train)

print('Test accuracy: %.3f' % pipe_lr.score(X_test, y_test))
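To make the "very little code" claim concrete, the sketch below adds one more transform step to the same kind of pipeline. It is an illustration under stated assumptions: it uses the copy of the dataset bundled with scikit-learn (load_breast_cancer) instead of the UCI download so that it runs offline, PolynomialFeatures is a hypothetical choice of new feature transform, and max_iter=1000 is set only to give the solver room to converge.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Bundled copy of the dataset: 569 samples, 30 real-valued features
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=.2, random_state=0)

base = Pipeline([
    ('sc', StandardScaler()),
    ('pca', PCA(n_components=2)),
    ('clf', LogisticRegression(random_state=1, max_iter=1000)),
])

# Same pipeline with one extra step: the only change is the 'poly' line
extended = Pipeline([
    ('sc', StandardScaler()),
    ('pca', PCA(n_components=2)),
    ('poly', PolynomialFeatures(degree=2)),
    ('clf', LogisticRegression(random_state=1, max_iter=1000)),
])

scores = {}
for name, model in [('base', base), ('extended', extended)]:
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)
    print('%s test accuracy: %.3f' % (name, scores[name]))
```

Whether the extra step actually helps depends on the data; the point here is only that the training and scoring code stays unchanged.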

3.2. Automated grid search

Once the model and the candidate parameter values are specified in advance, the search runs automatically and records the best model.

import numpy as np

import pandas as pd

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score, precision_score, recall_score

from sklearn.model_selection import train_test_split, cross_val_score, GridSearchCV

from sklearn.preprocessing import LabelEncoder

from sklearn.pipeline import Pipeline

# The liblinear solver supports both the 'l1' and 'l2' penalties searched below

pipeline = Pipeline([('vect', TfidfVectorizer(stop_words='english')),
                     ('clf', LogisticRegression(solver='liblinear'))])

# Keys follow the <step name>__<parameter name> convention

parameters = {
    'vect__max_df': (0.25, 0.5, 0.75),
    'vect__stop_words': ('english', None),
    'vect__max_features': (2500, 5000, None),
    'vect__ngram_range': ((1, 1), (1, 2)),
    'vect__use_idf': (True, False),
    'clf__penalty': ('l1', 'l2'),
    'clf__C': (0.01, 0.1, 1, 10),
}

df = pd.read_csv('./sms.csv')

X = df['message']

y = df['label']

label_encoder = LabelEncoder()

y = label_encoder.fit_transform(y)

X_train, X_test, y_train, y_test = train_test_split(X, y)

grid_search = GridSearchCV(pipeline, parameters, n_jobs=-1, verbose=1, scoring='accuracy', cv=3)

grid_search.fit(X_train, y_train)

print('Best score: %0.3f' % grid_search.best_score_)

print('Best parameters set:')

best_parameters = grid_search.best_estimator_.get_params()

for param_name in sorted(parameters.keys()):
    print('\t%s: %r' % (param_name, best_parameters[param_name]))

predictions = grid_search.predict(X_test)

print('Accuracy: %s' % accuracy_score(y_test, predictions))

print('Precision: %s' % precision_score(y_test, predictions))

print('Recall: %s' % recall_score(y_test, predictions))
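The example above depends on a local file, ./sms.csv. For a version that runs without it, here is a reduced sketch of the same idea. The twelve messages and their labels are made up for illustration, and the parameter grid is deliberately much smaller than the one above.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

# Hypothetical toy corpus: 1 = spam, 0 = ham
messages = [
    'win a free prize now', 'free cash offer claim now',
    'claim your free prize today', 'urgent offer win cash now',
    'free entry win prize', 'cash prize claim urgent',
    'are we meeting for lunch today', 'see you at the office tomorrow',
    'can you call me later', 'lunch at noon sounds good',
    'meeting moved to tomorrow morning', 'call me when you reach the office',
]
labels = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

pipeline = Pipeline([
    ('vect', TfidfVectorizer()),
    ('clf', LogisticRegression()),
])

# Keys follow the <step name>__<parameter name> convention
parameters = {
    'vect__ngram_range': ((1, 1), (1, 2)),
    'clf__C': (0.1, 1, 10),
}

grid_search = GridSearchCV(pipeline, parameters, scoring='accuracy', cv=3)
grid_search.fit(messages, labels)

print('Best score: %0.3f' % grid_search.best_score_)
# best_params_ holds only the searched parameters, already filtered
for param_name in sorted(grid_search.best_params_):
    print('\t%s: %r' % (param_name, grid_search.best_params_[param_name]))
```

Using best_params_ is a shorter alternative to filtering best_estimator_.get_params() by the searched keys; both report the same winning values.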

3.3. Automated ensemble generation

At regular intervals, take the K best models found so far and combine them into an ensemble.
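The source gives no code for this step, so the following is only one possible sketch of a single round. It assumes that "best" means highest mean cross-validated accuracy and that the ensemble is a majority vote built with VotingClassifier. The candidate models, the synthetic data, and K = 3 are all hypothetical choices.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, used only for illustration
X, y = make_classification(n_samples=400, n_features=15, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=.25, random_state=0)

# Hypothetical pool of existing models; some are Pipelines themselves
candidates = {
    'lr': Pipeline([('sc', StandardScaler()), ('clf', LogisticRegression())]),
    'svc': Pipeline([('sc', StandardScaler()), ('clf', SVC())]),
    'knn': Pipeline([('sc', StandardScaler()), ('clf', KNeighborsClassifier())]),
    'tree': DecisionTreeClassifier(random_state=0),
    'rf': RandomForestClassifier(n_estimators=50, random_state=0),
}

K = 3  # number of models to keep (assumed value)

# Rank the candidates by mean cross-validated accuracy on the training set
cv_scores = {name: cross_val_score(model, X_train, y_train, cv=3).mean()
             for name, model in candidates.items()}
top_k = sorted(cv_scores, key=cv_scores.get, reverse=True)[:K]

# Majority vote over the K best candidates
ensemble = VotingClassifier([(name, candidates[name]) for name in top_k],
                            voting='hard')
ensemble.fit(X_train, y_train)
ensemble_accuracy = ensemble.score(X_test, y_test)

print(top_k)
print('Ensemble test accuracy: %.3f' % ensemble_accuracy)
```

Running this periodically, as the text describes, would amount to repeating the ranking and refitting whenever the pool of candidates changes.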