Common English Vocabulary: Andrew Ng's Course

- [ ] intensity 强度

- [ ] Regression 回归

- [ ] Loss function 损失函数

- [ ] non-convex 非凸的

- [ ] neural network 神经网络

- [ ] supervised learning 监督学习

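- [ ] regression problem 回归问题: the predicted output is continuous

- [ ] classification problem 分类问题: the predicted output is discrete rather than continuous

- [ ] discrete value 离散值

The key difference between regression and classification problems: the output of a regression problem is continuous, while the output of a classification problem is discrete.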
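The difference can be made concrete with a minimal Python sketch. It assumes a hypothetical one-feature linear score with made-up parameters `THETA0` and `THETA1`: used directly, the score is a continuous prediction (regression); passed through a sigmoid and thresholded at 0.5, as in logistic regression, it becomes a discrete label in {0, 1} (classification).

```python
import math

# Hypothetical parameters for a one-feature linear score: theta0 + theta1 * x
THETA0, THETA1 = -3.0, 1.5


def predict_regression(x: float) -> float:
    """Regression: the prediction is a continuous real number."""
    return THETA0 + THETA1 * x


def predict_classification(x: float) -> int:
    """Classification: squash the same score with a sigmoid and threshold
    it at 0.5, so the prediction is a discrete label in {0, 1}."""
    probability = 1.0 / (1.0 + math.exp(-predict_regression(x)))
    return 1 if probability >= 0.5 else 0


if __name__ == "__main__":
    for x in [0.0, 1.0, 2.5, 4.0]:
        print(x, predict_regression(x), predict_classification(x))
```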

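- [ ] support vector machines 支持向量机: a classification algorithm that can handle inputs with a very large number of features (even an infinite-dimensional feature space)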

- [ ] learning theory 学习理论

- [ ] learning algorithms 学习算法

- [ ] unsupervised learning 无监督学习

- [ ] gradient descent 梯度下降

- [ ] linear regression 线性回归

- [ ] Neural Network 神经网络

- [ ] gradient descent 梯度下降: an iterative optimization algorithm used in supervised learning to fit the model parameters

- [ ] normal equations 正规方程

- [ ] linear algebra 线性代数 (forgive my poor English)

- [ ] superscript 上标

- [ ] exponentiation 指数

- [ ] training set 训练集合

- [ ] training example 训练样本

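- [ ] hypothesis 假设: denotes the output of a learning algorithm; Ng advises not to dwell on what the letter h stands for, since the name is just a historical convention

- [ ] LMS algorithm “least mean squares” 最小均方算法

- [ ] batch gradient descent 批量梯度下降: each update looks at every example in the training set, so every step is slow when the training set is large

- [ ] stochastic gradient descent 随机梯度下降 (also called incremental gradient descent): updates the parameters after each single training example, so it usually gets close to the minimum much faster than batch gradient descent, but the parameters may keep oscillating around the minimum instead of converging exactly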

- [ ] iterative algorithm 迭代算法

- [ ] partial derivative 偏导数

- [ ] contour 等高线

- [ ] quadratic function 二次函数

- [ ] locally weighted regression 局部加权回归

- [ ] underfitting 欠拟合

- [ ] overfitting 过拟合

- [ ] non-parametric learning algorithms 非参数学习算法

- [ ] parametric learning algorithm 参数学习算法
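The LMS update, the batch versus stochastic variants, and the normal equations from the list above can be compared side by side. The following is a minimal pure-Python sketch, not code from the course: the toy data (generated exactly from y = 1 + 2x), the learning rate α = 0.01, the iteration counts, and the function names are all assumptions for illustration. The LMS rule updates each parameter as θ_j := θ_j + α (y − h(x)) x_j; batch gradient descent sums that update over the whole training set, stochastic gradient descent applies it one example at a time, and the normal equations solve for θ in closed form.

```python
import random

# Hypothetical noise-free training set generated from y = 1 + 2x.
# Each input is [x0, x1] with the intercept feature x0 = 1.
XS = [[1.0, x] for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
YS = [1.0, 3.0, 5.0, 7.0, 9.0]


def h(theta, x):
    """Linear hypothesis h_theta(x) = theta^T x."""
    return sum(t * xj for t, xj in zip(theta, x))


def batch_gradient_descent(alpha=0.01, iterations=5000):
    """LMS rule summed over the whole training set at every step."""
    theta = [0.0, 0.0]
    for _ in range(iterations):
        errors = [y - h(theta, x) for x, y in zip(XS, YS)]
        theta = [
            t + alpha * sum(e * x[j] for e, x in zip(errors, XS))
            for j, t in enumerate(theta)
        ]
    return theta


def stochastic_gradient_descent(alpha=0.01, epochs=5000, seed=0):
    """LMS rule applied after each single training example (shuffled order)."""
    rng = random.Random(seed)
    order = list(range(len(XS)))
    theta = [0.0, 0.0]
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            error = YS[i] - h(theta, XS[i])
            theta = [t + alpha * error * XS[i][j] for j, t in enumerate(theta)]
    return theta


def normal_equations():
    """Closed-form solution of (X^T X) theta = X^T y for two parameters."""
    a = sum(x[0] * x[0] for x in XS)
    b = sum(x[0] * x[1] for x in XS)
    d = sum(x[1] * x[1] for x in XS)
    p = sum(x[0] * y for x, y in zip(XS, YS))
    q = sum(x[1] * y for x, y in zip(XS, YS))
    det = a * d - b * b
    return [(d * p - b * q) / det, (a * q - b * p) / det]


if __name__ == "__main__":
    print("batch:     ", batch_gradient_descent())
    print("stochastic:", stochastic_gradient_descent())
    print("normal eq.:", normal_equations())
```

On this noise-free toy data all three approaches land on θ ≈ [1.0, 2.0]. With noisy data and a fixed learning rate, the stochastic version would typically keep wandering near the minimum rather than settling exactly, which is the accuracy trade-off noted for stochastic gradient descent in the list.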
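Locally weighted regression is a standard example of the non-parametric algorithms listed above: the fit depends on the query point, so the whole training set has to be kept and a new weighted least-squares problem is solved for every prediction. A minimal pure-Python sketch for a single input feature follows; the Gaussian weighting is the usual textbook choice, while the bandwidth `tau = 0.5`, the function name `lwr_predict`, and the toy data are assumptions.

```python
import math


def lwr_predict(query, xs, ys, tau=0.5):
    """Locally weighted linear regression prediction at a single query point.

    Each training example gets a Gaussian weight
    w_i = exp(-(x_i - query)^2 / (2 * tau^2)); a weighted least-squares
    line theta0 + theta1 * x is then fitted for this query only.
    """
    ws = [math.exp(-((x - query) ** 2) / (2 * tau ** 2)) for x in xs]
    # Weighted normal equations for features [1, x]: (X^T W X) theta = X^T W y
    s0 = sum(ws)
    s1 = sum(w * x for w, x in zip(ws, xs))
    s2 = sum(w * x * x for w, x in zip(ws, xs))
    t0 = sum(w * y for w, y in zip(ws, ys))
    t1 = sum(w * x * y for w, x, y in zip(ws, xs, ys))
    det = s0 * s2 - s1 * s1
    theta0 = (s2 * t0 - s1 * t1) / det
    theta1 = (s0 * t1 - s1 * t0) / det
    return theta0 + theta1 * query


if __name__ == "__main__":
    # Hypothetical non-linear data: y = x^2 sampled at a few points.
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [x * x for x in xs]
    for q in [1.0, 2.0, 3.0]:
        print(q, lwr_predict(q, xs, ys))
```

On the y = x² toy data, the prediction at x = 2 comes out near 4.2, whereas a single straight line fitted to all five points predicts 6.0 there. Shrinking `tau` makes the fit more local, at the risk of overfitting.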

Other

- [ ] activation 激活值

- [ ] activation function 激活函数

- [ ] additive noise 加性噪声

- [ ] autoencoder 自编码器

- [ ] Autoencoders 自编码算法

- [ ] average firing rate 平均激活率

- [ ] average sum-of-squares error 均方差

- [ ] backpropagation 后向传播

- [ ] basis 基

- [ ] basis feature vectors 特征基向量

- [ ] batch gradient ascent 批量梯度上升法

- [ ] Bayesian regularization method 贝叶斯规则化方法

- [ ] Bernoulli random variable 伯努利随机变量

- [ ] bias term 偏置项

- [ ] binary classification 二元分类

- [ ] class labels 类型标记

- [ ] concatenation 级联

- [ ] conjugate gradient 共轭梯度

- [ ] contiguous groups 连通区域

- [ ] convex optimization software 凸优化软件

- [ ] convolution 卷积

- [ ] cost function 代价函数

- [ ] covariance matrix 协方差矩阵

- [ ] DC component 直流分量

- [ ] decorrelation 去相关

- [ ] degeneracy 退化

- [ ] dimensionality reduction 降维

- [ ] derivative 导函数

- [ ] diagonal 对角线

- [ ] diffusion of gradients 梯度的弥散

- [ ] eigenvalue 特征值

- [ ] eigenvector 特征向量

- [ ] error term 残差

- [ ] feature matrix 特征矩阵

- [ ] feature standardization 特征标准化

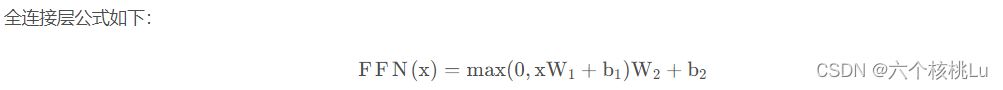

- [ ] feedforward architectures 前馈结构算法

- [ ] feedforward neural network 前馈神经网络

- [ ] feedforward pass 前馈传导

- [ ] fine-tuned 微调

- [ ] first-order feature 一阶特征

- [ ] forward pass 前向传导

- [ ] forward propagation 前向传播

- [ ] Gaussian prior 高斯先验概率

- [ ] generative model 生成模型

- [ ] gradient descent 梯度下降

- [ ] Greedy layer-wise training 逐层贪婪训练方法

- [ ] grouping matrix 分组矩阵

- [ ] Hadamard product 阿达马乘积

- [ ] Hessian matrix Hessian 矩阵

- [ ] hidden layer 隐含层

- [ ] hidden units 隐藏神经元

- [ ] Hierarchical grouping 层次型分组

- [ ] higher-order features 更高阶特征

- [ ] highly non-convex optimization problem 高度非凸的优化问题

- [ ] histogram 直方图

- [ ] hyperbolic tangent 双曲正切函数

- [ ] hypothesis 估值,假设

- [ ] identity activation function 恒等激励函数

- [ ] IID 独立同分布

- [ ] illumination 照明

- [ ] inactive 抑制

- [ ] independent component analysis 独立成份分析

- [ ] input domains 输入域

- [ ] input layer 输入层

- [ ] intensity 亮度/灰度

- [ ] intercept term 截距

- [ ] KL divergence 相对熵

- [ ] KL divergence KL分散度

- [ ] k-Means K-均值

- [ ] learning rate 学习速率

- [ ] least squares 最小二乘法

- [ ] linear correspondence 线性响应

- [ ] linear superposition 线性叠加

- [ ] line-search algorithm 线搜索算法

- [ ] local mean subtraction 局部均值消减

- [ ] local optima 局部最优解

- [ ] logistic regression 逻辑回归

- [ ] loss function 损失函数

- [ ] low-pass filtering 低通滤波

- [ ] magnitude 幅值

- [ ] MAP 极大后验估计

- [ ] maximum likelihood estimation 极大似然估计

- [ ] mean 平均值

- [ ] MFCC Mel 倒频系数

- [ ] multi-class classification 多元分类

- [ ] neural networks 神经网络

- [ ] neuron 神经元

- [ ] Newton’s method 牛顿法

- [ ] non-convex function 非凸函数

- [ ] non-linear feature 非线性特征

- [ ] norm 范数

- [ ] norm bounded 有界范数

- [ ] norm constrained 范数约束

- [ ] normalization 归一化

- [ ] numerical roundoff errors 数值舍入误差

- [ ] numerically checking 数值检验

- [ ] numerically reliable 数值计算上稳定

- [ ] object detection 物体检测

- [ ] objective function 目标函数

- [ ] off-by-one error 缺位错误

- [ ] orthogonalization 正交化

- [ ] output layer 输出层

- [ ] overall cost function 总体代价函数

- [ ] over-complete basis 超完备基

- [ ] over-fitting 过拟合

- [ ] parts of objects 目标的部件

- [ ] part-whole decomposition 部分-整体分解

- [ ] PCA 主元分析

- [ ] penalty term 惩罚因子

- [ ] per-example mean subtraction 逐样本均值消减

- [ ] pooling 池化

- [ ] pretrain 预训练

- [ ] principal components analysis 主成份分析

- [ ] quadratic constraints 二次约束

- [ ] RBMs 受限Boltzmann机

- [ ] reconstruction based models 基于重构的模型

- [ ] reconstruction cost 重建代价

- [ ] reconstruction term 重构项

- [ ] redundant 冗余

- [ ] reflection matrix 反射矩阵

- [ ] regularization 正则化

- [ ] regularization term 正则化项

- [ ] rescaling 缩放

- [ ] robust 鲁棒性

- [ ] run 行程

- [ ] second-order feature 二阶特征

- [ ] sigmoid activation function S型激励函数

- [ ] significant digits 有效数字

- [ ] singular value 奇异值

- [ ] singular vector 奇异向量

- [ ] smoothed L1 penalty 平滑的L1范数惩罚

- [ ] Smoothed topographic L1 sparsity penalty 平滑地形L1稀疏惩罚函数

- [ ] smoothing 平滑

- [ ] Softmax Regression Softmax回归

- [ ] sorted in decreasing order 降序排列

- [ ] source features 源特征

- [ ] sparse autoencoder 稀疏自编码器

- [ ] Sparsity 稀疏性

- [ ] sparsity parameter 稀疏性参数

- [ ] sparsity penalty 稀疏惩罚

- [ ] square function 平方函数

- [ ] squared-error 平方误差

- [ ] stationary 平稳性(不变性)

- [ ] stationary stochastic process 平稳随机过程

- [ ] step-size 步长值

- [ ] supervised learning 监督学习

- [ ] symmetric positive semi-definite matrix 对称半正定矩阵

- [ ] symmetry breaking 对称失效

- [ ] tanh function 双曲正切函数

- [ ] the average activation 平均活跃度

- [ ] the derivative checking method 梯度验证方法

- [ ] the empirical distribution 经验分布函数

- [ ] the energy function 能量函数

- [ ] the Lagrange dual 拉格朗日对偶函数

- [ ] the log likelihood 对数似然函数

- [ ] the pixel intensity value 像素灰度值

- [ ] the rate of convergence 收敛速度

- [ ] topographic cost term 拓扑代价项

- [ ] topographic ordered 拓扑秩序

- [ ] transformation 变换

- [ ] translation invariant 平移不变性

- [ ] trivial answer 平凡解

- [ ] under-complete basis 不完备基

- [ ] unrolling 组合扩展

- [ ] unsupervised learning 无监督学习

- [ ] variance 方差

- [ ] vectorized implementation 向量化实现

- [ ] vectorization 矢量化

- [ ] visual cortex 视觉皮层

- [ ] weight decay 权重衰减

- [ ] weighted average 加权平均值

- [ ] whitening 白化

- [ ] zero-mean 均值为零

Letter A

- [ ] Accumulated error backpropagation 累积误差逆传播

- [ ] Activation Function 激活函数

- [ ] Adaptive Resonance Theory/ART 自适应谐振理论

- [ ] Additive model 加性模型

- [ ] Adversarial Networks 对抗网络

- [ ] Affine Layer 仿射层

- [ ] Affinity matrix 亲和矩阵

- [ ] Agent 代理 / 智能体

- [ ] Algorithm 算法

- [ ] Alpha-beta pruning α-β剪枝

- [ ] Anomaly detection 异常检测

- [ ] Approximation 近似

- [ ] Area Under ROC Curve/AUC ROC 曲线下面积

- [ ] Artificial General Intelligence/AGI 通用人工智能

- [ ] Artificial Intelligence/AI 人工智能

- [ ] Association analysis 关联分析

- [ ] Attention mechanism 注意力机制

- [ ] Attribute conditional independence assumption 属性条件独立性假设

- [ ] Attribute space 属性空间

- [ ] Attribute value 属性值

- [ ] Autoencoder 自编码器

- [ ] Automatic speech recognition 自动语音识别

- [ ] Automatic summarization 自动摘要

- [ ] Average gradient 平均梯度

- [ ] Average-Pooling 平均池化

Letter B

- [ ] Backpropagation Through Time 通过时间的反向传播

- [ ] Backpropagation/BP 反向传播

- [ ] Base learner 基学习器

- [ ] Base learning algorithm 基学习算法

- [ ] Batch Normalization/BN 批量归一化

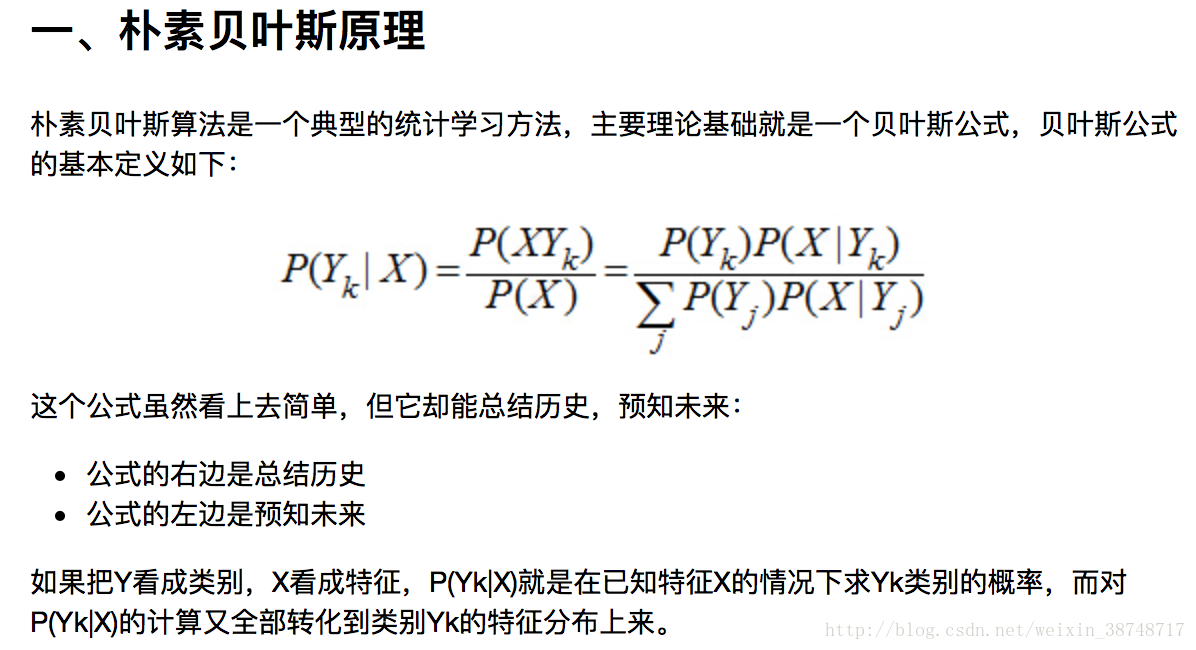

- [ ] Bayes decision rule 贝叶斯判定准则

- [ ] Bayes Model Averaging/BMA 贝叶斯模型平均

- [ ] Bayes optimal classifier 贝叶斯最优分类器

- [ ] Bayesian decision theory 贝叶斯决策论

- [ ] Bayesian network 贝叶斯网络

- [ ] Between-class scatter matrix 类间散度矩阵

- [ ] Bias 偏置 / 偏差

- [ ] Bias-variance decomposition 偏差-方差分解

- [ ] Bias-Variance Dilemma 偏差 – 方差困境

- [ ] Bi-directional Long-Short Term Memory/Bi-LSTM 双向长短期记忆

- [ ] Binary classification 二分类

- [ ] Binomial test 二项检验

- [ ] Bi-partition 二分法

- [ ] Boltzmann machine 玻尔兹曼机

- [ ] Bootstrap sampling 自助采样法/可重复采样/有放回采样

- [ ] Bootstrapping 自助法

- [ ] Break-Even Point/BEP 平衡点

Letter C

- [ ] Calibration 校准

- [ ] Cascade-Correlation 级联相关

- [ ] Categorical attribute 离散属性

- [ ] Class-conditional probability 类条件概率

- [ ] Classification and regression tree/CART 分类与回归树

- [ ] Classifier 分类器

- [ ] Class-imbalance 类别不平衡

- [ ] Closed-form 闭式

- [ ] Cluster 簇/类/集群

- [ ] Cluster analysis 聚类分析

- [ ] Clustering 聚类

- [ ] Clustering ensemble 聚类集成

- [ ] Co-adapting 共适应

- [ ] Coding matrix 编码矩阵

- [ ] COLT 国际学习理论会议

- [ ] Committee-based learning 基于委员会的学习

- [ ] Competitive learning 竞争型学习

- [ ] Component learner 组件学习器

- [ ] Comprehensibility 可解释性

- [ ] Computation Cost 计算成本

- [ ] Computational Linguistics 计算语言学

- [ ] Computer vision 计算机视觉

- [ ] Concept drift 概念漂移

- [ ] Concept Learning System /CLS 概念学习系统

- [ ] Conditional entropy 条件熵

- [ ] Conditional mutual information 条件互信息

- [ ] Conditional Probability Table/CPT 条件概率表

- [ ] Conditional random field/CRF 条件随机场

- [ ] Conditional risk 条件风险

- [ ] Confidence 置信度

- [ ] Confusion matrix 混淆矩阵

- [ ] Connection weight 连接权

- [ ] Connectionism 连结主义

- [ ] Consistency 一致性/相合性

- [ ] Contingency table 列联表

- [ ] Continuous attribute 连续属性

- [ ] Convergence 收敛

- [ ] Conversational agent 会话智能体

- [ ] Convex quadratic programming 凸二次规划

- [ ] Convexity 凸性

- [ ] Convolutional neural network/CNN 卷积神经网络

- [ ] Co-occurrence 同现

- [ ] Correlation coefficient 相关系数

- [ ] Cosine similarity 余弦相似度

- [ ] Cost curve 成本曲线

- [ ] Cost Function 成本函数

- [ ] Cost matrix 成本矩阵

- [ ] Cost-sensitive 成本敏感

- [ ] Cross entropy 交叉熵

- [ ] Cross validation 交叉验证

- [ ] Crowdsourcing 众包

- [ ] Curse of dimensionality 维数灾难

- [ ] Cut point 截断点

- [ ] Cutting plane algorithm 割平面法

Letter D

- [ ] Data mining 数据挖掘

- [ ] Data set 数据集

- [ ] Decision Boundary 决策边界

- [ ] Decision stump 决策树桩

- [ ] Decision tree 决策树/判定树

- [ ] Deduction 演绎

- [ ] Deep Belief Network 深度信念网络

- [ ] Deep Convolutional Generative Adversarial Network/DCGAN 深度卷积生成对抗网络

- [ ] Deep learning 深度学习

- [ ] Deep neural network/DNN 深度神经网络

- [ ] Deep Q-Learning 深度 Q 学习

- [ ] Deep Q-Network 深度 Q 网络

- [ ] Density estimation 密度估计

- [ ] Density-based clustering 密度聚类

- [ ] Differentiable neural computer 可微分神经计算机

- [ ] Dimensionality reduction algorithm 降维算法

- [ ] Directed edge 有向边

- [ ] Disagreement measure 不合度量

- [ ] Discriminative model 判别模型

- [ ] Discriminator 判别器

- [ ] Distance measure 距离度量

- [ ] Distance metric learning 距离度量学习

- [ ] Distribution 分布

- [ ] Divergence 散度

- [ ] Diversity measure 多样性度量/差异性度量

- [ ] Domain adaptation 领域自适应

- [ ] Downsampling 下采样

- [ ] D-separation (Directed separation) 有向分离

- [ ] Dual problem 对偶问题

- [ ] Dummy node 哑结点

- [ ] Dynamic Fusion 动态融合

- [ ] Dynamic programming 动态规划

Letter E

- [ ] Eigenvalue decomposition 特征值分解

- [ ] Embedding 嵌入

- [ ] Emotional analysis 情绪分析

- [ ] Empirical conditional entropy 经验条件熵

- [ ] Empirical entropy 经验熵

- [ ] Empirical error 经验误差

- [ ] Empirical risk 经验风险

- [ ] End-to-End 端到端

- [ ] Energy-based model 基于能量的模型

- [ ] Ensemble learning 集成学习

- [ ] Ensemble pruning 集成修剪

- [ ] Error Correcting Output Codes/ECOC 纠错输出码

- [ ] Error rate 错误率

- [ ] Error-ambiguity decomposition 误差-分歧分解

- [ ] Euclidean distance 欧氏距离

- [ ] Evolutionary computation 演化计算

- [ ] Expectation-Maximization 期望最大化

- [ ] Expected loss 期望损失

- [ ] Exploding Gradient Problem 梯度爆炸问题

- [ ] Exponential loss function 指数损失函数

- [ ] Extreme Learning Machine/ELM 超限学习机

Letter F

- [ ] Factorization 因子分解

- [ ] False negative 假负类

- [ ] False positive 假正类

- [ ] False Positive Rate/FPR 假正例率

- [ ] Feature engineering 特征工程

- [ ] Feature selection 特征选择

- [ ] Feature vector 特征向量

- [ ] Feature learning 特征学习

- [ ] Feedforward Neural Networks/FNN 前馈神经网络

- [ ] Fine-tuning 微调

- [ ] Flipping output 翻转法

- [ ] Fluctuation 震荡

- [ ] Forward stagewise algorithm 前向分步算法

- [ ] Frequentist 频率主义学派

- [ ] Full-rank matrix 满秩矩阵

- [ ] Functional neuron 功能神经元

Letter G

- [ ] Gain ratio 增益率

- [ ] Game theory 博弈论

- [ ] Gaussian kernel function 高斯核函数

- [ ] Gaussian Mixture Model 高斯混合模型

- [ ] General Problem Solving 通用问题求解

- [ ] Generalization 泛化

- [ ] Generalization error 泛化误差

- [ ] Generalization error bound 泛化误差上界

- [ ] Generalized Lagrange function 广义拉格朗日函数

- [ ] Generalized linear model 广义线性模型

- [ ] Generalized Rayleigh quotient 广义瑞利商

- [ ] Generative Adversarial Networks/GAN 生成对抗网络

- [ ] Generative Model 生成模型

- [ ] Generator 生成器

- [ ] Genetic Algorithm/GA 遗传算法

- [ ] Gibbs sampling 吉布斯采样

- [ ] Gini index 基尼指数

- [ ] Global minimum 全局最小

- [ ] Global Optimization 全局优化

- [ ] Gradient boosting 梯度提升

- [ ] Gradient Descent 梯度下降

- [ ] Graph theory 图论

- [ ] Ground-truth 真相/真实

Letter H

- [ ] Hard margin 硬间隔

- [ ] Hard voting 硬投票

- [ ] Harmonic mean 调和平均

- [ ] Hesse matrix 海塞矩阵

- [ ] Hidden dynamic model 隐动态模型

- [ ] Hidden layer 隐藏层

- [ ] Hidden Markov Model/HMM 隐马尔可夫模型

- [ ] Hierarchical clustering 层次聚类

- [ ] Hilbert space 希尔伯特空间

- [ ] Hinge loss function 合页损失函数

- [ ] Hold-out 留出法

- [ ] Homogeneous 同质

- [ ] Hybrid computing 混合计算

- [ ] Hyperparameter 超参数

- [ ] Hypothesis 假设

- [ ] Hypothesis test 假设检验

Letter I

- [ ] ICML 国际机器学习会议

- [ ] Improved iterative scaling/IIS 改进的迭代尺度法

- [ ] Incremental learning 增量学习

- [ ] Independent and identically distributed/i.i.d. 独立同分布

- [ ] Independent Component Analysis/ICA 独立成分分析

- [ ] Indicator function 指示函数

- [ ] Individual learner 个体学习器

- [ ] Induction 归纳

- [ ] Inductive bias 归纳偏好

- [ ] Inductive learning 归纳学习

- [ ] Inductive Logic Programming/ILP 归纳逻辑程序设计

- [ ] Information entropy 信息熵

- [ ] Information gain 信息增益

- [ ] Input layer 输入层

- [ ] Insensitive loss 不敏感损失

- [ ] Inter-cluster similarity 簇间相似度

- [ ] International Conference on Machine Learning/ICML 国际机器学习大会

- [ ] Intra-cluster similarity 簇内相似度

- [ ] Intrinsic value 固有值

- [ ] Isometric Mapping/Isomap 等度量映射

- [ ] Isotonic regression 保序回归

- [ ] Iterative Dichotomiser 迭代二分器

Letter K

- [ ] Kernel method 核方法

- [ ] Kernel trick 核技巧

- [ ] Kernelized Linear Discriminant Analysis/KLDA 核线性判别分析

- [ ] K-fold cross validation k 折交叉验证/k 倍交叉验证

- [ ] K-Means Clustering K – 均值聚类

- [ ] K-Nearest Neighbours Algorithm/KNN K近邻算法

- [ ] Knowledge base 知识库

- [ ] Knowledge Representation 知识表征

Letter L

- [ ] Label space 标记空间

- [ ] Lagrange duality 拉格朗日对偶性

- [ ] Lagrange multiplier 拉格朗日乘子

- [ ] Laplace smoothing 拉普拉斯平滑

- [ ] Laplacian correction 拉普拉斯修正

- [ ] Latent Dirichlet Allocation 隐狄利克雷分布

- [ ] Latent semantic analysis 潜在语义分析

- [ ] Latent variable 隐变量

- [ ] Lazy learning 懒惰学习

- [ ] Learner 学习器

- [ ] Learning by analogy 类比学习

- [ ] Learning rate 学习率

- [ ] Learning Vector Quantization/LVQ 学习向量量化

- [ ] Least squares regression tree 最小二乘回归树

- [ ] Leave-One-Out/LOO 留一法

- [ ] linear chain conditional random field 线性链条件随机场

- [ ] Linear Discriminant Analysis/LDA 线性判别分析

- [ ] Linear model 线性模型

- [ ] Linear Regression 线性回归

- [ ] Link function 联系函数

- [ ] Local Markov property 局部马尔可夫性

- [ ] Local minimum 局部最小

- [ ] Log likelihood 对数似然

- [ ] Log odds/logit 对数几率

- [ ] Logistic Regression Logistic 回归

- [ ] Log-likelihood 对数似然

- [ ] Log-linear regression 对数线性回归

- [ ] Long-Short Term Memory/LSTM 长短期记忆

- [ ] Loss function 损失函数

Letter M

- [ ] Machine translation/MT 机器翻译

- [ ] Macro-P 宏查准率

- [ ] Macro-R 宏查全率

- [ ] Majority voting 绝对多数投票法

- [ ] Manifold assumption 流形假设

- [ ] Manifold learning 流形学习

- [ ] Margin theory 间隔理论

- [ ] Marginal distribution 边际分布

- [ ] Marginal independence 边际独立性

- [ ] Marginalization 边际化

- [ ] Markov Chain Monte Carlo/MCMC 马尔可夫链蒙特卡罗方法

- [ ] Markov Random Field 马尔可夫随机场

- [ ] Maximal clique 最大团

- [ ] Maximum Likelihood Estimation/MLE 极大似然估计/极大似然法

- [ ] Maximum margin 最大间隔

- [ ] Maximum weighted spanning tree 最大带权生成树

- [ ] Max-Pooling 最大池化

- [ ] Mean squared error 均方误差

- [ ] Meta-learner 元学习器

- [ ] Metric learning 度量学习

- [ ] Micro-P 微查准率

- [ ] Micro-R 微查全率

- [ ] Minimal Description Length/MDL 最小描述长度

- [ ] Minimax game 极小极大博弈

- [ ] Misclassification cost 误分类成本

- [ ] Mixture of experts 混合专家

- [ ] Momentum 动量

- [ ] Moral graph 道德图/端正图

- [ ] Multi-class classification 多分类

- [ ] Multi-document summarization 多文档摘要

- [ ] Multi-layer feedforward neural networks 多层前馈神经网络

- [ ] Multilayer Perceptron/MLP 多层感知器

- [ ] Multimodal learning 多模态学习

- [ ] Multiple Dimensional Scaling 多维缩放

- [ ] Multiple linear regression 多元线性回归

- [ ] Multi-response Linear Regression /MLR 多响应线性回归

- [ ] Mutual information 互信息

Letter N

- [ ] Naive bayes 朴素贝叶斯

- [ ] Naive Bayes Classifier 朴素贝叶斯分类器

- [ ] Named entity recognition 命名实体识别

- [ ] Nash equilibrium 纳什均衡

- [ ] Natural language generation/NLG 自然语言生成

- [ ] Natural language processing 自然语言处理

- [ ] Negative class 负类

- [ ] Negative correlation 负相关法

- [ ] Negative Log Likelihood 负对数似然

- [ ] Neighbourhood Component Analysis/NCA 近邻成分分析

- [ ] Neural Machine Translation 神经机器翻译

- [ ] Neural Turing Machine 神经图灵机

- [ ] Newton method 牛顿法

- [ ] NIPS 国际神经信息处理系统会议

- [ ] No Free Lunch Theorem/NFL 没有免费的午餐定理

- [ ] Noise-contrastive estimation 噪音对比估计

- [ ] Nominal attribute 列名属性

- [ ] Non-convex optimization 非凸优化

- [ ] Nonlinear model 非线性模型

- [ ] Non-metric distance 非度量距离

- [ ] Non-negative matrix factorization 非负矩阵分解

- [ ] Non-ordinal attribute 无序属性

- [ ] Non-Saturating Game 非饱和博弈

- [ ] Norm 范数

- [ ] Normalization 归一化

- [ ] Nuclear norm 核范数

- [ ] Numerical attribute 数值属性

Letter O

- [ ] Objective function 目标函数

- [ ] Oblique decision tree 斜决策树

- [ ] Occam’s razor 奥卡姆剃刀

- [ ] Odds 几率

- [ ] Off-Policy 离策略

- [ ] One shot learning 一次性学习

- [ ] One-Dependent Estimator/ODE 独依赖估计

- [ ] On-Policy 在策略

- [ ] Ordinal attribute 有序属性

- [ ] Out-of-bag estimate 包外估计

- [ ] Output layer 输出层

- [ ] Output smearing 输出调制法

- [ ] Overfitting 过拟合/过配

- [ ] Oversampling 过采样

Letter P

- [ ] Paired t-test 成对 t 检验

- [ ] Pairwise 成对型

- [ ] Pairwise Markov property 成对马尔可夫性

- [ ] Parameter 参数

- [ ] Parameter estimation 参数估计

- [ ] Parameter tuning 调参

- [ ] Parse tree 解析树

- [ ] Particle Swarm Optimization/PSO 粒子群优化算法

- [ ] Part-of-speech tagging 词性标注

- [ ] Perceptron 感知机

- [ ] Performance measure 性能度量

- [ ] Plug and Play Generative Network 即插即用生成网络

- [ ] Plurality voting 相对多数投票法

- [ ] Polarity detection 极性检测

- [ ] Polynomial kernel function 多项式核函数

- [ ] Pooling 池化

- [ ] Positive class 正类

- [ ] Positive definite matrix 正定矩阵

- [ ] Post-hoc test 后续检验

- [ ] Post-pruning 后剪枝

- [ ] potential function 势函数

- [ ] Precision 查准率/准确率

- [ ] Prepruning 预剪枝

- [ ] Principal component analysis/PCA 主成分分析

- [ ] Principle of multiple explanations 多释原则

- [ ] Prior 先验

- [ ] Probability Graphical Model 概率图模型

- [ ] Proximal Gradient Descent/PGD 近端梯度下降

- [ ] Pruning 剪枝

- [ ] Pseudo-label 伪标记

Letter Q

- [ ] Quantized Neural Network 量化神经网络

- [ ] Quantum computer 量子计算机

- [ ] Quantum Computing 量子计算

- [ ] Quasi Newton method 拟牛顿法

Letter R

- [ ] Radial Basis Function/RBF 径向基函数

- [ ] Random Forest Algorithm 随机森林算法

- [ ] Random walk 随机漫步

- [ ] Recall 查全率/召回率

- [ ] Receiver Operating Characteristic/ROC 受试者工作特征

- [ ] Rectified Linear Unit/ReLU 线性修正单元

- [ ] Recurrent Neural Network 循环神经网络

- [ ] Recursive neural network 递归神经网络

- [ ] Reference model 参考模型

- [ ] Regression 回归

- [ ] Regularization 正则化

- [ ] Reinforcement learning/RL 强化学习

- [ ] Representation learning 表征学习

- [ ] Representer theorem 表示定理

- [ ] reproducing kernel Hilbert space/RKHS 再生核希尔伯特空间

- [ ] Re-sampling 重采样法

- [ ] Rescaling 再缩放

- [ ] Residual Mapping 残差映射

- [ ] Residual Network 残差网络

- [ ] Restricted Boltzmann Machine/RBM 受限玻尔兹曼机

- [ ] Restricted Isometry Property/RIP 限定等距性

- [ ] Re-weighting 重赋权法

- [ ] Robustness 稳健性/鲁棒性

- [ ] Root node 根结点

- [ ] Rule Engine 规则引擎

- [ ] Rule learning 规则学习

Letter S

- [ ] Saddle point 鞍点

- [ ] Sample space 样本空间

- [ ] Sampling 采样

- [ ] Score function 评分函数

- [ ] Self-Driving 自动驾驶

- [ ] Self-Organizing Map/SOM 自组织映射

- [ ] Semi-naive Bayes classifiers 半朴素贝叶斯分类器

- [ ] Semi-Supervised Learning 半监督学习

- [ ] semi-Supervised Support Vector Machine 半监督支持向量机

- [ ] Sentiment analysis 情感分析

- [ ] Separating hyperplane 分离超平面

- [ ] Sigmoid function Sigmoid 函数

- [ ] Similarity measure 相似度度量

- [ ] Simulated annealing 模拟退火

- [ ] Simultaneous localization and mapping 同步定位与地图构建

- [ ] Singular Value Decomposition 奇异值分解

- [ ] Slack variables 松弛变量

- [ ] Smoothing 平滑

- [ ] Soft margin 软间隔

- [ ] Soft margin maximization 软间隔最大化

- [ ] Soft voting 软投票

- [ ] Sparse representation 稀疏表征

- [ ] Sparsity 稀疏性

- [ ] Specialization 特化

- [ ] Spectral Clustering 谱聚类

- [ ] Speech Recognition 语音识别

- [ ] Splitting variable 切分变量

- [ ] Squashing function 挤压函数

- [ ] Stability-plasticity dilemma 可塑性-稳定性困境

- [ ] Statistical learning 统计学习

- [ ] State feature function 状态特征函数

- [ ] Stochastic gradient descent 随机梯度下降

- [ ] Stratified sampling 分层采样

- [ ] Structural risk 结构风险

- [ ] Structural risk minimization/SRM 结构风险最小化

- [ ] Subspace 子空间

- [ ] Supervised learning 监督学习/有导师学习

- [ ] support vector expansion 支持向量展式

- [ ] Support Vector Machine/SVM 支持向量机

- [ ] Surrogate loss 替代损失

- [ ] Surrogate function 替代函数

- [ ] Symbolic learning 符号学习

- [ ] Symbolism 符号主义

- [ ] Synset 同义词集

Letter T

- [ ] t-Distributed Stochastic Neighbour Embedding/t-SNE t-分布随机近邻嵌入

- [ ] Tensor 张量

- [ ] Tensor Processing Units/TPU 张量处理单元

- [ ] The least square method 最小二乘法

- [ ] Threshold 阈值

- [ ] Threshold logic unit 阈值逻辑单元

- [ ] Threshold-moving 阈值移动

- [ ] Time Step 时间步骤

- [ ] Tokenization 标记化

- [ ] Training error 训练误差

- [ ] Training instance 训练示例/训练例

- [ ] Transductive learning 直推学习

- [ ] Transfer learning 迁移学习

- [ ] Treebank 树库

- [ ] Trial-and-error 试错法

- [ ] True negative 真负类

- [ ] True positive 真正类

- [ ] True Positive Rate/TPR 真正例率

- [ ] Turing Machine 图灵机

- [ ] Twice-learning 二次学习

Letter U

- [ ] Underfitting 欠拟合/欠配

- [ ] Undersampling 欠采样

- [ ] Understandability 可理解性

- [ ] Unequal cost 非均等代价

- [ ] Unit-step function 单位阶跃函数

- [ ] Univariate decision tree 单变量决策树

- [ ] Unsupervised learning 无监督学习/无导师学习

- [ ] Unsupervised layer-wise training 无监督逐层训练

- [ ] Upsampling 上采样

Letter V

- [ ] Vanishing Gradient Problem 梯度消失问题

- [ ] Variational inference 变分推断

- [ ] VC Theory VC维理论

- [ ] Version space 版本空间

- [ ] Viterbi algorithm 维特比算法

- [ ] Von Neumann architecture 冯·诺伊曼架构

Letter W

- [ ] Wasserstein GAN/WGAN Wasserstein生成对抗网络

- [ ] Weak learner 弱学习器

- [ ] Weight 权重

- [ ] Weight sharing 权共享

- [ ] Weighted voting 加权投票法

- [ ] Within-class scatter matrix 类内散度矩阵

- [ ] Word embedding 词嵌入

- [ ] Word sense disambiguation 词义消歧

Letter Z

- [ ] Zero-data learning 零数据学习

- [ ] Zero-shot learning 零次学习

A

- [ ] approximations近似值

- [ ] arbitrary任意的,随意的

- [ ] affine仿射的

- [ ] amino acid氨基酸

- [ ] amenable适合的,易于处理的;经得起检验的

- [ ] axiom公理,原则

- [ ] abstract提取

- [ ] architecture架构,体系结构;建造业

- [ ] absolute绝对的

- [ ] arsenal军火库

- [ ] assignment分配

- [ ] algebra代数

- [ ] asymptotically渐近地

- [ ] appropriate恰当的

B

- [ ] bias偏差

- [ ] brevity简短,简洁;短暂

- [ ] broader更广泛的

- [ ] briefly简短地,简要地

- [ ] batch批量

C

- [ ] convergence 收敛,集中到一点

- [ ] convex凸的

- [ ] contours轮廓

- [ ] constraint约束

- [ ] constant常数,常量

- [ ] commercial商务的

- [ ] complementarity互补性

- [ ] coordinate ascent坐标上升

- [ ] clipping剪下物;剪报;修剪

- [ ] component分量;部件

- [ ] continuous连续的

- [ ] covariance协方差

- [ ] canonical正规的,正则的

- [ ] concave凹的

- [ ] corresponds相符合;相当;通信

- [ ] corollary推论

- [ ] concrete具体的事物,实在的东西

- [ ] cross validation交叉验证

- [ ] correlation相互关系

- [ ] convention约定

- [ ] cluster一簇

- [ ] centroids 质心,形心

- [ ] converge收敛

- [ ] computationally计算(机)的

- [ ] calculus微积分

D

- [ ] derive推导;获得,取得

- [ ] dual对偶的

- [ ] duality二元性;二象性;对偶性

- [ ] derivation推导;得到;起源

- [ ] denote预示,表示,是…的标志;意味着,[逻]指称

- [ ] divergence 散度;发散性

- [ ] dimension尺度,规格;维数

- [ ] dot小圆点

- [ ] distortion变形

- [ ] density概率密度函数

- [ ] discrete离散的

- [ ] discriminative判别式的;有识别能力的

- [ ] diagonal对角

- [ ] dispersion分散,散开

- [ ] determinant行列式;决定因素

- [ ] disjoint不相交的

E

- [ ] encounter遇到

- [ ] ellipses椭圆

- [ ] equality等式

- [ ] extra额外的

- [ ] empirical经验;观察

- [ ] enumerate列举,枚举,计数

- [ ] exceed超过,越出

- [ ] expectation期望

- [ ] efficient高效的

- [ ] endow赋予

- [ ] explicitly明确地,显式地

- [ ] exponential family指数族

- [ ] equivalently等价地

F

- [ ] feasible可行的

- [ ] foray初次尝试

- [ ] finite有限的,限定的

- [ ] forgo摒弃,放弃

- [ ] filter过滤

- [ ] frequentist频率学派(的)

- [ ] forward search前向式搜索

- [ ] formalize形式化

G

- [ ] generalized广义的,推广的

- [ ] generalization概括,归纳;普遍化;判断(根据不足)

- [ ] guarantee保证;抵押品

- [ ] generate形成,产生

- [ ] geometric margins几何间隔

- [ ] gap间隙;差距;裂口

- [ ] generative生成的;有生产力的

H

- [ ] heuristic启发式的;启发法;启发程序

- [ ] hone怀恋;磨

- [ ] hyperplane超平面

I

- [ ] initial最初的

- [ ] implement执行

- [ ] intuitive凭直觉获知的

- [ ] incremental增加的

- [ ] intercept截距

- [ ] intuition直觉

- [ ] instantiation实例化;实例

- [ ] indicator指示物,指示器

- [ ] iterative重复的,迭代的

- [ ] integral积分

- [ ] identical相等的;完全相同的

- [ ] indicate表示,指出

- [ ] invariance不变性,恒定性

- [ ] impose把…强加于

- [ ] intermediate中间的

- [ ] interpretation解释,翻译

J

- [ ] joint distribution联合分布

L

- [ ] lieu替代

- [ ] logarithmic对数的,用对数表示的

- [ ] latent潜在的

- [ ] Leave-one-out cross validation留一法交叉验证

M

- [ ] magnitude大小,量级

- [ ] mapping绘图,制图;映射

- [ ] matrix矩阵

- [ ] mutual相互的,共同的

- [ ] monotonically单调地

- [ ] minor较小的,次要的

- [ ] multinomial多项的

- [ ] multi-class classification多分类问题

N

- [ ] nasty讨厌的

- [ ] notation记号,表示法

- [ ] naïve朴素的

O

- [ ] obtain得到

- [ ] oscillate摆动

- [ ] optimization problem最优化问题

- [ ] objective function目标函数

- [ ] optimal最理想的

- [ ] orthogonal(矢量,矩阵等)正交的

- [ ] orientation方向

- [ ] ordinary普通的

- [ ] occasionally偶尔地

P

- [ ] partial derivative偏导数

- [ ] property性质

- [ ] proportional成比例的

- [ ] primal原始的,最初的

- [ ] permit允许

- [ ] pseudocode伪代码

- [ ] permissible可允许的

- [ ] polynomial多项式

- [ ] preliminary预备

- [ ] precision精度

- [ ] perturbation扰动;不安,扰乱

- [ ] posit假定,设想

- [ ] positive semi-definite半正定的

- [ ] parentheses圆括号

- [ ] posterior probability后验概率

- [ ] pictorially用图示的方式

- [ ] parameterize确定…的参数

- [ ] Poisson distribution泊松分布

- [ ] pertinent相关的

Q

- [ ] quadratic二次的

- [ ] quantity量,数量;分量

- [ ] query查询;疑问

R

- [ ] regularization正则化

- [ ] reoptimize重新优化

- [ ] restrict限制;限定;约束

- [ ] reminiscent回忆往事的;提醒的;使人联想…的(of)

- [ ] remark注意

- [ ] random variable随机变量

- [ ] respect考虑

- [ ] respectively各自的;分别的

- [ ] redundant过多的;冗余的

S

- [ ] susceptible敏感的

- [ ] stochastic随机的

- [ ] symmetric对称的

- [ ] sophisticated复杂的

- [ ] spurious假的;伪造的

- [ ] subtract减去;减法器

- [ ] simultaneously同时发生地;同步地

- [ ] suffice足够,满足

- [ ] scarce稀有的,难得的

- [ ] split分解,分离

- [ ] subset子集

- [ ] statistic统计量

- [ ] successive iterations连续的迭代

- [ ] scale标度

- [ ] sort of有几分的

- [ ] squares平方

T

- [ ] trajectory轨迹

- [ ] temporarily暂时的

- [ ] terminology专用名词

- [ ] tolerance容忍;公差

- [ ] thumb翻阅

- [ ] threshold阈,临界

- [ ] theorem定理

- [ ] tangent正切;切线

U

- [ ] unit-length vector单位向量

V

- [ ] valid有效的,正确的

- [ ] variance方差

- [ ] variable变量;变元

- [ ] vocabulary词汇

- [ ] valued经估价的;宝贵的

W

- [ ] wrapper包装
