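This article is based on the CSDN blog post "Installing the latest Kubernetes, single-node edition, v1.21.5" by qq759035366.

A single VirtualBox VM is used to install K8S plus Tungsten Fabric.

0. Pitfalls to avoid up front: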

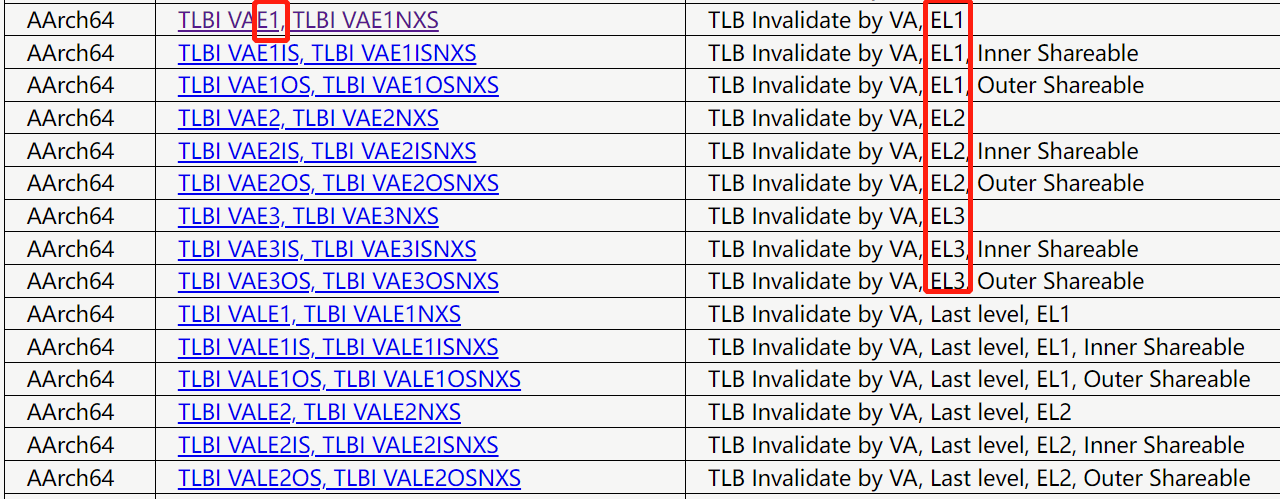

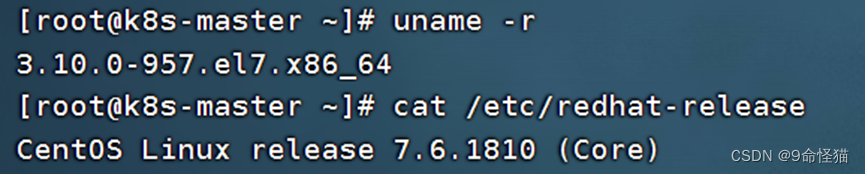

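0.1 Tungsten Fabric's vRouter requires specific Linux kernel versions. Only the kernel versions below work; with any other version the vRouter fails to start properly: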

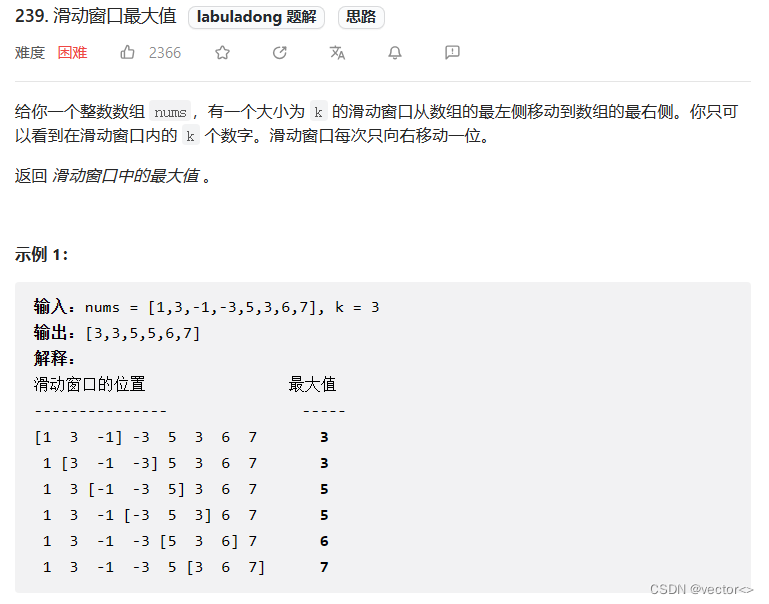

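0.2 VirtualBox configuration changes

0.2.1 To connect with a terminal tool, configure the ports as follows, where 10.0.2.15 is the VM's address.

0.3 VM cores

Assign 2 or more cores; otherwise some pods will fail to start.

I. Install K8S

1. Check the environment and confirm that the kernel version meets the requirement: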
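The check in this step can be sketched as a small script, assuming the supported kernel releases are copied by hand from the compatibility table in 0.1. The `SUPPORTED_KERNELS` variable and both function names are hypothetical helpers, not part of any tool used in this article.

```shell
#!/bin/sh
# Succeeds when the available core count ($1) meets the required minimum ($2).
has_enough_cores() {
  [ "$1" -ge "$2" ]
}

# Succeeds when the kernel release ($1) is an exact line of the
# newline-separated allow-list ($2).
kernel_is_supported() {
  printf '%s\n' "$2" | grep -qxF -- "$1"
}

# Hypothetical allow-list: fill in the releases from the vRouter compatibility table.
SUPPORTED_KERNELS="${SUPPORTED_KERNELS:-}"

cores=$(getconf _NPROCESSORS_ONLN)
kernel=$(uname -r)

if has_enough_cores "$cores" 2; then
  echo "cores: $cores (ok)"
else
  echo "cores: $cores (need at least 2)"
fi

if kernel_is_supported "$kernel" "$SUPPORTED_KERNELS"; then
  echo "kernel: $kernel (in allow-list)"
else
  echo "kernel: $kernel (not in allow-list, check the compatibility table)"
fi
```

With an empty allow-list the kernel check always reports "not in allow-list", which is the safe default until the list is filled in.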

2. Change the hostname:

hostnamectl set-hostname k8s-master && bash

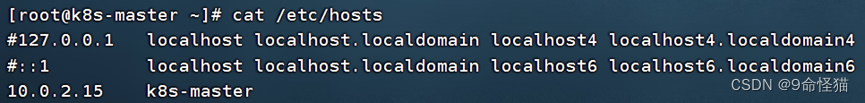

3. Edit the hosts file
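The article does not show the entry itself. A minimal sketch, assuming the intended mapping is the VM address 10.0.2.15 to the hostname k8s-master set in step 2; the `add_host_entry` helper and the `HOSTS_FILE` variable are hypothetical.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts-style file unless that exact line is already present.
add_host_entry() {
  # $1 = file, $2 = IP address, $3 = hostname
  grep -qxF -- "$2 $3" "$1" 2>/dev/null || printf '%s %s\n' "$2" "$3" >> "$1"
}

# HOSTS_FILE defaults to a scratch file so the sketch can be tried safely;
# set HOSTS_FILE=/etc/hosts (as root) to apply the change for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"
add_host_entry "$HOSTS_FILE" 10.0.2.15 k8s-master
add_host_entry "$HOSTS_FILE" 10.0.2.15 k8s-master   # second call is a no-op
cat "$HOSTS_FILE"
```

Checking for the exact line first keeps the script safe to re-run.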

4. Disable the firewall, SELinux, and swap

[root@k8s-master ~]# systemctl stop firewalld

[root@k8s-master ~]# systemctl disable firewalld

[root@k8s-master ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

[root@k8s-master ~]# setenforce 0

[root@k8s-master ~]# swapoff -a

[root@k8s-master ~]# sed -i 's/.*swap.*/#&/' /etc/fstab

5. Pass bridged IPv4 traffic to the iptables chains

[root@k8s-master ~]# cat /etc/sysctl.d/k8s.conf # the file contents are as follows

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
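The article shows the file only through `cat`. One way to create it is sketched below; the `write_k8s_sysctl` helper and the `SYSCTL_FILE` variable are hypothetical, and the note about `br_netfilter` is an assumption about the host kernel rather than something the article states.

```shell
#!/bin/sh
# Write the two bridge settings shown above to the given path.
write_k8s_sysctl() {
  cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# SYSCTL_FILE defaults to a scratch file; set it to /etc/sysctl.d/k8s.conf
# (as root) for real use.
SYSCTL_FILE="${SYSCTL_FILE:-$(mktemp)}"
write_k8s_sysctl "$SYSCTL_FILE"
cat "$SYSCTL_FILE"

# Assumption: on the real host these keys may be missing until the bridge
# netfilter module is loaded, in which case run first:
#   modprobe br_netfilter
```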

[root@k8s-master ~]# sysctl --system # apply the settings

6. Time synchronization

[root@k8s-master ~]# yum install ntpdate -y

[root@k8s-master ~]# ntpdate time.windows.com

7. Install Docker

[root@k8s-master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@k8s-master ~]# yum -y install docker-ce-20.10.12-3.el7 # install version 20.10.12

[root@k8s-master ~]# systemctl enable docker && systemctl start docker && systemctl status docker

[root@k8s-master ~]# docker --version # confirm that it reports 20.10.12

8. Docker registry mirror

[root@k8s-master ~]# cat /etc/docker/daemon.json # the file contents are as follows:
{
  "registry-mirrors": ["https://qj799ren.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {"max-size": "100m"},
  "storage-driver": "overlay2"
}

[root@k8s-master ~]# systemctl restart docker

9. Add the Kubernetes yum repository

[root@k8s-master ~]# cat /etc/yum.repos.d/kubernetes.repo # file contents

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

10. Install kubeadm, kubelet, and kubectl

[root@k8s-master ~]# yum -y install kubelet-1.21.5-0 kubeadm-1.21.5-0 kubectl-1.21.5-0 # v1.21.5 is used here; note the version number, it is needed below

[root@k8s-master ~]# systemctl enable kubelet

11. Deploy the Kubernetes master

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address=10.0.2.15 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.5 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=all

Parameter notes:

–apiserver-advertise-address=10.0.2.15 是master主机的IP地址

–image-repository registry.aliyuncs.com/google_containers 镜像地址,使用的阿里云仓库地址:repository registry.aliyuncs.com/google_containers

–kubernetes-version=v1.21.5 上一步记录的k8s软件版本号

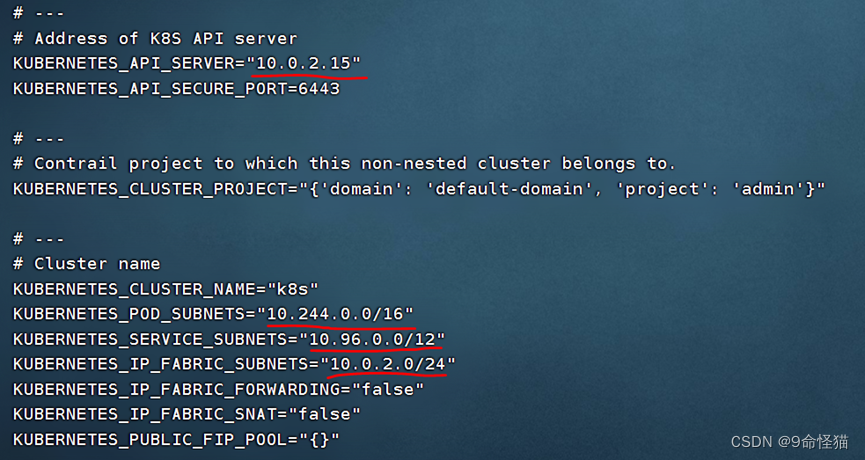

–service-cidr=10.96.0.0/12 记住这个地址段,10.96.0.0/12 后面配置Tungsten Fabric会用到

–pod-network-cidr=10.244.0.0/16 k8s内部的pod节点之间网络可以使用的IP段,不能和service-cidr写一样,如果不知道怎么配,就先用这个10.244.0.0/16

–ignore-preflight-errors=all 忽略错误
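The article only requires that the two ranges differ. The sketch below applies a stricter check, that they do not overlap at all, using plain shell arithmetic. All three function names are hypothetical, and 64-bit shell arithmetic is assumed.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  _old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$_old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address (as integers) of a CIDR block.
cidr_range() {
  _size=$(( 1 << (32 - ${1#*/}) ))
  _base=$(ip_to_int "${1%/*}")
  _start=$(( $_base - $_base % $_size ))
  echo "$_start $(( $_start + $_size - 1 ))"
}

# Succeed when two CIDR blocks share at least one address.
cidrs_overlap() {
  # After this line: $1/$2 = range of the first block, $3/$4 = range of the second.
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

if cidrs_overlap 10.96.0.0/12 10.244.0.0/16; then
  echo "service and pod CIDRs overlap"
else
  echo "service and pod CIDRs are disjoint"
fi
```

For the values used here, 10.96.0.0/12 spans 10.96.0.0 through 10.111.255.255 and 10.244.0.0/16 lies outside that span, so the script reports the ranges as disjoint.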

A long block of English output beginning with the following line means the installation succeeded:

Your Kubernetes control-plane has initialized successfully!

12. Preparation before using the cluster

Run the following commands; the long English output above tells you to run them:

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

13. Check the node status

[root@k8s-master ~]# kubectl get nodes # the node is NotReady at this point

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane,master 5m16s v1.21.5

14. Install a pod network plugin

Calico is chosen here, using the following yaml:

# Source: calico/templates/calico-config.yaml
# This ConfigMap is used to configure a self-hosted Calico installation.
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # Typha is disabled.
  typha_service_name: "none"
  # Configure the backend to use.
  calico_backend: "bird"
  # Configure the MTU to use
  veth_mtu: "1440"
  # The CNI network configuration to install on each node. The special
  # values in this config will be automatically populated.
  cni_network_config: |-
    {
      "name": "k8s-pod-network",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "calico",
          "log_level": "info",
          "datastore_type": "kubernetes",
          "nodename": "__KUBERNETES_NODE_NAME__",
          "mtu": __CNI_MTU__,
          "ipam": {
            "type": "calico-ipam"
          },
          "policy": {
            "type": "k8s"
          },
          "kubernetes": {
            "kubeconfig": "__KUBECONFIG_FILEPATH__"
          }
        },
        {
          "type": "portmap",
          "snat": true,
          "capabilities": {"portMappings": true}
        }
      ]
    }
---

# Source: calico/templates/kdd-crds.yaml
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: felixconfigurations.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: FelixConfiguration
    plural: felixconfigurations
    singular: felixconfiguration
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ipamblocks.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: IPAMBlock
    plural: ipamblocks
    singular: ipamblock
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: blockaffinities.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: BlockAffinity
    plural: blockaffinities
    singular: blockaffinity
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ipamhandles.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: IPAMHandle
    plural: ipamhandles
    singular: ipamhandle
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ipamconfigs.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: IPAMConfig
    plural: ipamconfigs
    singular: ipamconfig
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: bgppeers.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: BGPPeer
    plural: bgppeers
    singular: bgppeer
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: bgpconfigurations.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: BGPConfiguration
    plural: bgpconfigurations
    singular: bgpconfiguration
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ippools.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: IPPool
    plural: ippools
    singular: ippool
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: hostendpoints.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: HostEndpoint
    plural: hostendpoints
    singular: hostendpoint
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: clusterinformations.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: ClusterInformation
    plural: clusterinformations
    singular: clusterinformation
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: globalnetworkpolicies.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: GlobalNetworkPolicy
    plural: globalnetworkpolicies
    singular: globalnetworkpolicy
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: globalnetworksets.crd.projectcalico.org
spec:
  scope: Cluster
  group: crd.projectcalico.org
  version: v1
  names:
    kind: GlobalNetworkSet
    plural: globalnetworksets
    singular: globalnetworkset
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: networkpolicies.crd.projectcalico.org
spec:
  scope: Namespaced
  group: crd.projectcalico.org
  version: v1
  names:
    kind: NetworkPolicy
    plural: networkpolicies
    singular: networkpolicy
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: networksets.crd.projectcalico.org
spec:
  scope: Namespaced
  group: crd.projectcalico.org
  version: v1
  names:
    kind: NetworkSet
    plural: networksets
    singular: networkset

---

# Source: calico/templates/rbac.yaml
# Include a clusterrole for the kube-controllers component,
# and bind it to the calico-kube-controllers serviceaccount.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-kube-controllers
rules:
  # Nodes are watched to monitor for deletions.
  - apiGroups: [""]
    resources:
      - nodes
    verbs:
      - watch
      - list
      - get
  # Pods are queried to check for existence.
  - apiGroups: [""]
    resources:
      - pods
    verbs:
      - get
  # IPAM resources are manipulated when nodes are deleted.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - ippools
    verbs:
      - list
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - blockaffinities
      - ipamblocks
      - ipamhandles
    verbs:
      - get
      - list
      - create
      - update
      - delete
  # Needs access to update clusterinformations.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - clusterinformations
    verbs:
      - get
      - create
      - update
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-kube-controllers
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-kube-controllers
subjects:
- kind: ServiceAccount
  name: calico-kube-controllers
  namespace: kube-system
---
# Include a clusterrole for the calico-node DaemonSet,
# and bind it to the calico-node serviceaccount.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-node
rules:
  # The CNI plugin needs to get pods, nodes, and namespaces.
  - apiGroups: [""]
    resources:
      - pods
      - nodes
      - namespaces
    verbs:
      - get
  - apiGroups: [""]
    resources:
      - endpoints
      - services
    verbs:
      # Used to discover service IPs for advertisement.
      - watch
      - list
      # Used to discover Typhas.
      - get
  - apiGroups: [""]
    resources:
      - nodes/status
    verbs:
      # Needed for clearing NodeNetworkUnavailable flag.
      - patch
      # Calico stores some configuration information in node annotations.
      - update
  # Watch for changes to Kubernetes NetworkPolicies.
  - apiGroups: ["networking.k8s.io"]
    resources:
      - networkpolicies
    verbs:
      - watch
      - list
  # Used by Calico for policy information.
  - apiGroups: [""]
    resources:
      - pods
      - namespaces
      - serviceaccounts
    verbs:
      - list
      - watch
  # The CNI plugin patches pods/status.
  - apiGroups: [""]
    resources:
      - pods/status
    verbs:
      - patch
  # Calico monitors various CRDs for config.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - globalfelixconfigs
      - felixconfigurations
      - bgppeers
      - globalbgpconfigs
      - bgpconfigurations
      - ippools
      - ipamblocks
      - globalnetworkpolicies
      - globalnetworksets
      - networkpolicies
      - networksets
      - clusterinformations
      - hostendpoints
      - blockaffinities
    verbs:
      - get
      - list
      - watch
  # Calico must create and update some CRDs on startup.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - ippools
      - felixconfigurations
      - clusterinformations
    verbs:
      - create
      - update
  # Calico stores some configuration information on the node.
  - apiGroups: [""]
    resources:
      - nodes
    verbs:
      - get
      - list
      - watch
  # These permissions are only required for upgrade from v2.6, and can
  # be removed after upgrade or on fresh installations.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - bgpconfigurations
      - bgppeers
    verbs:
      - create
      - update
  # These permissions are required for Calico CNI to perform IPAM allocations.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - blockaffinities
      - ipamblocks
      - ipamhandles
    verbs:
      - get
      - list
      - create
      - update
      - delete
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - ipamconfigs
    verbs:
      - get
  # Block affinities must also be watchable by confd for route aggregation.
  - apiGroups: ["crd.projectcalico.org"]
    resources:
      - blockaffinities
    verbs:
      - watch
  # The Calico IPAM migration needs to get daemonsets. These permissions can be
  # removed if not upgrading from an installation using host-local IPAM.
  - apiGroups: ["apps"]
    resources:
      - daemonsets
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: calico-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-node
subjects:
- kind: ServiceAccount
  name: calico-node
  namespace: kube-system
---

# Source: calico/templates/calico-node.yaml
# This manifest installs the calico-node container, as well
# as the CNI plugins and network config on
# each master and worker node in a Kubernetes cluster.
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: calico-node
      annotations:
        # This, along with the CriticalAddonsOnly toleration below,
        # marks the pod as a critical add-on, ensuring it gets
        # priority scheduling and that its resources are reserved
        # if it ever gets evicted.
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      nodeSelector:
        beta.kubernetes.io/os: linux
      hostNetwork: true
      tolerations:
        # Make sure calico-node gets scheduled on all nodes.
        - effect: NoSchedule
          operator: Exists
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoExecute
          operator: Exists
      serviceAccountName: calico-node
      # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
      # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
      terminationGracePeriodSeconds: 0
      priorityClassName: system-node-critical
      initContainers:
        # This container performs upgrade from host-local IPAM to calico-ipam.
        # It can be deleted if this is a fresh installation, or if you have already
        # upgraded to use calico-ipam.
        - name: upgrade-ipam
          image: calico/cni:v3.11.3
          command: ["/opt/cni/bin/calico-ipam", "-upgrade"]
          env:
            - name: KUBERNETES_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
          volumeMounts:
            - mountPath: /var/lib/cni/networks
              name: host-local-net-dir
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
          securityContext:
            privileged: true
        # This container installs the CNI binaries
        # and CNI network config file on each node.
        - name: install-cni
          image: calico/cni:v3.11.3
          command: ["/install-cni.sh"]
          env:
            # Name of the CNI config file to create.
            - name: CNI_CONF_NAME
              value: "10-calico.conflist"
            # The CNI network config to install on each node.
            - name: CNI_NETWORK_CONFIG
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cni_network_config
            # Set the hostname based on the k8s node name.
            - name: KUBERNETES_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # CNI MTU Config variable
            - name: CNI_MTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Prevents the container from sleeping forever.
            - name: SLEEP
              value: "false"
          volumeMounts:
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
          securityContext:
            privileged: true
        # Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes
        # to communicate with Felix over the Policy Sync API.
        - name: flexvol-driver
          image: calico/pod2daemon-flexvol:v3.11.3
          volumeMounts:
            - name: flexvol-driver-host
              mountPath: /host/driver
          securityContext:
            privileged: true
      containers:
        # Runs calico-node container on each Kubernetes node. This
        # container programs network policy and routes on each
        # host.
        - name: calico-node
          image: calico/node:v3.11.3
          env:
            # Use Kubernetes API as the backing datastore.
            - name: DATASTORE_TYPE
              value: "kubernetes"
            # Wait for the datastore.
            - name: WAIT_FOR_DATASTORE
              value: "true"
            # Set based on the k8s node name.
            - name: NODENAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # Choose the backend to use.
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # Auto-detect the BGP IP address.
            - name: IP
              value: "autodetect"
            # Enable IPIP
            - name: CALICO_IPV4POOL_IPIP
              value: "Always"
            # Set MTU for tunnel device used if ipip is enabled
            - name: FELIX_IPINIPMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # The default IPv4 pool to create on startup if none exists. Pod IPs will be
            # chosen from this range. Changing this value after installation will have
            # no effect. This should fall within `--cluster-cidr`.
            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"
            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # Set Felix endpoint to host default action to ACCEPT.
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            # Disable IPv6 on Kubernetes.
            - name: FELIX_IPV6SUPPORT
              value: "false"
            # Set Felix logging to "info"
            - name: FELIX_LOGSEVERITYSCREEN
              value: "info"
            - name: FELIX_HEALTHENABLED
              value: "true"
          securityContext:
            privileged: true
          resources:
            requests:
              cpu: 250m
          livenessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-live
                - -bird-live
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
          readinessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-ready
                - -bird-ready
            periodSeconds: 10
          volumeMounts:
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /run/xtables.lock
              name: xtables-lock
              readOnly: false
            - mountPath: /var/run/calico
              name: var-run-calico
              readOnly: false
            - mountPath: /var/lib/calico
              name: var-lib-calico
              readOnly: false
            - name: policysync
              mountPath: /var/run/nodeagent
      volumes:
        # Used by calico-node.
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
        - name: var-lib-calico
          hostPath:
            path: /var/lib/calico
        - name: xtables-lock
          hostPath:
            path: /run/xtables.lock
            type: FileOrCreate
        # Used to install CNI.
        - name: cni-bin-dir
          hostPath:
            path: /opt/cni/bin
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d
        # Mount in the directory for host-local IPAM allocations. This is
        # used when upgrading from host-local to calico-ipam, and can be removed
        # if not using the upgrade-ipam init container.
        - name: host-local-net-dir
          hostPath:
            path: /var/lib/cni/networks
        # Used to create per-pod Unix Domain Sockets
        - name: policysync
          hostPath:
            type: DirectoryOrCreate
            path: /var/run/nodeagent
        # Used to install Flex Volume Driver
        - name: flexvol-driver-host
          hostPath:
            type: DirectoryOrCreate
            path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-node
  namespace: kube-system
---
# Source: calico/templates/calico-kube-controllers.yaml
# See https://github.com/projectcalico/kube-controllers
apiVersion: apps/v1
kind: Deployment
metadata:
  name: calico-kube-controllers
  namespace: kube-system
  labels:
    k8s-app: calico-kube-controllers
spec:
  # The controllers can only have a single active instance.
  replicas: 1
  selector:
    matchLabels:
      k8s-app: calico-kube-controllers
  strategy:
    type: Recreate
  template:
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      nodeSelector:
        beta.kubernetes.io/os: linux
      tolerations:
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      serviceAccountName: calico-kube-controllers
      priorityClassName: system-cluster-critical
      containers:
        - name: calico-kube-controllers
          image: calico/kube-controllers:v3.11.3
          env:
            # Choose which controllers to run.
            - name: ENABLED_CONTROLLERS
              value: node
            - name: DATASTORE_TYPE
              value: kubernetes
          readinessProbe:
            exec:
              command:
                - /usr/bin/check-status
                - -r
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-kube-controllers
  namespace: kube-system

Note: the value of CALICO_IPV4POOL_CIDR must be the same as the --pod-network-cidr passed to kubeadm init earlier: --pod-network-cidr=10.244.0.0/16
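The requirement in the note can be checked mechanically before applying the manifest. This is a minimal sketch, assuming the manifest is saved as calico.yaml with normal YAML line breaks. The `calico_pool_cidr` helper and the `MANIFEST` and `POD_CIDR` variables are hypothetical.

```shell
#!/bin/sh
# Print the value on the "value:" line that follows
# "- name: CALICO_IPV4POOL_CIDR" in a manifest.
calico_pool_cidr() {
  awk '
    found && $1 == "value:" { gsub(/"/, "", $2); print $2; exit }
    /name: CALICO_IPV4POOL_CIDR/ { found = 1 }
  ' "$1"
}

# Hypothetical inputs: the manifest path and the CIDR passed to kubeadm init.
MANIFEST="${MANIFEST:-calico.yaml}"
POD_CIDR="${POD_CIDR:-10.244.0.0/16}"

if [ -f "$MANIFEST" ]; then
  if [ "$(calico_pool_cidr "$MANIFEST")" = "$POD_CIDR" ]; then
    echo "CALICO_IPV4POOL_CIDR matches $POD_CIDR"
  else
    echo "CALICO_IPV4POOL_CIDR does not match $POD_CIDR"
  fi
fi
```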

Deploy calico:

[root@k8s-master ~]# kubectl apply -f calico.yaml

15. Verify the network

Check the node status again:

[root@k8s-master ~]# kubectl get nodes # Ready means the network is working

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 18m v1.21.5

Check the pods; they should all be Running. If the host machine and the VM are short on resources, this can take 10 minutes or more.

[root@k8s-master ~]# kubectl get pods -n kube-system

16. Remove the taint

The master node is tainted by default; remove the taint:

[root@k8s-master ~]# kubectl taint nodes --all node-role.kubernetes.io/master-

17. Set up TAB completion for kubectl

[root@k8s-master ~]# yum -y install bash-completion # install the completion package

[root@k8s-master ~]# kubectl completion bash

[root@k8s-master ~]# source /usr/share/bash-completion/bash_completion

[root@k8s-master ~]# kubectl completion bash >/etc/profile.d/kubectl.sh

[root@k8s-master ~]# source /etc/profile.d/kubectl.sh

Add one line at the end of /root/.bashrc:

source /etc/profile.d/kubectl.sh

The single-node K8S installation is now complete.

II. Install Tungsten Fabric

1. Label the master node

kubectl label node <Master-name> node-role.opencontrail.org/controller=true

2. Download the Tungsten Fabric installation package

git clone https://github.com/tungstenfabric/tf-container-builder.git

3. Edit the configuration file:

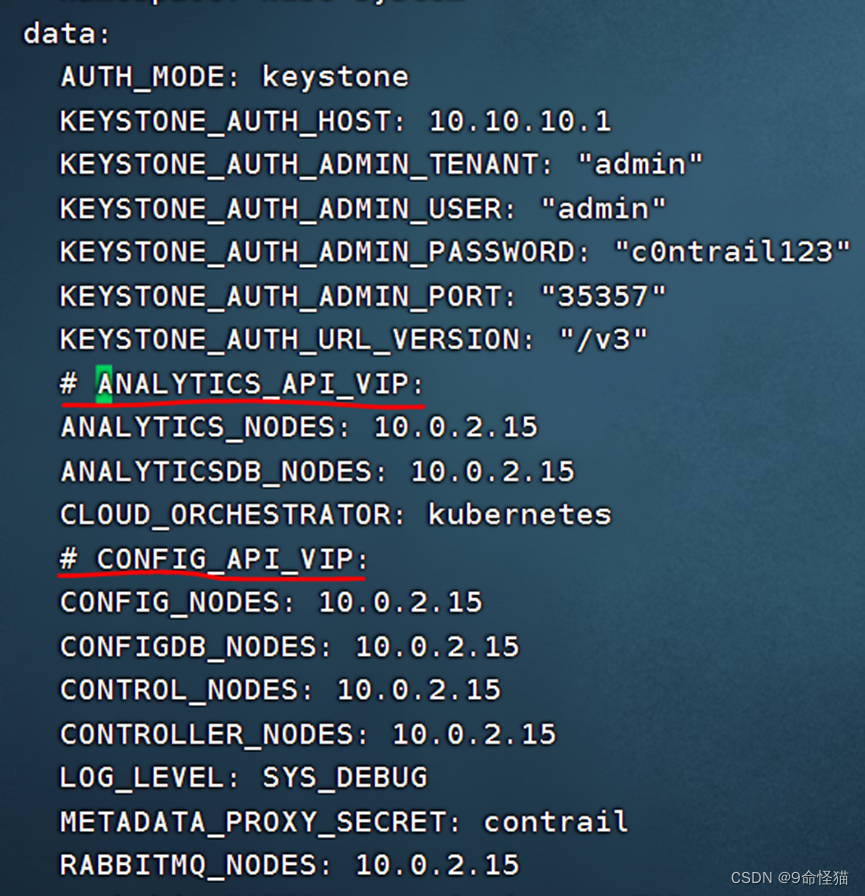

cp <your_directory>/sample_config_files/kubernetes/common.env.sample.non_nested_mode <your_directory>/tf-container-builder/common.env

Edit the network settings in common.env:
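The article does not list the settings to change. The sketch below sets one key in an env-style file, assuming the service range from kubeadm init (10.96.0.0/12) goes into a variable named `KUBERNETES_SERVICE_SUBNETS`. That variable name, the `set_env_var` helper, and the `ENV_FILE` variable are assumptions to verify against the sample file, not something the article confirms.

```shell
#!/bin/sh
# Set KEY=VALUE in an env file: rewrite the line if KEY exists, append otherwise.
set_env_var() {
  # $1 = file, $2 = key, $3 = value
  if grep -q "^$2=" "$1" 2>/dev/null; then
    _tmp=$(mktemp)
    awk -v k="$2" -v v="$3" -F= '$1 == k { print k "=" v; next } { print }' "$1" > "$_tmp"
    cat "$_tmp" > "$1"
    rm -f "$_tmp"
  else
    printf '%s=%s\n' "$2" "$3" >> "$1"
  fi
}

# ENV_FILE defaults to a scratch file; point it at the copied common.env for real use.
ENV_FILE="${ENV_FILE:-$(mktemp)}"
# Assumed variable name; check it against the sample file before relying on it.
set_env_var "$ENV_FILE" KUBERNETES_SERVICE_SUBNETS 10.96.0.0/12
cat "$ENV_FILE"
```

Rewriting an existing key in place, rather than always appending, avoids duplicate definitions when the script is run more than once.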

4. Generate the yaml file

cd <your_directory>/kubernetes/manifests

bash resolve-manifest.sh contrail-non-nested-kubernetes.yaml > non-nested-contrail.yaml

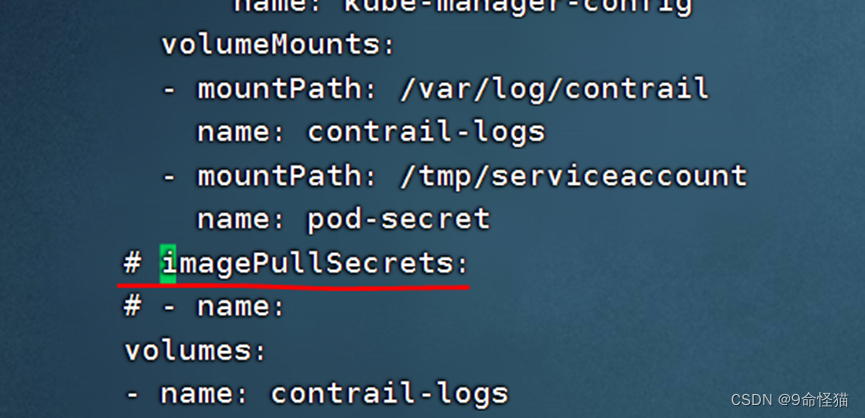

5. Edit the newly generated yaml file

Change the node label to the master node's label (see step 1)

Comment out imagePullSecret

Comment out ANALYTICS_API_VIP and CONFIG_API_VIP
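The two comment-out edits can be scripted. This is a rough sketch, assuming the generated file uses `imagePullSecrets:` followed by single-line `- name: ...` list items and has the two VIP settings as `KEY: value` lines. Those layout assumptions and the `comment_out_keys` helper are hypothetical, so review the output before applying it.

```shell
#!/bin/sh
# Comment out ANALYTICS_API_VIP / CONFIG_API_VIP lines, and each imagePullSecrets
# key together with the single-line list items directly below it.
comment_out_keys() {
  awk '
    /^[ \t]*imagePullSecrets:/ { print "#" $0; in_list = 1; next }
    in_list && /^[ \t]*-[ \t]/ { print "#" $0; next }
    { in_list = 0 }
    /^[ \t]*(ANALYTICS_API_VIP|CONFIG_API_VIP):/ { print "#" $0; next }
    { print }
  ' "$1"
}

# Hypothetical input path; the edited copy is written next to it.
MANIFEST="${MANIFEST:-non-nested-contrail.yaml}"
if [ -f "$MANIFEST" ]; then
  comment_out_keys "$MANIFEST" > "$MANIFEST.edited"
  echo "wrote $MANIFEST.edited"
fi
```

Writing to a separate file leaves the generated manifest untouched, so the result can be compared with `diff` first.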

6. Deploy Tungsten Fabric

kubectl apply -f non-nested-contrail.yaml

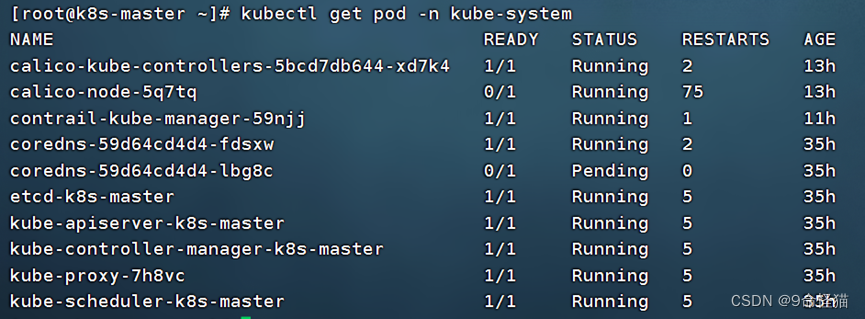

7. Check the pods

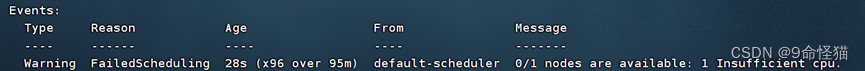

The coredns Pending issue is caused by:

Ignore it for now.
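To see from the command line which pods are still not up, such as the pending coredns pod, the table printed by `kubectl get pods` can be filtered. This is a minimal sketch, assuming the default column layout (NAME, READY, STATUS, RESTARTS, AGE) for a single namespace; the `pods_not_ready` helper is hypothetical.

```shell
#!/bin/sh
# Read `kubectl get pods` table output on stdin and print the names of pods
# whose STATUS column is neither Running nor Completed.
pods_not_ready() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}

# Only query the cluster when kubectl is actually installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl get pods -n kube-system | pods_not_ready
fi
```

An empty result means every pod in the namespace is Running or Completed.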