Scraping HD Wallpapers from the LOL Skin Site with the Scrapy Framework

Lan 2020-03-06 21:22

Packaged build: click to enter

Code:

Spider file
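The spider below turns every href it extracts into an absolute URL by joining it with the site root through `urllib.parse.urljoin`. As a rough standalone sketch of that step (the helper name `absolutize` and the sample hrefs are hypothetical, chosen only for illustration):

```python
from urllib import parse

BASE_URL = 'https://lolskin.cn'  # site root, the same value the spider uses


def absolutize(hrefs, base=BASE_URL):
    """Resolve each href against the base URL (hypothetical helper)."""
    return [parse.urljoin(base, href) for href in hrefs]


# Made-up hrefs: root-relative, path-relative, and already absolute.
links = absolutize([
    '/champions/1.html',
    'skin/2.html',
    'https://lolskin.cn/x.html',
])
print(links)
```

An href that is already absolute passes through unchanged, so the same call is safe whichever form the page uses.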

# -*- coding: utf-8 -*-
from urllib import parse

import scrapy


class LolskinSpider(scrapy.Spider):
    name = 'lolskin'
    allowed_domains = ['lolskin.cn']
    start_urls = ['https://lolskin.cn/champions.html']
    # Site root used to resolve relative links
    csurl = 'https://lolskin.cn'

    # Collect the links to every champion page
    def parse(self, response):
        urls = response.xpath('//div[2]/div[1]/div/ul/li/a/@href').extract()
        for url in urls:
            yield scrapy.Request(url=parse.urljoin(self.csurl, url), dont_filter=True, callback=self.bizhi)

    # Collect the links to every skin of a champion
    def bizhi(self, response):
        skins = response.xpath('//td/a/@href').extract()
        for skin in skins:
            yield scrapy.Request(url=parse.urljoin(self.csurl, skin), dont_filter=True, callback=self.get_bzurl)

    # Scrape each skin page and extract the wallpaper links
    def get_bzurl(self, response):
        image_urls = response.xpath('//body/div[1]/div/a/@href').extract()
        image_name = response.xpath('//h1/text()').extract()
        yield {
            'image_urls': image_urls,
            'image_name': image_name
        }

items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class PracticeItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    # titles = scrapy.Field()
    # yxpngs = scrapy.Field()
    urls = scrapy.Field()
    skin_name = scrapy.Field()  # skin name
    image_urls = scrapy.Field()  # skin wallpaper URLs
    images = scrapy.Field()

pipelines.py
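The `LoLPipeline` that follows saves each wallpaper as `<champion>/<skin name>.jpg`, taking the folder name from the `/skin/<champion>/` part of the image URL. Here is a minimal sketch of that path derivation on its own. The function name `build_image_path` and the sample URL are hypothetical, and unlike the pipeline it returns `None` instead of raising when the URL has no such segment:

```python
import re


def build_image_path(url, names):
    """Return '<champion>/<skin name>.jpg', or None when it cannot be derived."""
    match = re.search(r'/skin/(.*?)/', url)
    if match is None or not names:
        return None
    # names is the list produced by the //h1/text() XPath; use the first entry.
    return f'{match.group(1)}/{names[0]}.jpg'


# Made-up URL and skin name, for illustration only.
path = build_image_path('https://lolskin.cn/skin/ahri/1.jpg', ['Foxfire Ahri'])
print(path)
```

Checking for a missing match up front is one way to keep a single unexpected URL from stopping the download of the rest.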

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import os
import re

import scrapy
from scrapy.pipelines.images import ImagesPipeline


# class PracticePipeline(object):
#     def __init__(self):
#         self.file = open('text.csv', 'a+')
#
#     def process_item(self, item, spider):
#         # os.chdir('lolskin')
#         # for title in item['titles']:
#         #     os.makedirs(title)
#         skin_name = item['skin_name']
#         skin_jpg = item['skin_jpg']
#         for i in range(len(skin_name)):
#             self.file.write(f'{skin_name[i]},{skin_jpg}\n')
#         self.file.flush()
#         return item
#
#     def down_bizhi(self, item, spider):
#         self.file.close()


class LoLPipeline(ImagesPipeline):
    def get_media_requests(self, item, info):
        for image_url in item['image_urls']:
            yield scrapy.Request(image_url, meta={'image_name': item['image_name']})

    # Change the storage path and file name of each downloaded image.
    # Newer Scrapy releases also pass `item` as a keyword argument; it is unused here.
    def file_path(self, request, response=None, info=None, *, item=None):
        image_name = re.findall(r'/skin/(.*?)/', request.url)[0] + '/' + request.meta['image_name'][0] + '.jpg'
        return image_name

settings.py
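The `IMAGES_STORE` setting in the file below is a Windows path written with backslashes. In a regular Python string literal a backslash can start an escape sequence, so a raw string or forward slashes is usually the safer spelling. A small sketch of the difference (the `C:\new` path is a made-up example):

```python
from pathlib import PureWindowsPath

# Two equivalent ways to write the same Windows folder safely.
raw_path = r'E:\Python\scrapy\practice\practice\LOLskin'
forward_path = 'E:/Python/scrapy/practice/practice/LOLskin'
same_folder = PureWindowsPath(raw_path) == PureWindowsPath(forward_path)

# Made-up path showing the pitfall: '\n' in a regular literal is a newline.
regular = 'C:\new'
raw = r'C:\new'
print(same_folder, len(regular), len(raw))
```

The path used in this project happens to contain no recognised escape sequence, but recent Python versions still warn about invalid escapes such as `\P`.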

# -*- coding: utf-8 -*-

# Scrapy settings for practice project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://docs.scrapy.org/en/latest/topics/settings.html
#     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

import os

BOT_NAME = 'practice'

SPIDER_MODULES = ['practice.spiders']
NEWSPIDER_MODULE = 'practice.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
# USER_AGENT = 'practice (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
# CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
# Set the download delay
DOWNLOAD_DELAY = 1
# The download delay setting will honor only one of:
# CONCURRENT_REQUESTS_PER_DOMAIN = 16
# CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
# COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
# TELNETCONSOLE_ENABLED = False

# Override the default request headers:
# DEFAULT_REQUEST_HEADERS = {
#     'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#     'Accept-Language': 'en',
# }

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
# SPIDER_MIDDLEWARES = {
#     'practice.middlewares.PracticeSpiderMiddleware': 543,
# }

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
# DOWNLOADER_MIDDLEWARES = {
#     'practice.middlewares.PracticeDownloaderMiddleware': 543,
# }

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
# EXTENSIONS = {
#     'scrapy.extensions.telnet.TelnetConsole': None,
# }

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    # 'practice.pipelines.PracticePipeline': 300,
    # 'scrapy.pipelines.images.ImagesPipeline': 1,
    'practice.pipelines.LoLPipeline': 1
}

# Folder where the downloaded images are stored
# (raw string so the backslashes are not treated as escape sequences)
IMAGES_STORE = r'E:\Python\scrapy\practice\practice\LOLskin'

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
# AUTOTHROTTLE_ENABLED = True
# The initial download delay
# AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
# AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
# AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
# AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
# HTTPCACHE_ENABLED = True
# HTTPCACHE_EXPIRATION_SECS = 0
# HTTPCACHE_DIR = 'httpcache'
# HTTPCACHE_IGNORE_HTTP_CODES = []
# HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

main.py

from scrapy.cmdline import execute

# Equivalent to running `scrapy crawl lolskin` from the project directory
execute(['scrapy', 'crawl', 'lolskin'])

Article URL: https://www.lanol.cn/post/24.html

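Copyright notice: This is an original article and the copyright belongs to Lan. Sharing is welcome, but please credit the source when reposting!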

版权声明:本文为原创文章,版权归 Lan 所有,欢迎分享本文,转载请保留出处!

点赞

赞赏

PREVIOUS:Ascall对照表

NEXT:python3 + flask + sqlalchemy

文章导航

相关文章

我用Python一键爬取了:所有LOL英雄皮肤壁纸!

人生苦短,快学Python!

今天是教使用大家selenium,一键爬取LOL英雄皮肤壁纸。

第一步,先要进行网页分析 一、网页分析 进入LOL官网后,鼠标悬停在游戏资料上,等出现窗口,选择资料库,…

python爬取千图网_python爬取lol官网英雄图片代码

python爬取lol官网英雄图片代码可以帮助用户对英雄联盟官网平台的皮肤图片进行抓取,有很多喜欢lol的玩家们想要官方的英雄图片当作自己的背景或者头像,可以使用这款软件为你爬取图片资源,操作很简单,设置一些保存路径就可以将图片…

抓取 LOL 官网墙纸实现

闲来无事(蛋疼),随手实现了下 Controller 代码 <?php

namespace App\Http\Controllers\Image;use App\Http\Controllers\Controller;

use Illuminate\Filesystem\Filesystem as Fs;class ImageController extends Controller

{public sta…

nodejs http内置模块使用

/** * 创建一个 HTTP 服务,端口为 9000,满足如下需求 * GET /index.html 响应 page/index.html 的文件内容 * GET /css/app.css 响应 page/css/app.css 的文件内容 * GET /images/logo.png 响应 page/images/logo.png 的文件内…

【ONNXRuntime】python找不到指定的模块:onnxruntime\capi\onnxruntime_providers_shared.dll

问题:

使用源码推理的时候onnruntime 能够使用cuda,但是使用pyinstaller导出包之后,推理就会出现找不到 onnxruntime\capi\onnxruntime_providers_shared.dll错误。

原因:原因就是运行的时候没有找到动态链接库呗。

解决方法&a…

关于solidworks软件的显卡驱动

运行像solidworks这样的3D设计软件,装配体稍微复杂,一般的GTX游戏卡就无法胜任,这时候需要专门的图形卡加持。个人可以针对自己的装配体规模选择合适的图形卡,一般500-1000个零件以内的装配体,用丽台K4000完全可以胜任…

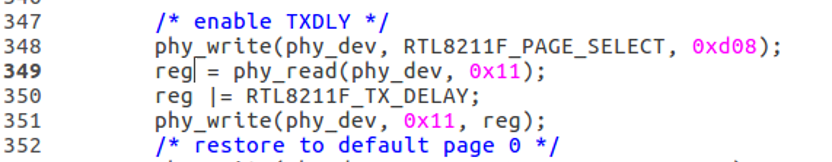

HI3798MV200驱动移植

目录

1.UBOOT配置修改方法

2.由EMMC启动改为SPI NAND FLASH 启动

3.网络调试

4.PHY复位

5.内核起来网络不通

6.增加RTC

7.PHY 灯ACT LINK 问题

8.PHY link状态查询

9.ETH0 网络状态灯修改 1.UBOOT配置修改方法

需要对应版本的HITOOL,个人也是废了很大劲&a…

用ChatGPT解析Wireshark抓取的数据包样例

用Wireshark抓取的数据包,常用于网络故障排查、分析和应用程序通信协议开发。其抓取的分组数据结果为底层数据,看起来比较困难,现在通过chatGPT大模型,可以将原始抓包信息数据提交给AI进行解析,本文即是进行尝试的样例…