Lab environment

All machines run CentOS 7.

| IP address | Hostname |
|---|---|
| 192.168.0.1 | k8s-master01 |
| 192.168.0.2 | k8s-master02 |
| 192.168.0.3 | k8s-master03 |
| 192.168.0.230 | k8s-vip |
| 192.168.0.4 | k8s-node01 |
| 192.168.0.5 | k8s-node02 |

Configure hostname resolution on all nodes

cat <<EOF >> /etc/hosts

192.168.0.1 k8s-master01

192.168.0.2 k8s-master02

192.168.0.3 k8s-master03

192.168.0.230 k8s-vip

192.168.0.4 k8s-node01

192.168.0.5 k8s-node02

EOF
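Because the heredoc above appends with `>>`, running it twice leaves duplicate lines in /etc/hosts. A minimal sketch of an idempotent alternative follows; the function name `add_host_entry` is hypothetical and not part of the original steps.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME  (hypothetical helper, for illustration only)
# Appends "IP NAME" to FILE unless NAME already appears as a hostname field.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Fields 2..NF of a hosts line are hostnames; exit 0 if NAME is among them.
    if awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

It would be called once per row of the table, for example `add_host_entry /etc/hosts 192.168.0.1 k8s-master01`.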

Configure the Aliyun mirror repositories on all nodes

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo # CentOS 7 base mirror

yum install -y yum-utils device-mapper-persistent-data lvm2 # dependencies needed by later steps

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's#http://#https://#g' /etc/yum.repos.d/CentOS-Base.repo

cat <<EOF > /etc/yum.repos.d/kubernetes.repo # Aliyun Kubernetes repository

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

Install the required tools on all nodes

yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

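Disable the firewall, dnsmasq, NetworkManager, and SELinux on all nodes (on cloud servers, remember to open the required access in the security group)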

systemctl disable --now firewalld

systemctl disable --now dnsmasq

systemctl disable --now NetworkManager

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

Disable swap on all nodes

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
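After the `sed` above has commented out the swap lines, it can be useful to confirm that no active swap entry is left in the fstab. The helper below is a small sketch; the name `active_swap_entries` is made up for this example.

```shell
#!/usr/bin/env bash
# active_swap_entries [FSTAB]  (hypothetical helper)
# Prints every fstab line that is not commented out and whose
# filesystem type (third field) is "swap". No output means swap
# will stay off after a reboot.
active_swap_entries() {
    awk '$1 !~ /^#/ && $3 == "swap"' "${1:-/etc/fstab}"
}
```

Running `active_swap_entries` with no argument inspects /etc/fstab; `swapon --show` can be used alongside it to check the running system.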

Configure resource limits on all nodes

ulimit -SHn 65535

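Meaning of the ulimit options:

-a Show the current resource limits.

-c <core file size> Maximum size of core files, in blocks.

-d <data segment size> Maximum size of a process's data segment, in KB.

-f <file size> Maximum size of files the shell can create, in KB.

-H Set the hard limit, i.e. the ceiling set by the administrator.

-m <memory size> Upper limit of usable memory, in KB.

-n <number of files> Maximum number of files that can be open at the same time.

-p <buffer size> Size of the pipe buffer, in units of 512 bytes.

-s <stack size> Upper limit of the stack, in KB.

-S Set the soft limit.

-t <CPU time> Upper limit of CPU time, in seconds.

-u <number of processes> Maximum number of processes a user can start.

# vim /etc/security/limits.conf

# Append the following to the end of the file to make the limits permanent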

cat << EOF >> /etc/security/limits.conf

* soft nofile 65536

* hard nofile 131072

* soft nproc 65535

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF

Set up passwordless SSH login for convenience

ssh-keygen -t rsa

for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02;do ssh-copy-id -i ~/.ssh/id_rsa.pub $i;done

Upgrade the kernel on all nodes

cd /root

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

yum update -y --exclude=kernel* && reboot

cd /root && yum localinstall -y kernel-ml*

Change the default boot kernel on all nodes

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

reboot

[root@k8s-master01 ~]# uname -a # check the running kernel

Linux k8s-master02 4.19.12-1.el7.elrepo.x86_64 #1 SMP Fri Dec 21 11:06:36 EST 2018 x86_64 x86_64 x86_64 GNU/Linux

Download and install the required packages

Install ipvsadm on all nodes (the administration tool for LVS/IPVS)

yum install ipvsadm ipset sysstat conntrack libseccomp -y

Configure the IPVS modules on all nodes. On kernel 4.19 and later, nf_conntrack_ipv4 has been renamed to nf_conntrack; on 4.18 and earlier, use nf_conntrack_ipv4 instead.


# Configure the kernel modules

cat << EOF >> /etc/modules-load.d/ipvs.conf # append the following module list

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp


nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

systemctl enable --now systemd-modules-load.service # load the kernel modules now and at every boot
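The conntrack module name depends on the kernel version, as noted earlier (nf_conntrack from 4.19 on, nf_conntrack_ipv4 before that). The sketch below picks the name from a kernel release string; `conntrack_module` is a hypothetical helper, and it assumes GNU `sort -V` is available, which is the case on CentOS 7.

```shell
#!/usr/bin/env bash
# conntrack_module KERNEL_RELEASE  (hypothetical helper)
# Prints the conntrack module name that matches the given kernel release.
conntrack_module() {
    local ver="${1%%-*}"   # drop the release suffix: 4.19.12-1.el7... -> 4.19.12
    local lowest
    # sort -V orders version strings; if 4.19 sorts first, the kernel is >= 4.19
    lowest=$(printf '%s\n%s\n' "4.19" "$ver" | sort -V | head -n1)
    if [ "$lowest" = "4.19" ]; then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
}

# Example: conntrack_module "$(uname -r)"
```

After a reboot, `lsmod | grep -e ip_vs -e nf_conntrack` shows whether the modules were actually loaded.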

Set the kernel parameters required by the Kubernetes cluster on all nodes:

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

net.ipv4.conf.all.route_localnet = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536


net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system
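When a sysctl file grows by copy and paste, a key can end up listed twice, and the later value silently wins. The following sketch lists such repeated keys; `duplicate_sysctl_keys` is a hypothetical name used only for this example.

```shell
#!/usr/bin/env bash
# duplicate_sysctl_keys FILE  (hypothetical helper)
# Prints each sysctl key that appears more than once in FILE.
duplicate_sysctl_keys() {
    awk -F= '
        /^[ \t]*$/    { next }             # skip blank lines
        /^[ \t]*[#;]/ { next }             # skip comment lines
        {
            key = $1
            gsub(/[ \t]/, "", key)         # "a.b = 1" and "a.b=1" share one key
            if (++seen[key] == 2) print key  # report every duplicate once
        }
    ' "$1"
}

# Example: duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
```

Keys that the running kernel does not know are a separate matter; `sysctl --system` reports those itself when it applies the file.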

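Installing the K8s components and the runtime

Install containerd on all nodes (it is pulled in as a dependency of the docker-ce packages)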

yum install docker-ce-20.10.* docker-ce-cli-20.10.* -y

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

modprobe -- overlay

modprobe -- br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

sysctl --system # apply the kernel parameters

mkdir -p /etc/containerd

containerd config default | tee /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml

sed -i 's#sandbox_image = "registry.k8s.io/pause:3.6"#sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6"#' /etc/containerd/config.toml

systemctl daemon-reload

systemctl enable --now containerd

cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

# After the configuration is done, restart containerd once more to avoid errors:

systemctl restart containerd
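The `sed` edits to config.toml fail silently if the pattern does not match, for instance when the generated default already differs. A quick check along these lines can confirm the cgroup driver setting; `uses_systemd_cgroup` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# uses_systemd_cgroup [CONFIG]  (hypothetical helper)
# Succeeds when the containerd config enables the systemd cgroup driver.
uses_systemd_cgroup() {
    grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' \
        "${1:-/etc/containerd/config.toml}"
}

# Example:
#   uses_systemd_cgroup || echo "SystemdCgroup is not enabled" >&2
```

The same approach works for the `sandbox_image` line, using `grep sandbox_image /etc/containerd/config.toml` to see which pause image is configured.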

Install the K8s components:

yum install kubeadm-1.25* kubelet-1.25* kubectl-1.25* -y

cat >/etc/sysconfig/kubelet<<EOF

KUBELET_KUBEADM_ARGS="--container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock"

EOF

systemctl daemon-reload

systemctl enable --now kubelet

Deploy the high-availability configuration on all master nodes

Install nginx on all master nodes

rpm -Uvh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

yum install -y nginx

sudo systemctl start nginx

sudo systemctl enable nginx

# After installation, configure nginx as a layer-4 (stream) proxy; see my earlier post for reference: https://blog.csdn.net/weixin_44932410/article/details/120155988

user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log notice;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

stream {
    upstream kube-apiserver {
        server 192.168.0.201:6443;
        server 192.168.0.202:6443;
        server 192.168.0.203:6443;
    }

    server {
        listen 16443;
        proxy_pass kube-apiserver;
    }
}
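Once nginx is running with the stream configuration, a simple TCP probe can show whether the proxy port and the backends accept connections. The sketch below relies on bash's built-in /dev/tcp redirection and the coreutils `timeout` command; `port_open` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# port_open HOST PORT  (hypothetical helper)
# Succeeds when a TCP connection to HOST:PORT can be opened within 2 seconds.
port_open() {
    local host="$1" port="$2"
    # bash opens a TCP socket when a redirection targets /dev/tcp/HOST/PORT
    timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Example: probe the local proxy port from the configuration above
#   port_open 127.0.0.1 16443 && echo "proxy port is listening"
```

Note that the backends on port 6443 only answer after the cluster has been initialized, so before that point only the 16443 listener is expected to respond. `nginx -t` validates the configuration file itself.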

Install and configure keepalived

yum install keepalived -y

On master01, edit the configuration: vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {                      # global definitions block
    router_id LVS_DEVEL            # router ID
    script_user root               # user that runs the scripts
    enable_script_security         # enable script security checks
}

vrrp_script chk_apiserver {        # health-check script for the API server
    script "/etc/keepalived/check_apiserver.sh"  # path of the check script
    interval 5                     # health-check interval
    weight -5                      # priority reduction on failure (optional)
    fall 2                         # failure threshold
    rise 1                         # success threshold
}

vrrp_instance VI_1 {               # VRRP instance: assigns the virtual IP and the HA role
    state MASTER                   # HA role of this node
    interface ens33                # network interface used for HA
    mcast_src_ip 192.168.0.201     # multicast source IP
    virtual_router_id 51           # unique ID of this VRRP instance
    priority 101                   # HA priority of this node
    advert_int 2                   # advertisement interval
    authentication {               # authentication block
        auth_type PASS             # authentication type
        auth_pass K8SHA_KA_AUTH    # password
    }
    virtual_ipaddress {            # virtual IP address
        192.168.0.230
    }
    track_script {                 # switch over based on the script state
        chk_apiserver
    }
}

On master02, edit the configuration: vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
    script_user root
    enable_script_security
}

vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    mcast_src_ip 192.168.0.202
    virtual_router_id 51
    priority 100                   # the priority is what distinguishes the nodes
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.0.230
    }
    track_script {
        chk_apiserver
    }
}

On master03, edit the configuration: vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
    script_user root
    enable_script_security
}

vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    mcast_src_ip 192.168.0.203
    virtual_router_id 51
    priority 99
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.0.230
    }
    track_script {
        chk_apiserver
    }
}

Create the health-check script: vim /etc/keepalived/check_apiserver.sh

#!/bin/bash
err=0
for k in $(seq 1 3)
do
    check_code=$(pgrep nginx)
    if [[ $check_code == "" ]]; then
        err=$(expr $err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done

if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi

Start nginx and keepalived on all master nodes

systemctl restart nginx
systemctl restart keepalived
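The health-check script is a bounded retry: it looks for an nginx process up to three times, one second apart, and stops keepalived only if every attempt fails, so that the VIP moves to another master. The same pattern can be sketched as a reusable function; `retry_check` is a hypothetical name and not part of the original script.

```shell
#!/usr/bin/env bash
# retry_check ATTEMPTS DELAY COMMAND...  (hypothetical helper)
# Runs COMMAND up to ATTEMPTS times, sleeping DELAY seconds between tries.
# Returns 0 as soon as one attempt succeeds, 1 if all of them fail.
retry_check() {
    local attempts="$1" delay="$2" i
    shift 2
    for ((i = 1; i <= attempts; i++)); do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        # pause between attempts, but not after the last one
        if ((i < attempts)); then
            sleep "$delay"
        fi
    done
    return 1
}

# Equivalent of the check above:
#   retry_check 3 1 pgrep nginx || /usr/bin/systemctl stop keepalived
```

keepalived can only run the script if it is executable, so `chmod +x /etc/keepalived/check_apiserver.sh` is probably needed as well; the steps here do not show it.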

Once the installation is complete, initialize the cluster:

Run on master01:

kubeadm init --apiserver-advertise-address=192.168.0.230 --pod-network-cidr=172.16.0.0/12 --service-cidr=10.10.0.5/15 --image-repository=registry.aliyuncs.com/google_containers --control-plane-endpoint="192.168.0.230:16443" --upload-certs --cri-socket=unix:///run/containerd/containerd.sock
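The `--service-cidr` value 10.10.0.5/15 has host bits set, so the network it actually denotes is not obvious at a glance. The pure-bash sketch below masks an IPv4 CIDR down to its network address; `cidr_network` is a hypothetical helper, and it assumes plain decimal octets without leading zeros.

```shell
#!/usr/bin/env bash
# cidr_network A.B.C.D/PREFIX  (hypothetical helper)
# Prints the network address of an IPv4 CIDR in the same notation.
cidr_network() {
    local ip="${1%/*}" prefix="${1#*/}"
    local a b c d
    IFS=. read -r a b c d <<< "$ip"
    # pack the four octets into one 32-bit integer
    local addr=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    # build the netmask: PREFIX one-bits followed by zero-bits
    local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    local net=$(( addr & mask ))
    printf '%d.%d.%d.%d/%d\n' \
        $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
        $(( (net >> 8) & 255 ))  $(( net & 255 )) "$prefix"
}

cidr_network 10.10.0.5/15     # prints 10.10.0.0/15
cidr_network 172.16.0.0/12    # prints 172.16.0.0/12
```

Writing the service range as 10.10.0.0/15 would express the same network more clearly, and comparing the normalized pod, service, and host networks is an easy way to spot overlaps.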

# See the official documentation for reference

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

# Enable permanent kubectl command completion

yum install bash-completion -y

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

Install the network plugin (Calico):

curl https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/calico-typha.yaml -o calico.yaml # note: this is the upstream URL, so the download may fail on some networks

kubectl apply -f calico.yaml # apply the manifest
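One thing worth checking in calico.yaml is the IP pool. As far as I know, the manifest ships with a commented-out `CALICO_IPV4POOL_CIDR` entry defaulting to 192.168.0.0/16, which would overlap the 192.168.0.x host network used here and differs from the `--pod-network-cidr` passed to kubeadm. The sketch below assumes the manifest contains exactly those two commented lines, so verify your copy first; `set_calico_pool_cidr` is a hypothetical helper and it needs GNU sed.

```shell
#!/usr/bin/env bash
# set_calico_pool_cidr MANIFEST CIDR  (hypothetical helper)
# Uncomments the CALICO_IPV4POOL_CIDR entry and sets its value to CIDR.
# Assumes the manifest holds the two commented lines
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
set_calico_pool_cidr() {
    local manifest="$1" cidr="$2"
    # "|" is the sed delimiter because the CIDR itself contains "/"
    sed -i \
        -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
        -e 's|#   value: "192.168.0.0/16"|  value: "'"$cidr"'"|' \
        "$manifest"
}

# Example, run before "kubectl apply":
#   set_calico_pool_cidr calico.yaml 172.16.0.0/12
```

Dropping the leading `# ` keeps the YAML indentation aligned with the neighbouring `env` entries, which is why the second replacement starts with two spaces.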

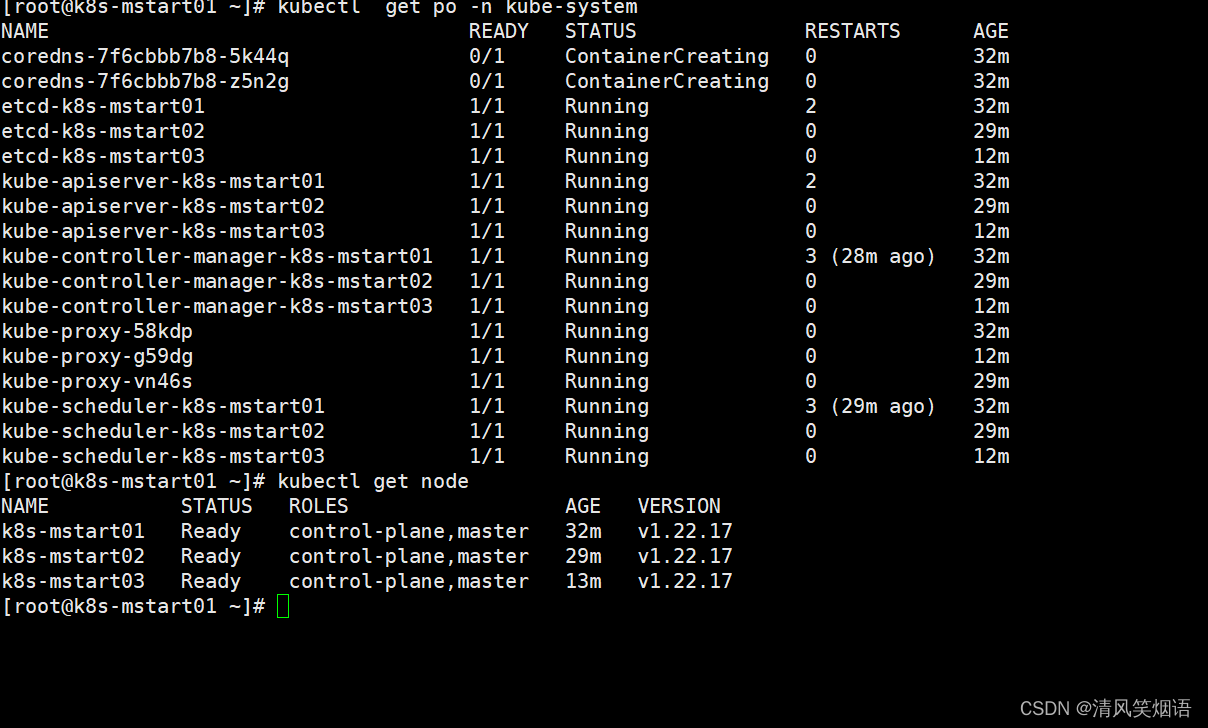

Check whether the installation succeeded

[root@k8s-mstart01 ~]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f6cbbb7b8-5k44q 0/1 ContainerCreating 0 32m

coredns-7f6cbbb7b8-z5n2g 0/1 ContainerCreating 0 32m

etcd-k8s-mstart01 1/1 Running 2 32m

etcd-k8s-mstart02 1/1 Running 0 29m

etcd-k8s-mstart03 1/1 Running 0 12m

kube-apiserver-k8s-mstart01 1/1 Running 2 32m

kube-apiserver-k8s-mstart02 1/1 Running 0 29m

kube-apiserver-k8s-mstart03 1/1 Running 0 12m

kube-controller-manager-k8s-mstart01 1/1 Running 3 (28m ago) 32m

kube-controller-manager-k8s-mstart02 1/1 Running 0 29m

kube-controller-manager-k8s-mstart03 1/1 Running 0 12m

kube-proxy-58kdp 1/1 Running 0 32m

kube-proxy-g59dg 1/1 Running 0 12m

kube-proxy-vn46s 1/1 Running 0 29m

kube-scheduler-k8s-mstart01 1/1 Running 3 (29m ago) 32m

kube-scheduler-k8s-mstart02 1/1 Running 0 29m

kube-scheduler-k8s-mstart03 1/1 Running 0 12m

Next, check that the nodes are healthy

[root@k8s-mstart01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-mstart01 Ready control-plane,master 32m v1.22.17

k8s-mstart02 Ready control-plane,master 29m v1.22.17

k8s-mstart03 Ready control-plane,master 13m v1.22.17
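For scripted checks, the `kubectl get node` table can be filtered so that only nodes that are not ready remain. This is a small sketch that reads the table from standard input; `not_ready_nodes` is a hypothetical name.

```shell
#!/usr/bin/env bash
# not_ready_nodes  (hypothetical helper)
# Reads "kubectl get node" output on stdin and prints the name of every
# node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example:
#   kubectl get node | not_ready_nodes
```

An empty result means every node reports Ready. A cordoned node shows `Ready,SchedulingDisabled` and is therefore listed too, which may or may not be what you want.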
