Introduction

Stateless components are highly available almost by construction: you simply run more replicas.

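Stateful components are much harder to make highly available. The usual approach is leader election, which guarantees that only one instance is processing business logic at any given moment.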

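To make its own components highly available, Kubernetes deploys several replicas of each one, for example multiple instances of apiserver, scheduler, and controller-manager. The apiserver is stateless, so every replica can serve requests. The scheduler and controller-manager are stateful: only one instance may be active at a time, so they need leader election.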

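Kubernetes implements this with the leaderelection package. Among Kubernetes' own components, kube-scheduler and kube-controller-manager use leader election, and this mechanism is what guarantees their high availability. Under normal conditions only one replica of kube-scheduler or kube-controller-manager runs the business logic, while the other replicas keep trying to acquire the lock and compete for leadership. If the current leader's process exits for some reason, or it loses the lock, the other replicas compete to become the new leader, and the winner then runs the business logic.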

The client-go package exposes this functionality to users. The code lives under client-go/tools/leaderelection.

How to use it

Because client-go wraps most of the logic for us, using it is very simple:

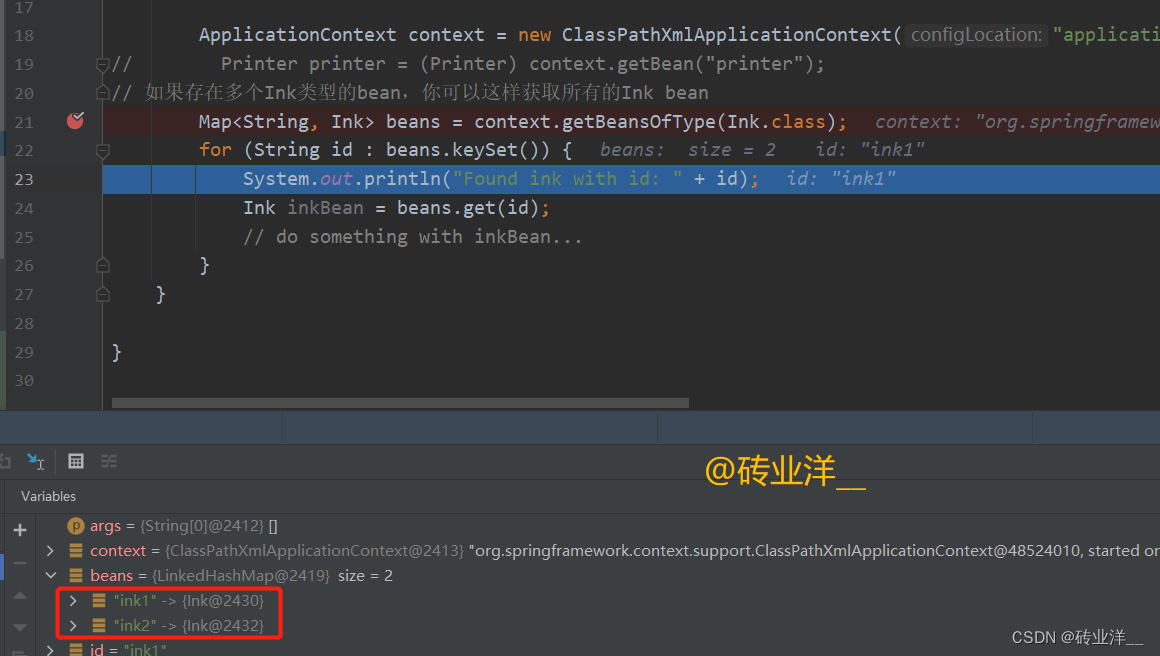

    rl, err := resourcelock.New(
        resourcelock.EndpointsResourceLock,
        "namespace",
        lockName,
        ctx.KubeClient.CoreV1(),
        resourcelock.ResourceLockConfig{
            Identity:      id,
            EventRecorder: ctx.Recorder("namespace"),
        },
    )
    if err != nil {
        // Fatalf already terminates the process, so no panic is needed after it.
        log.Fatalf("error creating lock: %v", err)
    }

    // Try and become the leader and start cloud controller manager loops
    leaderelection.RunOrDie(context.Background(), leaderelection.LeaderElectionConfig{
        Lock:          rl,
        LeaseDuration: ctx.LeaseDuration,
        RenewDeadline: ctx.RenewDeadline,
        RetryPeriod:   ctx.RetryPeriod,
        Callbacks: leaderelection.LeaderCallbacks{
            OnStartedLeading: func(_ context.Context) {
                log.Infof("cmdb running in leader elect")
                run(ctx)
            },
            OnStoppedLeading: func() {
                log.Fatalf("leaderelection lost")
            },
        },
    })

First, a resource lock object is created.

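Then the election starts. The business code hands callback methods to leaderelection, which calls the one that matches the election result. There are three callbacks:

- OnStartedLeading: called when this instance becomes the leader
- OnStoppedLeading: called when this instance loses leadership
- OnNewLeader: called when the leader changes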
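To make the three callbacks concrete, here is a minimal standard-library sketch of when each one would fire. The `callbacks` and `elector` types and the `observe` method are hypothetical names invented for this illustration; they are not the client-go types, only an approximation of the idea.

```go
package main

import "fmt"

// callbacks is a hypothetical, simplified stand-in for the callback set
// that the business code hands to the election logic.
type callbacks struct {
	onStartedLeading func()
	onStoppedLeading func()
	onNewLeader      func(identity string)
}

// elector remembers the last leader it saw and fires callbacks on changes.
type elector struct {
	id     string // identity of this instance
	leader string // last observed leader, "" if none yet
	cb     callbacks
}

// observe is called with the leader currently recorded in the lock.
func (e *elector) observe(leader string) {
	if leader == e.leader {
		return // nothing changed, no callback
	}
	wasLeader := e.leader == e.id
	e.leader = leader
	if e.cb.onNewLeader != nil {
		e.cb.onNewLeader(leader)
	}
	switch {
	case leader == e.id:
		e.cb.onStartedLeading()
	case wasLeader:
		e.cb.onStoppedLeading()
	}
}

// runScenario lets instance "a" win, stay leader, then lose to "b".
func runScenario() []string {
	var events []string
	e := &elector{id: "a", cb: callbacks{
		onStartedLeading: func() { events = append(events, "started") },
		onStoppedLeading: func() { events = append(events, "stopped") },
		onNewLeader:      func(id string) { events = append(events, "new:"+id) },
	}}
	e.observe("a") // a becomes leader
	e.observe("a") // a renews, no transition
	e.observe("b") // b takes over
	return events
}

func main() {
	fmt.Println(runScenario())
}
```

In this sketch the leader-changed callback is optional while the other two are required, which is why only it is nil-checked.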

Let's look at the source code to see how this is implemented.

Source code analysis

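The basic idea behind leaderelection is to build a distributed lock on top of Kubernetes ConfigMap or Endpoints resources. The process that acquires the lock becomes the leader and periodically renews its lease. The other processes keep trying to take the lock; when an attempt fails, they wait for the next cycle. When the leader dies and its lease expires, one of the other processes becomes the new leader.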
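The lease idea can be sketched without Kubernetes at all. The following is a minimal in-memory illustration, assuming a single shared `leaseStore` in place of the ConfigMap or Endpoints object and integer seconds in place of real timestamps; all names here are hypothetical and chosen only for this example.

```go
package main

import (
	"fmt"
	"sync"
)

// leaseRecord is a hypothetical, simplified stand-in for the record
// stored in the lock object.
type leaseRecord struct {
	Holder    string // identity of the current leader
	RenewedAt int64  // time of the last renewal, in seconds
}

// leaseStore plays the role of the shared resource. It lives in memory
// only, so it illustrates the rules, not the distribution.
type leaseStore struct {
	mu       sync.Mutex
	duration int64 // lease duration in seconds
	rec      *leaseRecord
}

// tryAcquireOrRenew reports whether id holds the lease after the call.
func (s *leaseStore) tryAcquireOrRenew(id string, now int64) bool {
	s.mu.Lock()
	defer s.mu.Unlock()
	if s.rec == nil { // nobody holds the lock yet
		s.rec = &leaseRecord{Holder: id, RenewedAt: now}
		return true
	}
	if s.rec.Holder != id && now < s.rec.RenewedAt+s.duration {
		return false // held by someone else and not yet expired
	}
	// Either we are renewing our own lease or taking over an expired one.
	s.rec.Holder = id
	s.rec.RenewedAt = now
	return true
}

func main() {
	s := &leaseStore{duration: 15}
	fmt.Println(s.tryAcquireOrRenew("a", 0))  // a acquires the free lease
	fmt.Println(s.tryAcquireOrRenew("b", 5))  // b is rejected, lease still valid
	fmt.Println(s.tryAcquireOrRenew("a", 10)) // a renews
	fmt.Println(s.tryAcquireOrRenew("b", 30)) // a stopped renewing, b takes over
}
```

A real implementation additionally relies on the API server's optimistic concurrency so that two candidates cannot both win the same update; the mutex above only stands in for that guarantee.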

To support locks backed by different resource types, leaderelection defines an interface:

    type Interface interface {
        // Get returns the LeaderElectionRecord
        Get() (*LeaderElectionRecord, error)

        // Create attempts to create a LeaderElectionRecord
        Create(ler LeaderElectionRecord) error

        // Update will update an existing LeaderElectionRecord
        Update(ler LeaderElectionRecord) error

        // RecordEvent is used to record events
        RecordEvent(string)

        // Identity will return the locks Identity
        Identity() string

        // Describe is used to convert details on current resource lock
        // into a string
        Describe() string
    }

There are currently two implementations, backed by ConfigMap and Endpoints resources.

Following the usage example above, step one is to create a resource lock. The corresponding source is:

    func New(lockType string, ns string, name string, client corev1.CoreV1Interface, rlc ResourceLockConfig) (Interface, error) {
        switch lockType {
        case EndpointsResourceLock:
            return &EndpointsLock{
                EndpointsMeta: metav1.ObjectMeta{
                    Namespace: ns,
                    Name:      name,
                },
                Client:     client,
                LockConfig: rlc,
            }, nil
        case ConfigMapsResourceLock:
            return &ConfigMapLock{
                ConfigMapMeta: metav1.ObjectMeta{
                    Namespace: ns,
                    Name:      name,
                },
                Client:     client,
                LockConfig: rlc,
            }, nil
        default:
            return nil, fmt.Errorf("Invalid lock-type %s", lockType)
        }
    }

As you can see, the return value is the interface type, backed by either the ConfigMap or the Endpoints lock implementation.
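Because callers only see the interface, any backend that can store a record can act as a lock. The sketch below illustrates that with a hypothetical in-memory backend and a factory of the same shape as `New`. The `lock` interface, `record` type, `memoryLock`, and `newLock` are trimmed-down inventions for this example, not the client-go definitions.

```go
package main

import (
	"errors"
	"fmt"
)

// record is a hypothetical, trimmed-down election record.
type record struct {
	HolderIdentity string
}

// lock is a hypothetical, trimmed-down version of the lock interface.
type lock interface {
	Get() (*record, error)
	Create(r record) error
	Update(r record) error
	Describe() string
}

var errNotFound = errors.New("lock record not found")

// memoryLock keeps the record in process memory.
type memoryLock struct {
	name string
	rec  *record
}

func (m *memoryLock) Get() (*record, error) {
	if m.rec == nil {
		return nil, errNotFound
	}
	cp := *m.rec // return a copy so callers cannot mutate the stored record
	return &cp, nil
}

func (m *memoryLock) Create(r record) error {
	if m.rec != nil {
		return errors.New("lock record already exists")
	}
	m.rec = &r
	return nil
}

func (m *memoryLock) Update(r record) error {
	if m.rec == nil {
		return errNotFound
	}
	m.rec = &r
	return nil
}

func (m *memoryLock) Describe() string { return "memory/" + m.name }

// newLock picks an implementation by type name and returns the interface.
func newLock(lockType, name string) (lock, error) {
	switch lockType {
	case "memory":
		return &memoryLock{name: name}, nil
	default:
		return nil, fmt.Errorf("invalid lock type %q", lockType)
	}
}

func main() {
	l, err := newLock("memory", "demo")
	if err != nil {
		panic(err)
	}
	if _, err := l.Get(); errors.Is(err, errNotFound) {
		_ = l.Create(record{HolderIdentity: "a"}) // no record yet: create it
	}
	r, _ := l.Get()
	fmt.Println(l.Describe(), "held by", r.HolderIdentity)
}
```

An in-memory backend like this is only useful inside a single process, for example in unit tests of code written against the interface.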

Step two is to start the election. The corresponding source is:

    func RunOrDie(ctx context.Context, lec LeaderElectionConfig) {
        le, err := NewLeaderElector(lec)
        if err != nil {
            panic(err)
        }
        if lec.WatchDog != nil {
            lec.WatchDog.SetLeaderElection(le)
        }
        le.Run(ctx)
    }

    func (le *LeaderElector) Run(ctx context.Context) {
        defer func() {
            runtime.HandleCrash()
            le.config.Callbacks.OnStoppedLeading()
        }()
        if !le.acquire(ctx) {
            return // ctx signalled done
        }
        ctx, cancel := context.WithCancel(ctx)
        defer cancel()
        go le.config.Callbacks.OnStartedLeading(ctx)
        le.renew(ctx)
    }

The code above tries to acquire the lock and then calls the matching business callback depending on the outcome. Next, the actual election logic:
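The control flow of `Run` as quoted above can be reduced to a small standard-library sketch. The `steps` struct and the `run` and `trace` functions are hypothetical names for this illustration. The point it makes is that, in the version quoted here, the stopped-leading callback is deferred and therefore fires on every exit path, including when the lock was never acquired.

```go
package main

import "fmt"

// steps is a hypothetical bundle of the four pieces that Run wires together.
type steps struct {
	acquire          func() bool // blocks until leadership is won or the caller gives up
	renew            func()      // blocks for as long as the lease can be renewed
	onStartedLeading func()
	onStoppedLeading func()
}

// run follows the same order as the quoted Run method.
func run(s steps) {
	defer s.onStoppedLeading() // deferred, so it runs on every exit path
	if !s.acquire() {
		return
	}
	go s.onStartedLeading() // business logic runs concurrently with renewal
	s.renew()
}

// trace runs one election round and returns the callbacks that fired, in order.
func trace(acquired bool) []string {
	var events []string
	started := make(chan struct{})
	run(steps{
		acquire: func() bool { return acquired },
		// Pretend the lease is lost right after the business logic started.
		// Waiting on the channel also orders the two appends safely.
		renew: func() { <-started },
		onStartedLeading: func() {
			events = append(events, "started")
			close(started)
		},
		onStoppedLeading: func() { events = append(events, "stopped") },
	})
	return events
}

func main() {
	fmt.Println(trace(true))  // leadership won, then lost
	fmt.Println(trace(false)) // leadership never won
}
```

This is why the usage example treats the stopped-leading callback as fatal: once it fires, the process should no longer act as the leader.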

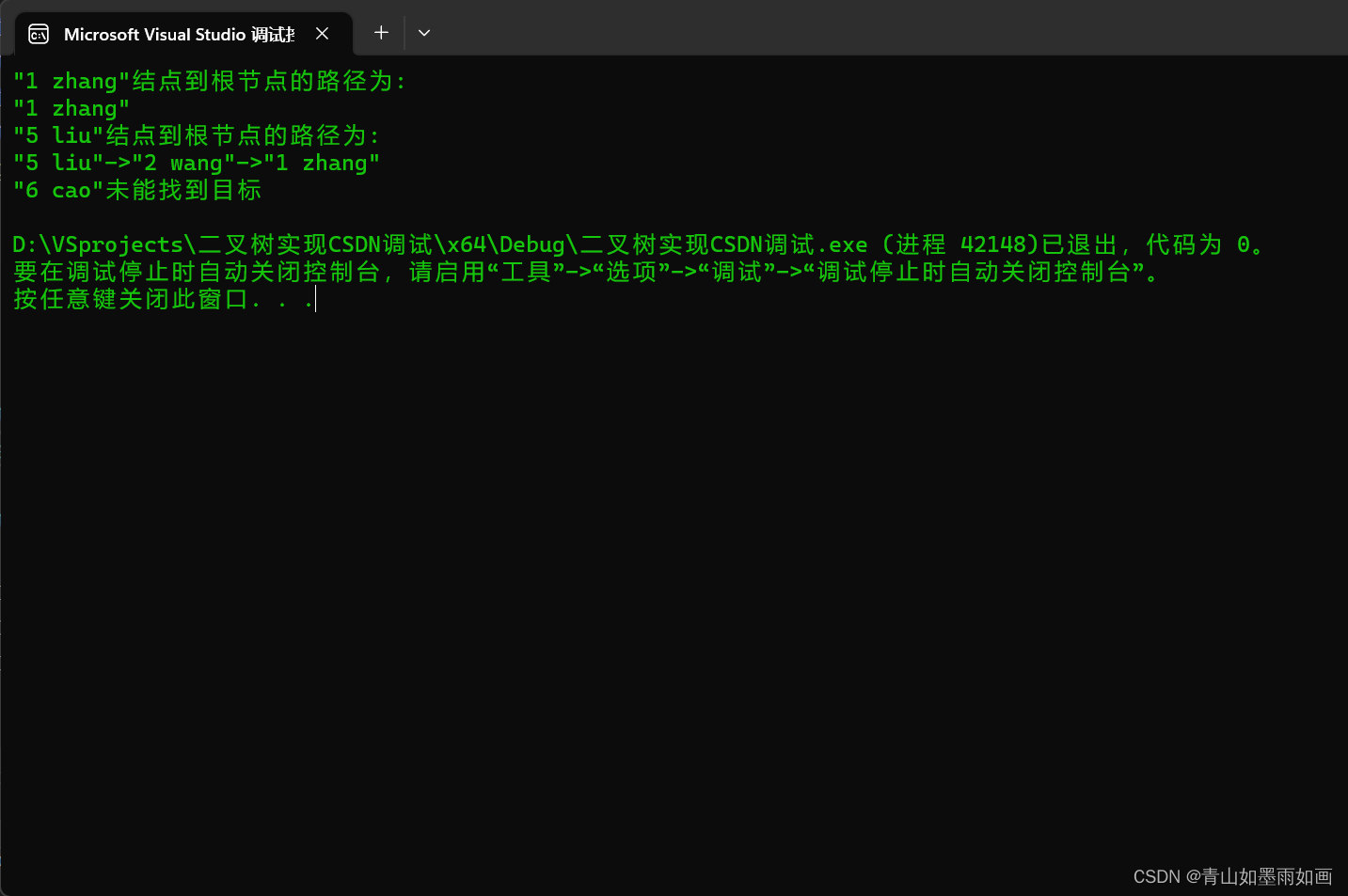

    func (le *LeaderElector) acquire(ctx context.Context) bool {
        ctx, cancel := context.WithCancel(ctx)
        defer cancel()
        succeeded := false
        desc := le.config.Lock.Describe()
        klog.Infof("attempting to acquire leader lease %v...", desc)
        wait.JitterUntil(func() {
            succeeded = le.tryAcquireOrRenew()
            le.maybeReportTransition()
            if !succeeded {
                klog.V(4).Infof("failed to acquire lease %v", desc)
                return
            }
            le.config.Lock.RecordEvent("became leader")
            klog.Infof("successfully acquired lease %v", desc)
            cancel()
        }, le.config.RetryPeriod, JitterFactor, true, ctx.Done())
        return succeeded
    }

wait.JitterUntil runs the given function periodically. On each run the function does the following:

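- Try to acquire the lock.
- If that fails, return immediately and try again when the next period comes around.
- If it succeeds, this instance is now the leader and can run the business logic, so it cancels the context to stop the periodic task.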
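The retry loop described in these steps can be approximated with the standard library alone. The sketch below swaps `wait.JitterUntil` for a plain `time.Ticker`, so it has no jitter; `retryUntil`, `attemptsNeeded`, and `gaveUp` are hypothetical helper names for this illustration.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// retryUntil calls try once per period until it returns true or ctx is done.
// It reports whether try eventually succeeded.
func retryUntil(ctx context.Context, period time.Duration, try func() bool) bool {
	ticker := time.NewTicker(period)
	defer ticker.Stop()
	for {
		if try() {
			return true // we hold the lock now, stop retrying
		}
		select {
		case <-ctx.Done():
			return false // the caller gave up before the lock was acquired
		case <-ticker.C:
			// next period reached, loop around and try again
		}
	}
}

// attemptsNeeded simulates a lock that becomes free on the n-th attempt.
func attemptsNeeded(n int) (bool, int) {
	attempts := 0
	ok := retryUntil(context.Background(), time.Millisecond, func() bool {
		attempts++
		return attempts >= n
	})
	return ok, attempts
}

// gaveUp simulates a lock that never becomes free before the context times out.
func gaveUp() bool {
	ctx, cancel := context.WithTimeout(context.Background(), 20*time.Millisecond)
	defer cancel()
	return !retryUntil(ctx, time.Millisecond, func() bool { return false })
}

func main() {
	ok, n := attemptsNeeded(3)
	fmt.Println(ok, n)
	fmt.Println(gaveUp())
}
```

Jitter matters in a real cluster because it keeps many candidates from hitting the API server at exactly the same moment; the fixed-period ticker here trades that away for brevity.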

Now the code that actually acquires the lock:

    func (le *LeaderElector) tryAcquireOrRenew() bool {
        now := metav1.Now()
        leaderElectionRecord := rl.LeaderElectionRecord{
            HolderIdentity:       le.config.Lock.Identity(),
            LeaseDurationSeconds: int(le.config.LeaseDuration / time.Second),
            RenewTime:            now,
            AcquireTime:          now,
        }

        // 1. obtain or create the ElectionRecord
        oldLeaderElectionRecord, err := le.config.Lock.Get()
        if err != nil {
            if !errors.IsNotFound(err) {
                klog.Errorf("error retrieving resource lock %v: %v", le.config.Lock.Describe(), err)
                return false
            }
            if err = le.config.Lock.Create(leaderElectionRecord); err != nil {
                klog.Errorf("error initially creating leader election record: %v", err)
                return false
            }
            le.observedRecord = leaderElectionRecord
            le.observedTime = le.clock.Now()
            return true
        }

        // 2. Record obtained, check the Identity & Time
        if !reflect.DeepEqual(le.observedRecord, *oldLeaderElectionRecord) {
            le.observedRecord = *oldLeaderElectionRecord
            le.observedTime = le.clock.Now()
        }
        if le.observedTime.Add(le.config.LeaseDuration).After(now.Time) &&
            !le.IsLeader() {
            klog.V(4).Infof("lock is held by %v and has not yet expired", oldLeaderElectionRecord.HolderIdentity)
            return false
        }

        // 3. We're going to try to update. The leaderElectionRecord is set to its default
        // here. Let's correct it before updating.
        if le.IsLeader() {
            leaderElectionRecord.AcquireTime = oldLeaderElectionRecord.AcquireTime
            leaderElectionRecord.LeaderTransitions = oldLeaderElectionRecord.LeaderTransitions
        } else {
            leaderElectionRecord.LeaderTransitions = oldLeaderElectionRecord.LeaderTransitions + 1
        }

        // update the lock itself
        if err = le.config.Lock.Update(leaderElectionRecord); err != nil {
            klog.Errorf("Failed to update lock: %v", err)
            return false
        }
        le.observedRecord = leaderElectionRecord
        le.observedTime = le.clock.Now()
        return true
    }

The logic is as follows:

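- Fetch the lock record, LeaderElectionRecord.
- If it does not exist, there is no leader yet, so create the record and become the leader.
- If it exists, a leader has already been elected. Check by identity whether that leader is this instance. If it is not and the lock has not expired, return and wait for the next invocation from wait. If this instance is the leader, or the lock has expired, update the lock record: the instance either renews its lease or becomes the new leader.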
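One detail in the quoted function is easy to miss: expiry is measured from the moment this candidate last saw the record change (`observedTime`), not from the `RenewTime` written by the holder, so the candidates' clocks do not need to agree. The sketch below isolates that check and the transition counter. The `record` and `candidate` types and the integer-second clock are simplifications invented for this example.

```go
package main

import "fmt"

// record is a hypothetical, simplified election record.
type record struct {
	Holder      string
	RenewTime   int64 // written by the holder using the holder's own clock
	Transitions int   // number of leadership changes so far
}

// candidate is one instance's local view of the lock.
type candidate struct {
	id           string
	lease        int64  // lease duration in seconds
	observed     record // last record this candidate saw
	observedTime int64  // local time at which observed last changed
}

// mayUpdate reports whether this candidate may write the lock now.
func (c *candidate) mayUpdate(current record, now int64) bool {
	if current != c.observed {
		// The record changed since we last looked: restart our local timer.
		c.observed = current
		c.observedTime = now
	}
	if current.Holder == c.id {
		return true // we are the leader and may always renew
	}
	// Someone else holds the lock: only proceed once a full lease has
	// passed, on our clock, without the record changing.
	return now >= c.observedTime+c.lease
}

// next builds the record to write once mayUpdate has returned true.
func (c *candidate) next(current record, now int64) record {
	n := record{Holder: c.id, RenewTime: now, Transitions: current.Transitions}
	if current.Holder != c.id {
		n.Transitions++ // taking over counts as a leadership transition
	}
	return n
}

func main() {
	b := &candidate{id: "b", lease: 15}
	rec := record{Holder: "a", RenewTime: 100}
	fmt.Println(b.mayUpdate(rec, 0))  // first sighting, timer starts
	fmt.Println(b.mayUpdate(rec, 10)) // unchanged, lease not yet over
	rec.RenewTime = 112               // a renews, so the record changes
	fmt.Println(b.mayUpdate(rec, 12)) // timer restarts at 12
	fmt.Println(b.mayUpdate(rec, 27)) // no change for a full lease
	fmt.Println(b.next(rec, 27))
}
```

Note that the holder's `RenewTime` of 100 or 112 never enters the comparison; only the fact that the record changed does.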

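The lock handling above goes through the interface methods. As mentioned earlier, there are two implementations, ConfigMap and Endpoints. Taking Endpoints as the example, here is how it handles the lock record: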

    func (el *EndpointsLock) Get() (*LeaderElectionRecord, error) {
        var record LeaderElectionRecord
        var err error
        el.e, err = el.Client.Endpoints(el.EndpointsMeta.Namespace).Get(el.EndpointsMeta.Name, metav1.GetOptions{})
        if err != nil {
            return nil, err
        }
        if el.e.Annotations == nil {
            el.e.Annotations = make(map[string]string)
        }
        if recordBytes, found := el.e.Annotations[LeaderElectionRecordAnnotationKey]; found {
            if err := json.Unmarshal([]byte(recordBytes), &record); err != nil {
                return nil, err
            }
        }
        return &record, nil
    }

    // Create attempts to create a LeaderElectionRecord annotation
    func (el *EndpointsLock) Create(ler LeaderElectionRecord) error {
        recordBytes, err := json.Marshal(ler)
        if err != nil {
            return err
        }
        el.e, err = el.Client.Endpoints(el.EndpointsMeta.Namespace).Create(&v1.Endpoints{
            ObjectMeta: metav1.ObjectMeta{
                Name:      el.EndpointsMeta.Name,
                Namespace: el.EndpointsMeta.Namespace,
                Annotations: map[string]string{
                    LeaderElectionRecordAnnotationKey: string(recordBytes),
                },
            },
        })
        return err
    }

As the code shows, implementing the interface amounts to providing a place to store the lock record. Resources in Kubernetes exist for workloads to use, and rather than introduce a dedicated resource type just to represent a component lock, Kubernetes stores the lock record in the annotations of the resource behind the interface implementation.
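Storing the record in an annotation is just a JSON round trip through a string map. The sketch below shows that round trip with the standard library. The `object` type, the `annotationKey` value, and the reduced `record` fields are placeholders chosen for this example; they are not the client-go constants or types.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// annotationKey is an illustrative key chosen for this sketch only.
const annotationKey = "example.com/leader"

// record is a hypothetical, reduced election record.
type record struct {
	HolderIdentity       string `json:"holderIdentity"`
	LeaseDurationSeconds int    `json:"leaseDurationSeconds"`
	LeaderTransitions    int    `json:"leaderTransitions"`
}

// object stands in for any API object that carries metadata annotations.
type object struct {
	Annotations map[string]string
}

// setRecord serializes the record into the annotation.
func (o *object) setRecord(r record) error {
	b, err := json.Marshal(r)
	if err != nil {
		return err
	}
	if o.Annotations == nil {
		o.Annotations = make(map[string]string)
	}
	o.Annotations[annotationKey] = string(b)
	return nil
}

// getRecord parses the annotation back into a record. Like the quoted Get
// method, it returns a zero record when the annotation is absent.
func (o *object) getRecord() (record, error) {
	var r record
	raw, found := o.Annotations[annotationKey]
	if !found {
		return r, nil
	}
	err := json.Unmarshal([]byte(raw), &r)
	return r, err
}

func main() {
	o := &object{}
	if err := o.setRecord(record{HolderIdentity: "a", LeaseDurationSeconds: 15}); err != nil {
		panic(err)
	}
	fmt.Println(o.Annotations[annotationKey])
	r, err := o.getRecord()
	fmt.Println(r.HolderIdentity, err)
}
```

Since annotations are plain strings, the lock record is also visible to anyone who inspects the object, which makes it easy to see who currently holds leadership.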