Paper: A commentary on the father of LSTM's brief history of the decade of deep learning, "The 2010s: Our Decade of Deep Learning / Outlook on the 2020s"

Contents

The 2010s: Our Decade of Deep Learning / Outlook on the 2020s

1. The Decade of Long Short-Term Memory (LSTM)

2. The Decade of Feedforward Neural Networks

3. LSTMs & FNNs, especially CNNs. LSTMs v FNNs

4. GANs: the Decade's Most Famous Application of our Curiosity Principle (1990)

5. Other Hot Topics of the 2010s: Deep Reinforcement Learning, Meta-Learning, World Models, Distilling NNs, Neural Architecture Search, Attention Learning, Fast Weights, Self-Invented Problems ...

6. The Future of Data Markets and Privacy

7. Outlook: 2010s v 2020s - Virtual AI v Real AI?

Acknowledgments

References

The 2010s: Our Decade of Deep Learning / Outlook on the 2020s

A previous post [MIR] (2019) focused on our Annus Mirabilis 1990-1991 at TU Munich. Back then we published many of the basic ideas that powered the Artificial Intelligence Revolution of the 2010s through Artificial Neural Networks (NNs) and Deep Learning. The present post is partially redundant but much shorter (a 7-minute read). It focuses on the recent decade's most important developments and applications based on our work, also mentions related work, and concludes with an outlook on the 2020s, also addressing privacy and data markets. The table of contents is given at the top of this post.

1. The Decade of Long Short-Term Memory (LSTM)

Much of AI in the 2010s was about the NN called Long Short-Term Memory (LSTM) [LSTM1-13] [DL4]. The world is sequential by nature, and LSTM has revolutionized sequential data processing: speech recognition, machine translation, video recognition, connected handwriting recognition, robotics, video game playing, time series prediction, chat bots, healthcare applications, you name it. By 2019, [LSTM1] received more citations per year than any other computer science paper of the past millennium. Below I list some of the most visible and historically most significant applications.

2009: Connected Handwriting Recognition. Enormous interest from industry was triggered right before the 2010s when, out of the blue, my PhD student Alex Graves won three connected handwriting competitions (French, Farsi, Arabic) at ICDAR 2009, the famous conference on document analysis and recognition. He used a combination of two methods developed in my research groups at TU Munich and the Swiss AI Lab IDSIA: LSTM (1990s-2005) [LSTM1-6], which overcomes the famous vanishing gradient problem analyzed by my PhD student Sepp Hochreiter in 1991 [VAN1], and Connectionist Temporal Classification [CTC] (2006). CTC-trained LSTM was the first recurrent NN (RNN) [MC43] [K56] to win international contests.
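
To make the vanishing-gradient remark more concrete, here is a minimal sketch of one step of a single-unit LSTM in the common form with input, forget, and output gates. It is scalar and pure Python, and the weights are hypothetical hand-picked values rather than learned ones; real LSTMs use vectors and weight matrices. The point is the additive cell update c = f*c + i*g: with the forget gate near 1 and the input gate near 0, a stored value is carried almost unchanged across many time steps.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, w):
    """One step of a single-unit LSTM.

    `w` maps each gate name to a hypothetical (input weight,
    recurrent weight, bias) triple chosen for illustration.
    """
    def pre(name):
        wx, wh, b = w[name]
        return wx * x + wh * h_prev + b

    i = sigmoid(pre("input"))    # how much new content to write
    f = sigmoid(pre("forget"))   # how much of the old cell state to keep
    o = sigmoid(pre("output"))   # how much of the cell state to expose
    g = math.tanh(pre("cell"))   # candidate content
    c = f * c_prev + i * g       # additive update of the cell state
    h = o * math.tanh(c)         # hidden output
    return h, c

# Hypothetical weights: forget gate biased wide open, input gate nearly shut.
weights = {
    "input": (0.0, 0.0, -10.0),
    "forget": (0.0, 0.0, 10.0),
    "output": (0.0, 0.0, 0.0),
    "cell": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0  # start with the value 1.0 stored in the cell
for _ in range(100):
    h, c = lstm_step(0.5, h, c, weights)
print(round(c, 3))  # prints 0.998
```

Because the cell state is updated additively instead of being squashed through a nonlinearity at every step, the stored value decays only by the factor f per step, so after 100 steps it is still about 0.998.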

CTC-Trained LSTM also was the First Superior End-to-End Neural Speech Recognizer. Already in 2007, our team successfully applied CTC-LSTM to speech [LSTM4], also with hierarchical LSTM stacks [LSTM14]. This was very different from previous hybrid methods, used since the late 1980s, which combined NNs with traditional approaches such as Hidden Markov Models (HMMs), e.g., [BW] [BRI] [BOU] [HYB12]. Alex kept using CTC-LSTM as a postdoc in Toronto [LSTM8]. CTC-LSTM has had massive industrial impact. By 2015, it dramatically improved Google's speech recognition [GSR15] [DL4], which is now on almost every smartphone. By 2016, more than a quarter of the power of all the Tensor Processing Units in Google's datacenters was used for LSTM (and 5% for convolutional NNs) [JOU17]. Google's on-device speech recognition of 2019 (no longer running on the server) is still based on LSTM. See [MIR], Sec. 4. Microsoft, Baidu, Amazon, Samsung, Apple, and many other famous companies are using LSTM, too [DL4] [DL1].
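
CTC lets the network emit one label distribution per input frame, including a special "blank" symbol. It maps a frame-level path to a transcription by merging repeated labels and then deleting blanks. The simplest decoder, greedy (best-path) decoding, picks the most likely symbol per frame and then applies that collapse rule. The sketch below assumes the per-frame probabilities are already given (the LSTM that would produce them is omitted). It also assumes that index 0 is the blank; both the toy probabilities and the alphabet are illustrative.

```python
from itertools import groupby

BLANK = 0  # index reserved for the CTC blank (assumed convention)

def ctc_collapse(path):
    """Map a frame-level label path to a label sequence:
    merge consecutive repeats, then drop blanks."""
    return [label for label, _ in groupby(path) if label != BLANK]

def greedy_ctc_decode(frame_probs):
    """Best-path decoding: argmax per frame, then collapse."""
    best_path = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    return ctc_collapse(best_path)

ALPHABET = {1: "a", 2: "b"}  # hypothetical label set
frame_probs = [
    [0.1, 0.8, 0.1],    # a
    [0.2, 0.7, 0.1],    # a (repeat, merged)
    [0.9, 0.05, 0.05],  # blank separates the two a's
    [0.3, 0.6, 0.1],    # a
    [0.1, 0.2, 0.7],    # b
    [0.2, 0.1, 0.7],    # b (repeat, merged)
    [0.6, 0.2, 0.2],    # blank
]
decoded = greedy_ctc_decode(frame_probs)
print("".join(ALPHABET[k] for k in decoded))  # prints aab
```

The blank is what allows genuinely repeated letters ("aa") to survive the merging of repeats.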

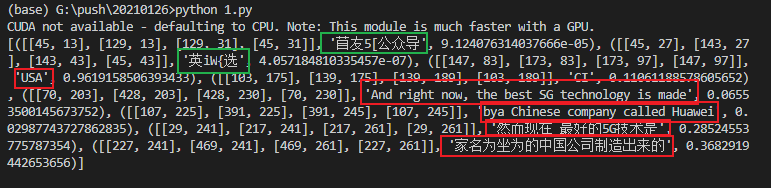

2016: The First Superior End-to-End Neural Machine Translation was also Based on LSTM. Already in 2001, my PhD student Felix Gers showed that LSTM can learn languages unlearnable by traditional models such as HMMs [LSTM13]. That is, a neural "subsymbolic" model suddenly excelled at learning "symbolic" tasks! Compute still had to get 1000 times cheaper, but by 2016-17, both Google Translate [GT16] [WU] (which mentions LSTM over 50 times) and Facebook Translate [FB17] were based on two connected LSTMs [S2S], one for incoming texts and one for outgoing translations, performing much better than what existed before [DL4]. By 2017, Facebook's users made 30 billion LSTM-based translations per week [FB17] [DL4]. For comparison: the most popular YouTube video (the song "Despacito") got only 6 billion clicks in 2 years. See [MIR], Sec. 4.

LSTM-Based Robotics. By 2003, our team used LSTM for Reinforcement Learning (RL) and robotics, e.g., [LSTM-RL]. In the 2010s, combinations of RL and LSTM became standard. For example, in 2018, an RL-trained LSTM was the core of OpenAI's Dactyl, which learned to control a dexterous robot hand without a teacher [OAI1].

2018-2019: LSTM for Video Games. In 2019, DeepMind beat a pro player in the game of StarCraft, which is in many ways harder than Chess or Go [DM2], using AlphaStar, whose brain has a deep LSTM core trained by RL [DM3]. An RL-trained LSTM (accounting for 84% of the model's total parameter count) was also the core of OpenAI Five, which learned to defeat human experts in the Dota 2 video game (2018) [OAI2] [OAI2a]. See [MIR], Sec. 4. The 2010s saw many additional LSTM applications, e.g., [DL1]. LSTM was used for healthcare, chemistry, molecule design, lip reading [LIP1], stock market prediction, self-driving cars, mapping brain signals to speech (Nature, vol. 568, 2019), predicting what's going on in nuclear fusion reactors (same volume, p. 526), etc. There is not enough space to mention everything here.

2. The Decade of Feedforward Neural Networks

LSTM is an RNN that can in principle implement any program that runs on your laptop. The more limited feedforward NNs (FNNs) cannot (although they are good enough for board games such as Backgammon [T94], Go [DM2], and Chess). That is, if we want to build an NN-based Artificial General Intelligence (AGI), then its underlying computational substrate must be something like an RNN. FNNs are fundamentally insufficient: RNNs relate to FNNs like general computers relate to mere calculators. Nevertheless, our Decade of Deep Learning was also about FNNs, as described next.

2010: Deep FNNs Don't Need Unsupervised Pre-Training! In 2009, many thought that deep FNNs cannot learn much without unsupervised pre-training [MIR] [UN0-UN5]. But in 2010, our team with my postdoc Dan Ciresan [MLP1] showed that deep FNNs can be trained by plain backpropagation [BP1] (compare [BPA] [BPB] [BP2] [R7]) and do not at all require unsupervised pre-training for important applications. Our system set a new performance record [MLP1] on MNIST, the famous and widely used image recognition benchmark of the time. This was achieved by greatly accelerating traditional FNNs on highly parallel graphics processing units (GPUs). A reviewer called this a "wake-up call to the machine learning community." Today, very few commercial NN applications are still based on unsupervised pre-training (which was used in my first deep learner of 1991). See [MIR], Sec. 19.

2011: CNN-Based Computer Vision Revolution. Our team in Switzerland (Dan Ciresan et al.) greatly sped up the convolutional NNs (CNNs) invented and developed by others since the 1970s [CNN1-4]. The first superior award-winning CNN, often called "DanNet," was created in 2011 [GPUCNN1,3,5]. It was a practical breakthrough: much deeper and faster than earlier GPU-accelerated CNNs [GPUCNN]. Already in 2011, it showed that deep learning worked far better than the existing state of the art for recognizing objects in images. In fact, it won 4 important computer vision competitions in a row between May 15, 2011, and September 10, 2012 [GPUCNN5], before a similar GPU-accelerated CNN from the University of Toronto won the ImageNet 2012 contest [GPUCNN4-5] [R6].
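
The core operation that DanNet and other CNNs accelerate on GPUs is convolution: one small kernel, whose weights are shared across all image positions, is slid over the input. Below is a plain-Python sketch of a single-channel "valid" 2D convolution (strictly a cross-correlation, as in most deep learning libraries). The image and kernel are hypothetical toy values; real implementations batch this over many channels and images on a GPU.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding (single channel)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for r in range(out_h):
        for c in range(out_w):
            # The same kernel weights are reused at every (r, c): weight sharing.
            out[r][c] = sum(kernel[i][j] * image[r + i][c + j]
                            for i in range(kh) for j in range(kw))
    return out

image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1]]  # hypothetical 1x2 kernel responding to left-to-right increases
feature_map = conv2d_valid(image, kernel)
print(feature_map)  # prints [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The feature map lights up only where the intensity jumps, i.e., at the vertical edge, using just two shared weights.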

At IJCNN 2011 in Silicon Valley, DanNet blew away the competition through the first superhuman visual pattern recognition in a contest. Even the New York Times mentioned this. It was also the first deep CNN to win a Chinese handwriting contest (ICDAR 2011), an image segmentation contest (ISBI, May 2012), and a contest on object detection in large images (ICPR, 10 Sept 2012), which was at the same time a medical imaging contest on cancer detection (all before ImageNet 2012 [GPUCNN4-5] [R6]). Our CNN image scanners were 1000 times faster than previous methods [SCAN], which is tremendously important for health care etc. Today IBM, Siemens, Google and many startups are pursuing this approach. Much of modern computer vision extends the work of 2011, e.g., [MIR], Sec. 19.

Already in 2010, we introduced our deep and fast GPU-based NNs to Arcelor Mittal, the world's largest steel maker, and were able to greatly improve steel defect detection through CNNs [ST] (before ImageNet 2012). This may have been the first Deep Learning breakthrough in heavy industry, and it helped to jump-start our company NNAISENSE. The early 2010s saw several other applications of our Deep Learning methods.

Through my students Rupesh Kumar Srivastava and Klaus Greff, the LSTM principle also led to our Highway Networks [HW1] of May 2015, the first working very deep FNNs with hundreds of layers. Microsoft's popular ResNets [HW2] (which won the ImageNet 2015 contest) are a special case thereof. The earlier Highway Nets perform roughly as well as ResNets on ImageNet [HW3]. Highway layers are also often used for natural language processing, where the simpler residual layers do not work as well [HW3].
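
The special-case relationship can be written out. A highway layer computes y = H(x)·T(x) + x·C(x), where H is an ordinary nonlinear transform, T a "transform gate," and C a "carry gate" (commonly tied as C = 1 - T). Fixing both gates permanently at 1 gives y = H(x) + x, the residual layer of ResNets. The sketch below works elementwise on Python lists with hypothetical H and T functions; a real layer uses learned weight matrices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def highway(x, transform, gate, carry):
    """Elementwise highway layer: y_k = H(x)_k * T(x)_k + x_k * C(x)_k."""
    h, t, c = transform(x), gate(x), carry(x)
    return [hk * tk + xk * ck for hk, tk, xk, ck in zip(h, t, x, c)]

def H(x):
    # Hypothetical nonlinear transform (a real layer would use a weight matrix).
    return [math.tanh(2.0 * v - 1.0) for v in x]

def T(x):
    # Hypothetical transform gate with values in (0, 1).
    return [sigmoid(3.0 * v - 2.0) for v in x]

def tied_carry(x):
    # Usual highway choice: carry gate C = 1 - T.
    return [1.0 - t for t in T(x)]

def always_open(x):
    # Gate fixed at 1 for every unit.
    return [1.0] * len(x)

x = [0.5, -1.0, 2.0]
y_highway = highway(x, H, T, tied_carry)
# With T = C = 1 the layer reduces to the residual update y = H(x) + x.
y_residual = highway(x, H, always_open, always_open)
```

With tied gates, each output lies between H(x) and x, so the layer can learn to pass its input through almost unchanged. This is what made very deep stacks trainable.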

3. LSTMs & FNNs, especially CNNs. LSTMs v FNNs

In the recent Decade of Deep Learning, the recognition of static patterns (such as images) was mostly driven by CNNs (which are FNNs; see Sec. 2), while sequence processing (such as speech, text, etc.) was mostly driven by LSTMs (which are RNNs [MC43] [K56]; see Sec. 1). Often CNNs and LSTMs were combined, e.g., for video recognition. FNNs and LSTMs also invaded each other's territories on occasion. Two examples:

Business Week called LSTM "arguably the most commercial AI achievement" [AV1]. As mentioned above, by 2019, [LSTM1] received more citations per year than all other computer science papers of the past millennium [R5]. The citation record holder of the new millennium is an FNN related to LSTM: ResNet [HW2] (Dec 2015) is a special case of our Highway Net (May 2015) [HW1], the FNN version of vanilla LSTM [LSTM2].

4. GANs: the Decade's Most Famous Application of our Curiosity Principle (1990)

Another concept that became very popular in the 2010s is Generative Adversarial Networks (GANs), e.g., [GAN0] (2010) [GAN1] (2014). GANs are an instance of my popular adversarial curiosity principle from 1990 [AC90, AC90b] (see also survey [AC09]). This principle works as follows. One NN probabilistically generates outputs; another NN sees those outputs and predicts environmental reactions to them. Using gradient descent, the predictor NN minimizes its error, while the generator NN tries to make outputs that maximize this error. One net's loss is the other net's gain. GANs are a special case of this where the environment simply returns 1 or 0 depending on whether the generator's output is in a given set [AC19]. (Other early adversarial machine learning settings [S59] [H90] neither involved unsupervised NNs nor were about modeling data nor used gradient descent [AC19].) Compare [SLG] [R2] [AC18] and [MIR], Sec. 5.
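
The minimax dynamic described above can be illustrated with a deliberately tiny toy. It is not the 1990 system and not a GAN, and unlike the text's generator it is deterministic rather than probabilistic. A "generator" parameter x is the output, and a hypothetical environment reacts with r = 2x. A linear "predictor" with weight w predicts that reaction as w*x. The predictor does gradient descent on the squared prediction error, while the generator does gradient ascent on the same error. For simplicity, the generator differentiates through the known toy environment. All names, the environment, and the learning rate are assumptions.

```python
def environment(x):
    # Hypothetical environment whose reaction the predictor must learn.
    return 2.0 * x

def adversarial_curiosity(steps=500, lr=0.01, x=1.0, w=0.0):
    """Predictor w minimizes (w*x - r)^2; generator x maximizes it."""
    for _ in range(steps):
        error = w * x - environment(x)      # prediction error
        grad_w = 2.0 * error * x            # d(error^2)/dw
        grad_x = 2.0 * error * (w - 2.0)    # d(error^2)/dx, using r = 2x
        w -= lr * grad_w                    # predictor: descend on the error
        x += lr * grad_x                    # generator: ascend on the error
    return x, w, (w * x - environment(x)) ** 2

x, w, final_error = adversarial_curiosity()
print(f"x={x:.3f}  w={w:.6f}  error={final_error:.2e}")
```

Early on, the generator pushes x to where the predictor is wrong. Once the predictor has learned w = 2, no output yields any error, and the generator's incentive vanishes: one net's loss is the other net's gain, in miniature.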

5. Other Hot Topics of the 2010s: Deep Reinforcement Learning, Meta-Learning, World Models, Distilling NNs, Neural Architecture Search, Attention Learning, Fast Weights, Self-Invented Problems ...

In July 2013, our Compressed Network Search [CO2] was the first deep learning model to successfully learn control policies directly from high-dimensional sensory input (video) using deep reinforcement learning (RL) (see survey in Sec. 6 of [DL1]), without any unsupervised pre-training (extending earlier work on large NNs with compact codes, e.g., [KO0] [KO2]; compare more recent work [WAV1] [OAI3]). This also helped to jump-start our company NNAISENSE. A few months later, neuroevolution-based RL (see survey [K96]) also successfully learned to play Atari games [H13]. Soon afterwards, the company DeepMind also had a Deep RL system for high-dimensional sensory input [DM1] [DM2]. See [MIR], Sec. 8. By 2016, DeepMind had a famous superhuman Go player [DM4]. The company was founded in 2010, by some counts the decade's first year. The first DeepMinders with AI publications and PhDs in computer science came from my lab: a co-founder and employee no. 1.

Our work since 1990 on RL and planning based on a combination of two RNNs, called the controller and the world model [PLAN2-6], also became popular in the 2010s. See [MIR], Sec. 11. (The decade's end also saw a very simple yet novel approach to the old problem of RL [UDRL].) For decades, few cared about our work on meta-learning or learning to learn since 1987, e.g., [META1] [FASTMETA1-3] [R3]. In the 2010s, meta-learning finally became a hot topic [META10] [META17]. The same holds for our work since 1990 on Artificial Curiosity & Creativity [MIR] (Sec. 5, Sec. 6) [AC90-AC10] and Self-Invented Problems [MIR] (Sec. 12) in POWERPLAY style (2011) [PP] [PP1] [PP2]. See, e.g., [AC18].

The same holds for our work since 1990 on Hierarchical RL, e.g., [HRL0] [HRL1] [HRL2] [HRL4] (see [MIR], Sec. 10), Deterministic Policy Gradients [AC90], e.g., [DPG] [DDPG] (see [MIR], Sec. 14), and Synthetic Gradients [NAN1-NAN4], e.g., [NAN5] (see [MIR], Sec. 15). Likewise for our work since 1991 on encoding data by factorial disentangled representations through adversarial NNs [PM2] [PM1] and other methods [LOC] (compare [IG] and [MIR], Sec. 7). It also holds for our work on end-to-end-differentiable systems that learn by gradient descent to quickly manipulate NNs with Fast Weights [FAST0-FAST3a] [R4]. These separate storage and control like in traditional computers, but in a fully neural way (rather than in a hybrid fashion [PDA1] [PDA2] [DNC] [DNC2]). See [MIR], Sec. 8. And likewise for our work since 2009 on Neural Architecture Search for LSTM-like architectures that outperform vanilla LSTM in certain applications [LSTM7], e.g., [NAS]. The same goes for our work since 1991 on compressing or distilling NNs into other NNs [UN0] [UN1], e.g., [DIST2] [R4]. See [MIR], Sec. 2.
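
A minimal reading of the fast-weight idea works as follows. A slow network emits a key k and a value v at each step, and the fast weight matrix W is updated additively by their outer product, W <- W + v k^T. A later query q retrieves W q. The sketch below omits the slow network and feeds hand-picked, hypothetical key/value vectors; the additive outer-product rule is an assumption chosen as the simplest variant. It shows storage (W) separated from control (what gets written and read), and that retrieval is exact when the keys are orthonormal.

```python
def outer(v, k):
    """Outer product v k^T as a list of rows."""
    return [[vi * kj for kj in k] for vi in v]

def add_inplace(W, D):
    for row_w, row_d in zip(W, D):
        for j, d in enumerate(row_d):
            row_w[j] += d

def matvec(W, q):
    return [sum(wij * qj for wij, qj in zip(row, q)) for row in W]

dim = 3
W = [[0.0] * dim for _ in range(dim)]  # fast weights start empty

# Hypothetical (key, value) pairs that a slow network might emit.
memories = [
    ([1.0, 0.0, 0.0], [0.2, 0.4, 0.6]),
    ([0.0, 1.0, 0.0], [-1.0, 0.0, 1.0]),
]
for key, value in memories:
    add_inplace(W, outer(value, key))  # write: W += v k^T

retrieved = matvec(W, [0.0, 1.0, 0.0])  # read with the second key
print(retrieved)  # prints [-1.0, 0.0, 1.0]
```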

Already in the early 1990s, we had both of the now common types of neural sequential attention: end-to-end-differentiable "soft" attention (in latent space) [FAST2] through multiplicative units within networks [DEEP1-2] (1965), and "hard" attention (in observation space) in the context of RL [ATT0] [ATT1]. This led to lots of follow-up work. In the 2010s, many have used sequential attention-learning NNs. See [MIR], Sec. 9.
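
"Soft" attention is end-to-end differentiable because the focus is a set of continuous weights that multiply the attended items, rather than a hard choice of a single item. Below is a minimal sketch of the now-common softmax form; it is not the specific early-1990s formulation, and the scores and values are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def soft_attention(scores, values):
    """Return the attention weights and the weighted sum of value vectors."""
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context

weights, context = soft_attention([2.0, 0.0, -1.0],
                                  [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

Hard attention would instead select a single index (e.g., the argmax). That choice is not differentiable, which is why it is typically trained with RL, as in the RL setting mentioned above.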

Many other concepts of the previous millennium [DL1] [MIR] had to wait for the much faster computers of the 2010s to become popular. As mentioned in Sec. 21 of ref [MIR], surveys from the Anglosphere do not always make clear [DLC] that Deep Learning was invented where English is not an official language. It started in 1965 in Ukraine (then part of the USSR) with the first nets of arbitrary depth that really learned [DEEP1-2] [R8]. Five years later, modern backpropagation was published "next door" in Finland (1970) [BP1]. The basic deep convolutional NN architecture (now widely used) was invented in the 1970s in Japan [CNN1], where NNs with convolutions were later (1987) also combined with "weight sharing" and backpropagation [CNN1a]. We are standing on the shoulders of these authors and many others; see the 888 references in ref [DL1].

Of course, Deep Learning is just a small part of AI, in most applications limited to passive pattern recognition. We view it as a by-product of our research on more general artificial intelligence. That research includes optimal universal learning machines such as the Gödel machine (2003-) and asymptotically optimal search for programs running on general-purpose computers such as RNNs.

6. The Future of Data Markets and Privacy

AIs are trained by data. If it is true that data is the new oil, then it should have a price, just like oil. In the 2010s, the major surveillance platforms (e.g., Sec. 1) [SV1] did not offer you any money for your data and the resulting loss of privacy. The 2020s, however, will see attempts at creating efficient data markets to figure out your data's true financial value through the interplay between supply and demand. Even some sensitive medical data will be priced not by governmental regulators but by the patients (and healthy persons) who own it. They may sell parts of it as micro-entrepreneurs in a healthcare data market [SR18] [CNNTV2].

Are surveillance and loss of privacy inevitable consequences of increasingly complex societies? Super-organisms such as cities, states, and companies consist of numerous people, just like people consist of numerous cells. These cells enjoy little privacy. They are constantly monitored by specialized "police cells" and "border guard cells": Are you a cancer cell? Are you an external intruder, a pathogen? Individual cells sacrifice their freedom for the benefits of being part of a multicellular organism.

The same holds for super-organisms such as nations [FATV]. Over 5000 years ago, writing enabled recorded history and thus became its inaugural and most important invention. Its initial purpose, however, was to facilitate surveillance, to track citizens and their tax payments. The more complex a super-organism, the more comprehensive its collection of information about its components.

200 years ago, at least the parish priest in each village knew everything about all the village people, even about those who did not confess, because they appeared in the confessions of others. Also, everyone soon knew about a stranger who had entered the village, because some people occasionally peered out of the window, and what they saw got around. Such control mechanisms were temporarily lost through anonymization in rapidly growing cities. They are now returning with the help of new surveillance devices such as smartphones, which are part of digital nervous systems that tell companies and governments a lot about billions of users [SV1] [SV2]. Cameras and drones [DR16] etc. are becoming ever tinier and ubiquitous, and excellent recognition of faces, gaits, etc. is becoming cheaper and cheaper. Soon many will use it to identify others anywhere on earth, and the big wide world will then offer no more privacy than the local village. Is this good or bad? Anyway, some nations may find it easier than others to become more complex kinds of super-organisms at the expense of the privacy rights of their constituents [FATV].

7. Outlook: 2010s v 2020s - Virtual AI v Real AI?

In the 2010s, AI excelled in virtual worlds, e.g., in video games, board games, and especially on the major WWW platforms (Sec. 1). Most AI profits were in marketing. Passive pattern recognition through NNs helped some of the most valuable companies, such as Amazon, Alibaba, Google, Facebook, and Tencent, to keep you longer on their platforms, to predict which items you might be interested in, to make you click on tailored ads, etc. However, marketing is just a tiny part of the world economy. What will the next decade bring?

In the 2020s, Active AI will increasingly invade the real world, driving industrial processes, machines, and robots, a bit like in the movies. (Better self-driving cars [CAR1] will be part of this, especially fleets of simple electric cars with small & cheap batteries [CAR2].) The real world is much more complex and less forgiving than virtual worlds. Still, the coming wave of "Real World AI," or simply "Real AI," will be much bigger than the previous AI wave, because it will affect all of production and thus a much bigger part of the economy. That's why NNAISENSE is all about Real AI.

Some claim that big platform companies with lots of data from many users will dominate AI. That's absurd. How does a baby learn to become intelligent? Not "by downloading lots of data from Facebook" [NAT2]. No, it learns by actively creating its own data through its own self-invented experiments with toys etc. It learns to predict the consequences of its actions, and uses this predictive model of physics and the world to become a better and better planner and problem solver [AC90] [PLAN2-6].

We already know how to build AIs that also learn a bit like babies, using what I have called artificial curiosity since 1990 [AC90-AC10] [PP-PP2]. They incorporate mechanisms that aid in reasoning [FAST3a] [DNC] [DNC2] and in the extraction of abstract objects from raw data [UN1] [OBJ1-3]. In the not too distant future, this will help to create what I have called in interviews see-and-do robotics. The idea is to quickly teach an NN to control a complex robot with many degrees of freedom to execute complex tasks, such as assembling a smartphone. It would learn solely from visual demonstration and from being talked to, without anyone touching or otherwise directly guiding the robot, a bit like we'd teach a kid [FA18]. This will revolutionize many aspects of our civilization.

Sure, such AIs have military applications, too. But although an AI arms race seems inevitable [SPE17], almost all of AI research in the 2020s will be about making human lives longer, healthier, easier, and happier [SR18]. Our motto is: AI For All! AI won't be controlled by a few big companies or governments. Since 1941, compute has been getting 10 times cheaper every 5 years [ACM16]. This trend won't break anytime soon. Everybody will own cheap but powerful AIs improving her/his life in many ways. So much for the 2020s. In the more distant future, most self-driven, self-replicating, curious, creative, and conscious AIs [INV16] will go where most of the physical resources are. They will eventually colonize and transform the entire visible universe [ACM16] [SA17] [FA15] [SP16], which may be just one of countably many computable universes [ALL1-3].
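
The "10 times cheaper every 5 years" trend quoted above implies a cost-reduction factor of 10^(Δt/5) over Δt years. Here is a quick check that treats the trend as an idealized exponential, which is an assumption since real price-performance data are noisier. Over the 15 years from 2001 to 2016 it gives exactly the factor of 1000 mentioned in Sec. 1.

```python
def cheapening_factor(years, tenfold_every=5.0):
    """How many times cheaper compute gets over `years`, assuming an
    idealized trend of 10x cheaper every `tenfold_every` years."""
    return 10 ** (years / tenfold_every)

print(cheapening_factor(15))           # 2001 -> 2016: prints 1000.0
print(f"{cheapening_factor(80):.0e}")  # 1941 -> 2021: prints 1e+16
```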

Acknowledgments

Thanks to several expert reviewers for useful comments. (Let me know at juergen@idsia.ch if you can spot any remaining error.) The contents of this article may be used for educational and non-commercial purposes, including articles for Wikipedia and similar sites. Many additional publications of the past decade can be found on my publication page and my arXiv page.

References

Paper: References for the father of LSTM's brief history of the decade of deep learning, "The 2010s: Our Decade of Deep Learning / Outlook on the 2020s"