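The previous article, "Live Streaming H264 Video over RTMP", covered streaming over RTMP. This article describes how to live-stream real-time H264 video over RTSP.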

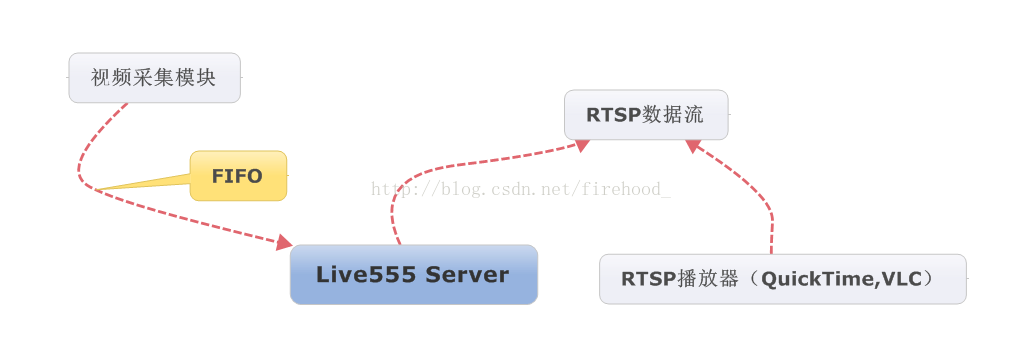

The approach is to hand the video stream to live555 and let live555 do the actual H264 live streaming.

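The video capture module sends H264 data frames to live555 through a FIFO (a named pipe). When live555 receives an RTSP play request from a client, it starts reading H264 video data from the FIFO and streams it out over RTSP. The whole process is shown in the figure above.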
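The FIFO hand-off can be tried on its own before bringing live555 into the picture. The following is a minimal POSIX-only sketch (Linux/Unix, not Windows) that does not use live555 at all: it creates a named pipe, writes some bytes into the write end and reads them back from the read end, the same mechanism the capture module and the media server use. FifoRoundTrip and the path passed to it are hypothetical names chosen for illustration.

```cpp
#include <fcntl.h>
#include <string>
#include <sys/stat.h>
#include <unistd.h>

// Hypothetical helper (not part of the article's code): create a FIFO at
// `path`, push `msg` through it and read it back in the same process.
// Returns true if every byte was written and the bytes read back equal `msg`.
bool FifoRoundTrip(const char *path, const std::string &msg, std::string &out)
{
    unlink(path);                                  // start from a clean FIFO
    if (mkfifo(path, 0666) != 0)
        return false;

    // Open the read end first; O_NONBLOCK keeps open() from waiting for a writer.
    int rd = open(path, O_RDONLY | O_NONBLOCK);
    if (rd == -1) { unlink(path); return false; }

    // A reader now exists, so a non-blocking open for writing succeeds.
    int wr = open(path, O_WRONLY | O_NONBLOCK);
    if (wr == -1) { close(rd); unlink(path); return false; }

    ssize_t n = write(wr, msg.data(), msg.size());
    close(wr);                                     // no writers left: reader sees EOF after the data

    out.clear();
    char buf[256];
    ssize_t len;
    while ((len = read(rd, buf, sizeof buf)) > 0)
        out.append(buf, static_cast<size_t>(len));

    close(rd);
    unlink(path);
    return n == static_cast<ssize_t>(msg.size()) && out == msg;
}
```

In the real setup the two ends live in different processes: the sender opens the write end and WW_H264VideoSource in the media server opens the read end.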

Adapting and modifying the LIVE555 Media Server

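Download the live555 source code, add four files to the mediaServer directory (the one that contains live555MediaServer.cpp), and modify live555MediaServer.cpp. The four new files are: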

WW_H264VideoServerMediaSubsession.h

WW_H264VideoServerMediaSubsession.cpp

WW_H264VideoSource.h

WW_H264VideoSource.cpp

The source code of the four files follows:

WW_H264VideoServerMediaSubsession.h

#pragma once
#include "liveMedia.hh"
#include "BasicUsageEnvironment.hh"
#include "GroupsockHelper.hh"
#include "OnDemandServerMediaSubsession.hh"
#include "WW_H264VideoSource.h"

class WW_H264VideoServerMediaSubsession : public OnDemandServerMediaSubsession
{
public:
    WW_H264VideoServerMediaSubsession(UsageEnvironment & env, FramedSource * source);
    ~WW_H264VideoServerMediaSubsession(void);

public:
    virtual char const * getAuxSDPLine(RTPSink * rtpSink, FramedSource * inputSource);
    virtual FramedSource * createNewStreamSource(unsigned clientSessionId, unsigned & estBitrate); // "estBitrate" is the stream's estimated bitrate, in kbps
    virtual RTPSink * createNewRTPSink(Groupsock * rtpGroupsock, unsigned char rtpPayloadTypeIfDynamic, FramedSource * inputSource);
    static WW_H264VideoServerMediaSubsession * createNew(UsageEnvironment & env, FramedSource * source);
    static void afterPlayingDummy(void * ptr);
    static void chkForAuxSDPLine(void * ptr);
    void chkForAuxSDPLine1();

private:
    FramedSource * m_pSource;
    char * m_pSDPLine;
    RTPSink * m_pDummyRTPSink;
    char m_done;
};

WW_H264VideoServerMediaSubsession.cpp

#include "WW_H264VideoServerMediaSubsession.h"

WW_H264VideoServerMediaSubsession::WW_H264VideoServerMediaSubsession(UsageEnvironment & env, FramedSource * source)
    : OnDemandServerMediaSubsession(env, True) // True: all clients share the first source
{
    m_pSource = source;
    m_pSDPLine = 0;
    m_pDummyRTPSink = 0;
    m_done = 0;
}

WW_H264VideoServerMediaSubsession::~WW_H264VideoServerMediaSubsession(void)
{
    if (m_pSDPLine)
    {
        free(m_pSDPLine);
    }
}

WW_H264VideoServerMediaSubsession * WW_H264VideoServerMediaSubsession::createNew(UsageEnvironment & env, FramedSource * source)
{
    return new WW_H264VideoServerMediaSubsession(env, source);
}

FramedSource * WW_H264VideoServerMediaSubsession::createNewStreamSource(unsigned clientSessionId, unsigned & estBitrate)
{
    estBitrate = 500; // kbps; output parameter, a rough estimate to tune for your encoder
    // Wrap the FIFO reader in a framer that parses the raw H264 byte stream into NAL units
    return H264VideoStreamFramer::createNew(envir(), new WW_H264VideoSource(envir()));
}

RTPSink * WW_H264VideoServerMediaSubsession::createNewRTPSink(Groupsock * rtpGroupsock, unsigned char rtpPayloadTypeIfDynamic, FramedSource * inputSource)
{
    return H264VideoRTPSink::createNew(envir(), rtpGroupsock, rtpPayloadTypeIfDynamic);
}

char const * WW_H264VideoServerMediaSubsession::getAuxSDPLine(RTPSink * rtpSink, FramedSource * inputSource)
{
    if (m_pSDPLine)
    {
        return m_pSDPLine;
    }
    // The SDP "a=fmtp" line needs the SPS/PPS, which the sink only knows after they
    // have passed through it, so start a dummy playback and wait until they show up.
    m_pDummyRTPSink = rtpSink;
    //mp_dummy_rtpsink->startPlaying(*source, afterPlayingDummy, this);
    m_pDummyRTPSink->startPlaying(*inputSource, 0, 0);
    m_done = 0; // clear BEFORE the first check, which may already set it
    chkForAuxSDPLine(this);
    envir().taskScheduler().doEventLoop(&m_done);
    m_pSDPLine = strdup(m_pDummyRTPSink->auxSDPLine());
    m_pDummyRTPSink->stopPlaying();
    return m_pSDPLine;
}

void WW_H264VideoServerMediaSubsession::afterPlayingDummy(void * ptr)
{
    WW_H264VideoServerMediaSubsession * This = (WW_H264VideoServerMediaSubsession *)ptr;
    This->m_done = 0xff;
}

void WW_H264VideoServerMediaSubsession::chkForAuxSDPLine(void * ptr)
{
    WW_H264VideoServerMediaSubsession * This = (WW_H264VideoServerMediaSubsession *)ptr;
    This->chkForAuxSDPLine1();
}

void WW_H264VideoServerMediaSubsession::chkForAuxSDPLine1()
{
    if (m_pDummyRTPSink->auxSDPLine())
    {
        m_done = 0xff; // SPS/PPS available: leave the nested event loop
    }
    else
    {
        // Not yet: check again after one frame interval
        double delay = 1000.0 / (FRAME_PER_SEC); // ms
        int to_delay = delay * 1000; // us
        nextTask() = envir().taskScheduler().scheduleDelayedTask(to_delay, chkForAuxSDPLine, this);
    }
}
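getAuxSDPLine() has to run a dummy playback and poll because, for H264 over RTP (RFC 6184), the SDP "a=fmtp" line carries the stream's SPS and PPS, which are only known once they have passed through the sink. To show what that line contains, here is a standalone sketch that builds it from an SPS and a PPS NAL unit (start codes removed). It is not live555 code: Base64 and BuildFmtpLine are hypothetical helpers, and the exact text live555 emits may differ in detail.

```cpp
#include <cstdio>
#include <string>
#include <vector>

// Standard base64 encoding (RFC 4648 alphabet, with '=' padding).
std::string Base64(const std::vector<unsigned char> &in)
{
    static const char *tbl =
        "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
    std::string out;
    size_t i = 0;
    for (; i + 2 < in.size(); i += 3) {
        unsigned v = (in[i] << 16) | (in[i + 1] << 8) | in[i + 2];
        out += tbl[(v >> 18) & 63];
        out += tbl[(v >> 12) & 63];
        out += tbl[(v >> 6) & 63];
        out += tbl[v & 63];
    }
    size_t rem = in.size() - i;
    if (rem == 1) {
        unsigned v = in[i] << 16;
        out += tbl[(v >> 18) & 63];
        out += tbl[(v >> 12) & 63];
        out += "==";
    } else if (rem == 2) {
        unsigned v = (in[i] << 16) | (in[i + 1] << 8);
        out += tbl[(v >> 18) & 63];
        out += tbl[(v >> 12) & 63];
        out += tbl[(v >> 6) & 63];
        out += '=';
    }
    return out;
}

// Hypothetical helper: build an RFC 6184 style fmtp line.
// profile-level-id is the three SPS bytes after the NAL header byte.
std::string BuildFmtpLine(int payloadType,
                          const std::vector<unsigned char> &sps,
                          const std::vector<unsigned char> &pps)
{
    if (sps.size() < 4 || pps.empty())
        return "";                     // SPS/PPS not known yet: nothing to put in the SDP
    char pli[7];
    std::snprintf(pli, sizeof pli, "%02X%02X%02X", sps[1], sps[2], sps[3]);
    return "a=fmtp:" + std::to_string(payloadType) +
           " packetization-mode=1;profile-level-id=" + pli +
           ";sprop-parameter-sets=" + Base64(sps) + "," + Base64(pps);
}
```

For example, with payload type 96 (a common dynamic payload type), SPS bytes 67 42 00 1E and PPS bytes 68 CE 3C 80, the result is `a=fmtp:96 packetization-mode=1;profile-level-id=42001E;sprop-parameter-sets=Z0IAHg==,aM48gA==`. An empty result corresponds to the case where auxSDPLine() is still NULL and chkForAuxSDPLine1() has to try again.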

WW_H264VideoSource.h

#ifndef _WW_H264VideoSource_H
#define _WW_H264VideoSource_H

#include "liveMedia.hh"
#include "BasicUsageEnvironment.hh"
#include "GroupsockHelper.hh"
#include "FramedSource.hh"

#define FRAME_PER_SEC 25

class WW_H264VideoSource : public FramedSource
{
public:
    WW_H264VideoSource(UsageEnvironment & env);
    ~WW_H264VideoSource(void);

public:
    virtual void doGetNextFrame();
    virtual unsigned int maxFrameSize() const;
    static void getNextFrame(void * ptr);
    void GetFrameData();

private:
    void *m_pToken;
    char *m_pFrameBuffer;
    int m_hFifo;
};

#endif

WW_H264VideoSource.cpp

#include "WW_H264VideoSource.h"
#include <stdio.h>
#ifdef WIN32
#include <windows.h>
#else
#include <sys/types.h>
#include <sys/stat.h>
#include <sys/time.h>
#include <string.h>
#include <fcntl.h>
#include <unistd.h>
#include <limits.h>
#endif

#define FIFO_NAME "/tmp/H264_fifo"
#define BUFFER_SIZE PIPE_BUF
#define REV_BUF_SIZE (1024*1024)

#ifdef WIN32
#define mSleep(ms) Sleep(ms)
#else
#define mSleep(ms) usleep((ms)*1000)
#endif

WW_H264VideoSource::WW_H264VideoSource(UsageEnvironment & env) :
    FramedSource(env),
    m_pToken(0),
    m_pFrameBuffer(0),
    m_hFifo(-1)
{
    // Blocks until the sender opens the FIFO for writing
    m_hFifo = open(FIFO_NAME, O_RDONLY);
    printf("[MEDIA SERVER] open fifo result = [%d]\n", m_hFifo);
    if (m_hFifo == -1)
    {
        return;
    }
    m_pFrameBuffer = new char[REV_BUF_SIZE];
    if (m_pFrameBuffer == NULL)
    {
        printf("[MEDIA SERVER] error malloc data buffer failed\n");
        return;
    }
    memset(m_pFrameBuffer, 0, REV_BUF_SIZE);
}

WW_H264VideoSource::~WW_H264VideoSource(void)
{
    if (m_hFifo >= 0) // 0 is a valid descriptor; -1 means "not opened"
    {
        ::close(m_hFifo);
    }
    envir().taskScheduler().unscheduleDelayedTask(m_pToken);
    if (m_pFrameBuffer)
    {
        delete[] m_pFrameBuffer;
        m_pFrameBuffer = NULL;
    }
    printf("[MEDIA SERVER] rtsp connection closed\n");
}

void WW_H264VideoSource::doGetNextFrame()
{
    // Compute the wait time from the fps: poll the FIFO twice per frame interval
    double delay = 1000.0 / (FRAME_PER_SEC * 2); // ms
    int to_delay = delay * 1000; // us
    m_pToken = envir().taskScheduler().scheduleDelayedTask(to_delay, getNextFrame, this);
}

unsigned int WW_H264VideoSource::maxFrameSize() const
{
    return 1024*200;
}

void WW_H264VideoSource::getNextFrame(void * ptr)
{
    ((WW_H264VideoSource *)ptr)->GetFrameData();
}

void WW_H264VideoSource::GetFrameData()
{
    gettimeofday(&fPresentationTime, 0);
    fFrameSize = 0;
    int len = 0;
    unsigned char buffer[BUFFER_SIZE] = {0};
    // Drain what is currently in the FIFO, without overflowing m_pFrameBuffer
    while (fFrameSize + BUFFER_SIZE <= REV_BUF_SIZE &&
           (len = read(m_hFifo, buffer, BUFFER_SIZE)) > 0)
    {
        memcpy(m_pFrameBuffer + fFrameSize, buffer, len);
        fFrameSize += len;
    }
    //printf("[MEDIA SERVER] GetFrameData len = [%d],fMaxSize = [%d]\n",fFrameSize,fMaxSize);
    // Truncate to what the downstream buffer can hold BEFORE copying into fTo
    if (fFrameSize > fMaxSize)
    {
        fNumTruncatedBytes = fFrameSize - fMaxSize;
        fFrameSize = fMaxSize;
    }
    else
    {
        fNumTruncatedBytes = 0;
    }
    // fill frame data
    memcpy(fTo, m_pFrameBuffer, fFrameSize);
    afterGetting(this);
}
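WW_H264VideoSource polls the FIFO on a timer and may hand at most fMaxSize bytes downstream. The two calculations involved can be checked in isolation. This sketch uses hypothetical helper names (PollIntervalUs, CopyTruncated) that mirror the arithmetic in doGetNextFrame(), chkForAuxSDPLine1() and GetFrameData(); it does not depend on live555.

```cpp
#include <cstring>

// Microseconds between polls when polling `pollsPerFrame` times per frame at `fps`.
// Same arithmetic as the article: delay_ms = 1000.0 / (fps * n); delay_us = delay_ms * 1000.
int PollIntervalUs(int fps, int pollsPerFrame)
{
    double delayMs = 1000.0 / (fps * pollsPerFrame);
    return static_cast<int>(delayMs * 1000);
}

// Copy at most `maxSize` of `srcLen` bytes into `dst` and report how many bytes
// were dropped, the way a FramedSource fills fFrameSize and fNumTruncatedBytes.
unsigned CopyTruncated(const char *src, unsigned srcLen, char *dst, unsigned maxSize,
                       unsigned &numTruncated)
{
    unsigned frameSize = srcLen;
    numTruncated = 0;
    if (frameSize > maxSize) {
        numTruncated = frameSize - maxSize;
        frameSize = maxSize;
    }
    std::memcpy(dst, src, frameSize);   // never writes past dst[maxSize - 1]
    return frameSize;
}
```

With FRAME_PER_SEC = 25, the source polls every 20000 us (two polls per frame) and the SDP check runs every 40000 us (one per frame). Truncating before the copy is what keeps fTo from being overrun when more data than fMaxSize has accumulated in the FIFO.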

Modify live555MediaServer.cpp as follows:

/**********
This library is free software; you can redistribute it and/or modify it under
the terms of the GNU Lesser General Public License as published by the
Free Software Foundation; either version 2.1 of the License, or (at your
option) any later version. (See <http://www.gnu.org/copyleft/lesser.html>.)

This library is distributed in the hope that it will be useful, but WITHOUT
ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS
FOR A PARTICULAR PURPOSE. See the GNU Lesser General Public License for
more details.

You should have received a copy of the GNU Lesser General Public License
along with this library; if not, write to the Free Software Foundation, Inc.,
51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA
**********/

// Copyright (c) 1996-2013, Live Networks, Inc. All rights reserved
// LIVE555 Media Server
// main program

#include <BasicUsageEnvironment.hh>
#include "DynamicRTSPServer.hh"
#include "version.hh"
#include "WW_H264VideoSource.h"
#include "WW_H264VideoServerMediaSubsession.h"

int main(int argc, char** argv) {
  // Begin by setting up our usage environment:
  TaskScheduler* scheduler = BasicTaskScheduler::createNew();
  UsageEnvironment* env = BasicUsageEnvironment::createNew(*scheduler);

  UserAuthenticationDatabase* authDB = NULL;
#ifdef ACCESS_CONTROL
  // To implement client access control to the RTSP server, do the following:
  authDB = new UserAuthenticationDatabase;
  authDB->addUserRecord("username1", "password1"); // replace these with real strings
  // Repeat the above with each <username>, <password> that you wish to allow
  // access to the server.
#endif

  // Create the RTSP server (554 is the standard RTSP port; binding to it
  // usually requires root privileges):
  RTSPServer* rtspServer = RTSPServer::createNew(*env, 554, authDB);
  if (rtspServer == NULL) {
    *env << "Failed to create RTSP server: " << env->getResultMsg() << "\n";
    exit(1);
  }

  // Add live stream. videoSource is unused: the subsession creates its own
  // WW_H264VideoSource in createNewStreamSource().
  WW_H264VideoSource * videoSource = 0;
  ServerMediaSession * sms = ServerMediaSession::createNew(*env, "live", 0, "ww live test");
  sms->addSubsession(WW_H264VideoServerMediaSubsession::createNew(*env, videoSource));
  rtspServer->addServerMediaSession(sms);

  char * url = rtspServer->rtspURL(sms);
  *env << "using url \"" << url << "\"\n";
  delete[] url;

  // Run loop (does not return)
  env->taskScheduler().doEventLoop();

  rtspServer->removeServerMediaSession(sms);
  Medium::close(rtspServer);
  env->reclaim();
  delete scheduler;
  return 1;
}

RTSPStream: the class that sends the H264 video stream

/********************************************************************
filename: RTSPStream.h
created: 2013-08-01
author: firehood
purpose: Live H264 streaming over RTSP via live555
*********************************************************************/
#pragma once
#include <stdio.h>
#ifdef WIN32
#include <windows.h>
#else
#include <pthread.h>
#include <unistd.h>   // usleep() used by mSleep()
#endif

#ifdef WIN32
typedef HANDLE ThreadHandle;
#define mSleep(ms) Sleep(ms)
#else
typedef unsigned int SOCKET;
typedef pthread_t ThreadHandle;
#define mSleep(ms) usleep((ms)*1000)
#endif

#define FILEBUFSIZE (1024 * 1024)

class CRTSPStream
{
public:
    CRTSPStream(void);
    ~CRTSPStream(void);

public:
    // Initialize
    bool Init();
    // Uninitialize
    void Uninit();
    // Send an H264 file
    bool SendH264File(const char *pFileName);
    // Send H264 data
    int SendH264Data(const unsigned char *data, unsigned int size);
};

/********************************************************************
filename: RTSPStream.cpp
created: 2013-08-01
author: firehood
purpose: Live H264 streaming over RTSP via live555
*********************************************************************/
#include "RTSPStream.h"
#ifdef WIN32
#else
#include <sys/types.h>
#include <sys/stat.h>
#include <string.h>
#include <fcntl.h>
#include <unistd.h>
#include <limits.h>
#include <errno.h>
#endif

#define FIFO_NAME "/tmp/H264_fifo"
#define BUFFERSIZE PIPE_BUF

CRTSPStream::CRTSPStream(void)
{
}

CRTSPStream::~CRTSPStream(void)
{
}

bool CRTSPStream::Init()
{
    // Create the named pipe shared with the media server if it does not exist yet
    if (access(FIFO_NAME, F_OK) == -1)
    {
        int res = mkfifo(FIFO_NAME, 0777);
        if (res != 0)
        {
            printf("[RTSPStream] Create fifo failed.\n");
            return false;
        }
    }
    return true;
}

void CRTSPStream::Uninit()
{
}

bool CRTSPStream::SendH264File(const char *pFileName)
{
    if (pFileName == NULL)
    {
        return false;
    }
    FILE *fp = fopen(pFileName, "rb");
    if (!fp)
    {
        printf("[RTSPStream] error:open file %s failed!\n", pFileName);
        return false;
    }
    fseek(fp, 0, SEEK_SET);

    unsigned char *buffer = new unsigned char[FILEBUFSIZE];
    int pos = 0; // bytes left over from the previous, partially sent chunk
    while (1)
    {
        int readlen = (int)fread(buffer + pos, sizeof(unsigned char), FILEBUFSIZE - pos, fp);
        if (readlen <= 0 && pos == 0)
        {
            break; // end of file and nothing left to send
        }
        readlen += pos;
        int writelen = SendH264Data(buffer, readlen);
        if (writelen <= 0)
        {
            break;
        }
        // Move the unsent tail to the front; the regions can overlap, so use memmove
        memmove(buffer, buffer + writelen, readlen - writelen);
        pos = readlen - writelen;
        mSleep(25);
    }
    fclose(fp);
    delete[] buffer;
    return true;
}

// Send H264 data; returns the number of bytes written to the FIFO
int CRTSPStream::SendH264Data(const unsigned char *data, unsigned int size)
{
    if (data == NULL)
    {
        return 0;
    }
    // open pipe with non_block mode (fails if no reader has the FIFO open)
    int pipe_fd = open(FIFO_NAME, O_WRONLY | O_NONBLOCK);
    //printf("[RTSPStream] open fifo result = [%d]\n",pipe_fd);
    if (pipe_fd == -1)
    {
        return 0;
    }
    unsigned int send_size = 0;
    unsigned int remain_size = size;
    int resend_count = 0; // per call, so retries do not accumulate across calls
    while (send_size < size)
    {
        unsigned int data_len = (remain_size < BUFFERSIZE) ? remain_size : BUFFERSIZE;
        int len = write(pipe_fd, data + send_size, data_len);
        if (len == -1)
        {
            if (errno == EAGAIN && ++resend_count <= 3)
            {
                printf("[RTSPStream] write fifo error,resend..\n");
                continue;
            }
            printf("[RTSPStream] write fifo error,errorcode[%d],send_size[%u]\n", errno, send_size);
            break;
        }
        else
        {
            send_size += len;
            remain_size -= len;
        }
    }
    close(pipe_fd);
    //printf("[RTSPStream] SendH264Data datalen[%d], sendsize = [%d]\n",size,send_size);
    return (int)send_size; // SendH264File() relies on this to resend the unsent tail
}
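SendH264Data() opens the FIFO with O_WRONLY | O_NONBLOCK. Under POSIX such an open fails when no process has the FIFO open for reading (errno is ENXIO), and a non-blocking write to a full pipe fails with EAGAIN instead of blocking. The standalone sketch below (POSIX only; the function names and paths are hypothetical) reproduces both cases, which is why the sender gets nothing through until the media server has opened the FIFO and why it retries on EAGAIN.

```cpp
#include <cerrno>
#include <fcntl.h>
#include <sys/stat.h>
#include <unistd.h>
#include <vector>

// Hypothetical demo: errno from a non-blocking write-open when no reader exists.
int ErrnoOpeningWriterWithoutReader(const char *path)
{
    unlink(path);
    if (mkfifo(path, 0666) != 0)
        return -1;
    int fd = open(path, O_WRONLY | O_NONBLOCK);
    int err = (fd == -1) ? errno : 0;
    if (fd != -1)
        close(fd);
    unlink(path);
    return err;                                    // expected: ENXIO
}

// Hypothetical demo: write into a FIFO whose reader never reads until write()
// fails; returns that errno and reports how many bytes were accepted first.
int ErrnoWhenPipeFull(const char *path, long &accepted)
{
    accepted = 0;
    unlink(path);
    if (mkfifo(path, 0666) != 0)
        return -1;
    int rd = open(path, O_RDONLY | O_NONBLOCK);    // reader exists but never drains
    int wr = open(path, O_WRONLY | O_NONBLOCK);
    int err = -1;
    if (rd != -1 && wr != -1) {
        std::vector<char> chunk(4096, 'x');
        for (;;) {
            ssize_t n = write(wr, chunk.data(), chunk.size());
            if (n == -1) { err = errno; break; }
            accepted += n;
        }
    }
    if (wr != -1) close(wr);
    if (rd != -1) close(rd);
    unlink(path);
    return err;                                    // expected: EAGAIN (pipe buffer full)
}
```

On Linux the pipe buffer is typically 64 KB, so the second demo usually accepts 65536 bytes before EAGAIN. Because the three EAGAIN retries in SendH264Data() follow each other immediately, a short sleep before retrying would give the reader a better chance to drain the pipe.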

Test program

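#include <stdio.h>
#include "RTSPStream.h"

int main(int argc, char* argv[])
{
    // Raw H264 (Annex B) file to send; can be overridden on the command line
    const char *fileName = (argc > 1) ? argv[1] : "E:\\测试视频\\test.264";

    CRTSPStream rtspSender;
    bool bRet = rtspSender.Init();
    if (!bRet)
    {
        return 1;
    }
    rtspSender.SendH264File(fileName);
    printf("Press Enter to exit...\n");
    getchar(); // portable replacement for system("pause")
    return 0;
}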
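The test program pushes the .264 file through the FIFO in arbitrary chunks and relies on H264VideoStreamFramer on the server side to find the NAL unit boundaries. If the capture side should instead hand over whole NAL units, the Annex B byte stream can be split at its start codes (00 00 01 or 00 00 00 01). A minimal sketch, assuming the whole stream is in one buffer; SplitAnnexB and NalUnit are hypothetical names, not part of the article or of live555.

```cpp
#include <cstddef>
#include <vector>

struct NalUnit {
    size_t offset;  // index of the first byte after the start code (the NAL header)
    size_t size;    // length of the NAL unit, start code excluded
};

// Split an H.264 Annex B byte stream at its start codes (00 00 01 / 00 00 00 01).
std::vector<NalUnit> SplitAnnexB(const unsigned char *data, size_t len)
{
    std::vector<NalUnit> nals;
    size_t start = 0;
    bool inNal = false;

    // Record [start, end) as a NAL unit, dropping zero bytes that precede the
    // next start code (e.g. the extra 00 of a 4-byte start code).
    auto emit = [&](size_t end) {
        while (end > start && data[end - 1] == 0)
            --end;
        if (end > start)
            nals.push_back({start, end - start});
    };

    size_t i = 0;
    while (i + 3 <= len) {
        if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
            if (inNal)
                emit(i);
            start = i + 3;
            inNal = true;
            i += 3;
        } else {
            ++i;
        }
    }
    if (inNal)
        emit(len);
    return nals;
}
```

The NAL unit type is the low 5 bits of the first byte of each unit (7 = SPS, 8 = PPS, 5 = IDR slice). Each unit could then be passed to SendH264Data() with a start code in front, so the framer on the server side still sees a valid byte stream.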