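Contents

- Scraping QQ Music with Scrapy: songs, lyrics, comments, and download links

- Crawl analysis

- 1. Analyzing the singer information on the page

- 2. Writing the code

- Defining the scraped fields in items.py

- Configuration in settings.py

- Writing music.py under spiders

- 3. Analyzing the song list

- Continuing in music.py

- 4. Analyzing the lyrics request

- Writing the lyrics-scraping code

- Cleaning the lyrics

- 5. Analyzing the comments

- 6. The song download URL

- 7. Saving the data to MongoDB

- 7. Random User-Agent

- 8. Enabling the middleware in settings.py

- 9. Running the program

- Summary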

Scraping QQ Music with Scrapy: songs, lyrics, comments, and download links

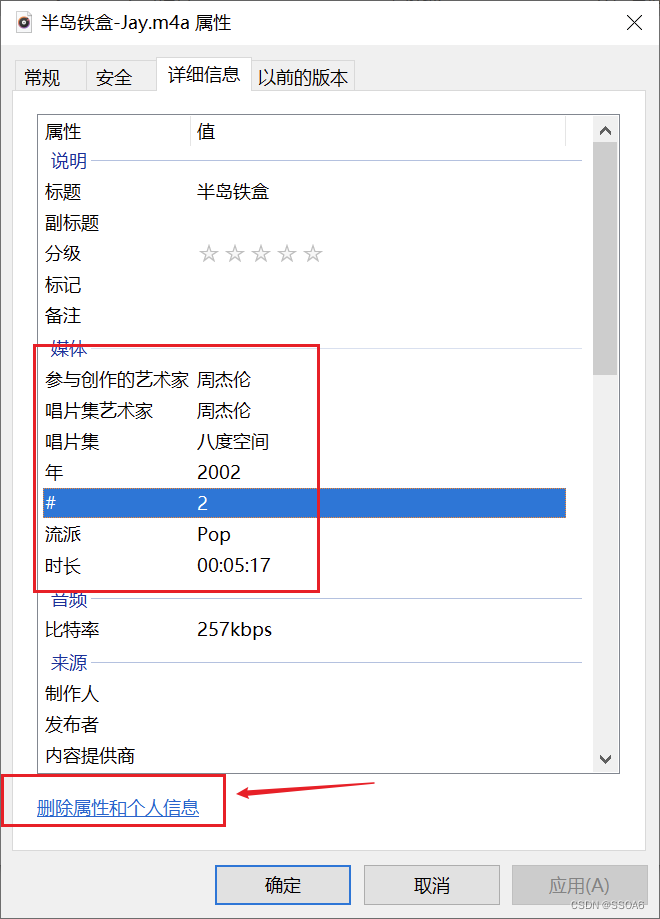

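I previously wrote a detailed tutorial on scraping Douban movies with Scrapy, so this post goes into less detail. For decrypting the QQ Music download links I reused the approach from my earlier articles:

Scraping Douban movies with Scrapy

Scraping paid QQ Music tracks

Lossless QQ Music downloads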

At the time of writing, Python 3.7 conflicts with Scrapy, so Python 3.6 is recommended. The versions used here are:

- Python 3.6.5

- Scrapy 1.5.1

- pymongo 3.7.1

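Crawl analysis

Approach: start from the singer category listing, crawl the songs under each singer, and then fetch the lyrics, the comments, and the download link for each song in turn.

1. Analyzing the singer information on the page

Once we have found the URL, we look at the parameters it carries.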

https://u.y.qq.com/cgi-bin/musicu.fcg?callback=getUCGI43917153213009863&g_tk=5381&jsonpCallback=getUCGI43917153213009863&loginUin=0&hostUin=0&format=jsonp&inCharset=utf8&outCharset=utf-8&notice=0&platform=yqq&needNewCode=0&data=%7B%22comm%22%3A%7B%22ct%22%3A24%2C%22cv%22%3A10000%7D%2C%22singerList%22%3A%7B%22module%22%3A%22Music.SingerListServer%22%2C%22method%22%3A%22get_singer_list%22%2C%22param%22%3A%7B%22area%22%3A-100%2C%22sex%22%3A-100%2C%22genre%22%3A-100%2C%22index%22%3A-100%2C%22sin%22%3A0%2C%22cur_page%22%3A1%7D%7D%7D

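Analysis shows that only the parameters inside data are needed; the rest can be dropped.

sin: defaults to 0 and becomes 80 on the second page

cur_page: the current page number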
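As a minimal sketch of how these two parameters fit together, the snippet below rebuilds the trimmed request URL from a plain dict using only the standard library. The names `singer_list_url` and `PAGE_SIZE` are illustrative and not part of the project; the step of 80 per page is taken from the observation above.

```python
import json
from urllib.parse import quote

BASE = "https://u.y.qq.com/cgi-bin/musicu.fcg?data="
PAGE_SIZE = 80  # sin grows by 80 per page, as observed above


def singer_list_url(page):
    """Build the singer-list URL for a 1-based page number."""
    payload = {
        "singerList": {
            "module": "Music.SingerListServer",
            "method": "get_singer_list",
            "param": {
                "area": -100, "sex": -100, "genre": -100, "index": -100,
                "sin": PAGE_SIZE * (page - 1),
                "cur_page": page,
            },
        }
    }
    # Compact separators keep spaces out of the JSON before it is percent-encoded.
    compact = json.dumps(payload, separators=(",", ":"))
    return BASE + quote(compact, safe="")


print(singer_list_url(2))
```

The printed URL should end in `%22sin%22%3A80%2C%22cur_page%22%3A2%7D%7D%7D`, matching the shape of the URL captured from the browser.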

2. Writing the code

First create the Scrapy project with scrapy startproject qq_music, then generate the spider file with scrapy genspider music y.qq.com.

Defining the scraped fields in items.py

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy
    from scrapy import Field


    class QqMusicItem(scrapy.Item):
        # MongoDB collection name
        collection = table = 'singer'

        id = Field()           # song id
        singer_name = Field()  # singer name
        song_name = Field()    # song title
        song_url = Field()     # song download URL
        lrc = Field()          # lyrics
        comment = Field()      # comments

Configuration in settings.py

    MAX_PAGE = 3    # number of singer-list pages to crawl
    SONGER_NUM = 1  # songs to fetch per singer, ordered by popularity
    MONGO_URL = '127.0.0.1'
    MONGO_DB = 'music'  # MongoDB database name

Writing music.py under spiders

    # -*- coding: utf-8 -*-
    import json

    import scrapy
    from scrapy import Request

    from qq_music.items import QqMusicItem


    class MusicSpider(scrapy.Spider):
        name = 'music'
        allowed_domains = ['y.qq.com']
        # Start URL (singer list); {num} fills sin and {id} fills cur_page
        start_urls = ['https://u.y.qq.com/cgi-bin/musicu.fcg?data=%7B%22singerList%22%3A%7B%22module%22%3A%22Music.SingerListServer' \
                      '%22%2C%22method%22%3A%22get_singer_list%22%2C%22param%22%3A%7B%22area%22%3A-100%2C%22sex%22%3A-100%2C%22genr' \
                      'e%22%3A-100%2C%22index%22%3A-100%2C%22sin%22%3A{num}%2C%22cur_page%22%3A{id}%7D%7D%7D']
        # Song download address (returns the vkey)
        song_down = 'https://c.y.qq.com/base/fcgi-bin/fcg_music_express_mobile3.fcg?&jsonpCallback=MusicJsonCallback&ci' \
                    'd=205361747&songmid={songmid}&filename=C400{songmid}.m4a&guid=9082027038'
        # Song list of a singer
        song_url = 'https://c.y.qq.com/v8/fcg-bin/fcg_v8_singer_track_cp.fcg?singermid={singer_mid}&order=listen&num={sum}'
        # Lyrics
        lrc_url = 'https://c.y.qq.com/lyric/fcgi-bin/fcg_query_lyric.fcg?nobase64=1&musicid={musicid}'
        # Song comments
        discuss_url = 'https://c.y.qq.com/base/fcgi-bin/fcg_global_comment_h5.fcg?cid=205360772&reqtype=2&biztype=1&topid=' \
                      '{song_id}&cmd=8&pagenum=0&pagesize=25'

        def start_requests(self):
            """Build one request per singer-list page."""
            # MAX_PAGE is read from settings.py
            for i in range(1, self.settings.get('MAX_PAGE') + 1):
                yield Request(self.start_urls[0].format(num=80 * (i - 1), id=i), callback=self.parse_user)

        def parse_user(self, response):
            """
            Walk the singer list and request each singer's hot songs.
            singer_mid: the singer's mid
            singer_name: the singer's name
            :param response:
            :return:
            """
            singer_list = json.loads(response.text).get('singerList').get('data').get('singerlist')  # singer list
            for singer in singer_list:
                singer_mid = singer.get('singer_mid')    # singer mid
                singer_name = singer.get('singer_name')  # singer name
                # Request the singer's hot songs
                yield Request(self.song_url.format(singer_mid=singer_mid, sum=self.settings.get('SONGER_NUM')),
                              callback=self.parse_song, meta={'singer_name': singer_name})
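The chained `.get()` calls in `parse_user` raise an AttributeError if any level of the response is missing. The sketch below shows the same extraction outside Scrapy with a fallback at each level. The sample payload and the helper name `extract_singers` are hypothetical; only the key names come from the spider above.

```python
import json

# Hypothetical response body; only the key names are taken from the spider.
SAMPLE = json.dumps({
    "singerList": {"data": {"singerlist": [
        {"singer_mid": "mid_a", "singer_name": "Singer A"},
        {"singer_mid": "mid_b", "singer_name": "Singer B"},
    ]}}
})


def extract_singers(text):
    """Return (singer_mid, singer_name) pairs, or [] if the shape is unexpected."""
    body = json.loads(text)
    data = (body.get("singerList") or {}).get("data") or {}
    singers = data.get("singerlist") or []
    return [(s.get("singer_mid"), s.get("singer_name")) for s in singers]


print(extract_singers(SAMPLE))
print(extract_singers("{}"))  # an empty body yields an empty list instead of an exception
```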

3. Analyzing the song list

The singermid and order parameters are required, and num is the number of songs to fetch.

Next we pick out the fields we need from the response.

Continuing in music.py

        def parse_song(self, response):
            """
            Crawl the hot songs of a singer.
            The song id is used to fetch comments (and lyrics);
            the song mid is used to fetch the download address.
            :param response:
            :return:
            """
            songer_list = json.loads(response.text).get('data').get('list')
            for songer_info in songer_list:
                music = QqMusicItem()
                singer_name = response.meta.get('singer_name')            # singer name
                song_name = songer_info.get('musicData').get('songname')  # song title
                music['singer_name'] = singer_name
                music['song_name'] = song_name
                song_id = songer_info.get('musicData').get('songid')      # song id
                music['id'] = song_id
                song_mid = songer_info.get('musicData').get('songmid')    # song mid
                musicid = songer_info.get('musicData').get('songid')      # same songid, used by the lyrics request
                yield Request(url=self.discuss_url.format(song_id=song_id), callback=self.parse_comment,
                              meta={'music': music, 'musicid': musicid, 'song_mid': song_mid})

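4. Analyzing the lyrics request

musicid is the songid obtained from the song list above. We continue with the code in music.py.

Writing the lyrics-scraping code

        def parse_lrc(self, response):
            """
            Fetch the lyrics of a song.
            :param response:
            :return:
            """
            music = response.meta.get('music')
            music['lrc'] = response.text
            song_mid = response.meta.get('song_mid')
            yield Request(url=self.song_down.format(songmid=song_mid), callback=self.parse_url,
                          meta={'music': music, 'songmid': song_mid})

Opening the lyrics URL directly in a browser fails because the request carries no Referer header, so we build the request in Postman instead. The response shows that the data needs cleaning.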
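The same check can be scripted instead of done in Postman. The sketch below only constructs the request with the standard library and does not send it. The Referer value `https://y.qq.com/` is an assumption (the text only says a Referer is required), and `lyric_request` and the id `12345` are placeholders for illustration.

```python
from urllib.request import Request

LRC_URL = "https://c.y.qq.com/lyric/fcgi-bin/fcg_query_lyric.fcg?nobase64=1&musicid={musicid}"


def lyric_request(musicid, referer="https://y.qq.com/"):
    """Build (but do not send) a lyrics request that carries a Referer header."""
    # Assumption: any page on y.qq.com is accepted as the Referer.
    return Request(LRC_URL.format(musicid=musicid), headers={"Referer": referer})


req = lyric_request(12345)  # 12345 is a placeholder song id
print(req.full_url)
print(req.get_header("Referer"))
```

Passing `req` to `urllib.request.urlopen` would actually send it; inside the spider this is unnecessary, because the Referer is filled in automatically when one callback issues the next request.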

Cleaning the lyrics

We clean the data in pipelines.py.

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import json
    import re

    import pymongo
    from scrapy.exceptions import DropItem

    from qq_music.items import QqMusicItem


    class QqMusicPipeline(object):
        def process_item(self, item, spider):
            return item


    class lrcText(object):
        """The fetched lyrics need cleaning."""

        def __init__(self):
            pass

        def process_item(self, item, spider):
            """
            Keep only the Chinese text matched by the regular expression.
            :param item:
            :param spider:
            :return:
            """
            if isinstance(item, QqMusicItem):
                if item.get('lrc'):
                    result = re.findall(r'[\u4e00-\u9fa5]+', item['lrc'])
                    item['lrc'] = ' '.join(result)
                    return item
                else:
                    # DropItem must be raised, not returned, for Scrapy to discard the item
                    raise DropItem('Missing Text')
            return item
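To see what the regular expression in `lrcText` keeps, here is a small standalone sketch with a made-up LRC-style string. `clean_lyrics` mirrors the pipeline logic. `strip_lrc_tags` is an alternative that is not in the original project: it removes only the bracketed tags, which may be preferable for songs with non-Chinese lyrics.

```python
import re

CJK = re.compile(r"[\u4e00-\u9fa5]+")
TAG = re.compile(r"\[[^\]]*\]")


def clean_lyrics(raw):
    """Same idea as the pipeline: keep runs of Chinese characters only."""
    return " ".join(CJK.findall(raw))


def strip_lrc_tags(raw):
    """Alternative (not from the original post): drop [..] tags, keep all text."""
    lines = (TAG.sub("", line).strip() for line in raw.splitlines())
    return "\n".join(line for line in lines if line)


sample = "[ti:demo]\n[00:01.00]你好 world\n[00:02.50]再见"  # made-up sample
print(clean_lyrics(sample))    # 你好 再见
print(strip_lrc_tags(sample))
```

The Chinese-only pattern discards Latin text, digits, and punctuation, so a song sung entirely in English ends up with an empty string.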

5. Analyzing the comments

The same routine applies: analyze the parameters in the URL. The key one is again the songid obtained earlier.

        def parse_comment(self, response):
            """
            Fetch the comments of a song.
            :param response:
            :return:
            """
            comments = json.loads(response.text).get('hot_comment').get('commentlist')  # one page of hot comments
            if comments:
                comments = [{'comment_name': comment.get('nick'), 'comment_text': comment.get('rootcommentcontent')}
                            for comment in comments]
            else:
                comments = 'null'
            music = response.meta.get('music')
            music['comment'] = comments
            musicid = response.meta.get('musicid')  # pass along the parameters needed later
            song_mid = response.meta.get('song_mid')
            yield Request(url=self.lrc_url.format(musicid=musicid), callback=self.parse_lrc,
                          meta={'music': music, 'song_mid': song_mid})
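If a song has no `hot_comment` object at all, the chained `.get()` in `parse_comment` fails before the `if comments:` check is reached. The following sketch handles that case outside Scrapy. The sample payload and the name `extract_hot_comments` are hypothetical; the key names (`hot_comment`, `commentlist`, `nick`, `rootcommentcontent`) are the ones used in the spider. Unlike the spider, it returns an empty list rather than the string 'null'.

```python
import json

# Hypothetical response body for illustration only.
SAMPLE = json.dumps({
    "hot_comment": {"commentlist": [
        {"nick": "user_a", "rootcommentcontent": "great song"},
        {"nick": "user_b", "rootcommentcontent": "on repeat"},
    ]}
})


def extract_hot_comments(text):
    """Return a list of {'comment_name', 'comment_text'} dicts; [] when there are none."""
    body = json.loads(text)
    comments = (body.get("hot_comment") or {}).get("commentlist") or []
    return [
        {"comment_name": c.get("nick"), "comment_text": c.get("rootcommentcontent")}
        for c in comments
    ]


print(extract_hot_comments(SAMPLE))
print(extract_hot_comments('{"hot_comment": null}'))
```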

6. The song download URL

I don't download the songs themselves; I only collect the download URL. The analysis is covered in my other post on downloading QQ Music songs, so here is just the code.

        def parse_url(self, response):
            """
            Parse the response and build the song download URL.
            :param response:
            :return:
            """
            song_text = json.loads(response.text)
            song_mid = response.meta.get('songmid')
            vkey = song_text['data']['items'][0]['vkey']  # the encrypted parameter
            music = response.meta.get('music')
            if vkey:
                music['song_url'] = 'http://dl.stream.qqmusic.qq.com/C400' + song_mid + '.m4a?vkey=' + \
                                    vkey + '&guid=9082027038&uin=0&fromtag=66'
            else:
                music['song_url'] = 'null'
            yield music
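The URL assembly in `parse_url` can be isolated into a small pure function, which makes it easy to check without hitting the network. This is a sketch with an illustrative name (`build_song_url`) and placeholder arguments; the URL layout and the guid are copied from the spider, where the same guid also appears in the vkey request.

```python
GUID = "9082027038"  # same guid as in the vkey request URL of the spider


def build_song_url(song_mid, vkey, guid=GUID):
    """Return the download URL, or None when no vkey was issued."""
    if not vkey:
        return None
    return (
        "http://dl.stream.qqmusic.qq.com/C400{mid}.m4a"
        "?vkey={vkey}&guid={guid}&uin=0&fromtag=66"
    ).format(mid=song_mid, vkey=vkey, guid=guid)


print(build_song_url("SONGMID", "VKEY"))  # placeholder values
print(build_song_url("SONGMID", ""))      # None
```

Returning None instead of the string 'null' is a design choice of this sketch; it is stored in MongoDB as a real null value.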

7. Saving the data to MongoDB

The connection settings live in settings.py, and crawler.settings.get reads them directly.

    class MongoPipline(object):
        """Save items to MongoDB."""

        def __init__(self, mongo_url, mongo_db):
            self.mongo_url = mongo_url
            self.mongo_db = mongo_db
            self.client = pymongo.MongoClient(self.mongo_url)
            self.db = self.client[self.mongo_db]

        @classmethod
        def from_crawler(cls, crawler):
            return cls(
                mongo_url=crawler.settings.get('MONGO_URL'),
                mongo_db=crawler.settings.get('MONGO_DB')
            )

        def open_spider(self, spider):
            pass

        def process_item(self, item, spider):
            if isinstance(item, QqMusicItem):
                data = dict(item)
                # insert_one replaces the deprecated Collection.insert
                self.db[item.collection].insert_one(data)
            return item

        def close_spider(self, spider):
            self.client.close()

7. Random User-Agent

I use a proxy pool that I maintain myself, so random proxies are not covered here; only a random User-Agent.

Write the random header in middlewares.py.

    import random


    class my_useragent(object):
        def process_request(self, request, spider):
            user_agent_list = [
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
                "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
                "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
                "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
                "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
                "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
                "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
                "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
                "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
            ]
            user_agent = random.choice(user_agent_list)
            # The header name is User-Agent (with a hyphen)
            request.headers['User-Agent'] = user_agent
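The behaviour of the middleware can be checked without starting a crawl. In the sketch below, `FakeRequest` is a hypothetical stand-in for a Scrapy request (just an object with a `headers` dict), and `RandomUserAgent` is an illustrative class that accepts its own random generator so the choice can be made reproducible; neither name comes from the project.

```python
import random

USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
]


class FakeRequest:
    """Hypothetical stand-in for a Scrapy request: only a headers dict."""

    def __init__(self):
        self.headers = {}


class RandomUserAgent:
    def __init__(self, agents, rng=None):
        self.agents = list(agents)
        self.rng = rng or random.Random()

    def process_request(self, request, spider=None):
        # The header name uses a hyphen; an underscore would set a different header.
        request.headers["User-Agent"] = self.rng.choice(self.agents)
        return None  # returning None lets Scrapy continue processing the request


req = FakeRequest()
RandomUserAgent(USER_AGENTS, random.Random(0)).process_request(req)
print(req.headers["User-Agent"])
```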

8. Enabling the middleware in settings.py

    ROBOTSTXT_OBEY = False

    DOWNLOADER_MIDDLEWARES = {
        # 'qq_music.middlewares.QqMusicDownloaderMiddleware': 543,
        'qq_music.middlewares.my_useragent': 544,
    }

    ITEM_PIPELINES = {
        # 'qq_music.pipelines.QqMusicPipeline': 300,
        'qq_music.pipelines.lrcText': 300,
        'qq_music.pipelines.MongoPipline': 302,
    }

9. Running the program

Run the spider from the qq_music project directory:

    scrapy crawl music

Summary

1. When I tested the singer-list URL with requests, I built the nested data parameter as a dict, converted it to JSON with json.dumps, and then submitted the request. The request failed because a + appeared in front of the positive numbers in the encoded URL. Taking the failing URL and removing the + with replace gave the final working URL:

https://u.y.qq.com/cgi-bin/musicu.fcg?callback=getUCGI5078555865872545&g_tk=5381&jsonpCallback=getUCGI5078555865872545&loginUin=0&hostUin=0&format=jsonp&inCharset=utf8&outCharset=utf-8&notice=0&platform=yqq&needNewCode=0&data=%7B%22comm%22%3A%7B%22ct%22%3A24%2C%22cv%22%3A10000%7D%2C%22singerList%22%3A%7B%22module%22%3A%22Music.SingerListServer%22%2C%22method%22%3A%22get_singer_list%22%2C%22param%22%3A%7B%22area%22%3A-100%2C%22sex%22%3A-100%2C%22genre%22%3A-100%2C%22index%22%3A-100%2C%22sin%22%3A0%2C%22cur_page%22%3A1%7D%7D%7D

2. When chaining to the next request with yield Request(self.start_urls, callback=self.parse_user, meta={'demo': demo}), pass the partially filled data along in meta. Otherwise every item you yield goes straight to the pipelines before it is complete.

3. Requests are handled in sequence, and when one callback issues the next request, the Referer header is generated automatically.
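One plausible explanation for the stray + in point 1, offered as an assumption since the failing URL itself is not shown: by default json.dumps puts a space after every ':' and ',', and form-style URL encoding such as urllib.parse.urlencode turns each space into +. The sketch below reproduces that effect with a reduced parameter dict and shows that compact separators avoid it, so no replace is needed afterwards.

```python
import json
from urllib.parse import urlencode

param = {"sin": 0, "cur_page": 1}  # reduced example, not the full data payload

default_json = json.dumps(param)                         # contains ": " and ", "
compact_json = json.dumps(param, separators=(",", ":"))  # no spaces at all

with_plus = urlencode({"data": default_json})
without_plus = urlencode({"data": compact_json})

print(with_plus)     # data=%7B%22sin%22%3A+0%2C+%22cur_page%22%3A+1%7D
print(without_plus)  # data=%7B%22sin%22%3A0%2C%22cur_page%22%3A1%7D
```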